A Linguist of Algorithms

Celebrated by ACL with a Lifetime Achievement Award, Kathleen McKeown continues to drive bold, cross-disciplinary research that redefines the field of natural language processing.

CS researchers were among the experts who considered AI from every angle at the kick-off session of the Columbia AI Summit.

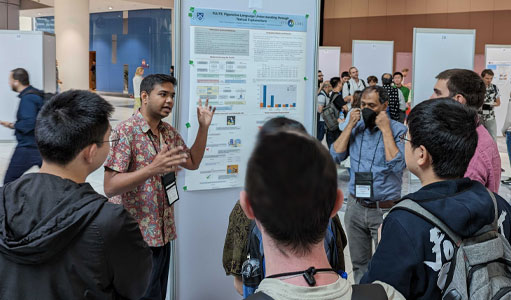

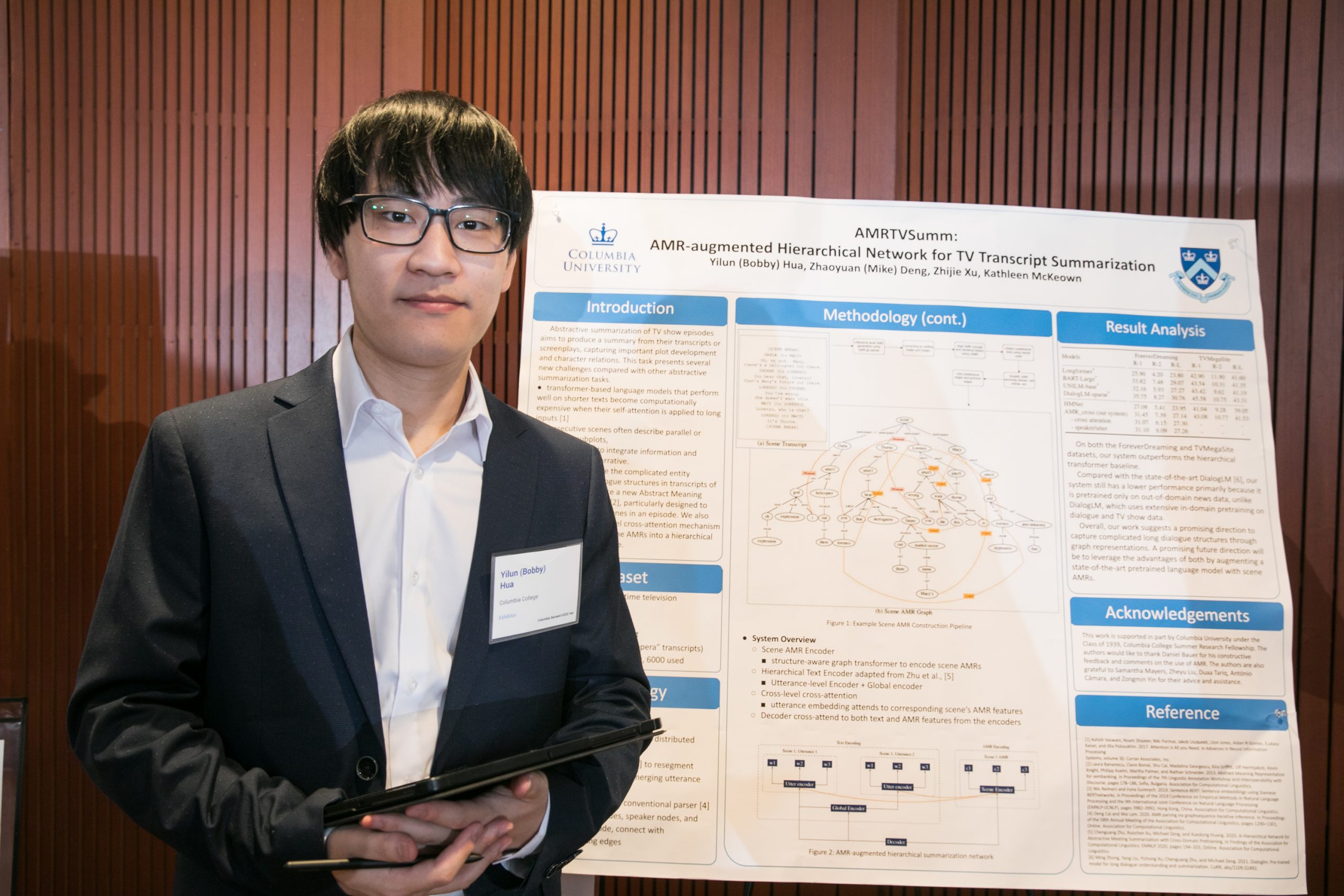

CS researchers presented their work at the 62nd Annual Meeting of the Association for Computational Linguistics (ACL 2024).

Parallel Structures in Pre-training Data Yield In-Context Learning

Yanda Chen Columbia University, Chen Zhao New York University, Zhou Yu Columbia University, Kathleen McKeown Columbia University, He He New York University

Abstract:

Pre-trained language models (LMs) are capable of in-context learning (ICL): they can adapt to a task with only a few examples given in the prompt without any parameter update. However, it is unclear where this capability comes from as there is a stark distribution shift between pre-training text and ICL prompts. In this work, we study what patterns of the pretraining data contribute to ICL. We find that LMs’ ICL ability depends on parallel structures in the pre-training data—pairs of phrases following similar templates in the same context window. Specifically, we detect parallel structures by checking whether training on one phrase improves prediction of the other, and conduct ablation experiments to study their effect on ICL. We show that removing parallel structures in the pre-training data reduces LMs’ ICL accuracy by 51% (vs 2% from random ablation). This drop persists even when excluding common patterns such as n-gram repetitions and long-range dependency, showing the diversity and generality of parallel structures. A closer look at the detected parallel structures indicates that they cover diverse linguistic tasks and span long distances in the data.

Getting Serious about Humor: Crafting Humor Datasets with Unfunny Large Language Models

Zachary Horvitz Columbia University, Jingru Chen Columbia University, Rahul Aditya Columbia University, Harshvardhan Srivastava Columbia University, Robert West EPFL, Zhou Yu Columbia University, Kathleen McKeown Columbia University

Abstract:

Humor is a fundamental facet of human cognition and interaction. Yet, despite recent advances in natural language processing, humor detection remains a challenging task that is complicated by the scarcity of datasets that pair humorous texts with similar non-humorous counterparts. We investigate whether large language models (LLMs) can generate synthetic data for humor detection via editing texts. We benchmark LLMs on an existing human dataset and show that current LLMs display an impressive ability to “unfun” jokes, as judged by humans and as measured on the downstream task of humor detection. We extend our approach to a code-mixed English-Hindi humor dataset where we find that GPT-4’s synthetic data is highly rated by bilingual annotators and provides challenging adversarial examples for humor classifiers.

ICLEF: In-Context Learning with Expert Feedback for Explainable Style Transfer

Arkadiy Saakyan Columbia University and Smaranda Muresan Columbia University

Abstract:

While state-of-the-art large language models (LLMs) can excel at adapting text from one style to another, current work does not address the explainability of style transfer models. Recent work has explored generating textual explanations from larger teacher models and distilling them into smaller student models. One challenge with such an approach is that LLM outputs may contain errors that require expertise to correct, but gathering and incorporating expert feedback is difficult due to cost and availability. To address this challenge, we propose ICLEF, a novel human-AI collaboration approach to model distillation that incorporates scarce expert human feedback by combining in-context learning and model self-critique. We show that our method leads to generation of high-quality synthetic explainable style transfer datasets for formality (E-GYAFC) and subjective bias (E-WNC). Via automatic and human evaluation, we show that specialized student models finetuned on our datasets outperform generalist teacher models on the explainable style transfer task in one-shot settings, and perform competitively compared to few-shot teacher models, highlighting the quality of the data and the role of expert feedback. In an extrinsic task of authorship attribution, we show that explanations generated by smaller models fine-tuned on E-GYAFC are more predictive of authorship than explanations generated by few-shot teacher models.

ProLex: A Benchmark for Language Proficiency-oriented Lexical Substitution

Xuanming Zhang Columbia University, Zixun Chen Columbia University, and Zhou Yu Columbia University

Abstract:

Lexical Substitution discovers appropriate substitutes for a given target word in a context sentence. However, the task fails to consider substitutes that are of equal or higher proficiency than the target, an aspect that could be beneficial for language learners looking to improve their writing. To bridge this gap, we propose a new task: language proficiency-oriented lexical substitution. We also introduce ProLex, a novel benchmark designed to assess systems’ ability to generate not only appropriate substitutes but also substitutes that demonstrate better language proficiency. Besides the benchmark, we propose models that can automatically perform the new task. We show that our best model, a Llama2-13B model fine-tuned with task-specific synthetic data, outperforms ChatGPT by an average of 3.2% in F-score and achieves comparable results with GPT-4 on ProLex.

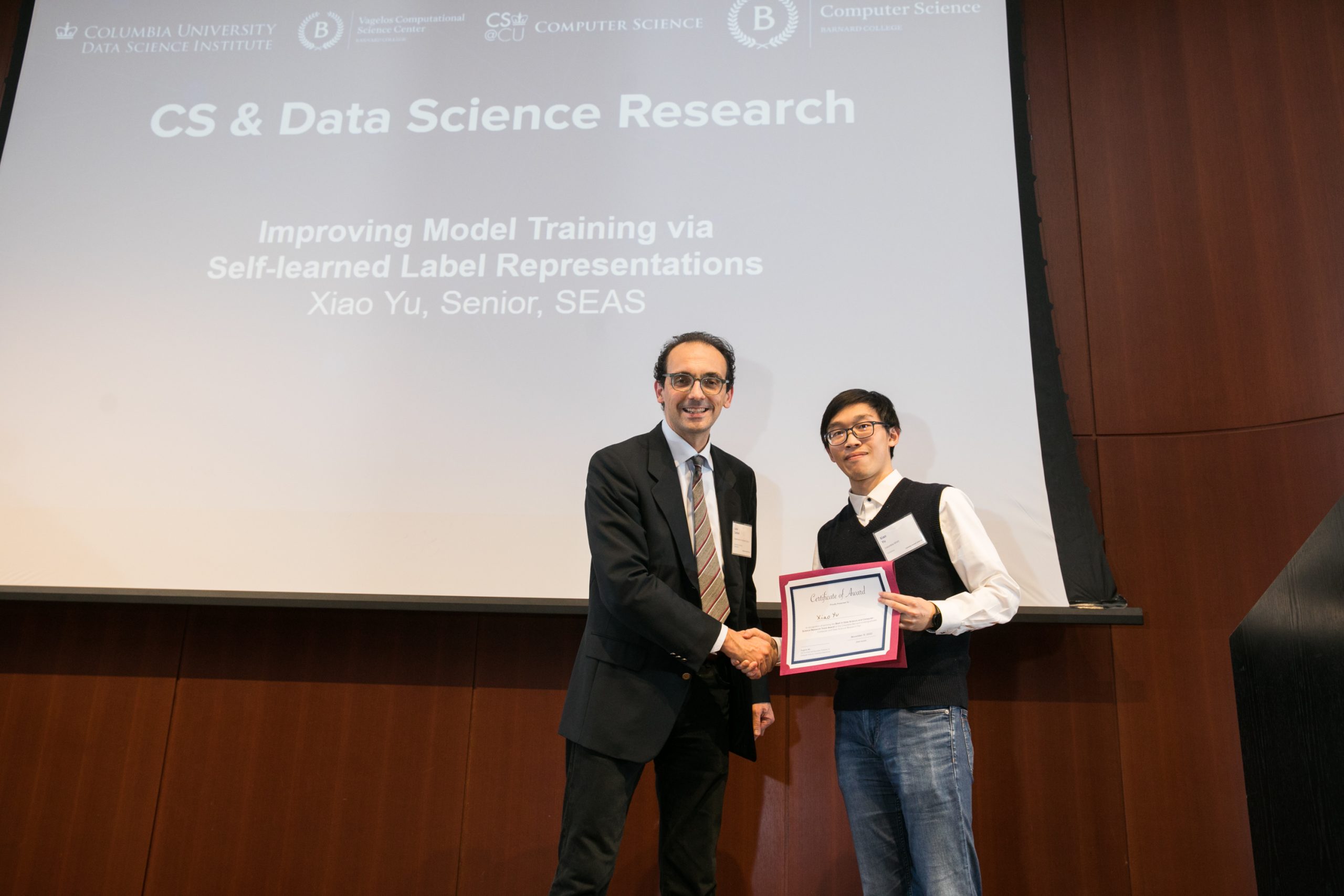

LOCALRQA: From Generating Data to Locally Training, Testing, and Deploying Retrieval-Augmented QA Systems

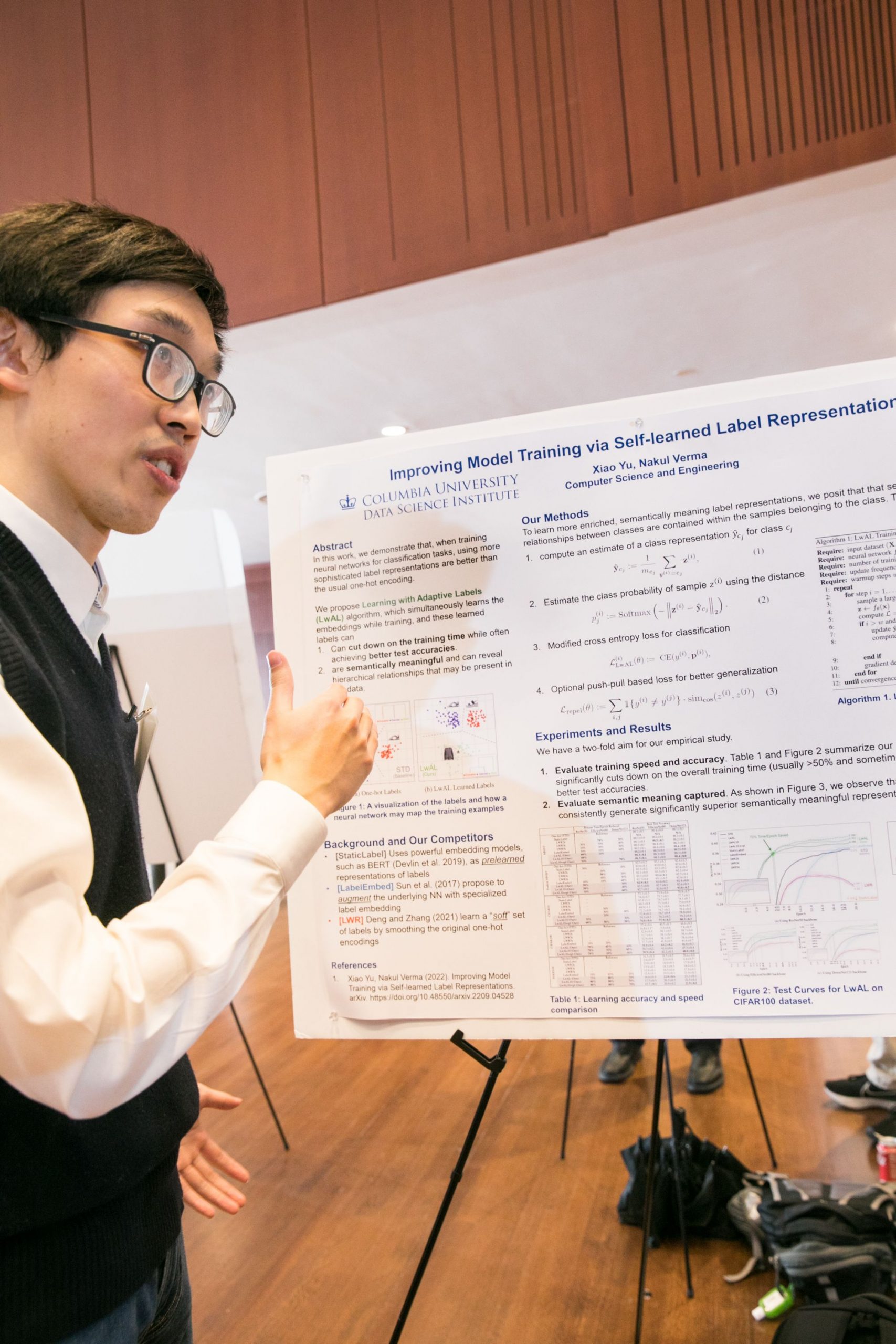

Xiao Yu Columbia University, Yunan Lu Columbia University, Zhou Yu Columbia University

Abstract:

Retrieval-augmented question-answering systems combine retrieval techniques with large language models to provide answers that are more accurate and informative. Many existing toolkits allow users to quickly build such systems using off-the-shelf models, but they fall short in supporting researchers and developers to customize the model training, testing, and deployment process. We propose LOCALRQA, an open-source toolkit that features a wide selection of model training algorithms, evaluation methods, and deployment tools curated from the latest research. As a showcase, we build QA systems using online documentation obtained from Databricks and Faire’s websites. We find 7B-models trained and deployed using LOCALRQA reach a similar performance compared to using OpenAI’s text-ada-002 and GPT-4-turbo.
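The retrieve-then-answer pattern described in this abstract can be illustrated with a minimal sketch. This is not the LOCALRQA API: the function names (`overlap_score`, `retrieve`, `build_prompt`), the word-overlap scoring, and the sample documents are all hypothetical stand-ins. A real system would use a trained retriever and pass the assembled prompt to a language model.

```python
import re
from collections import Counter

def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())

def overlap_score(query, doc):
    # Toy relevance score: number of query tokens that also occur in the document.
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)

def retrieve(query, docs, k=2):
    # Rank documents by the toy score and keep the top k.
    ranked = sorted(docs, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    # Assemble the retrieved passages into a prompt for a (hypothetical) language model.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n{context}\nQuestion: {query}\nAnswer:"

# Made-up documentation snippets, for illustration only.
docs = [
    "Clusters can be resized from the compute page.",
    "Invoices are emailed at the start of each month.",
    "To resize a cluster, edit its worker count.",
]
query = "How do I resize a cluster?"
top = retrieve(query, docs, k=1)
print(build_prompt(query, top))
```

Swapping `overlap_score` for an embedding-based similarity is the kind of customization a toolkit in this space would support; the surrounding control flow stays the same.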

Large Language Models are Few-Shot Training Example Generators: A Case Study in Fallacy Recognition

Tariq Alhindi Mohamed bin Zayed University of Artificial Intelligence, Smaranda Muresan Columbia University, Preslav Nakov Mohamed bin Zayed University of Artificial Intelligence

Abstract:

Recognizing fallacies is crucial for ensuring the quality and validity of arguments across various domains. However, computational fallacy recognition faces challenges due to the diverse genres, domains, and types of fallacies found in datasets. This leads to a highly multiclass, and even multi-label, setup with substantial class imbalance. In this study, we aim to enhance existing models for fallacy recognition by incorporating additional context and by leveraging large language models to generate synthetic data, thus increasing the representation of the infrequent classes. We experiment with GPT-3.5 to generate synthetic examples and we examine the impact of prompt settings for this. Moreover, we explore zero-shot and few-shot scenarios to evaluate the effectiveness of using the generated examples for training smaller models within a unified fallacy recognition framework. Furthermore, we analyze the overlap between the synthetic data and existing fallacy datasets. Finally, we investigate the usefulness of providing supplementary context for detecting fallacy types that need such context, e.g., diversion fallacies. Our evaluation results demonstrate consistent improvements across fallacy types, datasets, and generators. The code and the synthetic datasets are all publicly available.

Brian Smith received an NSF CAREER Award to develop a framework that will improve digital imagery so that blind and low-vision (BLV) individuals can better perceive and interact with visual content in the digital realm.

The paper “An Empirical Study of API Stability and Adoption in the Android Ecosystem” was recognized as the Most Impactful Paper from among the published papers at ICSME ’13.

The projects will explore algorithmic fairness, unified methods for interpreting artistic images found on the internet, and the development of a differentially-private data market system.

“Revisiting Residue Codes for Modern Memories” from the Computer Engineering group is one of the Top Picks for IEEE Micro Magazine.

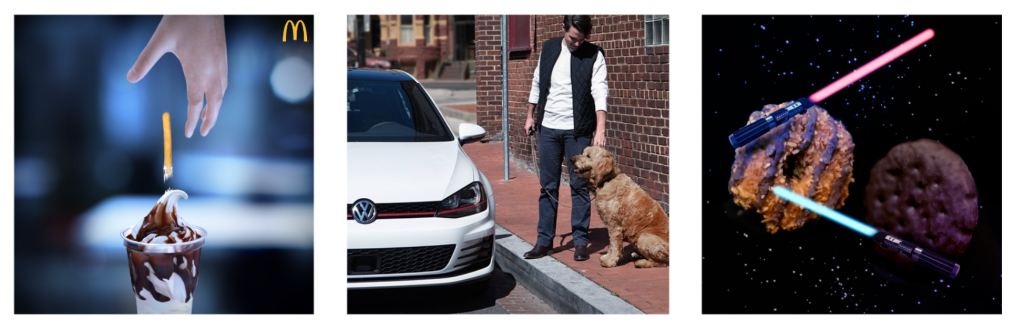

After growing up in Jiangsu, China, Sitong Wang studied electrical engineering at Chongqing University and the University of Cincinnati. During her co-op at the Hong Kong University of Science and Technology (HKUST), she was introduced to Human-Computer Interaction (HCI), a research area that studies and seeks to improve the interaction between humans and computers. She became interested in the field and went on to pursue her master’s at Columbia CS. Wang became intrigued by how computation can power the creative process when she worked on a design challenge that blends pop culture references with products or services and helped a group of students promote their beverage start-up.

Encouraged by the creative work she could do, Wang joined the Computational Design Lab as a PhD student to continue to work with Assistant Professor Lydia Chilton and explore ways to design AI-powered creativity support tools. She recently published her first first-author research paper at the Conference on Human Factors in Computing Systems (CHI 2023). She and colleagues designed PopBlends, a system that automatically suggests conceptual blends by connecting a user’s topic with a pop culture domain. Their user study shows that, with the system, people found twice as many blend suggestions as they did without it, and with half the mental demand.

We caught up with Wang to discuss her research, her work on generative AI tools, and what it is like to be a graduate student at Columbia.

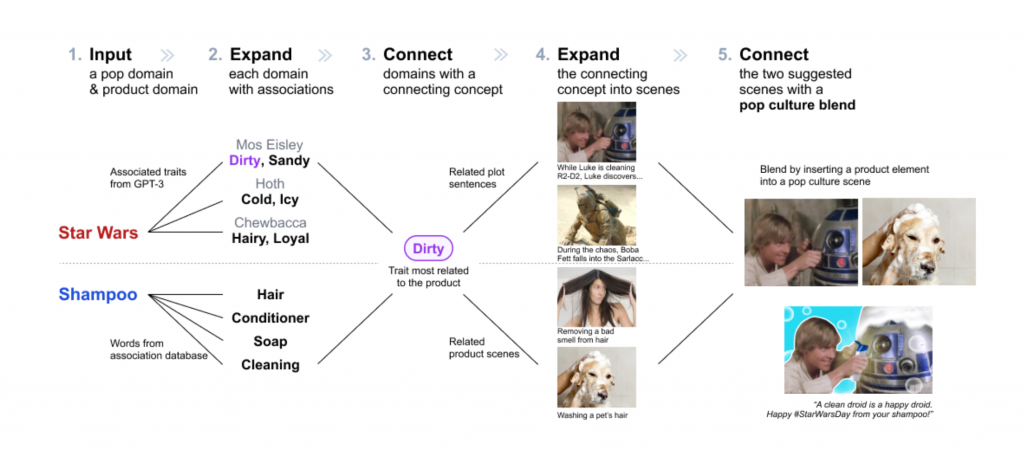

In the paper, we tackled the creative challenge of designing pop culture blends—images that use pop culture references to promote a product or service. We designed PopBlends, an automated pipeline consisting of three complementary strategies to find creative connections between a product and a pop culture domain.

Our work explores how large language models (LLMs) can provide associative knowledge and commonsense reasoning for creative tasks. We also discuss how to combine the power of traditional knowledge bases and LLMs to support creators in their divergent and convergent thinking.

It can help people, especially those without a design background, create pop culture blends more easily to advertise their brands. We want to make the design process more enjoyable and less cognitively demanding for everyone. We hope to enhance people’s creativity and productivity by scaffolding the creative process and using the power of computation to help people explore the design space more efficiently.

Pop culture is important in everyday communication. Pop culture blends are helpful for online campaigns because they capture attention and connect the product to something people already know and like. However, creating these images is a challenging conceptual blending task and requires finding connections between two very different domains.

So we built an automated computational pipeline that can effectively support divergent and convergent thinking in finding such creative connections. We explored how to apply generative AI to creative workflows to assist people better. Generative AI is powerful, but it is not perfect, so it is valuable to use different strategies that combine a knowledge base (which is accurate) and an LLM (which has a vast amount of data) to support creative tasks.

Conceptual blending is complex: the design space is vast and valuable connections are rare. To tackle this challenge, we need to scaffold the ideation process and combine the intelligence of humans and machines. When we started this project, GPT-3 was not yet available; we tried traditional NLP techniques to find attribute associations (e.g., Chewbacca is fluffy) but faced challenges. Then, by chance, we tried GPT-3, which worked well with the necessary prompt engineering.

I was surprised by the associative reasoning capability of LLMs, which are technically models that predict the most probable next word. They easily listed related concepts for different domains and could suggest possible creative connections. I was also surprised by the hallucinations the LLMs produced in our experiments: the models could say things that were not true with great confidence.

As an emerging technology, LLMs are powerful in many ways and open up new opportunities for the computational design field. However, LLMs currently have a lot of limitations; it is essential to explore how to build system architectures around them to produce valuable results for people.

I was both nervous and excited because it had been a long time since I had presented in front of a crowd (since we did everything online during COVID). It was also my first time presenting at a computing conference, and the “Large Language Models” session I attended was very popular.

I am grateful to my labmate Vivian Liu, who provided valuable advice, helped me rehearse, and took pictures of me. The presentation went well, and I am glad we had the opportunity to present our work to a large audience of researchers. I would also like to express my gratitude to the researchers I met during the conference, as they provided encouragement and helpful tips that greatly contributed to my experience.

I am working on a tool to help journalists transform their print articles into reels using generative AI by assisting them in the creative stages of producing scripts, character boards, and storyboards. In this work, in addition to LLMs, we incorporate text-to-image models and try to combine the power of both to support creators.

During the summer, I will work as a research intern at Adobe, where I will be focusing on AI and video authoring. Our work will revolve around facilitating the future of podcast video creation.

My undergraduate program offered great co-op opportunities that allowed me to explore different paths, including roles as an engineer, UI designer, and research intern across Chongqing, Charlottesville, and Hong Kong. During my final co-op, I had the opportunity to work in the HCI lab at the Hong Kong University of Science and Technology (HKUST). This experience ignited my passion for HCI research and marked the beginning of my research journey in this field.

I enjoy exploring unanswered questions, particularly those that reside at the intersection of multiple disciplines. A PhD program provides an excellent opportunity to work on the problems that interest me the most. In addition, I think the training provided at the PhD level can enhance essential skills such as leadership, collaboration, critical thinking, and effective communication.

My research interest lies in creativity support within the HCI field. I am particularly interested in exploring the role of multimodal generative AI in creativity support tools. I enjoy developing co-creative interactive systems to support everyone in their everyday creative tasks.

I want to explore the role of generative AI models in future creativity support tools and build co-creative intelligent systems that support multimodal creativity, especially in the dimensions of audio and videos, as they are how we interact with the world. I also want to explore some theoretical questions, such as the overtrust/overreliance in AI, and see how we might understand and resolve them.

I love the vibrant environment of Columbia and NYC and how Columbia is strong in diverse disciplines, such as journalism, business, and law. It is an ideal place to do multi-disciplinary collaborative research.

Also, I got to know Professor Chilton well during my master’s at Columbia. She is incredibly supportive and wonderful, and we share many common interests. That is why I chose to continue to work with her for my PhD journey.

The highlight would be when I witnessed the success of the students I mentored. It was such a rewarding process to guide and help undergraduate students interested in HCI research begin their journey.

Enjoy your time in NYC! Please don’t burn yourself out; learn how to manage your time efficiently. Don’t be afraid to try new things—start with manageable tasks, but also step out of your comfort zone. You will have fun!

If you want to do research, find research questions that genuinely interest you and be prepared to face challenges. Most importantly, persevere and trust yourself and your collaborators. Your efforts will eventually pay off!

Research from the department has been accepted to the 2023 Computer Vision and Pattern Recognition (CVPR) Conference. The annual event explores machine learning, artificial intelligence, and computer vision research and its applications.

CoWs on Pasture: Baselines and Benchmarks for Language-Driven Zero-Shot Object Navigation

Samir Yitzhak Gadre Columbia University, Mitchell Wortsman University of Washington, Gabriel Ilharco University of Washington, Ludwig Schmidt University of Washington, Shuran Song Columbia University

For robots to be generally useful, they must be able to find arbitrary objects described by people (i.e., be language-driven) even without expensive navigation training on in-domain data (i.e., perform zero-shot inference). We explore these capabilities in a unified setting: language-driven zero-shot object navigation (L-ZSON). Inspired by the recent success of open-vocabulary models for image classification, we investigate a straightforward framework, CLIP on Wheels (CoW), to adapt open-vocabulary models to this task without fine-tuning. To better evaluate L-ZSON, we introduce the Pasture benchmark, which considers finding uncommon objects, objects described by spatial and appearance attributes, and hidden objects described relative to visible objects. We conduct an in-depth empirical study by directly deploying 21 CoW baselines across Habitat, RoboTHOR, and Pasture. In total, we evaluate over 90k navigation episodes and find that (1) CoW baselines often struggle to leverage language descriptions, but are proficient at finding uncommon objects. (2) A simple CoW, with CLIP-based object localization and classical exploration — and no additional training — matches the navigation efficiency of a state-of-the-art ZSON method trained for 500M steps on Habitat MP3D data. This same CoW provides a 15.6 percentage point improvement in success over a state-of-the-art RoboTHOR ZSON model.

Towards Fast Adaptation of Pretrained Contrastive Models for Multi-Channel Video-Language Retrieval

Xudong Lin Columbia University, Simran Tiwari Columbia University, Shiyuan Huang Columbia University, Manling Li UIUC, Mike Zheng Shou National University of Singapore, Heng Ji UIUC, Shih-Fu Chang Columbia University

Multi-channel video-language retrieval requires models to understand information from different channels (e.g. video+question, video+speech) to correctly link a video with a textual response or query. Fortunately, contrastive multimodal models are shown to be highly effective at aligning entities in images/videos and text, e.g., CLIP; text contrastive models have recently been studied extensively for their strong ability to produce discriminative sentence embeddings, e.g., SimCSE. However, there is not a clear way to quickly adapt these two lines of work to multi-channel video-language retrieval with limited data and resources. In this paper, we identify a principled model design space with two axes: how to represent videos and how to fuse video and text information. Based on a categorization of recent methods, we investigate the options of representing videos using continuous feature vectors or discrete text tokens; for the fusion method, we explore the use of a multimodal transformer or a pretrained contrastive text model. We extensively evaluate the four combinations on five video-language datasets. We surprisingly find that discrete text tokens coupled with a pretrained contrastive text model yield the best performance, which can even outperform the state of the art on the iVQA and How2QA datasets without additional training on millions of video-text data. Further analysis shows that this is because representing videos as text tokens captures the key visual information and text tokens are naturally aligned with text models that are strong retrievers after the contrastive pretraining process. All the empirical analysis establishes a solid foundation for future research on affordable and upgradable multimodal intelligence.

DiGeo: Discriminative Geometry-Aware Learning for Generalized Few-Shot Object Detection

Jiawei Ma Columbia University, Yulei Niu Columbia University, Jincheng Xu Columbia University, Shiyuan Huang Columbia University, Guangxing Han Columbia University, Shih-Fu Chang Columbia University

Generalized few-shot object detection aims to achieve precise detection on both base classes with abundant annotations and novel classes with limited training data. Existing approaches enhance few-shot generalization with the sacrifice of base-class performance, or maintain high precision in base-class detection with limited improvement in novel-class adaptation. In this paper, we point out the reason is insufficient Discriminative feature learning for all of the classes. As such, we propose a new training framework, DiGeo, to learn Geometry-aware features of inter-class separation and intra-class compactness. To guide the separation of feature clusters, we derive an offline simplex equiangular tight frame (ETF) classifier whose weights serve as class centers and are maximally and equally separated. To tighten the cluster for each class, we include adaptive class-specific margins into the classification loss and encourage the features close to the class centers. Experimental studies on two few-shot benchmark datasets (VOC, COCO) and one long-tail dataset (LVIS) demonstrate that, with a single model, our method can effectively improve generalization on novel classes without hurting the detection of base classes.
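The "maximally and equally separated" class centers mentioned in this abstract are a property of the simplex equiangular tight frame (ETF). The sketch below builds one from the standard textbook construction and checks that property numerically. It is an illustration under assumptions, not the DiGeo code: the function name `simplex_etf` is hypothetical, and the embedding dimension is set equal to the number of classes for simplicity.

```python
import math

def simplex_etf(num_classes):
    """Return num_classes unit vectors (one per row) forming a simplex ETF.

    Standard construction: sqrt(K / (K - 1)) * (I - (1/K) * ones), with no extra rotation.
    """
    k = num_classes
    scale = math.sqrt(k / (k - 1))
    return [
        [scale * ((1.0 if i == j else 0.0) - 1.0 / k) for j in range(k)]
        for i in range(k)
    ]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

centers = simplex_etf(4)

# Every center has unit norm, and every pair of distinct centers has the
# same cosine similarity, -1 / (K - 1), the most negative value achievable
# simultaneously for K unit vectors.
for i, ci in enumerate(centers):
    for j, cj in enumerate(centers):
        print(i, j, round(dot(ci, cj), 4))
```

Because the centers are fixed in advance rather than learned, a classifier built on them cannot be pulled toward the classes with the most training data, which matches the motivation given in the abstract.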

Supervised Masked Knowledge Distillation for Few-Shot Transformers

Han Lin Columbia University, Guangxing Han Columbia University, Jiawei Ma Columbia University, Shiyuan Huang Columbia University, Xudong Lin Columbia University, Shih-Fu Chang Columbia University

Vision Transformers (ViTs) emerge to achieve impressive performance on many data-abundant computer vision tasks by capturing long-range dependencies among local features. However, under few-shot learning (FSL) settings on small datasets with only a few labeled data, ViT tends to overfit and suffers from severe performance degradation due to its absence of CNN-alike inductive bias. Previous works in FSL avoid this problem either through the help of self-supervised auxiliary losses, or through the dexterous use of label information under supervised settings. But the gap between self-supervised and supervised few-shot Transformers is still unfilled. Inspired by recent advances in self-supervised knowledge distillation and masked image modeling (MIM), we propose a novel Supervised Masked Knowledge Distillation model (SMKD) for few-shot Transformers which incorporates label information into self-distillation frameworks. Compared with previous self-supervised methods, we allow intra-class knowledge distillation on both class and patch tokens, and introduce the challenging task of masked patch tokens reconstruction across intra-class images. Experimental results on four few-shot classification benchmark datasets show that our method with simple design outperforms previous methods by a large margin and achieves a new state-of-the-art. Detailed ablation studies confirm the effectiveness of each component of our model. Code for this paper is publicly available.

FLEX: Full-Body Grasping Without Full-Body Grasps

Purva Tendulkar Columbia University, Dídac Surís Columbia University, Carl Vondrick Columbia University

Synthesizing 3D human avatars interacting realistically with a scene is an important problem with applications in AR/VR, video games and robotics. Towards this goal, we address the task of generating a virtual human — hands and full body — grasping everyday objects. Existing methods approach this problem by collecting a 3D dataset of humans interacting with objects and training on this data. However, 1) these methods do not generalize to different object positions and orientations, or to the presence of furniture in the scene, and 2) the diversity of their generated full-body poses is very limited. In this work, we address all the above challenges to generate realistic, diverse full-body grasps in everyday scenes without requiring any 3D full-body grasping data. Our key insight is to leverage the existence of both full-body pose and hand grasping priors, composing them using 3D geometrical constraints to obtain full-body grasps. We empirically validate that these constraints can generate a variety of feasible human grasps that are superior to baselines both quantitatively and qualitatively. More details are available on the project webpage.

Humans As Light Bulbs: 3D Human Reconstruction From Thermal Reflection

Ruoshi Liu Columbia University, Carl Vondrick Columbia University

The relatively hot temperature of the human body causes people to turn into long-wave infrared light sources. Since this emitted light has a larger wavelength than visible light, many surfaces in typical scenes act as infrared mirrors with strong specular reflections. We exploit the thermal reflections of a person onto objects in order to locate their position and reconstruct their pose, even if they are not visible to a normal camera. We propose an analysis-by-synthesis framework that jointly models the objects, people, and their thermal reflections, which combines generative models with differentiable rendering of reflections. Quantitative and qualitative experiments show our approach works in highly challenging cases, such as with curved mirrors or when the person is completely unseen by a normal camera.

Tracking Through Containers and Occluders in the Wild

Basile Van Hoorick Columbia University, Pavel Tokmakov Toyota Research Institute, Simon Stent Woven Planet, Jie Li Toyota Research Institute, Carl Vondrick Columbia University

Tracking objects with persistence in cluttered and dynamic environments remains a difficult challenge for computer vision systems. In this paper, we introduce TCOW, a new benchmark and model for visual tracking through heavy occlusion and containment. We set up a task where the goal is to, given a video sequence, segment both the projected extent of the target object, as well as the surrounding container or occluder whenever one exists. To study this task, we create a mixture of synthetic and annotated real datasets to support both supervised learning and structured evaluation of model performance under various forms of task variation, such as moving or nested containment. We evaluate two recent transformer-based video models and find that while they can be surprisingly capable of tracking targets under certain settings of task variation, there remains a considerable performance gap before we can claim a tracking model to have acquired a true notion of object permanence.

Doubly Right Object Recognition: A Why Prompt for Visual Rationales

Chengzhi Mao Columbia University, Revant Teotia Columbia University, Amrutha Sundar Columbia University, Sachit Menon Columbia University, Junfeng Yang Columbia University, Xin Wang Microsoft Research, Carl Vondrick Columbia University

Many visual recognition models are evaluated only on their classification accuracy, a metric for which they obtain strong performance. In this paper, we investigate whether computer vision models can also provide correct rationales for their predictions. We propose a “doubly right” object recognition benchmark, where the metric requires the model to simultaneously produce both the right labels as well as the right rationales. We find that state-of-the-art visual models, such as CLIP, often provide incorrect rationales for their categorical predictions. However, by transferring the rationales from language models into visual representations through a tailored dataset, we show that we can learn a “why prompt,” which adapts large visual representations to produce correct rationales. Visualizations and empirical experiments show that our prompts significantly improve performance on doubly right object recognition, in addition to zero-shot transfer to unseen tasks and datasets.

What You Can Reconstruct From a Shadow

Ruoshi Liu Columbia University, Sachit Menon Columbia University, Chengzhi Mao Columbia University, Dennis Park Toyota Research Institute, Simon Stent Woven Planet, Carl Vondrick Columbia University

3D reconstruction is a fundamental problem in computer vision, and the task is especially challenging when the object to reconstruct is partially or fully occluded. We introduce a method that uses the shadows cast by an unobserved object in order to infer the possible 3D volumes under occlusion. We create a differentiable image formation model that allows us to jointly infer the 3D shape of an object, its pose, and the position of a light source. Since the approach is end-to-end differentiable, we are able to integrate learned priors of object geometry in order to generate realistic 3D shapes of different object categories. Experiments and visualizations show that the method is able to generate multiple possible solutions that are consistent with the observation of the shadow. Our approach works even when the position of the light source and object pose are both unknown. Our approach is also robust to real-world images where ground-truth shadow mask is unknown.

CLIP-Sculptor: Zero-Shot Generation of High-Fidelity and Diverse Shapes From Natural Language

Aditya Sanghi Autodesk Research, Rao Fu Brown University, Vivian Liu Columbia University, Karl D.D. Willis Autodesk Research, Hooman Shayani Autodesk Research, Amir H. Khasahmadi Autodesk Research, Srinath Sridhar Brown University, Daniel Ritchie Brown University

Recent works have demonstrated that natural language can be used to generate and edit 3D shapes. However, these methods generate shapes with limited fidelity and diversity. We introduce CLIP-Sculptor, a method to address these constraints by producing high-fidelity and diverse 3D shapes without the need for (text, shape) pairs during training. CLIP-Sculptor achieves this in a multi-resolution approach that first generates in a low-dimensional latent space and then upscales to a higher resolution for improved shape fidelity. For improved shape diversity, we use a discrete latent space which is modeled using a transformer conditioned on CLIP’s image-text embedding space. We also present a novel variant of classifier-free guidance, which improves the accuracy-diversity trade-off. Finally, we perform extensive experiments demonstrating that CLIP-Sculptor outperforms state-of-the-art baselines.
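For readers unfamiliar with classifier-free guidance, the sketch below shows the standard formulation applied to next-token logits over a discrete latent vocabulary. It is not the novel variant that the abstract refers to, whose details are not given here. The function names and the toy logit values are assumptions made for illustration.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of logits.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def guided_logits(cond, uncond, scale):
    # Standard classifier-free guidance: start from the unconditional logits
    # and move along the direction (cond - uncond), scaled by the guidance weight.
    # scale = 0 gives the unconditional model, scale = 1 the conditional one.
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]

# Toy logits for three latent tokens, with and without the text condition.
cond = [2.0, 0.0, -1.0]
uncond = [1.0, 1.0, 0.0]

for scale in (0.0, 1.0, 3.0):
    probs = softmax(guided_logits(cond, uncond, scale))
    print(scale, [round(p, 3) for p in probs])
```

Larger guidance weights concentrate probability on the tokens the condition favors, which tends to raise fidelity to the prompt while reducing sample diversity. That tension is the accuracy-diversity trade-off named in the abstract.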

Research papers from the department were accepted to the 11th International Conference on Learning Representations (ICLR 2023). ICLR is the premier conference on deep learning where researchers gather to discuss their work in the fields of artificial intelligence, statistics, and data science.

Visual Classification via Description from Large Language Models

Sachit Menon Columbia University, Carl Vondrick Columbia University

Keywords: vision-language models, CLIP, prompting, GPT-3, large language models, zero-shot recognition, multimodal

TL;DR: We enhance zero-shot recognition with vision-language models by comparing to category descriptors from GPT-3, enabling better performance in an interpretable setting that also allows for the incorporation of new concepts and bias mitigation.

Abstract:

Vision-language models such as CLIP have shown promising performance on a variety of recognition tasks using the standard zero-shot classification procedure — computing similarity between the query image and the embedded words for each category. By only using the category name, they neglect to make use of the rich context of additional information that language affords. The procedure gives no intermediate understanding of why a category is chosen and furthermore provides no mechanism for adjusting the criteria used towards this decision. We present an alternative framework for classification with VLMs, which we call classification by description. We ask VLMs to check for descriptive features rather than broad categories: to find a tiger, look for its stripes; its claws; and more. By basing decisions on these descriptors, we can provide additional cues that encourage using the features we want to be used. In the process, we can get a clear idea of what the model “thinks” it is seeing to make its decision; it gains some level of inherent explainability. We query large language models (e.g., GPT-3) for these descriptors to obtain them in a scalable way. Extensive experiments show our framework has numerous advantages past interpretability. We show improvements in accuracy on ImageNet across distribution shifts; demonstrate the ability to adapt VLMs to recognize concepts unseen during training; and illustrate how descriptors can be edited to effectively mitigate bias compared to the baseline.
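The idea of scoring a category by its descriptors can be sketched in a few lines. This is a minimal illustration, assuming the class score is the mean cosine similarity between the image embedding and each descriptor embedding; the two-dimensional vectors are toy stand-ins for real VLM embeddings, and all names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify_by_description(image_emb, descriptor_embs):
    """Pick the class whose descriptors best match the image on average.

    descriptor_embs maps a class name to a list of descriptor embeddings
    (assumed to come from a text encoder; toy vectors are used here).
    """
    scores = {
        name: sum(cosine(image_emb, d) for d in descs) / len(descs)
        for name, descs in descriptor_embs.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Toy embeddings: the image aligns with the "tiger" descriptors.
image = [1.0, 0.0]
descriptors = {
    "tiger": [[1.0, 0.0], [0.8, 0.6]],   # e.g. "stripes", "claws"
    "zebra": [[0.0, 1.0], [0.6, 0.8]],
}
label, class_scores = classify_by_description(image, descriptors)
```

Because each descriptor contributes its own similarity, the per-descriptor terms can be inspected to see which features drove the decision.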

CROM: Continuous Reduced-Order Modeling of PDEs Using Implicit Neural Representations

Peter Yichen Chen Columbia University, Jinxu Xiang Columbia University, Dong Heon Cho Columbia University, Yue Chang University of Toronto, G A Pershing Columbia University, Henrique Teles Maia Columbia University, Maurizio M Chiaramonte Meta Reality Labs Research, Kevin Thomas Carlberg Meta Reality Labs Research, Eitan Grinspun University of Toronto

Keywords: PDE, implicit neural representation, neural field, latent space traversal, reduced-order modeling, numerical methods

TL;DR: We accelerate PDE solvers via rapid latent space traversal of continuous vector fields leveraging implicit neural representations.

Abstract:

The long runtime of high-fidelity partial differential equation (PDE) solvers makes them unsuitable for time-critical applications. We propose to accelerate PDE solvers using reduced-order modeling (ROM). Whereas prior ROM approaches reduce the dimensionality of discretized vector fields, our continuous reduced-order modeling (CROM) approach builds a low-dimensional embedding of the continuous vector fields themselves, not their discretization. We represent this reduced manifold using continuously differentiable neural fields, which may train on any and all available numerical solutions of the continuous system, even when they are obtained using diverse methods or discretizations. We validate our approach on an extensive range of PDEs with training data from voxel grids, meshes, and point clouds. Compared to prior discretization-dependent ROM methods, such as linear subspace proper orthogonal decomposition (POD) and nonlinear manifold neural-network-based autoencoders, CROM features higher accuracy, lower memory consumption, dynamically adaptive resolutions, and applicability to any discretization. For equal latent space dimension, CROM exhibits 79x and 49x better accuracy, and 39x and 132x smaller memory footprint, than POD and autoencoder methods, respectively. Experiments demonstrate 109x and 89x wall-clock speedups over unreduced models on CPUs and GPUs, respectively. Videos and codes are available on the project page: https://crom-pde.github.io

Quantile Risk Control: A Flexible Framework for Bounding the Probability of High-Loss Predictions

Jake Snell Princeton University, Thomas P Zollo Columbia University, Zhun Deng Columbia University, Toniann Pitassi Columbia University, Richard Zemel Columbia University

Keywords: distribution-free uncertainty quantification

TL;DR: We propose a framework to rigorously and flexibly control the quantiles of the loss distribution incurred by a predictor or set of predictors.

Abstract:

Rigorous guarantees about the performance of predictive algorithms are necessary in order to ensure their responsible use. Previous work has largely focused on bounding the expected loss of a predictor, but this is not sufficient in many risk-sensitive applications where the distribution of errors is important. In this work, we propose a flexible framework to produce a family of bounds on quantiles of the loss distribution incurred by a predictor. Our method takes advantage of the order statistics of the observed loss values rather than relying on the sample mean alone. We show that a quantile is an informative way of quantifying predictive performance, and that our framework applies to a variety of quantile-based metrics, each targeting important subsets of the data distribution. We analyze the theoretical properties of our proposed method and demonstrate its ability to rigorously control loss quantiles on several real-world datasets.
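To make the role of order statistics concrete, here is a minimal sketch of a classical distribution-free upper bound on a loss quantile. It is not necessarily the procedure used in the paper; the function names and the sample losses are assumptions. The bound picks the smallest k such that the k-th smallest observed loss exceeds the beta-quantile with probability at least 1 - delta, using the binomial tail.

```python
from math import comb


def binom_upper_tail(n, p, k):
    """P(Binomial(n, p) >= k)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def quantile_upper_bound(losses, beta, delta):
    """Upper confidence bound on the beta-quantile of an i.i.d. loss sample.

    Returns the smallest order statistic that is at least the true
    beta-quantile with probability >= 1 - delta, or None when the sample
    is too small to give any such guarantee.
    """
    n = len(losses)
    ordered = sorted(losses)
    for k in range(1, n + 1):
        # The k-th order statistic falls below the quantile only if at
        # least k samples do, which has probability at most this tail.
        if binom_upper_tail(n, beta, k) <= delta:
            return ordered[k - 1]
    return None


# Made-up loss values for illustration.
bound = quantile_upper_bound([0.1, 0.5, 0.2, 0.9, 0.3], beta=0.5, delta=0.05)
```

With only five samples the bound on the median has to fall back on the largest observed loss; more data lets the chosen order statistic move closer to the empirical quantile.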

Causal Imitation Learning via Inverse Reinforcement Learning

Kangrui Ruan Columbia University, Junzhe Zhang Columbia University, Xuan Di Columbia University, Elias Bareinboim Columbia University

Keywords: Causal Inference, Graphical Models

TL;DR: This paper proposes novel inverse reinforcement learning methods to learn effective imitating policies from the expert’s demonstrations when unobserved confounders are present.

Abstract:

One of the most common ways children learn when unfamiliar with the environment is by mimicking adults. Imitation learning concerns an imitator learning to behave in an unknown environment from an expert’s demonstration; reward signals remain latent to the imitator. This paper studies imitation learning through causal lenses and extends the analysis and tools developed for behavior cloning (Zhang, Kumor, Bareinboim, 2020) to inverse reinforcement learning. First, we propose novel graphical conditions that allow the imitator to learn a policy performing as well as the expert’s behavior policy, even when the imitator and the expert’s state-action space disagree, and unobserved confounders (UCs) are present. When provided with parametric knowledge about the unknown reward function, such a policy may outperform the expert’s. Also, our method is easily extensible and allows one to leverage existing IRL algorithms even when UCs are present, including the multiplicative-weights algorithm (MWAL) (Syed & Schapire, 2008) and the generative adversarial imitation learning (GAIL) (Ho & Ermon, 2016). Finally, we validate our framework by simulations using real-world and synthetic data.

Neural Causal Models for Counterfactual Identification and Estimation

Kevin Muyuan Xia Columbia University, Yushu Pan Columbia University, Elias Bareinboim Columbia University

Keywords: causal inference, deep learning, neural models, neural causal models, causal identification, causal estimation, counterfactual

TL;DR: We solve the two problems of counterfactual identification and estimation from arbitrary surrogate experiments using a Generative Adversarial Network implementation of the Neural Causal Model.

Abstract:

Evaluating hypothetical statements about how the world would be had a different course of action been taken is arguably one key capability expected from modern AI systems. Counterfactual reasoning underpins discussions in fairness, the determination of blame and responsibility, credit assignment, and regret. In this paper, we study the evaluation of counterfactual statements through neural models. Specifically, we tackle two causal problems required to make such evaluations, i.e., counterfactual identification and estimation from an arbitrary combination of observational and experimental data. First, we show that neural causal models (NCMs) are expressive enough and encode the structural constraints necessary for performing counterfactual reasoning. Second, we develop an algorithm for simultaneously identifying and estimating counterfactual distributions. We show that this algorithm is sound and complete for deciding counterfactual identification in general settings. Third, considering the practical implications of these results, we introduce a new strategy for modeling NCMs using generative adversarial networks. Simulations corroborate the proposed methodology.

Understanding Zero-shot Adversarial Robustness for Large-Scale Models

Chengzhi Mao Columbia University, Scott Geng Columbia University, Junfeng Yang Columbia University, Xin Wang Microsoft Research, Carl Vondrick Columbia University

Keywords: Adversarial Robustness, Zero-Shot Recognition

Abstract:

Pretrained large-scale vision-language models like CLIP have exhibited strong generalization over unseen tasks. Yet imperceptible adversarial perturbations can significantly reduce CLIP’s performance on new tasks. In this work, we identify and explore the problem of adapting large-scale models for zero-shot adversarial robustness. We first identify two key factors during model adaptation, training losses and adaptation methods, that affect the model’s zero-shot adversarial robustness. We then propose a text-guided contrastive adversarial training loss, which aligns the text embeddings and the adversarial visual features with contrastive learning on a small set of training data. We apply this training loss to two adaptation methods, model finetuning and visual prompt tuning. We find that visual prompt tuning is more effective in the absence of texts, while finetuning wins in the presence of text guidance. Overall, our approach significantly improves the zero-shot adversarial robustness over CLIP, seeing an average improvement of 31 points over ImageNet and 15 zero-shot datasets. We hope this work can shed light on understanding the zero-shot adversarial robustness of large-scale models.

TempCLR: Temporal Alignment Representation with Contrastive Learning

Yuncong Yang Columbia University, Jiawei Ma Columbia University, Shiyuan Huang Columbia University, Long Chen Columbia University, Xudong Lin Columbia University, Guangxing Han Columbia University, Shih-Fu Chang Columbia University

Keywords: Representation learning, Global Sequence Alignment, Zero/Few-shot Transfer

TL;DR: Global sequence matching under temporal order consistency matters in contrastive-based video-paragraph/text learning.

Abstract:

Video representation learning has been successful in video-text pre-training for zero-shot transfer, where each sentence is trained to be close to the paired video clips in a common feature space. For long videos, given a paragraph of description where the sentences describe different segments of the video, by matching all sentence-clip pairs, the paragraph and the full video are aligned implicitly. However, such a unit-level similarity measure may ignore the global temporal context over a long time span, which inevitably limits the generalization ability. In this paper, we propose a contrastive learning framework TempCLR to compare the full video and the paragraph explicitly. As the video/paragraph is formulated as a sequence of clips/sentences, under the constraint of their temporal order, we use dynamic time warping to compute the minimum cumulative cost over sentence-clip pairs as the sequence-level distance. To explore the temporal dynamics, we break the consistency of temporal order by shuffling the video clips or sentences according to the temporal granularity. In this way, we obtain the representations for clips/sentences, which perceive the temporal information and thus facilitate the sequence alignment. In addition to pre-training on the video and paragraph, our approach can also generalize to matching between different video instances. We evaluate our approach on video retrieval, action step localization, and few-shot action recognition, and achieve consistent performance gains over all three tasks. Detailed ablation studies are provided to justify the approach design.
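The sequence-level distance described in the abstract can be illustrated with textbook dynamic time warping. This is a minimal sketch under the assumption that a sentence-clip cost matrix is already available; the matrices below are made-up values, and the code is not taken from the TempCLR implementation.

```python
def dtw_distance(cost):
    """Minimum cumulative cost of an order-preserving alignment.

    cost[i][j] is the cost of matching sentence i to clip j. The
    alignment starts at (0, 0), ends at the last sentence and clip, and
    only moves forward in time along either or both sequences.
    """
    n, m = len(cost), len(cost[0])
    inf = float("inf")
    acc = [[inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i][j] = cost[i - 1][j - 1] + min(
                acc[i - 1][j],      # advance the sentence only
                acc[i][j - 1],      # advance the clip only
                acc[i - 1][j - 1],  # advance both
            )
    return acc[n][m]


# Sentences and clips in matching temporal order: zero cost on the diagonal.
ordered = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
# The same sentences in reversed order no longer align cheaply.
shuffled = [[1, 1, 0], [1, 0, 1], [0, 1, 1]]

aligned_cost = dtw_distance(ordered)
shuffled_cost = dtw_distance(shuffled)
```

The shuffled sequence yields a larger distance than the ordered one, which is the kind of contrast that shuffling clips or sentences is meant to expose.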

The third-year PhD student is creating tools to help people with vision impairments navigate the world.

Imagine walking to your office from the subway station on a Monday morning. You notice a new café on the way, so you decide to take a detour and try a latté. That sounds like a normal way to start the week, right?

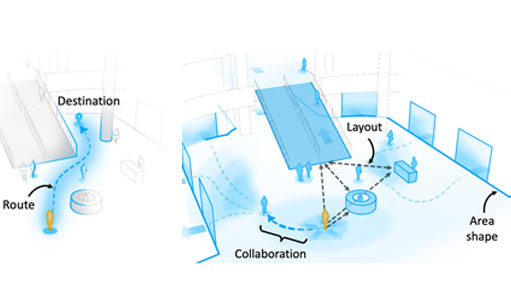

But for people who are blind or have low vision (BLV), this kind of spontaneous exploration while outside is challenging. Current navigation assistance systems (NAS) provide turn-by-turn instructions, but they do not allow visually impaired users to deviate from the shortest path to their destination or make decisions on the fly. As a result, BLV people often miss out on the freedom to go out and navigate on their own terms.

In a paper published at the ACM Conference On Computer-Supported Cooperative Work And Social Computing (CSCW ‘23), computer science researchers introduced the concept of “Exploration Assistance,” which is an evolution of current NASs that can support BLV people’s exploration in unfamiliar environments. Led by Gaurav Jain, the researchers investigated how NASs should be designed by interviewing BLV people, orientation and mobility instructors, and leaders of blind-serving organizations, to understand their specific needs and challenges. Their findings highlight the types of spatial information required for exploration beyond turn-by-turn instructions and the difficulties faced by BLV people when exploring alone or with the help of others.

Jain, who is advised by Assistant Professor Brian Smith, is a PhD student in the Computer-Enabled Abilities Laboratory (CEAL Lab), where researchers develop computers that help people perceive and interact with the world around them. Their paper presents the results of interviews with BLV people and other stakeholders to identify the types of spatial information BLV people need for exploration and the challenges BLV people face when exploring unfamiliar environments. The paper offers insights into the design and development of new navigation assistance systems that can support BLV people in exploring unfamiliar environments with greater spontaneity and agency.

Based on their findings, they presented several instances of NASs that support the exploration assistance paradigm and identified several challenges that need to be overcome to make these systems a reality. Jain hopes that his research will ultimately enable BLV people to experience greater agency and independence as they navigate and explore their environments. We sat down with Jain to learn more about his research, doing qualitative research, and the thought processes behind writing research papers.

This research is incredibly exciting for the blind and low vision (BLV) community, as it represents a significant step towards equal access and agency in exploring unfamiliar environments. For BLV people, the ability to navigate and explore independently is essential to daily life, and current navigation assistance systems often limit their ability to do so. By introducing the concept of exploration assistance, this research opens up new possibilities for BLV people to explore and discover their surroundings with greater spontaneity and freedom. This research has the potential to significantly improve the quality of life for BLV people and is a major development in the ongoing pursuit of accessibility and inclusion for all.

This was my first project as a PhD student in the CEAL lab. The project was initiated as a camera-based wearable NAS for BLV people, and we conducted several formative studies with BLV people.

As we progressed, we realized that there was a significant gap in the research community’s understanding of how NASs could support BLV people’s exploration in navigation. Based on these findings, we shifted our focus toward investigating this gap, and the paper I worked on was the result of this pivot. The paper is titled “I Want to Figure Things Out”: Supporting Exploration in Navigation for People with Visual Impairments.

Over the course of approximately one year, I had the opportunity to work on this project that challenged me to step outside of my comfort zone as a human-computer interaction (HCI) researcher. Before this project, my research experience had primarily focused on computer vision and deep learning. I was more at ease with HCI systems research, which involved designing, building, and evaluating tools and techniques to solve user problems.

This project, however, was a qualitative research study that aimed to gain a deeper understanding of user needs, behaviors, challenges, and attitudes toward technology through in-depth interviews, observations, and other qualitative data collection methods. To prepare for this project, I had to immerse myself in the field of accessibility and navigation assistance for BLV people and read extensively on papers that employed qualitative research methods.

Although it took some time for me to shift my mindset towards qualitative research, this project helped me become a more well-rounded researcher, as I now feel comfortable with both qualitative and systems research. Overall, this project was a significant personal and professional growth experience, as I was able to expand my research expertise and contribute to a worthy cause.

Writing the paper was a critical stage in the research process, and I approached it by first organizing my thoughts and drafting a clear outline. I started by creating an outline of the paper with section and subsection headers, accompanied by a brief summary of what I intended to discuss in each section. This process allowed me to see the overall structure of the paper and ensure that I covered all the essential elements.

Once I had a clear structure in mind, I began to tackle each section of the paper one by one, starting with the introduction and then moving on to the methods, results, and discussion sections. I iteratively refined my writing based on feedback from my advisor, lab mates, and friends.

Throughout the writing process, I also ensured that my writing was clear, concise, and easy to follow. I paid close attention to the flow of ideas and transitions between sections, making sure that each paragraph and sentence contributed to the overall argument and was well-supported by the evidence.

Overall, the process of writing the paper was challenging but rewarding. It allowed me to synthesize the research findings and present them in a compelling way, showcasing the impact of our work on the lives of BLV people.

Throughout the research process, I encountered various challenges that both surprised and tested me. Interviewing participants, in particular, proved to be an intriguing yet difficult task. Initially, I struggled to guide conversations naturally toward my research questions without leading participants toward a certain answer. However, with each interview, I became more confident and began to enjoy the process. Hearing firsthand from BLV people that our work could make a real impact on their lives was also incredibly rewarding.

Analyzing and synthesizing the interview data was another major challenge. Unlike quantitative data, conversations are often open-ended and context-dependent, making it difficult to separate my own biases from the interviewee’s responses. I spent a considerable amount of time reviewing the interview transcripts and identifying emerging themes. To facilitate this process, I leveraged tools like NVivo to better organize the interview data, and our team held several discussions to refine these themes. To ensure the accuracy of our interpretation, we sought feedback from two BLV interns who worked with us over the summer on another project.

Overall, this research experience pushed me to become more adaptable. While it presented its own unique set of challenges, I am proud to have contributed to a project that has the potential to create meaningful change in the lives of BLV people.

Yes, my experience with this research project has certainly changed my view on how to approach research. It has taught me the importance of keeping the paper in mind from the beginning of a project.

Now, I make a conscious effort to think about how I want to present my work and what story I want to tell with the research. This helps me gain more clarity on the direction of the project and how to steer it toward producing meaningful results. As part of my workflow, I now write early drafts of paper introductions even before developing any tools or systems. This allows me to zoom out from the day-to-day technical challenges and see the big picture, which is crucial in making sure that the research is both impactful and well-presented.

Writing a research paper can be a challenging task, but here are a few tips that have helped me make the process smoother:

Finally, one resource that I would totally recommend to every PhD student at Columbia is Adjunct Professor Janet Kayfetz’s class on Technical Writing. Her class is an excellent way to deeply understand research writing.

I am currently working on two exciting projects that further my research goal of developing inclusive physical and digital environments for BLV people. The first project involves enhancing the capabilities of smart streets, streets with sensors like cameras and computing power, to help BLV people navigate street intersections safely.

This project is part of the NSF Engineering Research Center for Smart Streetscapes’ application thrust. The second project is focused on making videos accessible to BLV people by creating high-quality audio descriptions available at scale.

My exposure to research during my undergrad was invaluable, as it allowed me to work on diverse projects utilizing computer vision for various applications such as biometric security and medical imaging. These experiences instilled in me a passion for the research process. It was fulfilling to be able to identify problems that I care about, explore solutions, and disseminate new knowledge.

While I knew I enjoyed research, it was the summer research fellowship at the Indian Institute of Science, where I collaborated with Professor P. K. Yalavarthy in the Medical Imaging Group, that crystallized my decision to pursue a PhD. The opportunity to work in a research lab, lead a project, and receive mentorship from an experienced advisor provided a glimpse of what a PhD program entails. I was excited by the prospect of being able to make a real-world impact by solving complex problems, and it was then that I decided to pursue a career in research.

I am interested in building Human-AI systems that embed AI technologies (e.g., computer vision) into human interactions to help BLV people better experience the world around them. My work on exploration assistance informs the design of future navigation assistance systems that enable BLV people to experience the physical world with more agency and spontaneity during navigation.

In addition to the physical world, I’ve also broadened my research focus to enhance BLV people’s experiences within the digital world. For example, I developed a system that makes it possible for BLV people to visualize the action in sports broadcasts rather than relying on other people’s descriptions of the game.

Accessibility research has traditionally focused on aiding daily-life activities and providing access to digital information for productivity and work, but there’s an increasing realization that providing access to everyday cultural experiences is equally important for inclusion and well-being.

This encompasses various forms of entertainment and recreation, such as watching TV, exploring museums, playing video games, listening to music, and engaging with social media. Ensuring that everyone has equal opportunities to enjoy these experiences is an emerging challenge. My goal is to design human-AI systems that enhance such experiences.

I was drawn to Columbia CS because of the type of problems my advisor works on. His research focused on creating systems that have a direct impact on people’s lives, where evaluating the user’s experience with the system is a key component.

This was a departure from my undergraduate research, where I focused on building systems to achieve high accuracy and efficiency. I found this user-centered approach to be extremely exciting, especially in the context of his project “RAD,” which aimed to make video games accessible to blind gamers. It was a super exciting prospect to be working on similar problems where you can see firsthand how people react to and benefit from your solutions. This still remains one of the most fulfilling aspects of HCI research for me. In the end, this is what led me to choose Columbia and work with Brian Smith.

The first thing that comes to mind is the people that I have had the pleasure of working with and meeting. I am grateful for the opportunity to learn from my advisor and appreciate the incredible atmosphere he has created for me to thrive.

Additionally, I have been fortunate enough to make some amazing friends here at Columbia who have become a vital support system. Balancing work with passions outside of work has also been important to me, and I am grateful for the chance to engage with student clubs such as the dance team, Columbia Bhangra, and meet some amazing people there as well. Overall, the community at Columbia has been a highlight for me.

One thing that students wanting to do research should know is that research involves a lot of uncertainty and ambiguity. In fact, dealing with uncertainty can be one of the most challenging aspects of research, even more so than learning the technical skills required to complete a project.

In my own experience, staying motivated about the problem statement has been key to powering through those uncertain moments. Therefore, it is important to be true to yourself about what you are really excited about and work on those problems. Ultimately, this approach can go a long way in helping you navigate your time at Columbia and make the most of your research opportunities.

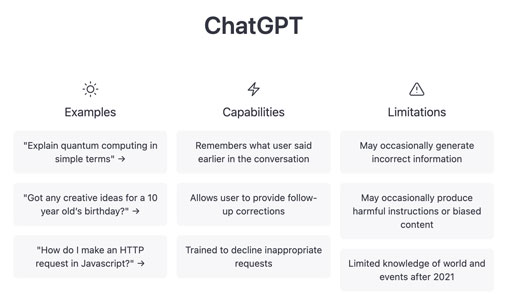

OpenAI’s ChatGPT is an artificial intelligence (AI) chatbot that is trained to follow the instruction in a prompt and give a detailed response. It is built upon GPT-3, a type of large language model (LLM) that predicts and generates text. Given a sequence of words, it will predict the word that has the highest probability of following next (kind of like autocomplete). These models are trained on huge datasets that allow them to generate answers to questions. ChatGPT works quickly and gives answers within seconds, and it also learns from every interaction and improves daily.
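The "autocomplete" idea of picking the most probable next word can be illustrated with a toy bigram counter. This is only a sketch of the prediction step: large language models learn these probabilities with neural networks over far longer contexts, and the corpus and function names here are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up corpus; "cat" follows "the" more often than any other word.
model = train_bigram("the cat sat on the mat the cat ate the fish")
next_word = predict_next(model, "the")
```

Repeating the prediction step, feeding each predicted word back in as context, is how such a model generates longer text one word at a time.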

It can create a letter to your super asking for a repair to be done, write code and fix bugs, and suggest plot summaries for novels. But that does not mean that it is perfect. The problem with LLMs is that they can “hallucinate” and make things up. ChatGPT is guilty of this; some of the things it presents as fact do not even exist. It is also not trained to be truthful and it answers queries with a lot of confidence and authority, which is worrisome.

It is being compared to the last great tech disruption, the internet’s onset in the 1990s. We asked CS professors what the technology could do and how to use the tool the right way.

Vishal Misra

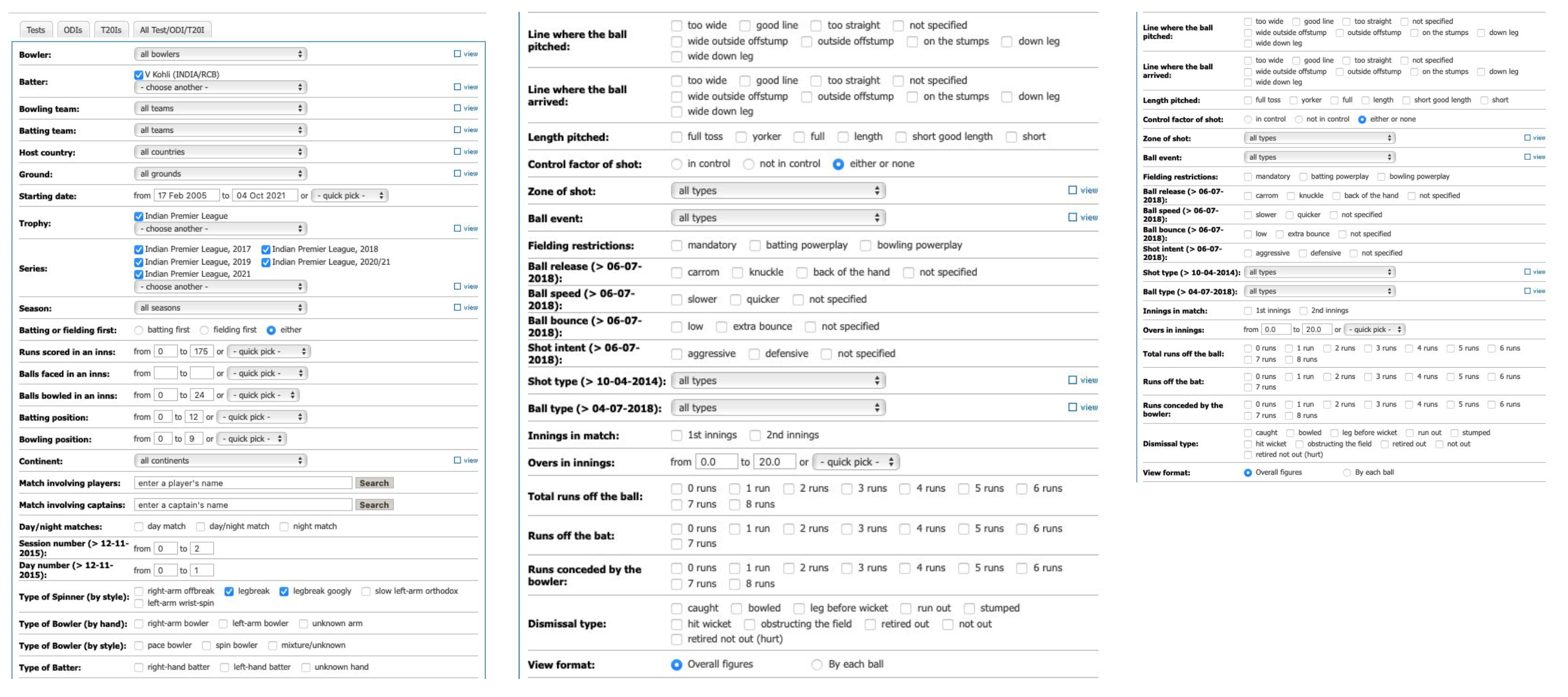

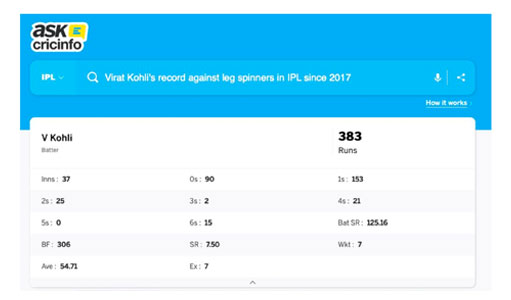

I have been using GPT-3 for over two years now. It is the underlying model behind my cricket search app for ESPN.

The original interface was cumbersome and needed an analyst who could use specialized programming languages to access the answer.

We developed AskCricInfo, which takes human input–questions or search queries–and converts the queries into a structured language like SQL that machines understand. The technology can “translate” the question into a programming language, find the answer, and quickly send it back to the user.
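The question-to-SQL flow described here can be sketched as follows. Everything in this example is hypothetical: the `translate_to_sql` function is a hand-written stand-in for the language model, and the table, columns, and rows are invented for illustration rather than taken from AskCricInfo.

```python
import sqlite3


def translate_to_sql(question):
    """Hypothetical stand-in for the language model's translation step.

    A real system would generate the SQL; this toy version recognizes a
    single question pattern.
    """
    if "most runs" in question.lower():
        return "SELECT player FROM batting ORDER BY runs DESC LIMIT 1"
    raise ValueError("question not understood by this toy translator")


def answer(question, conn):
    """Translate the question, run the query, and return the first value."""
    row = conn.execute(translate_to_sql(question)).fetchone()
    return row[0] if row else None


# In-memory database with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE batting (player TEXT, runs INTEGER)")
conn.executemany(
    "INSERT INTO batting VALUES (?, ?)",
    [("Player A", 120), ("Player B", 95)],
)
result = answer("Who scored the most runs?", conn)
```

Keeping translation separate from execution means the generated query can be inspected or validated before it touches the database.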

It is an excellent example of the power of underlying technology and what the tool can do. ChatGPT is very interesting. It is the first chatbot that holds “intelligent” and engaging conversations. There are definite use cases for making it a very effective teaching tool. It is up to the instructors to imagine a world where something like ChatGPT will always be available and teach students how to use it effectively. A lot can go wrong with ChatGPT, primarily because of a design that makes it bullshit confidently. But that is a problem with all LLMs, not just ChatGPT.

Kathy McKeown

ChatGPT and the technology it is built on, GPT-3, are game changers. With them and the other large language models that have recently emerged, models can now produce extremely fluent text. They are also able to perform a variety of tasks in a way that was not previously possible. And there are many situations in which they can be used to help us do things that we previously found tedious. But they are not able to do as much as we might initially have imagined. They have limitations that are not always immediately obvious, and researchers are taking the time to study them so that we can accurately characterize what they can and cannot do. Nonetheless, they change how we do research in the natural language field and how we build new models to carry out other tasks.

I think we need to learn how to incorporate them into our teaching because they are here to stay. Students know about them and will know how to use them. We will need to think creatively about how to teach students what these tools do well and what they do poorly, when they can be used as a tool to aid in research, and situations in which it would be dishonest. I imagine situations where we ask ChatGPT to answer an essay question, ask the student to critique the essay and identify what it does poorly, and then ask the student to write an essay that goes beyond what ChatGPT can do.

The worst problem with ChatGPT is that it is not always truthful. In fact, it may often lie or hallucinate facts. It does this very fluently, making its lies sound very plausible. This is quite a barrier to use in a real-world situation unless it is used by someone who knows what is correct, what is not, and can sift the good from the bad. Technology constantly changes, though, and it is a problem that researchers are actively working on.

Itsik Pe’er

I asked ChatGPT about AI language models, and this is the output:

AI language models like ChatGPT, its competitors and successors represent a significant advancement in the field of Natural Language Processing (NLP). These models have the ability to generate human-like text based on a massive amount of input data, making them useful for various applications such as chatbots, question-answering systems, and content generation.

However, it is important to note that despite their sophisticated capabilities, they still have limitations and may generate outputs that are inaccurate, biased, or inappropriate. It is crucial to ensure responsible development and deployment of these models to maximize their benefits while minimizing potential harm.

The above expresses my thoughts better than I otherwise could. In particular: it is already useful, though often not out of the box, and as a techno-optimist I believe even more usefulness is coming.

As a former judge in high school debate competitions, it reminds me of the most eloquent contestants, who would, if they needed to, lie through their teeth without missing a heartbeat. For instance, in response to this prompt, “What could go wrong with tools like ChatGPT?”, it writes:

There are several potential risks associated with the use of AI language models like ChatGPT:

Bias: AI models are trained on large amounts of text data, which may reflect societal biases and lead to biased outputs.

Misinformation: AI models may generate false or misleading information, which could have serious consequences if relied upon.

Privacy Concerns: AI models process large amounts of personal information, raising privacy and security concerns.

Misuse: AI models may be used for malicious purposes, such as spreading hate speech, fake news, or conducting phishing attacks.

Job Loss: AI models may automate certain tasks, leading to job loss in industries that rely on those tasks.

Therefore, it is important to carefully consider the potential risks and consequences of AI models and to develop and deploy them responsibly to minimize potential harm.

These are all valid points, but more context needs to be considered:

The field of natural language processing (NLP) has advanced by leaps and bounds. This branch of artificial intelligence focuses on the ability of computers to understand and process language as humans do. It has been in the news these past few months because of a chatbot, ChatGPT, that can provide answers and data conversationally. The technology gives us a taste of just how powerful and useful NLP can be.

Tuhin Chakrabarty wants to see how much further he can push NLP in the field of computational creativity and explore how computers can generate creative output. This is what ChatGPT had to say about computational creativity:

Computational creativity is a field that uses computational methods to simulate and enhance human-like creativity, producing valuable outputs such as art, music, stories, and scientific discoveries. It aims to understand and replicate the cognitive processes involved in human creativity, combining techniques from AI, cognitive psychology, and philosophy. Examples of computational creativity include generative art and music, game design, natural language processing, and scientific discovery. Ultimately, computational creativity seeks to leverage computers and algorithms to augment and extend human creativity, creating new possibilities for creative expression and innovation.

“Generating text beyond a few sentences was very difficult two years ago, but things look much better now. It is not perfect, but I am optimistic,” said Tuhin Chakrabarty, who first became interested in computational creativity in 2019. “One of the things that I am excited about is how much better we can align models like ChatGPT to human expectations and different cultures.”

Instead of creating text conversationally, Chakrabarty’s research focuses on how AI can be used to create metaphors and detect sarcasm with little to no training data. The fifth-year PhD student advised by Smaranda Muresan has expanded his work to generating long narratives of 2,000-word documents and visual metaphors. We recently sat down with him to learn more about his research and the creative possibilities of NLP.

I did not have much research experience as an undergrad. I got accepted to the CS master’s program and I was fortunate enough to take a class offered by my advisor Smaranda Muresan, which still happens to be one of my all-time favorite courses at Columbia. Computational Models of Social Meaning was a graduate seminar course about impactful papers in NLP. Reading all the papers in that class made me think about what I want to do with NLP and how so many interesting research questions can be answered computationally by studying language. Alongside this, I was also working with my advisor and my friend Chris Hidey on extracting arguments from social media. That experience was really precious. The enthusiasm everyone shared in trying to solve the problem at hand made me sure of my decision to pursue research.

Around 2019, Nanyun Peng and He He, two very important researchers in the field of computational creativity, wrote a paper on generating puns. I happened to attend NAACL 2019 in Minneapolis, where the paper was presented. I thought the paper was beautiful in every possible way, and it quantified the surprisal theory of humor algorithmically. This made me really fascinated by how we can use inductive biases to help machines generate creative output. For selfish reasons, I reached out to Nanyun Peng and told her that I wanted to work with her. She was very kind and agreed to mentor me. My PhD advisor Smaranda Muresan is one of the experts in the field of Figurative Language, which deals with creativity. So, of course, that influenced my decision to work in computational creativity too.

Computational creativity is a multidisciplinary endeavor located at the intersection of artificial intelligence, cognitive psychology, philosophy, and the arts. The goal of computational creativity is to model, simulate or replicate creativity using a computer to achieve one of several ends:

State-of-the-art models are often found to be inadequate for creative tasks. The principal reason for this is that in addition to composing grammatical and fluent sentences to articulate given content, these tasks usually require extensive world and common sense knowledge.

It should also be noted that current approaches to text generation require lots of training data for supervision. However, most existing corpora for creative forms of text are limited in size. Even if a large corpus existed, learning the distribution of existing data and sampling from it is unlikely to lead to truly novel, creative output.

So we have to rely on unsupervised or weakly supervised techniques to train an end-to-end model to interpret or generate creative text. Of course, with the advent of Large Language Models and few-shot learning, we can now prompt a model with a few examples of creative text and it can somewhat generalize (but not as well as humans). My dissertation deals with a lot of this.

Over the past several years, a key focus for NYTimes Research and Development has been understanding how advances in machine learning can extend the capabilities of journalists and unlock reader experiences that aren’t possible today. Questions and answers are central to how humans learn. Times journalism frequently uses FAQ and Q&A-style articles to help readers understand complex topics like the Covid-19 vaccines. To enhance this style of journalism, we experimented with large language models to match questions to answers, even if the reader asks their question in a novel way.

Last year we launched a new research effort to explore generating open-ended questions for news articles. Our hypothesis is that understanding the questions our news articles are implicitly answering may be helpful in the reporting process and may ultimately enable us to create FAQ and Q&A-style articles more efficiently.

You can find more information here: https://rd.nytimes.com/projects/generating-open-ended-questions-from-news-articles

This was fundamentally different from what I have been doing because I had to work towards upholding journalism values such as accuracy and verifiability. In creativity, your model can generate something that does not require attribution. But, when working on a project that deals with news and journalism, the focus is on factuality.

Recent work on question generation has primarily focused on factoid questions such as who, what, where, and when about basic facts. Generating open-ended why, how, what, etc., questions that require long-form answers has proven more difficult. To facilitate the generation of open-ended questions, we propose CONSISTENT, a new end-to-end system for generating open-ended questions that are answerable from and faithful to the input text. Using news articles as a trustworthy foundation for experimentation, we demonstrate our model’s strength over several baselines using both automatic and human-based evaluations. We contribute an evaluation dataset of expert-generated open-ended questions and discuss potential downstream applications for news media organizations.

Much of my recent and upcoming work is on human-AI collaboration for creativity. I recently worked on developing methods and evaluation frameworks for two creative tasks–poetry generation and visual metaphor generation–by leveraging collaboration between expert humans and state-of-the-art generative models. I further highlighted how collaboration improves the final output over either standalone models or only humans.

I have long focused on developing and evaluating machine learning models aimed at creativity in an isolated setting. This somewhat limits their capacity to behave in an interactive setting with real humans. In a creative setting, it is crucial for models to understand human needs and provide assistance to augment human capabilities and improve performance based on human edits or feedback over time. So that is my focus now.

This is a difficult question. Pursuing a PhD can be a really fun experience, but at the same time, it can be daunting. There is a lot of uncertainty around research questions and whether something will work or not. I wish I had been a little easier on myself and not taken everything personally. Like, if an idea didn’t work, instead of spending months trying to make it work, it is okay to give up and move in a different direction.

One of the things I learned during my PhD is to focus on what you care about. There are hundreds of researchers who might work in more crowded areas, while your work can feel niche. This is not a problem. When I started working on NLP and creativity, the field still felt very young, but over the past three to four years, it has grown tremendously.

Your advisor will be one of the most important people in your PhD. It is essential to have good communication and working chemistry with them. One of the reasons my PhD felt like so much fun is that my advisor and I cared about the same problems.

Form a community and foster friendships with your lab mates, talk about research, or email a colleague whose work moved you and get a coffee with them at a conference. Also, look for opportunities to work with people in your lab or your community. It helps us learn so much.

Researchers from the department presented machine learning and artificial intelligence research at the thirty-sixth Conference on Neural Information Processing Systems (NeurIPS 2022).

Finding and Listing Front-door Adjustment Sets

Hyunchai Jeong Purdue University, Jin Tian Iowa State University, Elias Bareinboim Columbia University

Abstract:

Identifying the effects of new interventions from data is a significant challenge found across a wide range of the empirical sciences. A well-known strategy for identifying such effects is Pearl’s front-door (FD) criterion. The definition of the FD criterion is declarative, only allowing one to decide whether a specific set satisfies the criterion. In this paper, we present algorithms for finding and enumerating possible sets satisfying the FD criterion in a given causal diagram. These results are useful in facilitating the practical applications of the FD criterion for causal effects estimation and helping scientists to select estimands with desired properties, e.g., based on cost, feasibility of measurement, or statistical power.
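To make the front-door criterion concrete, here is a minimal sketch of the classical front-door adjustment formula, P(y | do(x)) = Σ_z P(z | x) Σ_x′ P(y | x′, z) P(x′), applied to a toy model. It is not the paper's algorithm for finding or listing adjustment sets; it only shows what one can compute once a valid set Z is known. The graph (U → X, U → Y, X → Z, Z → Y, with U unobserved), all probability values, and all function names are assumptions made up for illustration.

```python
from itertools import product

# Hypothetical parameters for a toy model with binary variables:
# U -> X, U -> Y (U is an unobserved confounder), X -> Z, Z -> Y.
P_U1 = 0.4                                   # P(U=1)
P_X1_GIVEN_U = {0: 0.2, 1: 0.7}              # P(X=1 | U=u)
P_Z1_GIVEN_X = {0: 0.1, 1: 0.8}              # P(Z=1 | X=x)
P_Y1_GIVEN_ZU = {(0, 0): 0.1, (0, 1): 0.5,
                 (1, 0): 0.6, (1, 1): 0.9}   # P(Y=1 | Z=z, U=u)


def bern(p_one, value):
    """Probability that a Bernoulli(p_one) variable equals `value`."""
    return p_one if value == 1 else 1.0 - p_one


def observational_joint():
    """Return P(x, z, y) with the confounder U summed out."""
    joint = {}
    for u, x, z, y in product((0, 1), repeat=4):
        p = (bern(P_U1, u) * bern(P_X1_GIVEN_U[u], x)
             * bern(P_Z1_GIVEN_X[x], z) * bern(P_Y1_GIVEN_ZU[(z, u)], y))
        joint[(x, z, y)] = joint.get((x, z, y), 0.0) + p
    return joint


def prob(joint, x=None, z=None, y=None):
    """Marginal probability of the given assignments (None = summed out)."""
    return sum(p for (xi, zi, yi), p in joint.items()
               if x in (None, xi) and z in (None, zi) and y in (None, yi))


def front_door(joint, x, y):
    """Front-door estimate of P(y | do(x)) from observational data only."""
    total = 0.0
    for z in (0, 1):
        p_z_given_x = prob(joint, x=x, z=z) / prob(joint, x=x)
        inner = sum(prob(joint, x=xp, z=z, y=y) / prob(joint, x=xp, z=z)
                    * prob(joint, x=xp) for xp in (0, 1))
        total += p_z_given_x * inner
    return total


def true_effect(x, y):
    """Ground truth P(y | do(x)), computed with access to the hidden U."""
    return sum(bern(P_Z1_GIVEN_X[x], z) * bern(P_U1, u)
               * bern(P_Y1_GIVEN_ZU[(z, u)], y)
               for z, u in product((0, 1), repeat=2))


joint = observational_joint()
# The two values agree up to floating-point error.
print(front_door(joint, x=1, y=1), true_effect(x=1, y=1))
```

In this toy model the naive conditional P(Y=1 | X=1) differs from the interventional quantity because of the hidden confounder, while the front-door estimate recovers it without ever observing U.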

Causal Identification under Markov equivalence: Calculus, Algorithm, and Completeness

Amin Jaber Purdue University, Adele Ribeiro Columbia University, Jiji Zhang Hong Kong Baptist University, Elias Bareinboim Columbia University

Abstract:

One common task in many data science applications is to answer questions about the effect of new interventions, like: ‘what would happen to Y if we make X equal to x while observing covariates Z=z?’. Formally, this is known as conditional effect identification, where the goal is to determine whether a post-interventional distribution is computable from the combination of an observational distribution and assumptions about the underlying domain represented by a causal diagram. A plethora of methods was developed for solving this problem, including the celebrated do-calculus [Pearl, 1995]. In practice, these results are not always applicable since they require a fully specified causal diagram as input, which is usually not available. In this paper, we assume as the input of the task a less informative structure known as a partial ancestral graph (PAG), which represents a Markov equivalence class of causal diagrams, learnable from observational data. We make the following contributions under this relaxed setting. First, we introduce a new causal calculus, which subsumes the current state-of-the-art, PAG-calculus. Second, we develop an algorithm for conditional effect identification given a PAG and prove it to be both sound and complete. In other words, failure of the algorithm to identify a certain effect implies that this effect is not identifiable by any method. Third, we prove the proposed calculus to be complete for the same task.

Online Reinforcement Learning for Mixed Policy Scopes

Junzhe Zhang Columbia University, Elias Bareinboim Columbia University

Abstract:

Combination therapy refers to the use of multiple treatments (such as surgery, medication, and behavioral therapy) to cure a single disease, and has become a cornerstone for treating various conditions including cancer, HIV, and depression. All possible combinations of treatments lead to a collection of treatment regimens (i.e., policies) with mixed scopes, or what physicians could observe and which actions they should take depending on the context. In this paper, we investigate the online reinforcement learning setting for optimizing the policy space with mixed scopes. In particular, we develop novel online algorithms that achieve sublinear regret compared to an optimal agent deployed in the environment. The regret bound has a dependency on the maximal cardinality of the induced state-action space associated with mixed scopes. We further introduce a canonical representation for an arbitrary subset of interventional distributions given a causal diagram, which leads to a non-trivial, minimal representation of the model parameters.
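For readers unfamiliar with the term, "sublinear regret" can be stated with the generic textbook definition below. The notation is an assumption for illustration and may differ from the paper's: \(\mu^{*}\) is the expected reward of the optimal policy, \(\pi_t\) is the policy the learner plays in round \(t\), and \(\mu_{\pi_t}\) is its expected reward.

```latex
\[
R(T) \;=\; \sum_{t=1}^{T} \bigl( \mu^{*} - \mu_{\pi_t} \bigr),
\qquad
\text{sublinear regret:}\quad \lim_{T \to \infty} \frac{R(T)}{T} = 0 .
\]
```

Under this definition, the average per-round gap to the optimal agent vanishes as the number of rounds grows, so the learner's long-run performance approaches that of the optimal policy.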

Masked Prediction: A Parameter Identifiability View