Jim Kurose Explains the Internet in 5 Levels of Difficulty

Jim Kurose (PhD ’84) chats with PhD student Casper Lant about networks and IoTS for Wired magazine.

Jim Kurose (PhD ’84) chats with PhD student Casper Lant about networks and IoTS for Wired magazine.

Computer scientist honored for his pioneering research in imaging.

Computer Scientist honored for his pioneering research in imaging.

Julia Zhao, SEAS’23, has been named a 2023 Rhodes Scholar for China. China awards four Rhodes Scholars each year, and this is the second time that Columbia has had a Rhodes China Scholar named.

Papers from CS researchers were accepted to the Empirical Methods in Natural Language Processing (EMNLP) 2022. EMNLP is a leading conference in artificial intelligence and natural language processing. Aside from presenting their research papers, several researchers also organized workshops to gather conference attendees for discussions about current issues confronting NLP and computer science.

Massively Multilingual Natural Language Understanding

Jack FitzGerald Amazon Alexa, Kay Rottmann Amazon Alexa, Julia Hirschberg Columbia University, Mohit Bansal University of North Carolina, Anna Rumshisky University of Massachusetts Lowell, and Charith Peris Amazon Alexa

3rd Workshop on Figurative Language Processing

Debanjan Ghosh Educational Testing Service, Beata Beigman Klebanov Educational Testing Service, Smaranda Muresan Columbia University, Anna Feldman Montclair State University, Soujanya Poria Singapore University of Technology and Design, and Tuhin Chakrabarty Columbia University

Sharing Stories and Lessons Learned

Diyi Yang Stanford University, Pradeep Dasigi Allen Institute for AI, Sherry Tongshuang Wu Carnegie Mellon University, Tuhin Chakrabarty Columbia University, Yuval Pinter Ben-Gurion University of the Negev, and Mike Zheng Shou National University of Singapore

Help me write a Poem – Instruction Tuning as a Vehicle for Collaborative Poetry Writing

Tuhin Chakrabarty Columbia University, Vishakh Padmakumar New York University, He He New York University

Abstract

Recent work in training large language models (LLMs) to follow natural language instructions has opened up exciting opportunities for natural language interface design. Building on the prior success of large language models in the realm of computer assisted creativity, in this work, we present CoPoet, a collaborative poetry writing system, with the goal of to study if LLM’s actually improve the quality of the generated content. In contrast to auto-completing a user’s text, CoPoet is controlled by user instructions that specify the attributes of the desired text, such as Write a sentence about ‘love’ or Write a sentence ending in ‘fly’. The core component of our system is a language model fine-tuned on a diverse collection of instructions for poetry writing. Our model is not only competitive to publicly available LLMs trained on instructions (InstructGPT), but also capable of satisfying unseen compositional instructions. A study with 15 qualified crowdworkers shows that users successfully write poems with CoPoet on diverse topics ranging from Monarchy to Climate change, which are preferred by third-party evaluators over poems written without the system.

FLUTE: Figurative Language Understanding through Textual Explanations

Tuhin Chakrabarty Columbia University, Arkadiy Saakyan Columbia University, Debanjan Ghosh Educational Testing Service, and Smaranda Muresan Columbia University

Abstract

Figurative language understanding has been recently framed as a recognizing textual entailment (RTE) task (a.k.a. natural language inference (NLI)). However, similar to classical RTE/NLI datasets they suffer from spurious correlations and annotation artifacts. To tackle this problem, work on NLI has built explanation-based datasets such as eSNLI, allowing us to probe whether language models are right for the right reasons. Yet no such data exists for figurative language, making it harder to assess genuine understanding of such expressions. To address this issue, we release FLUTE, a dataset of 9,000 figurative NLI instances with explanations, spanning four categories: Sarcasm, Simile, Metaphor, and Idioms. We collect the data through a Human-AI collaboration framework based on GPT-3, crowd workers, and expert annotators. We show how utilizing GPT-3 in conjunction with human annotators (novices and experts) can aid in scaling up the creation of datasets even for such complex linguistic phenomena as figurative language. The baseline performance of the T5 model fine-tuned on FLUTE shows that our dataset can bring us a step closer to developing models that understand figurative language through textual explanations.

Fine-tuned Language Models are Continual Learners

Thomas Scialom Columbia University, Tuhin Chakrabarty Columbia University, and Smaranda Muresan Columbia University

Abstract

Recent work on large language models relies on the intuition that most natural language processing tasks can be described via natural language instructions and that models trained on these instructions show strong zero-shot performance on several standard datasets. However, these models even though impressive still perform poorly on a wide range of tasks outside of their respective training and evaluation sets.To address this limitation, we argue that a model should be able to keep extending its knowledge and abilities, without forgetting previous skills. In spite of the limited success of Continual Learning, we show that Fine-tuned Language Models can be continual learners.We empirically investigate the reason for this success and conclude that Continual Learning emerges from self-supervision pre-training. Our resulting model Continual-T0 (CT0) is able to learn 8 new diverse language generation tasks, while still maintaining good performance on previous tasks, spanning in total of 70 datasets. Finally, we show that CT0 is able to combine instructions in ways it was never trained for, demonstrating some level of instruction compositionality.

Multitask Instruction-based Prompting for Fallacy Recognition

Tariq Alhindi Columbia University, Tuhin Chakrabarty Columbia University, Elena Musi University of Liverpool, and Smaranda Muresan Columbia University

Abstract

Fallacies are used as seemingly valid arguments to support a position and persuade the audience about its validity. Recognizing fallacies is an intrinsically difficult task both for humans and machines. Moreover, a big challenge for computational models lies in the fact that fallacies are formulated differently across the datasets with differences in the input format (e.g., question-answer pair, sentence with fallacy fragment), genre (e.g., social media, dialogue, news), as well as types and number of fallacies (from 5 to 18 types per dataset). To move towards solving the fallacy recognition task, we approach these differences across datasets as multiple tasks and show how instruction-based prompting in a multitask setup based on the T5 model improves the results against approaches built for a specific dataset such as T5, BERT or GPT-3. We show the ability of this multitask prompting approach to recognize 28 unique fallacies across domains and genres and study the effect of model size and prompt choice by analyzing the per-class (i.e., fallacy type) results. Finally, we analyze the effect of annotation quality on model performance, and the feasibility of complementing this approach with external knowledge.

CONSISTENT: Open-Ended Question Generation From News Articles

Tuhin Chakrabarty Columbia University, Justin Lewis The New York Times R&D, and Smaranda Muresan Columbia University

Abstract

Recent work on question generation has largely focused on factoid questions such as who, what, where, when about basic facts. Generating open-ended why, how, what, etc. questions that require long-form answers have proven more difficult. To facilitate the generation of open-ended questions, we propose CONSISTENT, a new end-to-end system for generating open-ended questions that are answerable from and faithful to the input text. Using news articles as a trustworthy foundation for experimentation, we demonstrate our model’s strength over several baselines using both automatic and human=based evaluations. We contribute an evaluation dataset of expert-generated open-ended questions.We discuss potential downstream applications for news media organizations.

SafeText: A Benchmark for Exploring Physical Safety in Language Models

Sharon Levy University of California, Santa Barbara, Emily Allaway Columbia University, Melanie Subbiah Columbia University, Lydia Chilton Columbia University, Desmond Patton Columbia University, Kathleen McKeown Columbia University, and William Yang Wang University of California, Santa Barbara

Abstract

Understanding what constitutes safe text is an important issue in natural language processing and can often prevent the deployment of models deemed harmful and unsafe. One such type of safety that has been scarcely studied is commonsense physical safety, i.e. text that is not explicitly violent and requires additional commonsense knowledge to comprehend that it leads to physical harm. We create the first benchmark dataset, SafeText, comprising real-life scenarios with paired safe and physically unsafe pieces of advice. We utilize SafeText to empirically study commonsense physical safety across various models designed for text generation and commonsense reasoning tasks. We find that state-of-the-art large language models are susceptible to the generation of unsafe text and have difficulty rejecting unsafe advice. As a result, we argue for further studies of safety and the assessment of commonsense physical safety in models before release.

Learning to Revise References for Faithful Summarization

Griffin Adams Columbia University, Han-Chin Shing Amazon AWS AI, Qing Sun Amazon AWS AI, Christopher Winestock Amazon AWS AI, Kathleen McKeown Columbia University, and Noémie Elhadad Columbia University

Abstract

In real-world scenarios with naturally occurring datasets, reference summaries are noisy and may contain information that cannot be inferred from the source text. On large news corpora, removing low quality samples has been shown to reduce model hallucinations. Yet, for smaller, and/or noisier corpora, filtering is detrimental to performance. To improve reference quality while retaining all data, we propose a new approach: to selectively re-write unsupported reference sentences to better reflect source data. We automatically generate a synthetic dataset of positive and negative revisions by corrupting supported sentences and learn to revise reference sentences with contrastive learning. The intensity of revisions is treated as a controllable attribute so that, at inference, diverse candidates can be over-generated-then-rescored to balance faithfulness and abstraction. To test our methods, we extract noisy references from publicly available MIMIC-III discharge summaries for the task of hospital-course summarization, and vary the data on which models are trained. According to metrics and human evaluation, models trained on revised clinical references are much more faithful, informative, and fluent than models trained on original or filtered data.

Mitigating Covertly Unsafe Text within Natural Language Systems

Alex Mei University of California, Santa Barbara, Anisha Kabir University of California, Santa Barbara, Sharon Levy University of California, Santa Barbara, Melanie Subbiah Columbia University, Emily Allaway Columbia University, John N. Judge University of California, Santa Barbara, Desmond Patton University of Pennsylvania, Bruce Bimber University of California, Santa Barbara, Kathleen McKeown Columbia University, and William Yang Wang University of California, Santa Barbara

Abstract

An increasingly prevalent problem for intelligent technologies is text safety, as uncontrolled systems may generate recommendations to their users that lead to injury or life-threatening consequences. However, the degree of explicitness of a generated statement that can cause physical harm varies. In this paper, we distinguish types of text that can lead to physical harm and establish one particularly underexplored category: covertly unsafe text. Then, we further break down this category with respect to the system’s information and discuss solutions to mitigate the generation of text in each of these subcategories. Ultimately, our work defines the problem of covertly unsafe language that causes physical harm and argues that this subtle yet dangerous issue needs to be prioritized by stakeholders and regulators. We highlight mitigation strategies to inspire future researchers to tackle this challenging problem and help improve safety within smart systems.

Affective Idiosyncratic Responses to Music

Sky CH-Wang Columbia University, Evan Li Columbia University, Oliver Li Columbia University, Smaranda Muresan Columbia University, and Zhou Yu Columbia University

Abstract

Affective responses to music are highly personal. Despite consensus that idiosyncratic factors play a key role in regulating how listeners emotionally respond to music, precisely measuring the marginal effects of these variables has proved challenging. To address this gap, we develop computational methods to measure affective responses to music from over 403M listener comments on a Chinese social music platform. Building on studies from music psychology in systematic and quasi-causal analyses, we test for musical, lyrical, contextual, demographic, and mental health effects that drive listener affective responses. Finally, motivated by the social phenomenon known as 网抑云 (wǎng-yì-yún), we identify influencing factors of platform user self-disclosures, the social support they receive, and notable differences in discloser user activity.

Robots-Dont-Cry: Understanding Falsely Anthropomorphic Utterances in Dialog Systems

David Gros University of California, Davis, Yu Li Columbia University, and Zhou Yu Columbia University

Abstract

Dialog systems are often designed or trained to output human-like responses. However, some responses may be impossible for a machine to truthfully say (e.g. “that movie made me cry”). Highly anthropomorphic responses might make users uncomfortable or implicitly deceive them into thinking they are interacting with a human. We collect human ratings on the feasibility of approximately 900 two-turn dialogs sampled from 9 diverse data sources. Ratings are for two hypothetical machine embodiments: a futuristic humanoid robot and a digital assistant. We find that for some data-sources commonly used to train dialog systems, 20-30% of utterances are not viewed as possible for a machine. Rating is marginally affected by machine embodiment. We explore qualitative and quantitative reasons for these ratings. Finally, we build classifiers and explore how modeling configuration might affect output permissibly, and discuss implications for building less falsely anthropomorphic dialog systems.

Just Fine-tune Twice: Selective Differential Privacy for Large Language Models

Weiyan Shi Columbia University, Ryan Patrick Shea Columbia University, Si Chen Columbia University, Chiyuan Zhang Google Research, Ruoxi Jia Virginia Tech, and Zhou Yu Columbia University

Abstract

Protecting large language models from privacy leakage is becoming increasingly crucial with their wide adoption in real-world products. Yet applying *differential privacy* (DP), a canonical notion with provable privacy guarantees for machine learning models, to those models remains challenging due to the trade-off between model utility and privacy loss. Utilizing the fact that sensitive information in language data tends to be sparse, Shi et al. (2021) formalized a DP notion extension called *Selective Differential Privacy* (SDP) to protect only the sensitive tokens defined by a policy function. However, their algorithm only works for RNN-based models. In this paper, we develop a novel framework, *Just Fine-tune Twice* (JFT), that achieves SDP for state-of-the-art large transformer-based models. Our method is easy to implement: it first fine-tunes the model with *redacted* in-domain data, and then fine-tunes it again with the *original* in-domain data using a private training mechanism. Furthermore, we study the scenario of imperfect implementation of policy functions that misses sensitive tokens and develop systematic methods to handle it. Experiments show that our method achieves strong utility compared to previous baselines. We also analyze the SDP privacy guarantee empirically with the canary insertion attack.

Focus! Relevant and Sufficient Context Selection for News Image Captioning

Mingyang Zhou University of California, Davis, Grace Luo University of California, Berkeley, Anna Rohrbach University of California, Berkeley, and Zhou Yu Columbia University

Abstract

News Image Captioning requires describing an image by leveraging additional context from a news article. Previous works only coarsely leverage the article to extract the necessary context, which makes it challenging for models to identify relevant events and named entities. In our paper, we first demonstrate that by combining more fine-grained context that captures the key named entities (obtained via an oracle) and the global context that summarizes the news, we can dramatically improve the model’s ability to generate accurate news captions. This begs the question, how to automatically extract such key entities from an image? We propose to use the pre-trained vision and language retrieval model CLIP to localize the visually grounded entities in the news article and then capture the non-visual entities via an open relation extraction model. Our experiments demonstrate that by simply selecting a better context from the article, we can significantly improve the performance of existing models and achieve new state-of-the-art performance on multiple benchmarks.

National Academy of Inventors Selects Columbia Engineering Researchers for their “highly prolific spirit of innovation.”

The National Academy of Inventors fellows are recognized for their work that benefits society.

Axl Chen is the founder and CEO of Surplex, a new full-body tracking technology company aiming to change the gaming industry and professional sports.

Researchers from the department presented machine learning and artificial intelligence research at the thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2022).

Finding and Listing Front-door Adjustment Sets

Hyunchai Jeong Purdue University, Jin Tian Iowa State University, Elias Bareinboim Columbia University

Abstract:

Identifying the effects of new interventions from data is a significant challenge found across a wide range of the empirical sciences. A well-known strategy for identifying such effects is Pearl’s front-door (FD) criterion. The definition of the FD criterion is declarative, only allowing one to decide whether a specific set satisfies the criterion. In this paper, we present algorithms for finding and enumerating possible sets satisfying the FD criterion in a given causal diagram. These results are useful in facilitating the practical applications of the FD criterion for causal effects estimation and helping scientists to select estimands with desired properties, e.g., based on cost, feasibility of measurement, or statistical power.

Causal Identification under Markov equivalence: Calculus, Algorithm, and Completeness

Amin Jaber Purdue University, Adele Ribeiro Columbia University, Jiji Zhang Hong Kong Baptist University, Elias Bareinboim Columbia University

Abstract:

One common task in many data sciences applications is to answer questions about the effect of new interventions, like: `what would happen to Y if we make X equal to x while observing covariates Z=z?’. Formally, this is known as conditional effect identification, where the goal is to determine whether a post-interventional distribution is computable from the combination of an observational distribution and assumptions about the underlying domain represented by a causal diagram. A plethora of methods was developed for solving this problem, including the celebrated do-calculus [Pearl, 1995]. In practice, these results are not always applicable since they require a fully specified causal diagram as input, which is usually not available. In this paper, we assume as the input of the task a less informative structure known as a partial ancestral graph (PAG), which represents a Markov equivalence class of causal diagrams, learnable from observational data. We make the following contributions under this relaxed setting. First, we introduce a new causal calculus, which subsumes the current state-of-the-art, PAG-calculus. Second, we develop an algorithm for conditional effect identification given a PAG and prove it to be both sound and complete. In words, failure of the algorithm to identify a certain effect implies that this effect is not identifiable by any method. Third, we prove the proposed calculus to be complete for the same task.

Online Reinforcement Learning for Mixed Policy Scopes

Junzhe Zhang Columbia University, Elias Bareinboim Columbia University

Abstract:

Combination therapy refers to the use of multiple treatments — such as surgery, medication, and behavioral therapy – to cure a single disease, and has become a cornerstone for treating various conditions including cancer, HIV, and depression. All possible combinations of treatments lead to a collection of treatment regimens (i.e., policies) with mixed scopes, or what physicians could observe and which actions they should take depending on the context. In this paper, we investigate the online reinforcement learning setting for optimizing the policy space with mixed scopes. In particular, we develop novel online algorithms that achieve sublinear regret compared to an optimal agent deployed in the environment. The regret bound has a dependency on the maximal cardinality of the induced state-action space associated with mixed scopes. We further introduce a canonical representation for an arbitrary subset of interventional distributions given a causal diagram, which leads to a non-trivial, minimal representation of the model parameters.

Masked Prediction: A Parameter Identifiability View

Bingbin Liu Carnegie Mellon University, Daniel Hsu Columbia University, Pradeep Ravikumar Carnegie Mellon University, Andrej Risteski Carnegie Mellon University

Abstract:

The vast majority of work in self-supervised learning have focused on assessing recovered features by a chosen set of downstream tasks. While there are several commonly used benchmark datasets, this lens of feature learning requires assumptions on the downstream tasks which are not inherent to the data distribution itself. In this paper, we present an alternative lens, one of parameter identifiability: assuming data comes from a parametric probabilistic model, we train a self-supervised learning predictor with a suitable parametric form, and ask whether the parameters of the optimal predictor can be used to extract the parameters of the ground truth generative model.Specifically, we focus on latent-variable models capturing sequential structures, namely Hidden Markov Models with both discrete and conditionally Gaussian observations. We focus on masked prediction as the self-supervised learning task and study the optimal masked predictor. We show that parameter identifiability is governed by the task difficulty, which is determined by the choice of data model and the amount of tokens to predict. Technique-wise, we uncover close connections with the uniqueness of tensor rank decompositions, a widely used tool in studying identifiability through the lens of the method of moments.

Learning single-index models with shallow neural networks

Alberto Bietti Meta AI/New York University, Joan Bruna New York University, Clayton Sanford Columbia University, Min Jae Song New York University

Abstract:

Single-index models are a class of functions given by an unknown univariate link” function applied to an unknown one-dimensional projection of the input. These models are particularly relevant in high dimension, when the data might present low-dimensional structure that learning algorithms should adapt to. While several statistical aspects of this model, such as the sample complexity of recovering the relevant (one-dimensional) subspace, are well-understood, they rely on tailored algorithms that exploit the specific structure of the target function. In this work, we introduce a natural class of shallow neural networks and study its ability to learn single-index models via gradient flow. More precisely, we consider shallow networks in which biases of the neurons are frozen at random initialization. We show that the corresponding optimization landscape is benign, which in turn leads to generalization guarantees that match the near-optimal sample complexity of dedicated semi-parametric methods.

On Scrambling Phenomena for Randomly Initialized Recurrent Networks

Evangelos Chatziafratis University of California Santa Cruz, Ioannis Panageas University of California Irvine, Clayton Sanford Columbia University, Stelios Stavroulakis University of California Irvine

Abstract:

Recurrent Neural Networks (RNNs) frequently exhibit complicated dynamics, and their sensitivity to the initialization process often renders them notoriously hard to train. Recent works have shed light on such phenomena analyzing when exploding or vanishing gradients may occur, either of which is detrimental for training dynamics. In this paper, we point to a formal connection between RNNs and chaotic dynamical systems and prove a qualitatively stronger phenomenon about RNNs than what exploding gradients seem to suggest. Our main result proves that under standard initialization (e.g., He, Xavier etc.), RNNs will exhibit \textit{Li-Yorke chaos} with \textit{constant} probability \textit{independent} of the network’s width. This explains the experimentally observed phenomenon of \textit{scrambling}, under which trajectories of nearby points may appear to be arbitrarily close during some timesteps, yet will be far away in future timesteps. In stark contrast to their feedforward counterparts, we show that chaotic behavior in RNNs is preserved under small perturbations and that their expressive power remains exponential in the number of feedback iterations. Our technical arguments rely on viewing RNNs as random walks under non-linear activations, and studying the existence of certain types of higher-order fixed points called \textit{periodic points} in order to establish phase transitions from order to chaos.

Patching open-vocabulary models by interpolating weights

Gabriel Ilharco University of Washington, Mitchell Wortsman University of Washington, Samir Yitzhak Gadre Columbia University, Shuran Song Columbia University, Hannaneh Hajishirzi University of Washington, Simon Kornblith Google Brain, Ali Farhadi University of Washington, Ludwig Schmidt University of Washington

Abstract:

Open-vocabulary models like CLIP achieve high accuracy across many image classification tasks. However, there are still settings where their zero-shot performance is far from optimal. We study model patching, where the goal is to improve accuracy on specific tasks without degrading accuracy on tasks where performance is already adequate. Towards this goal, we introduce PAINT, a patching method that uses interpolations between the weights of a model before fine-tuning and the weights after fine-tuning on a task to be patched. On nine tasks where zero-shot CLIP performs poorly, PAINT increases accuracy by 15 to 60 percentage points while preserving accuracy on ImageNet within one percentage point of the zero-shot model. PAINT also allows a single model to be patched on multiple tasks and improves with model scale. Furthermore, we identify cases of broad transfer, where patching on one task increases accuracy on other tasks even when the tasks have disjoint classes. Finally, we investigate applications beyond common benchmarks such as counting or reducing the impact of typographic attacks on CLIP. Our findings demonstrate that it is possible to expand the set of tasks on which open-vocabulary models achieve high accuracy without re-training them from scratch.

ASPiRe: Adaptive Skill Priors for Reinforcement Learning

Mengda Xu Columbia University, Manuela Veloso JP Morgan/Carnegie Mellon University, Shuran Song Columbia University

Abstract:

We introduce ASPiRe (Adaptive Skill Prior for RL), a new approach that leverages prior experience to accelerate reinforcement learning. Unlike existing methods that learn a single skill prior from a large and diverse dataset, our framework learns a library of different distinction skill priors (i.e., behavior priors) from a collection of specialized datasets, and learns how to combine them to solve a new task. This formulation allows the algorithm to acquire a set of specialized skill priors that are more reusable for downstream tasks; however, it also brings up additional challenges of how to effectively combine these unstructured sets of skill priors to form a new prior for new tasks. Specifically, it requires the agent not only to identify which skill prior(s) to use but also how to combine them (either sequentially or concurrently) to form a new prior. To achieve this goal, ASPiRe includes Adaptive Weight Module (AWM) that learns to infer an adaptive weight assignment between different skill priors and uses them to guide policy learning for downstream tasks via weighted Kullback-Leibler divergences. Our experiments demonstrate that ASPiRe can significantly accelerate the learning of new downstream tasks in the presence of multiple priors and show improvement on competitive baselines.

Language Models with Image Descriptors are Strong Few-Shot Video-Language Learners

Zhenhailong Wang Columbia University, Manling Li Columbia University, Ruochen Xu Microsoft, Luowei Zhou Meta, Jie Lei Meta, Xudong Lin Columbia University, Shuohang Wang Microsoft, Ziyi Yang Stanford University, Chenguang Zhu Stanford University, Derek Hoiem University of Illinois, Shih-Fu Chang Columbia University, Mohit Bansal University of North Carolina Chapel Hill, Heng Ji University of Illinois

Abstract:

The goal of this work is to build flexible video-language models that can generalize to various video-to-text tasks from few examples. Existing few-shot video-language learners focus exclusively on the encoder, resulting in the absence of a video-to-text decoder to handle generative tasks. Video captioners have been pretrained on large-scale video-language datasets, but they rely heavily on finetuning and lack the ability to generate text for unseen tasks in a few-shot setting. We propose VidIL, a few-shot Video-language Learner via Image and Language models, which demonstrates strong performance on few-shot video-to-text tasks without the necessity of pretraining or finetuning on any video datasets. We use image-language models to translate the video content into frame captions, object, attribute, and event phrases, and compose them into a temporal-aware template. We then instruct a language model, with a prompt containing a few in-context examples, to generate a target output from the composed content. The flexibility of prompting allows the model to capture any form of text input, such as automatic speech recognition (ASR) transcripts. Our experiments demonstrate the power of language models in understanding videos on a wide variety of video-language tasks, including video captioning, video question answering, video caption retrieval, and video future event prediction. Especially, on video future event prediction, our few-shot model significantly outperforms state-of-the-art supervised models trained on large-scale video datasets.Code and processed data are publicly available for research purposes at https://github.com/MikeWangWZHL/VidIL.

Implications of Model Indeterminacy for Explanations of Automated Decisions

Marc-Etienne Brunet University of Toronto, Ashton Anderson University of Toronto, Richard Zemel Columbia University

Abstract:

There has been a significant research effort focused on explaining predictive models, for example through post-hoc explainability and recourse methods. Most of the proposed techniques operate upon a single, fixed, predictive model. However, it is well-known that given a dataset and a predictive task, there may be a multiplicity of models that solve the problem (nearly) equally well. In this work, we investigate the implications of this kind of model indeterminacy on the post-hoc explanations of predictive models. We show how it can lead to explanatory multiplicity, and we explore the underlying drivers. We show how predictive multiplicity, and the related concept of epistemic uncertainty, are not reliable indicators of explanatory multiplicity. We further illustrate how a set of models showing very similar aggregate performance on a test dataset may show large variations in their local explanations, i.e., for a specific input. We explore these effects for Shapley value based explanations on three risk assessment datasets. Our results indicate that model indeterminacy may have a substantial impact on explanations in practice, leading to inconsistent and even contradicting explanations.

Reconsidering Deep Ensembles

Taiga Abe Columbia University, Estefany Kelly Buchanan Columbia University, Geoff Pleiss Columbia University, Richard Zemel Columbia University, John Cunningham Columbia University

Abstract:

Ensembling neural networks is an effective way to increase accuracy, and can often match the performance of individual larger models. This observation poses a natural question: given the choice between a deep ensemble and a single neural network with similar accuracy, is one preferable over the other? Recent work suggests that deep ensembles may offer distinct benefits beyond predictive power: namely, uncertainty quantification and robustness to dataset shift. In this work, we demonstrate limitations to these purported benefits, and show that a single (but larger) neural network can replicate these qualities. First, we show that ensemble diversity, by any metric, does not meaningfully contribute to an ensemble’s ability to detect out-of-distribution (OOD) data, but is instead highly correlated with the relative improvement of a single larger model. Second, we show that the OOD performance afforded by ensembles is strongly determined by their in-distribution (InD) performance, and – in this sense – is not indicative of any “effective robustness.” While deep ensembles are a practical way to achieve improvements to predictive power, uncertainty quantification, and robustness, our results show that these improvements can be replicated by a (larger) single model

Professor Steven Bellovin remembers his mentor Frederick P. Brooks, Jr., in a blog post about the computer scientist and author of The Mythical Man-Month.

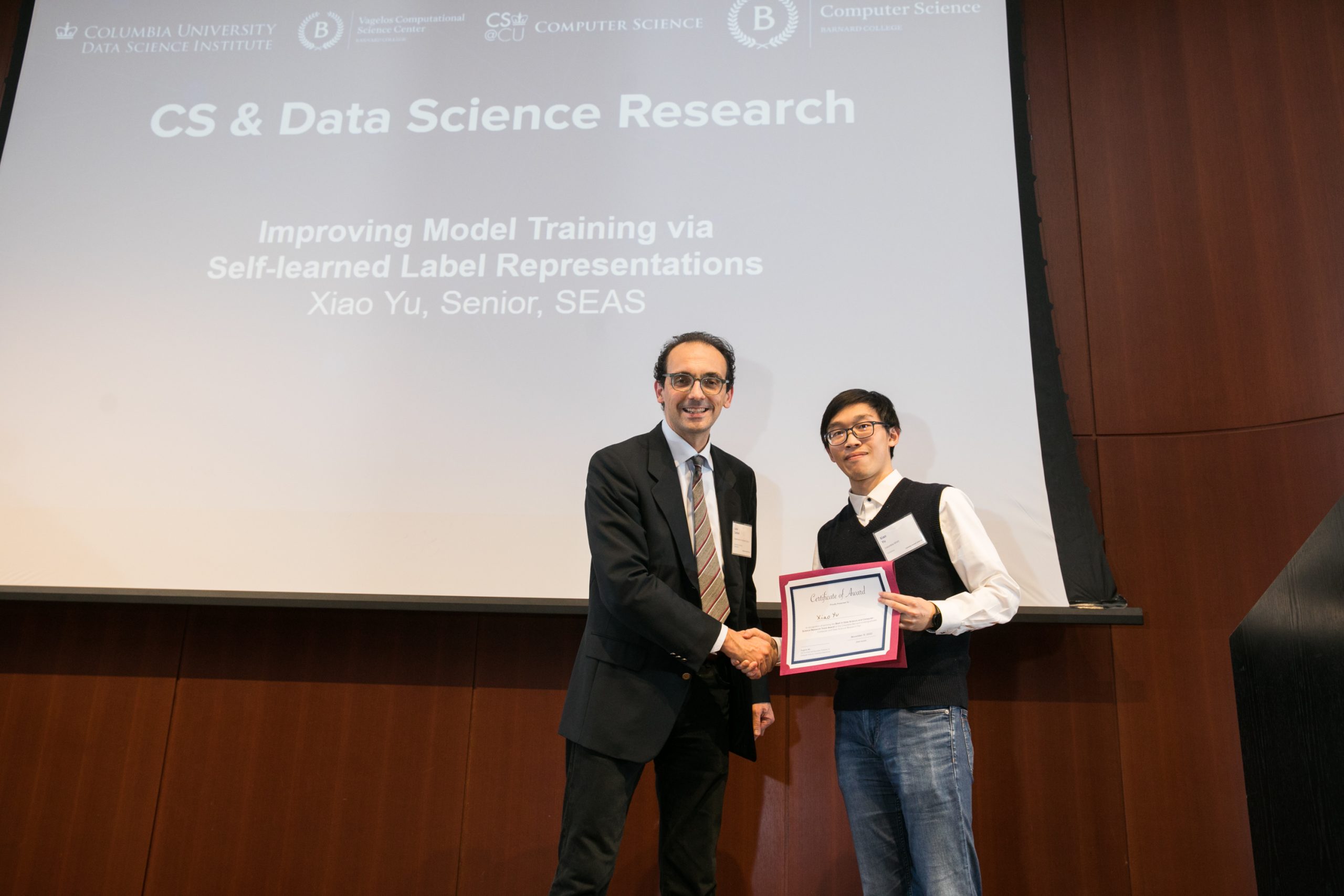

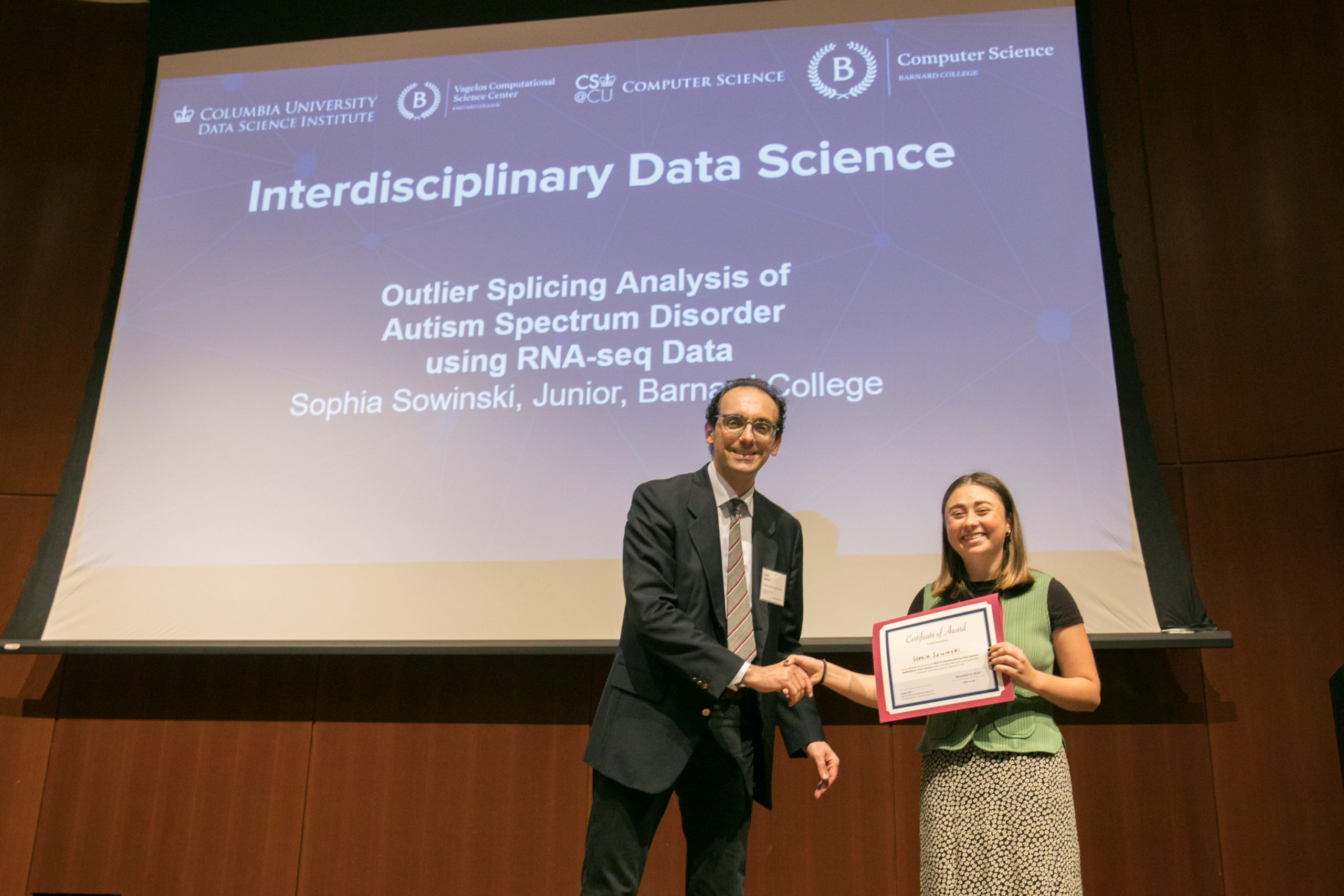

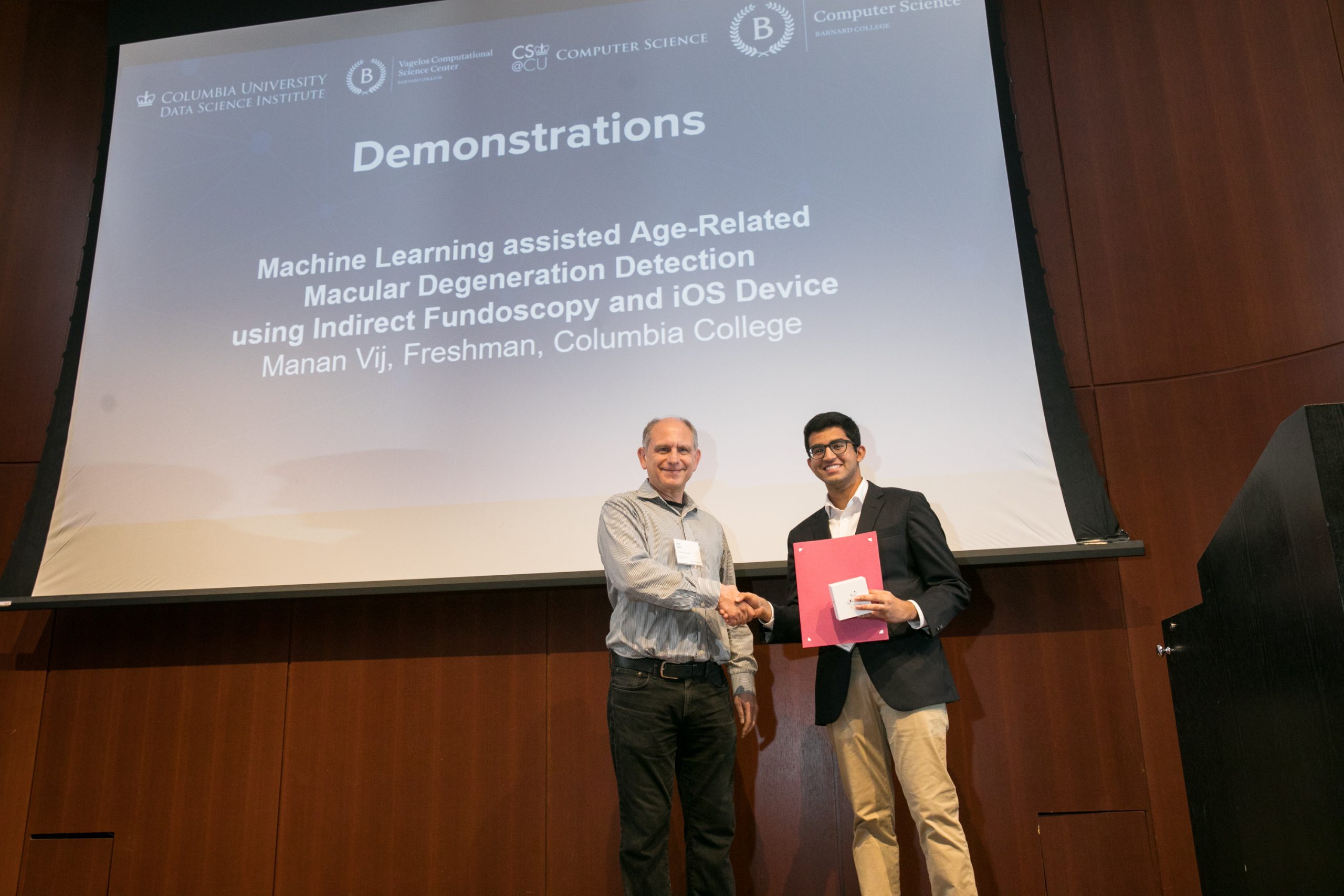

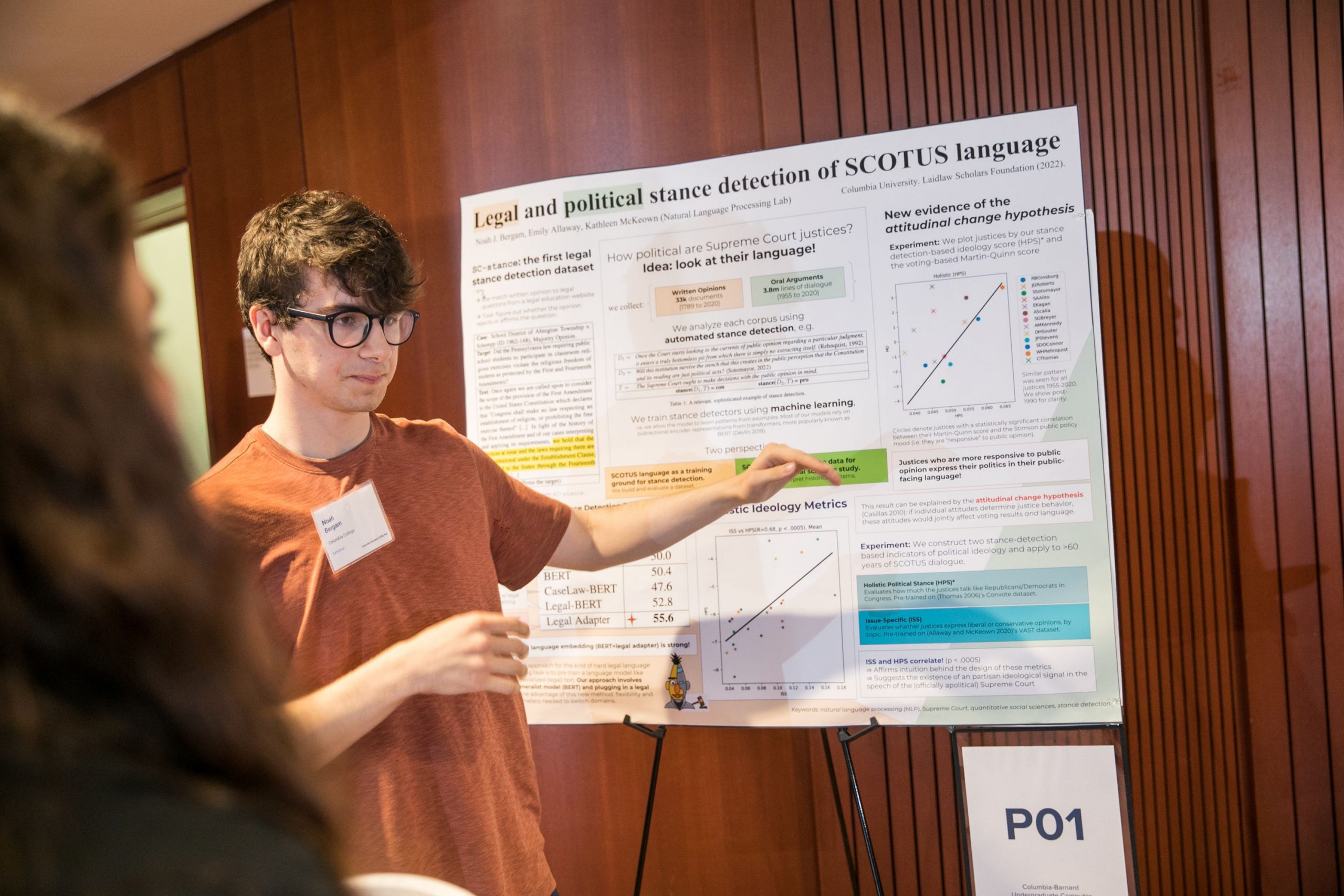

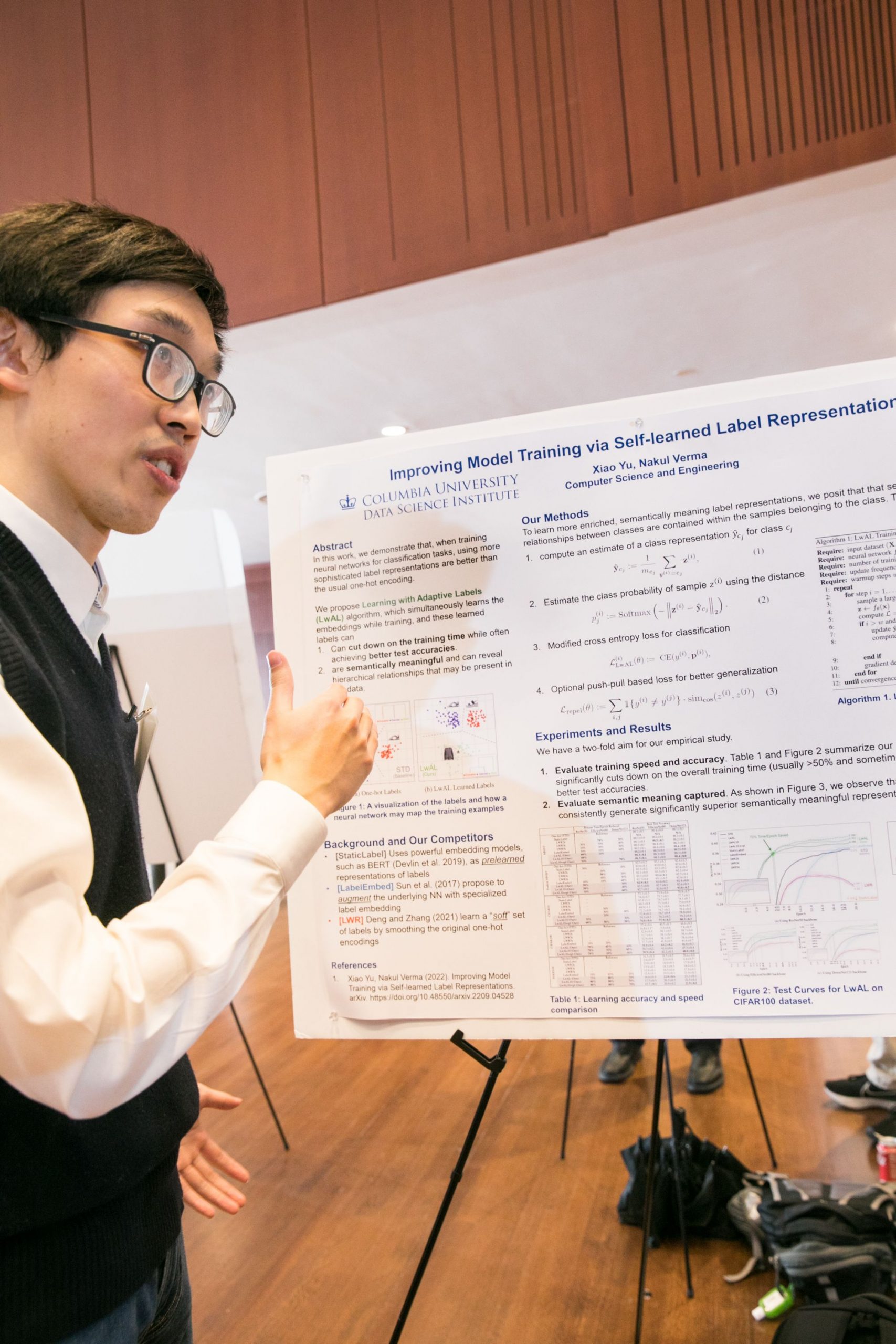

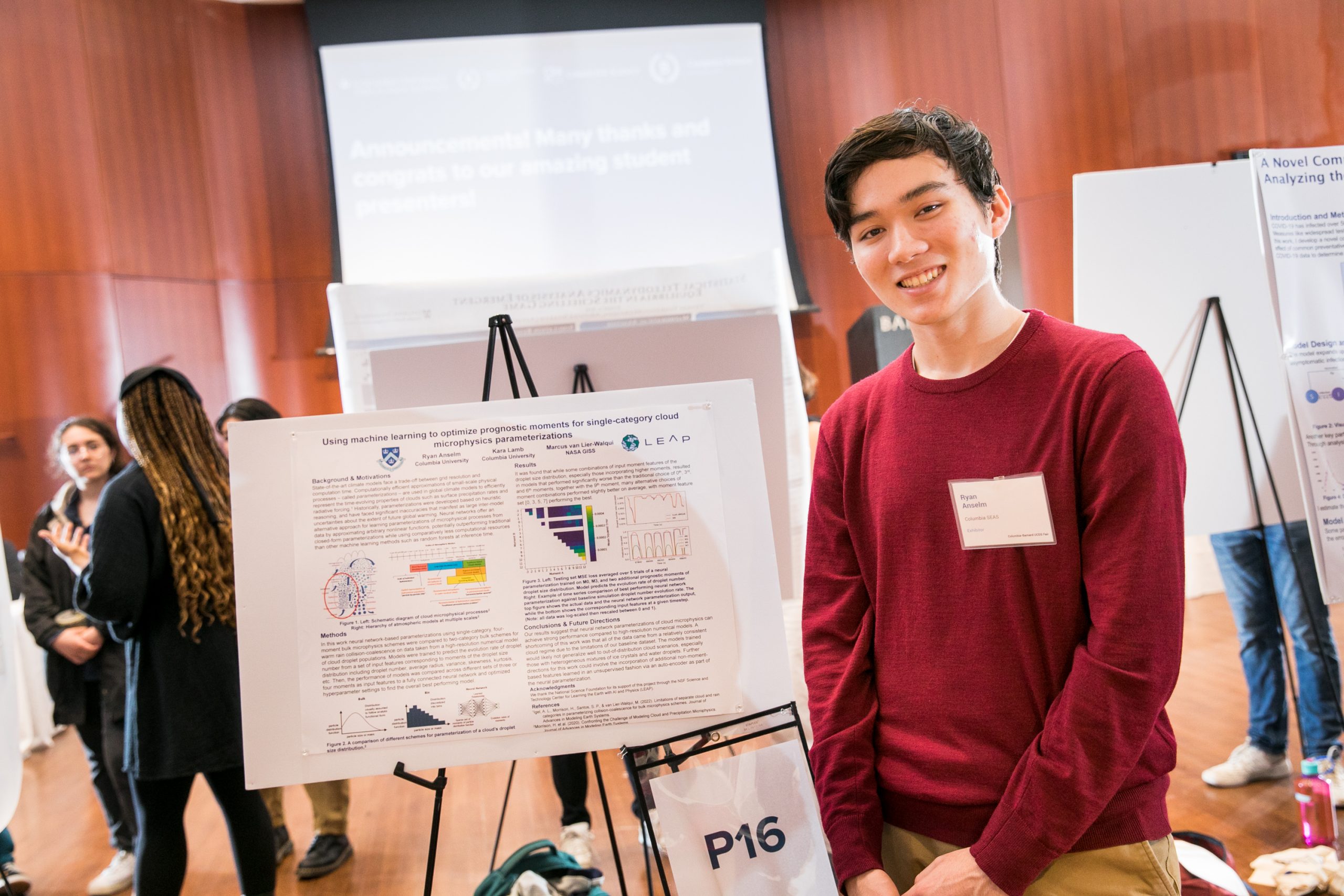

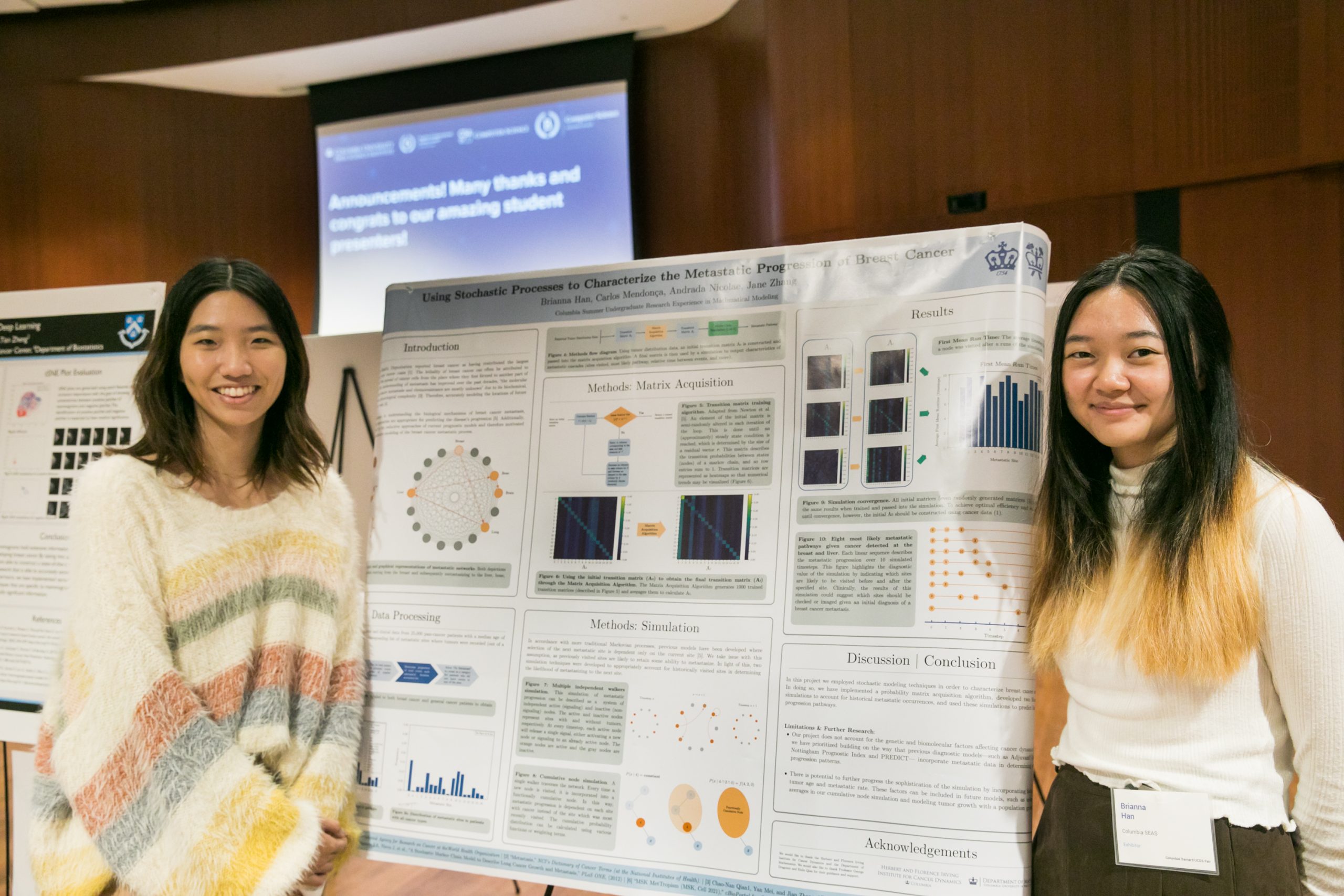

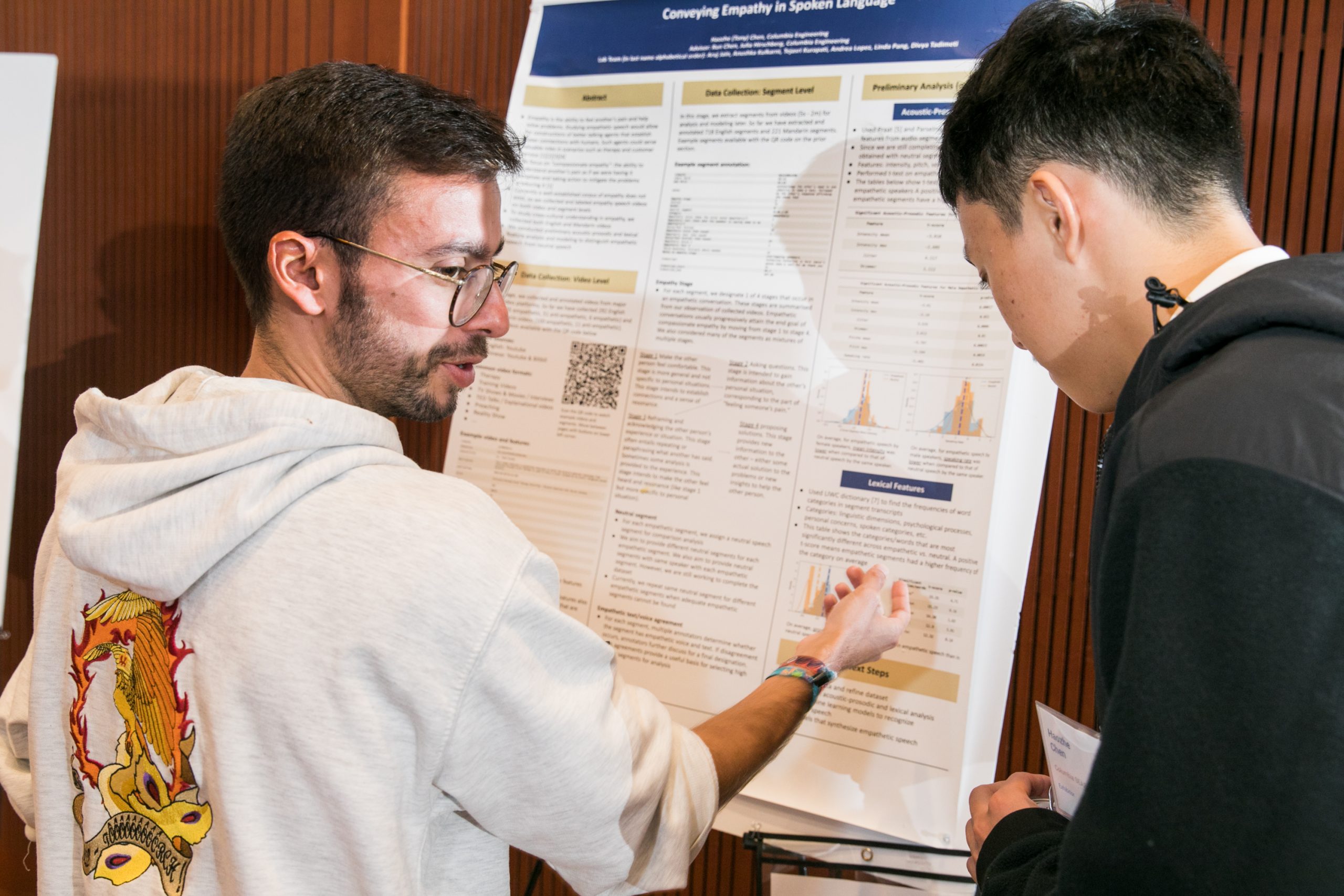

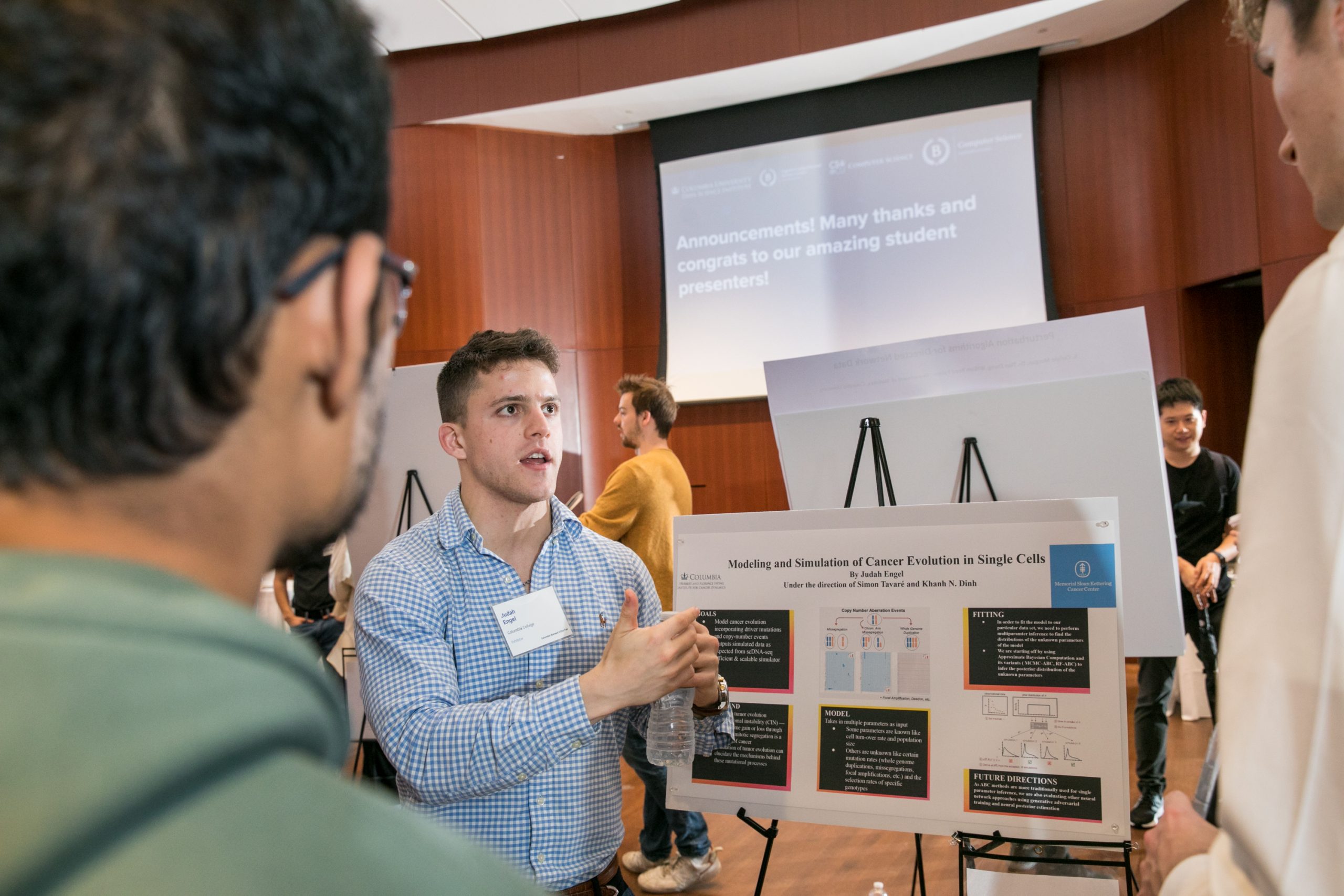

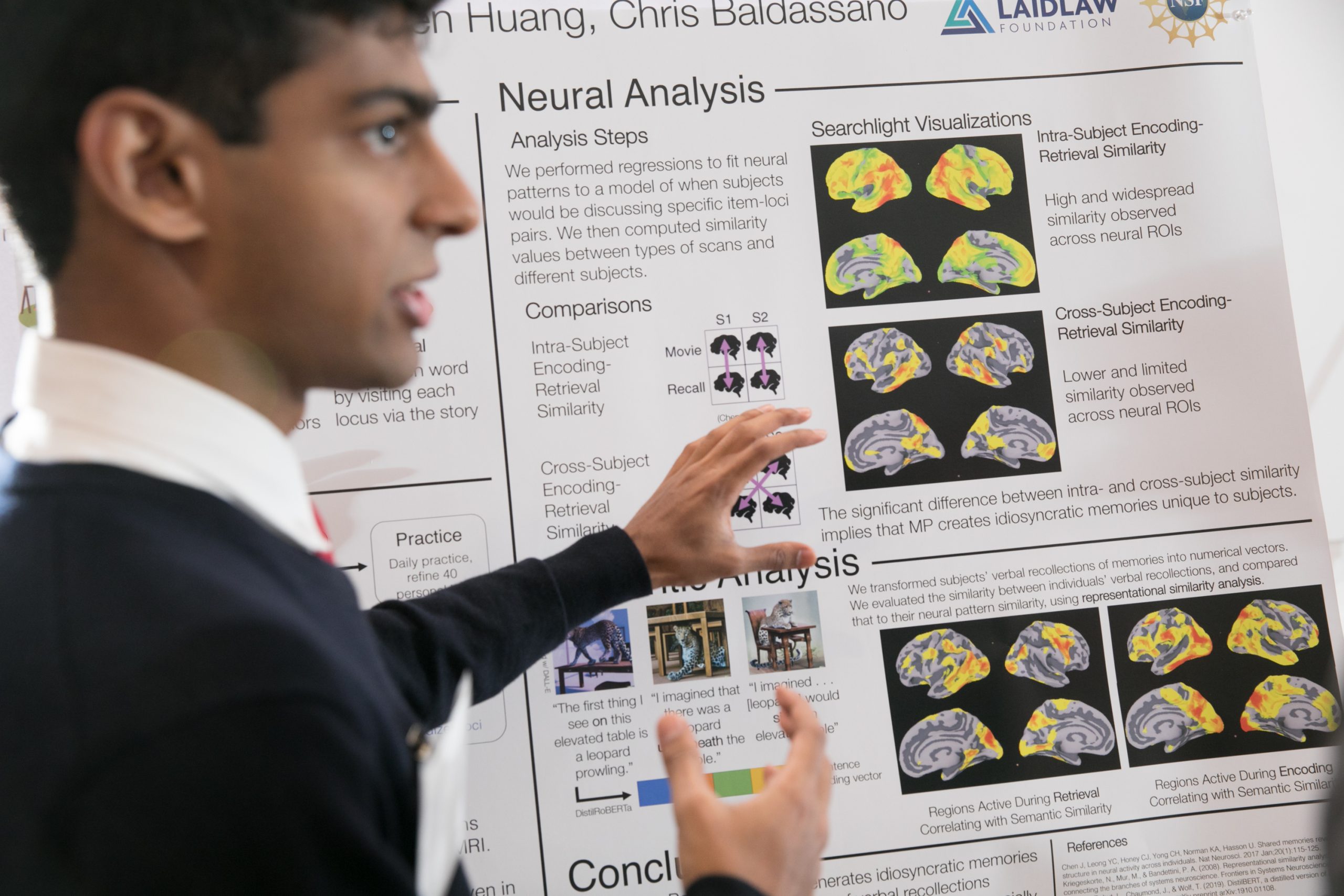

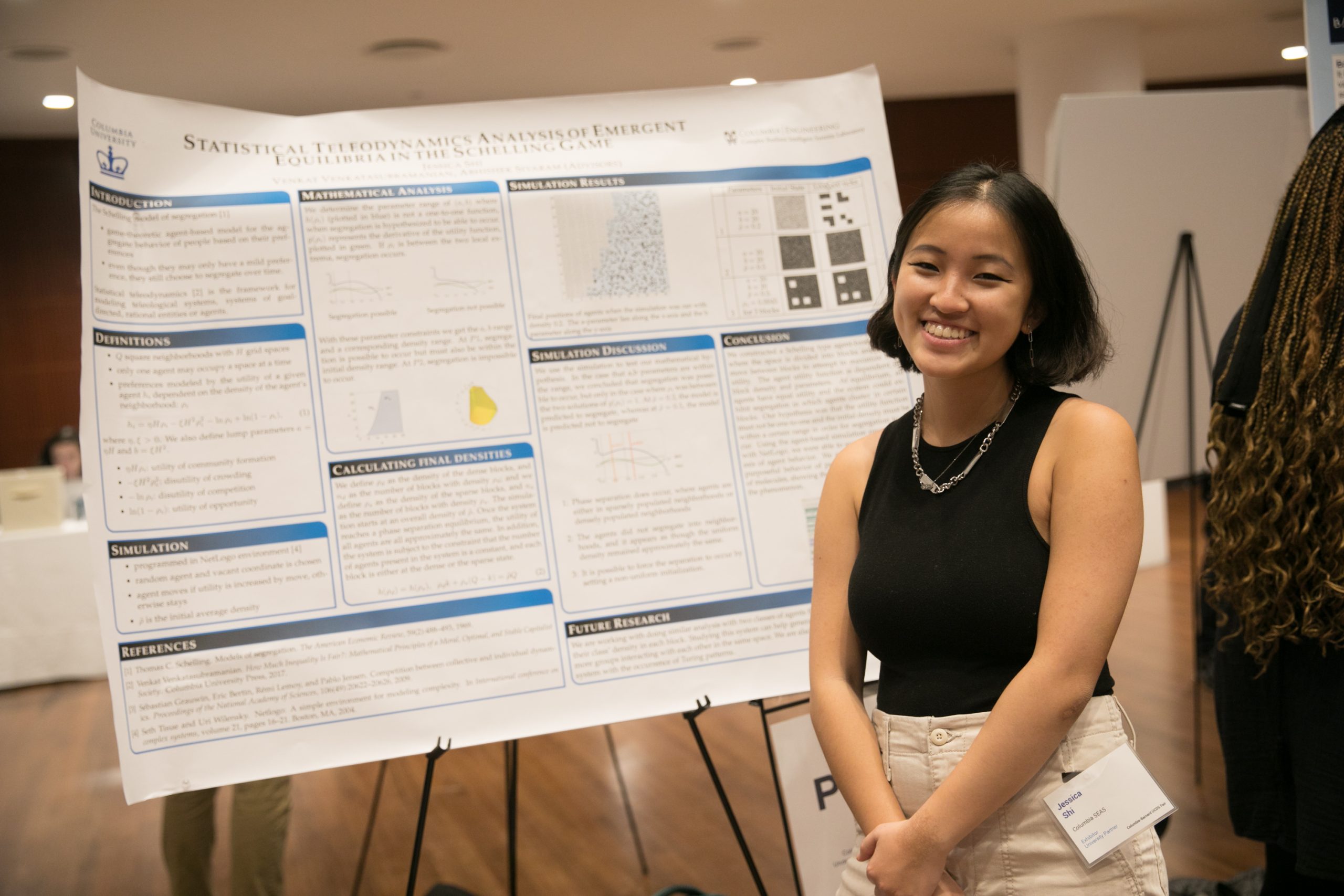

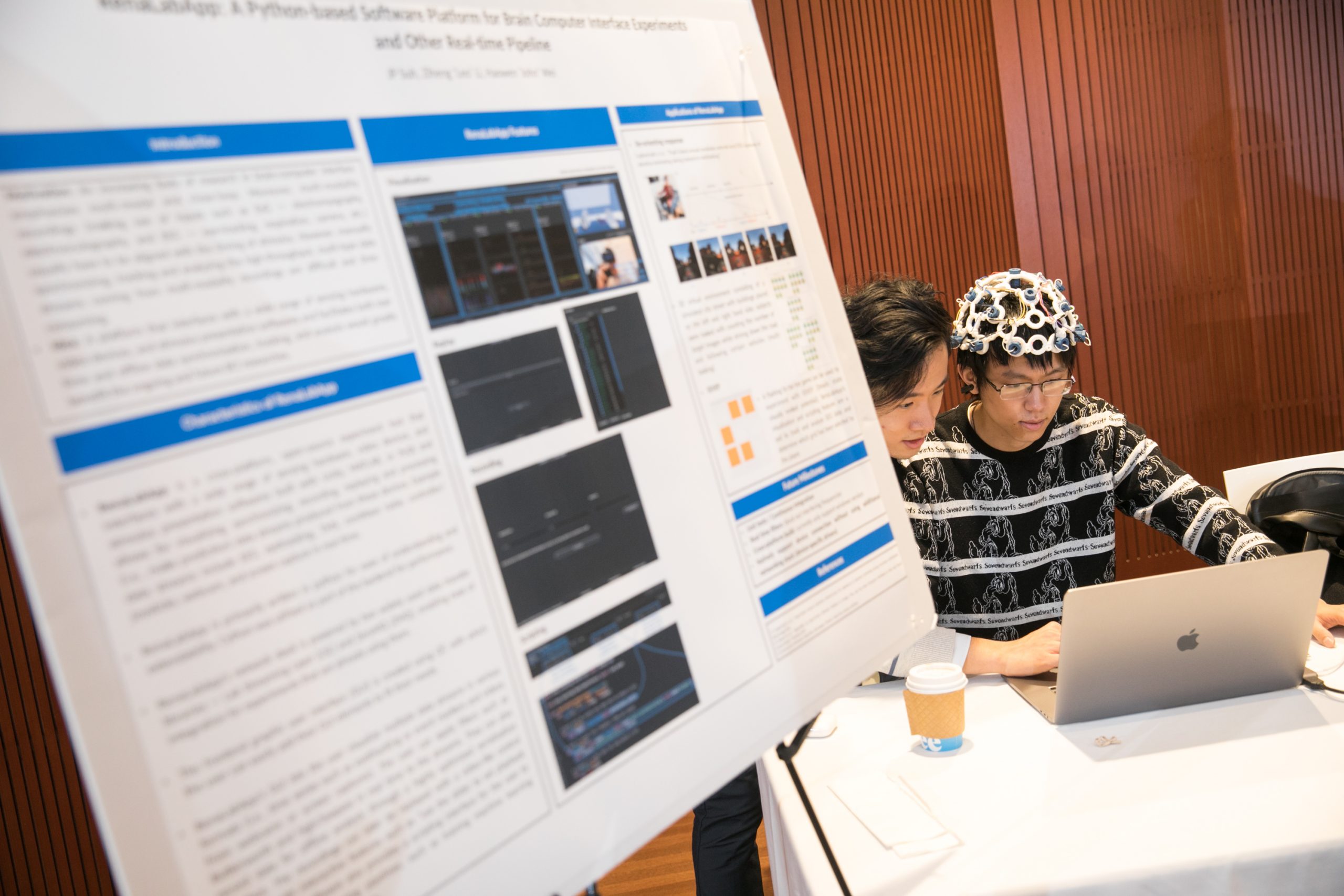

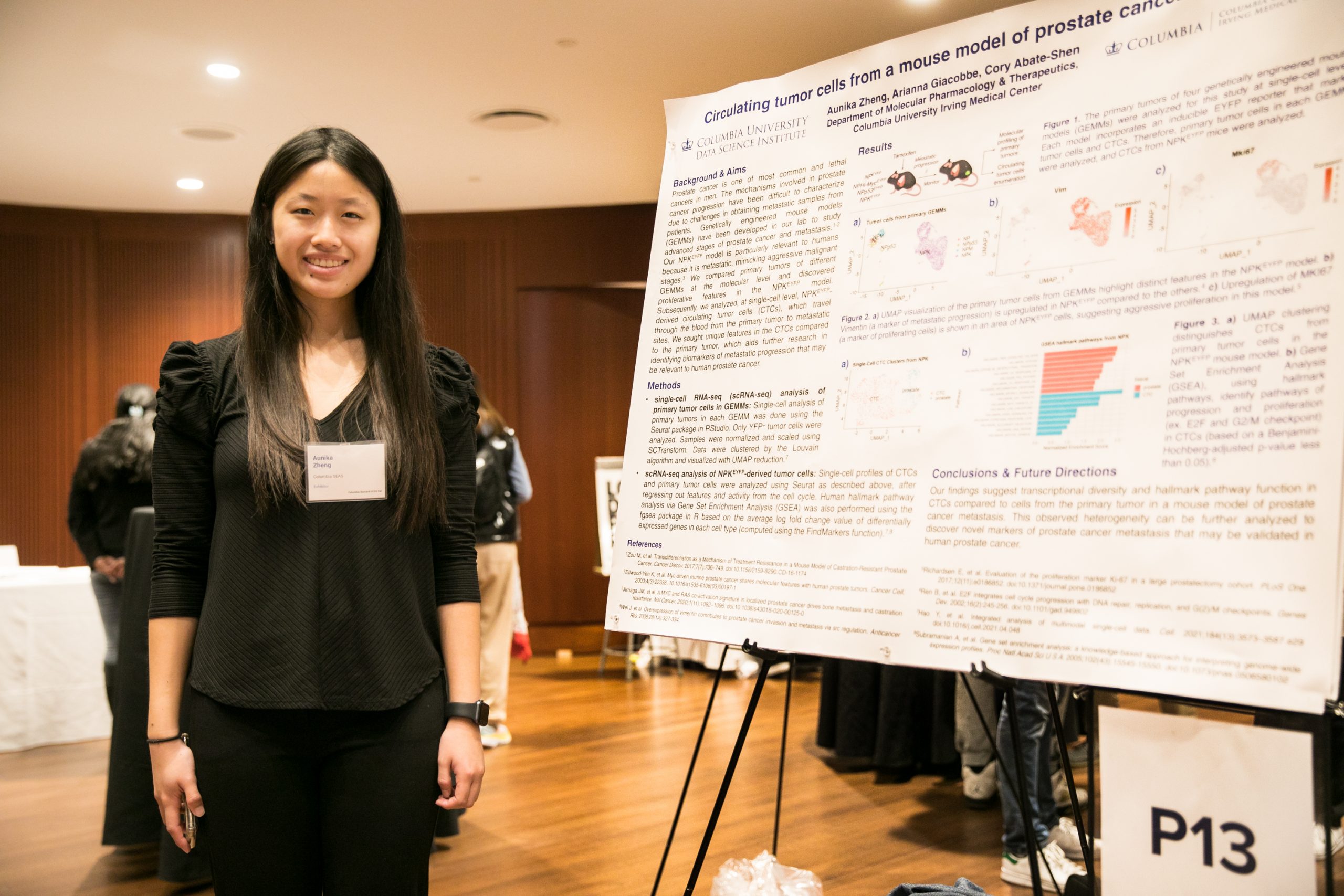

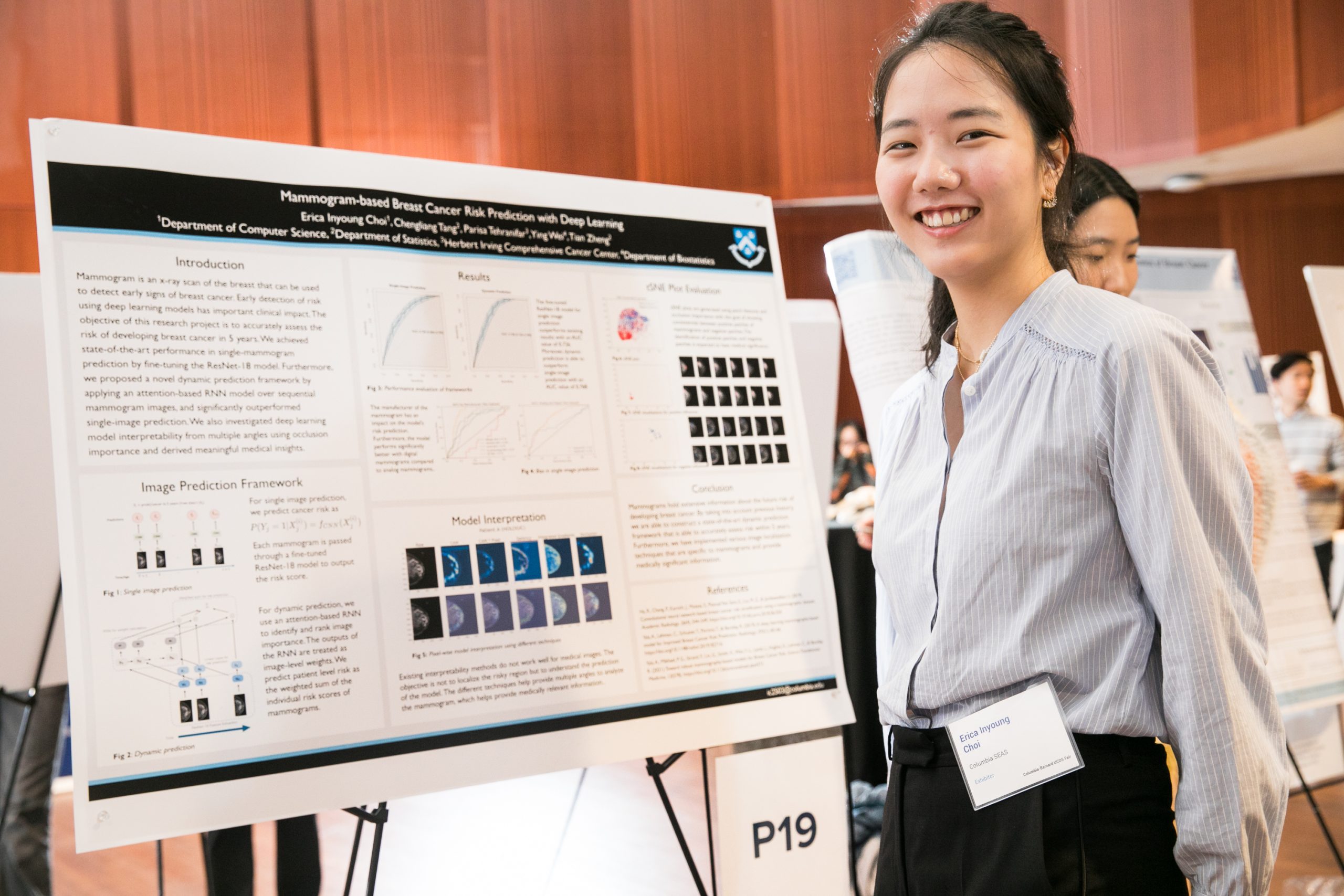

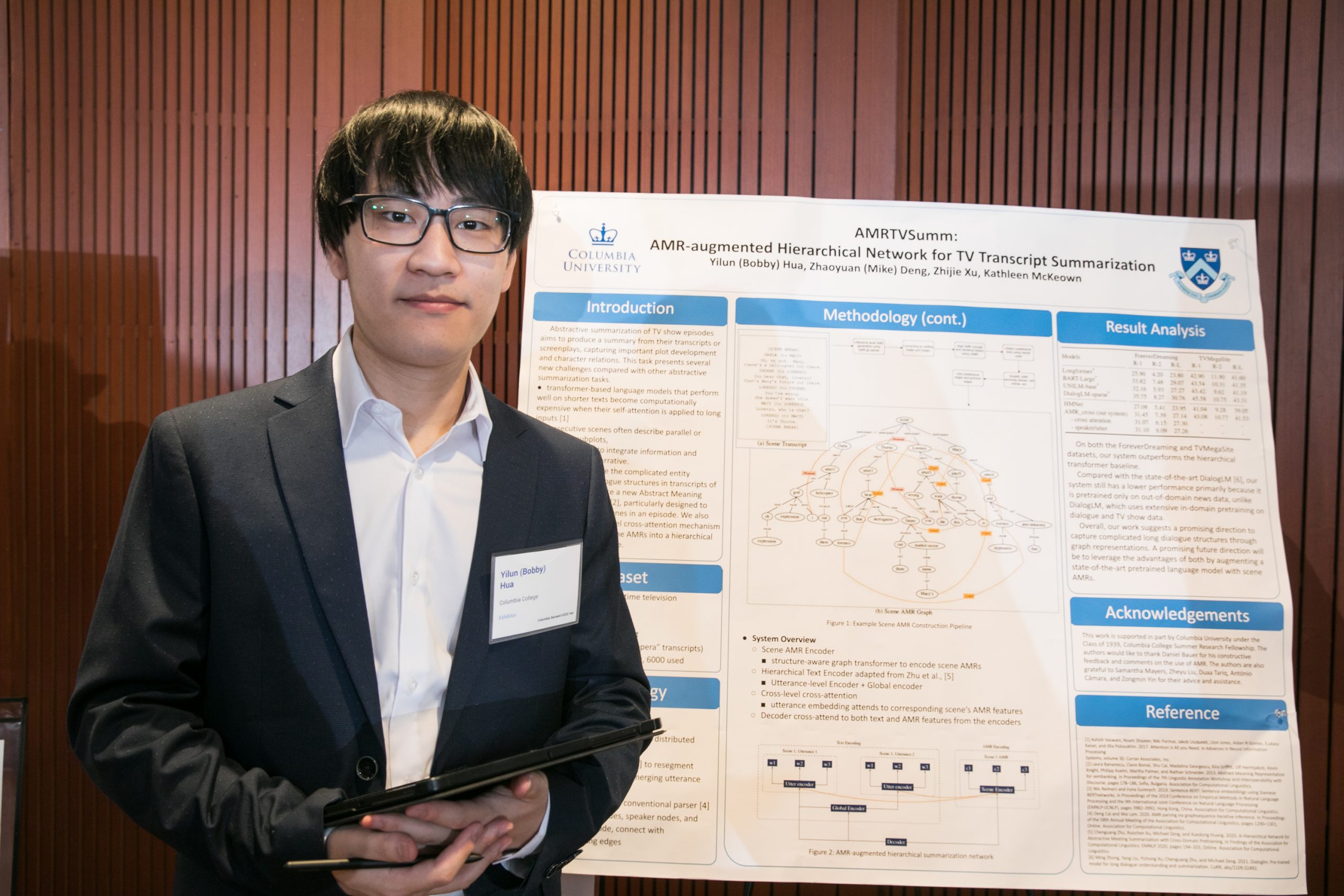

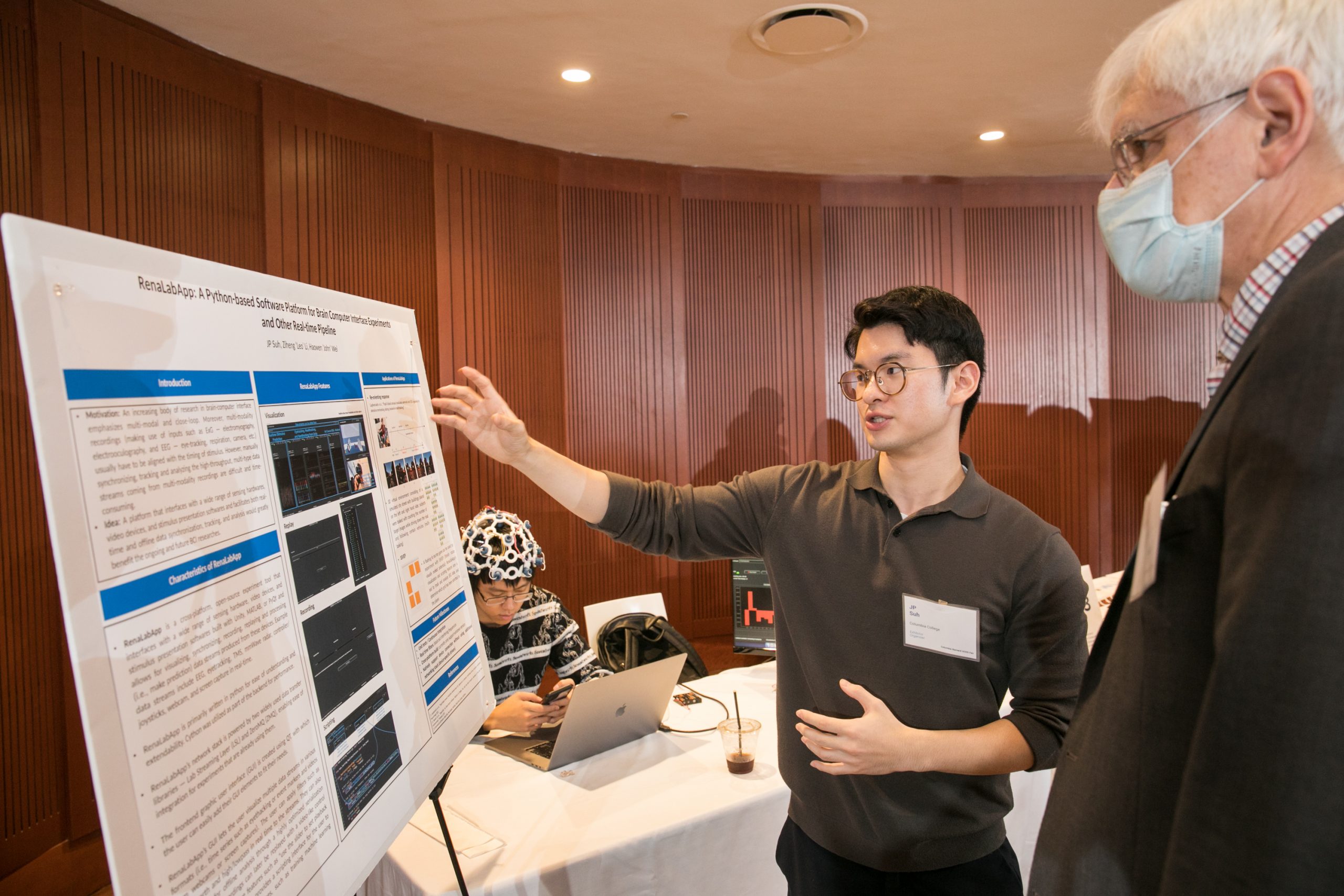

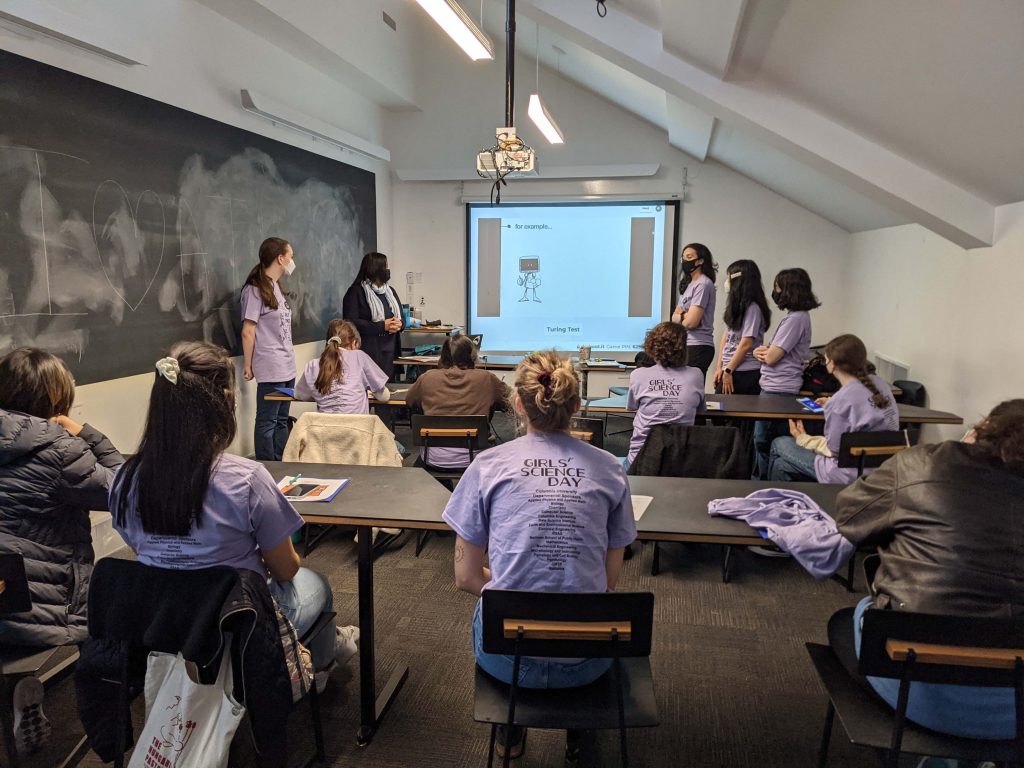

The projects of the Undergraduate Computer and Data Science Research Fair fell under the themes of Data Science and Society, Interdisciplinary Data Science Applications, Data Science and Computer Science Research. Posters and demonstrations were proudly hosted by twenty-five students from SEAS, Barnard College, Columbia College, and the School of General Studies.

The fair was organized by the Data, Media and Society Center at the Data Science Institute, Columbia University; the Barnard Program in Computer Science and the Vagelos Computational Science Center; and the Department of Computer Science at Columbia Engineering led by Eugene Wu, Susan McGregor, Rebecca Wright, and Alexis Avedisian.

The claim: Mail-in ballots show bad actors ‘how many fraudulent ballots they need to produce’

Computer scientist honored for his pioneering research in imaging and vision.

In a blog post for Trail of Bits, PhD student Andreas Kellas shares how he discovered a 22-year-old vulnerability in SQLite.

One of the hardest things to do is prepare application materials for a PhD program. It is hard to gather your thoughts and try to distill them in a way that makes sense and will impress the admission committee. Plus, the pressure is on for you to get into a program and a lab that matches your research interests.

These were some of the thoughts running through Tao Long’s mind this time last year when he was figuring out which PhD programs to apply to. He started doing human-computer interaction (HCI) research as an undergraduate at Cornell University and knew that he wanted to continue on the academic research track. When he saw that Columbia’s computer science PhD program had an application review program, he knew that he should apply to it and take the opportunity to get feedback on his application materials.

The Pre-submission Application Review (PAR) program is a student-led initiative where current PhD students give a one-time review of an applicant’s statement of purpose and CV. Now in its third year, the aim is to promote a diverse and welcoming intellectual environment for all. Not many PhD applicants have access to a network that can give advice and guide them through the application process; the student volunteers of the PAR program hope to address these inequities.

Long shares his experience applying to PhD programs, taking part in review programs, and how he is paying it forward by helping other students with their applications to PhD programs.

Q: Why did you decide to go into a PhD program directly after undergrad?

During my undergrad at Cornell, I worked on a few projects in several different labs, all focusing on HCI. I am really proud of a digital technology project that I contributed to – collaborating with Weill Cornell Medicine and Cornell Law; we are trying to understand the existing barriers for asylum seekers to adopt technologies and how to design ones for them.

My passion for socially impactful technology makes me want to continue doing human-computer interaction (HCI) research. HCI researchers study how people interact with technology and how to better design and develop technology according to people’s needs. Thus, both technical and social science knowledge is essential in this interdisciplinary field. With a background in information science and communication, I believe technology has a communicative capacity and responsibility: we speak, design, and build for those who can’t.

Q: How was your experience applying to PhD programs?

I finalized my school list, started reaching out to faculty members I was interested in working with, and prepared my application materials around September and October. In November, I focused on polishing my statement with some current graduate students and pre-submission application review programs held by universities.

December was the time when the long wait began. After the holidays, some applicants will begin receiving interview inquiries. Around late February and March, most applicants will receive their concluded application decisions back. Lastly, they need to decide whether they accept the offer by April 15 (see April 15 Resolution).

The whole half-year-long application process was too long for me. There are many online platforms, like the r/gradadmissions subreddit and the GradCafe, where people share their admission updates. These information sources made the waiting process more exciting but also made me feel a bit anxious. I think I really enjoyed the post-submission period, where I felt relieved after all my application materials were in. Thus, I started working at an on-campus cafe, learned knitting, and watched YouTube and TikTok all day.

Q: Why did you choose to apply to Columbia CS? What attracted you to the program?

Columbia is well-known for its strong academic and research resources. I am now taking a class on how to build a successful startup in CS. The instructor of that class is a Columbia CS PhD graduate with several well-established startups. In addition to courses, countless fascinating research projects from the department widely collaborate with different schools on campus and large tech companies in the city. Specifically, I find the research conducted by my advisor, Lydia Chilton, really cutting-edge in helping users understand and interact with artificial intelligence tools. Many of her recent publications on large language models and text-to-image generative models are fascinating and impactful to the HCI community.

Q: You were part of the PAR program last year; how was it?

I found the PAR program on the department website during my school search period. During that month, I looked through many university websites and found several schools that provided similar programs to give feedback to PhD applicants. I chose to apply for the PAR program because I wanted more feedback on my application materials. Sometimes people say you have enough experience, but it isn’t addressed well on the application. I wanted to make sure that I presented myself well and painted a full picture of myself.

Q: Was it helpful to get feedback?

I submitted my Statement of Purpose, Personal Statement, and academic CV in early November. Then, the PAR team paired me with a reviewer and provided me with valuable and insightful written comments on my application materials by November 21, which is around 20 days before Columbia’s application deadline.

The feedback contained a general evaluation of the pros and cons of my application materials. They pointed out problems in the statement structure – mention more about my experience, stress more about the technical or the non-technical skill sets, shrink the length of the statement, and move one section forward. Feedback was also given on the format and language usage like what tense to use, easy-to-read font style and size, and header or page breaks to help the user flow between sections. Thus, containing feedback from both high-level and low-level perspectives, this PAR review program was beneficial for me to navigate and make future changes.

Q: You are part of the PAR team now, right? Why did you join the group?

I became active in the subreddit r/gradadmissions last year while I was waiting for the admission results to come out. I found it helpful to check posts there to learn more about the general admission process from other people’s cases. I also found a few HCI PhD applicants there, thus establishing some prior connections before entering the field. My friend and I started helping applicants from low-resource countries or regions by reviewing their application materials and providing feedback.

When I decided to go to Columbia, I knew I wanted to continue helping PhD applicants. I am part of other review programs offered by affinity groups outside Columbia to help those interested in pursuing a PhD. I joined the department’s PAR program committee in September. We are getting ready for the upcoming November 15th deadline and recruiting current CS PhD students to become reviewers. I highly recommend that applicants join the PAR program! I am sure that you could receive a lot of insightful feedback from the current CS PhD student community to help polish your materials! Good luck!

——

Related Content

PAR Program Offers Peer Support to PhD Applicants

Student-led Initiative Aims to Help Applicants of the PhD Program

Interested applicants have to apply to the PAR program and submit their personal statement and CV by November 7th at 11:59 pm EST. Because the program is student-run and dependent on volunteers, there is no guarantee that every applicant can be accommodated. Those who are accepted will be notified by November 14th, then paired with a PhD student in the same research area who will review their materials and provide feedback to them by November 21st – well ahead of the December 15th deadline to apply to the PhD program.

Computer scientist honored for his pioneering research in imaging and vision.

This fall, Columbia University and Barnard College will host the inaugural Undergraduate Computer and Data Science Research Fair to showcase undergraduate student work in data science and related fields. Applications will be accepted along three thematic tracks and in a variety of formats to highlight the diversity of opportunities that data science affords researchers.

Get ready to roar for these Lions — including two CS majors— competing in the Capital One College Bowl, the NBC quiz show hosted by Hall of Fame quarterback Peyton Manning. The 10-episode series will feature undergraduates from 16 schools vying for a share of $1 million in scholarship money.

Xia Zhou, Brian Borowski, and Kostis Kaffes join the department. They will facilitate research and learning in mobile computing, software systems, and networks.

Xia Zhou

Associate Professor

PhD Computer Science, University of California Santa Barbara

MS Computer Science, Peking University

BS Computer Science and Technology, Wuhan University

Xia Zhou is an expert in mobile computing and networks whose research is focused on wireless systems and mobile health. Zhou joins Columbia after nine years at Dartmouth where she was the co-director of the Dartmouth Networking and Ubiquitous Systems and the Dartmouth Reality and Robotics Lab. At Columbia, she will direct the Mobile X Laboratory.

Brian Borowski

Lecturer in Discipline

PhD Computer Science, Stevens Institute of Technology

MS Computer Science, Stevens Institute of Technology

BS Computer Science, Seton Hall University

Brian Borowski is an expert in software systems who aims to present a blend of theoretical and practical instruction so his students can be successful after graduation, regardless of the path they choose. He studied underwater acoustic communication and won several awards for his teaching at Stevens Institute of Technology.

Kostis Kaffes

Kostis Kaffes

Assistant Professor

PhD Electrical Engineering, Stanford University

MS Electrical Engineering, Stanford University

BS Electrical and Computer Engineering, National Technical University of Athens

Kostis Kaffes is interested in computer systems, cloud computing, and scheduling across the stack. He arrives in Fall 2023 and will spend the next year doing research at Google Cloud with the Systems Research Group.

Professor Kathleen R. McKeown is the recipient of the 2023 IEEE Innovation in Societal Infrastructure Award—the highest award recognizing significant technological achievements and contributions to the establishment, development, and proliferation of innovative societal infrastructure systems through the application of information technology with an emphasis on distributed computing systems.

Graduate students from the department have been selected to receive scholarships. The diverse group is a mix of those new to Columbia and students who have received fellowships for the year.

Shunhua Jiang

Shunhua Jiang

Shunhua Jiang is a fourth-year PhD student who is advised by Professor Alexandr Andoni and Assistant Professor Omri Weinstein. Her research interests are in optimization and data structures, with a focus on using dynamic data structures to accelerate the algorithms for conic programming.

Jiang graduated from Tsinghua University with a B.Eng. in computer science. In her free time, she enjoys running and playing the Chinese flute.

Andreas Kellas

Andreas Kellas

Andreas Kellas is a second-year PhD student working in software system security, with interests in mitigating exploits, finding vulnerabilities, and verifying the correctness of software patches. He is co-advised by Professor Simha Sethumadhavan and Professor Junfeng Yang.

Kellas graduated from the United States Military Academy in 2015 with a BS in computer science and Arabic.

Harry Wang

Harry Wang

Harry Wang is a first-year PhD student interested in the intersection of systems, networks, and security. He joins the Software Systems Laboratory under the guidance of Professor Junfeng Yang and Assistant Professor Asaf Cidon.

Wang graduated this year from the University of Southern California with a BSc in computer engineering and computer science. He was a 2021 Goldwater Scholar and received the USC Computer Science Award for Outstanding Research for his work on binary analysis and network security.

Sei Chang

Sei Chang

Sei Chang is a first-year PhD student interested in computational genomics and machine learning. He is advised by Assistant Professor David Knowles at the New York Genome Center. His research aims to apply machine learning techniques to analyze transcriptomic dysregulation in genetic diseases.

Chang graduated magna cum laude from the University of California, Los Angeles in 2022 with a BS in computer science and a minor in bioinformatics. He is a National Merit Scholar and recipient of the Muriel K. and Robert B. Allan Award from UCLA Engineering.

Nick Deas

Nick Deas

Nick Deas’ interests lie in the application of natural language processing to the social sciences, particularly psychology, and developing natural language processing methods for social media and other unstructured data sources. His undergraduate research similarly involved using natural language processing techniques to analyze political tweets and detect psychological constructs from Reddit posts, but also ranged to speech transcript correction and topic modeling. He will be joining the Natural Language Processing Group under the guidance of Professor Kathleen McKeown.

Deas graduated from Clemson University in May 2022 with a BS in computer science and a BS in Psychology. He was awarded a full-tuition scholarship as part of the National Scholars Program. While there, he received the Outstanding Senior Award in the Computer Science department in 2020, the Phi Kappa Phi Certificate of Merit Award in the Computer Science department in 2022, and the Award for Academic Excellence in Psychology in 2022. The Midwest Political Science Association (MPSA) awarded him a Best Paper by an Undergraduate Student for work on natural language processing and political tweets. In addition to the NSF GRFP, his graduate studies will be funded by the SEAS Presidential Fellowship and a Provost Diversity Fellowship.

Sruthi Sudhakar

Sruthi Sudhakar

Sruthi Sudhakar is interested in computer vision with a focus on studying stronger representations using multimodal inputs to improve robustness and generalization. She is a first-year PhD student who will work with Professor Richard Zemel and Assistant Professor Carl Vondrick.

Sudhakar graduated from the Georgia Institute of Technology with a BS in computer science and received Georgia Tech’s 2021 Outstanding Undergraduate Research Award.

Matthew Toles

Matthew Toles

Matthew Toles is a first-year PhD student interested in generating goal-oriented questions for better human-computer collaboration. He will work with Professor Luis Gravano and Assistant Professor Zhou Yu.

Toles graduated in 2016 from the University of Washington with a BS in materials science and engineering. He was a recipient of the Mary Gates Research Fellowship, NASA SURP scholarship, and an Amazon Catalyst Fellowship.

Ira Ceka

Ira Ceka is a first-year PhD student who will be advised by Baishkakhi Ray. Her research interests are in software engineering.

Prior to Columbia, Ceka worked in industry as a software engineer. She was also a research intern at IBM Thomas J. Watson Research Center, MIT, and Harvard University. She graduated in 2019 with a BS in computer science from the University of Massachusetts Boston.

Yusuf Efe

Yusuf Efe

Yusuf Efe is a PhD student set to work with Associate Professor Elias Bareinboim. His work will focus on designing robust causal reinforcement learning algorithms. He is particularly interested in defining dynamic and context-dependent value functions in reinforcement learning settings to render possible human-like learning processes for intelligent agents. In addition, he is interested in blockchain and decentralized finance.

Efe graduated with a BS degree in electrical engineering and physics from Bogazici University, Turkey. During his research internships at Stanford and UIUC, he analyzed multi-agent settings utilizing machine learning and game theory principles. He is an alumnus of the Turkish National Physics Olympiad team and won a gold, two silver, and bronze medals, and the Best Theory Paper award at the International Physics Olympiad.

Rashida Hakim

Rashida Hakim

Rashida Hakim studies the design and analysis of algorithms for networked applications. These applications include the Internet, distributed computing, and the brain. She joins the Theory Group and will be advised by Professor Christos Papadimitriou and Professor Mihalis Yannakakis.

Hakim graduated from the California Institute of Technology with a BS in computer science. When she has free time, she likes to crochet, hike, and read science fiction.

Zeyi Liu

Zeyi Liu

Zeyi Liu is a first-year PhD student working with Assistant Professor Shuran Song on robotics and computer vision. Her work focuses on developing methods for embodied agents to better understand the environment and plan for complex tasks. She is particularly interested in developing robots that are able to assist humans in household environments.

Liu graduated from Columbia University in 2022 with a BS in computer science. She recently picked up sailing as a hobby and really enjoys it.

Hadleigh Schwartz

Hadleigh Schwartz

Hadleigh Schwartz is interested in building and analyzing interdisciplinary mobile systems. Her Master’s thesis focused on understanding the visual privacy risks posed by IoT video analytics systems. She is also interested in techniques for improving network security and reliability. She is an incoming first-year PhD student advised by Associate Professor Xia Zhou, Professor Dan Rubenstein, and Professor Vishal Misra.

Schwartz graduated summa cum laude and Phi Beta Kappa from the University of Chicago in 2022 with both a BA and MS in computer science. She likes cooking, baking, playing guitar, and hiking whenever she has free time.

Josephine Passananti

Josephine Passananti

Josephine Passananti’s research focus is security and adversarial machine learning. While at Columbia, she hopes to further the robustness of AI applications and systems. She will be co-advised by Associate Professor Baishakhi Ray and Associate Professor Suman Jana.

Passananti recently graduated from the University of Chicago with a BS in computer science and a minor in visual arts. She loves cooking and exploring the city, and when she has the time, she goes hiking, plays tennis, and makes pottery.

William Pires

William Pires

William Pires is a first-year PhD student who is broadly interested in computational complexity and the role of randomness in computation. While at Columbia, he hopes to continue working on problems related to the TFNP classes. He will be advised by Professor Toniann Pitassi, Professor Rocco Servedio, and Professor Xi Chen and he is a member of the Theory Group.

Pires graduated from McGill University this May where he received a BS in computer science. While there, he worked with Robert Robere on TFNP classes and Hamed Hatami on communication complexity.

He enjoys being outdoors and looks forward to hiking in New York.

Kele Huang

Kele Huang

Kele Huang is a first-year PhD student working with Professor Ronghui Gu and Professor Jason Nieh at the Software Systems Laboratory. He is interested in systems research, with a focus on operating systems, virtualization, and formal verification.

Huang completed his BE in microelectronics science and engineering from Central South University. His undergrad studies were funded by the China National Scholarship. He was recommended to the Institute of Computing Technology, CAS, and graduated with an MS in computer science in 2021.

Natalie Parham

Natalie Parham

Natalie Parham studies theoretical computer science with a focus on quantum complexity theory and quantum algorithms. She is particularly interested in understanding the power and limitations of near-term quantum computers. She is a first-year PhD student in the Theory group who will work with Assistant Professor Henry Yuen.

Parham graduated in 2020 from the University of California, Berkeley with a BS in electrical engineering and computer science and from the University of Waterloo with an MMath in combinatorics and optimization – quantum information in 2022.

She balances her time with strength training, skateboarding, and yoga.

Mandi Zhao

Mandi Zhao

Mandi Zhao is a first-year PhD student working with Assistant Professor Shuran Song. She is interested in data-driven approaches for robotics, such as imitation learning and reinforcement learning, and works on developing algorithms that enable robot learning in highly complex, real-world environments.

Prior to Columbia, she obtained her BA and MS degrees from UC Berkeley, where she was affiliated with Berkeley AI Research (BAIR) advised by Professor Pieter Abbeel. In the summer of 2022, she interned at Meta AI research and worked on scaling up robot imitation learning in the Pittsburgh office.

In her free time, she likes to paint, go hiking, and watch soccer games.

Jerry Liu

Jerry Liu

Jerry Liu is an incoming first-year PhD student advised by Associate Professor

Eugene Wu. His research interests lie in database systems and differential privacy. Particularly, he is interested in privacy-preserving data systems with high usability. During his free time, he likes hiking and playing board games.

Liu graduated from the University of Waterloo with a BS in computer science, statistics, and combinatorics and optimization in 2022.

Sitong Wang

Sitong Wang

Sitong Wang is an incoming first-year PhD student whose work focuses on creativity support in the field of Human-Computer Interaction (HCI). She is broadly interested in research at the intersection of human creativity and technologies and will work with Assistant Professor Lydia Chilton.

Wang earned an MS in computer science in 2021 from Columbia. In 2020 she graduated with a BS in electrical engineering from the University of Cincinnati and a BE in electrical engineering and automation from Chongqing University.

Computer science is one of the most popular degrees at Columbia and each year more and more students are interested in taking a CS course or shifting majors. As the number of students enrolling in computer science classes increases, the department is expanding the number of teaching faculty to ensure a solid grounding in computer science skills and methods for all students.

Some of the largest classes, with 300 students or more, are taught by the teaching faculty. Their classes cover everything from Intro to Computer Science to graduate-level Analysis of Algorithms. Aside from teaching, some of them draw from their research backgrounds to mentor student projects, improve CS education, and advance the computer science field.

We caught up with them to get to know them a little better and to find out how students can do well in their classes!

Daniel Bauer

Daniel Bauer

Classes this semester/year:

ENGI E1006 Introduction to Computing for Engineers Applied Scientists

COMS W4705 Natural Language Processing

What are your research projects at the moment?

I work on a variety of small projects related to natural language semantics. I also work on applications of NLP, such as knowledge graph extraction for human rights research. In addition, I am interested in CS education research, focusing on how to make CS courses more accessible and effective.

What type of student should apply to work on your research projects?

Students interested in NLP research who have taken at least COMS W4705 Natural Language Processing, or an equivalent course. They should be very comfortable programming in Python. Ideally, they’d have first-hand experience with machine learning, including using TensorFlow or PyTorch. For CS education research, students should have a strong interest in education and some prior experience working with students, for example, as a TA. They should also have some experience collecting and analyzing data. In general, I enjoy working with students who can work independently, are enthusiastic about the research topic, and are interested in how the specific work relates to the broader field.

Why did you decide to become a professor?

Some of my undergraduate professors were great role models, who engaged in cutting-edge research but also really cared about their students. I thought being a professor, as opposed to working in industry, would give you the freedom to work on any topic you are interested in, without having to worry about applicability and external demands (that turned out to be an incorrect assumption). When I started working as a TA and later as a Ph.D. student, when I started teaching courses by myself, I discovered that I really enjoyed teaching. So I decided to become teaching faculty.

What do you like about teaching?

I like getting people interested in new areas of CS and hopefully sharing some of my enthusiasm with them. Sometimes, when explaining a tricky (but beautiful) concept to someone, you can watch them have an “aha” moment when all the pieces fall into place. These are the most rewarding situations for me as a teacher. Because I teach courses at all different levels, I get to follow students through the CS program and it’s fun to watch them succeed and grow academically.

What are your tips for students so they can pass your classes?

It’s normal to get stuck, especially if you are new to CS. When students fail classes, there was usually a lack of communication. Be proactive in reaching out to the teaching staff for help, even in large classes. Talk to other students in the class. Make use of all the resources the course provides to you.

Brian Borowski

Classes this semester/year:

COMS W3134 In Data Structures in Java in the Fall

COMS W3175 Advanced Programming in the Spring

Why did you decide to become a professor?

To me, a professor is both an instructor and a mentor. I endeavor to be an effective educator, combining the finest attributes of my high school teachers and college professors while completely disregarding the approach of those who merely went through the motions.

When I was in high school, I wrote a Pascal program to multiply polynomials and combine like terms. It was challenging for me at the time, and I couldn’t wait to show my AP Computer Science teacher. My enthusiasm was met with the response, “Why don’t you go outside and play like a normal boy?”

Fortunately, I wasn’t discouraged and remained committed to computer science all throughout college, graduate school, and my career. Over the years, I’ve taken courses with professors whose enthusiasm for the material shone through as soon as they stepped into the classroom. They would explain WHY something is important as well as how it works and would work tirelessly to get everyone to understand the material. I want to share my knowledge with my students, galvanizing their enthusiasm in the classroom, so they can be inspired to always continue learning and go out into the world and make a difference.

What do you like about teaching?

Every lecture is different, even if you’ve taught the same course for years. Someone will inevitably ask a question for which you don’t know the answer or solve a problem in a way you never considered. It’s exhilarating when a typical class meeting turns into a profound discussion.

Do you have a favorite story about your students that you tell people about?

I don’t have, per se, a favorite story. I delight in my students’ accomplishments whether it be getting a good grade in a course, passing a round of technical interviews, landing an internship or full-time job, being accepted into graduate school, winning a programming competition, or completing a research or software development project. Over the years, my students have shared with me so many success stories that I don’t know where to begin recounting them!

What are your tips for students so they can pass your classes?

For years I have been saying that computer science is not a spectator sport. Students will learn better by trying to solve problems and code solutions on their own rather than by watching someone else do it. Learning takes place while making attempts, and often a small amount of coaching will get a student back on track.

Adam Cannon

Classes this semester/year:

COMS W1002 Computing in Context in the Fall

COMS W1004 Intro to CS in the Spring

Why did you decide to become a professor?

I love teaching good students and working in a university environment. I have worked in industry as an engineer and I’ve been a full-time researcher at a national lab, but I’ve always felt most at home on a university campus.

How did you end up teaching CS?

When I was getting out of grad school, I applied mostly to math departments but the work I was doing was on the interface of discrete math, statistics, and CS, so I also applied for a couple of CS positions. I didn’t think I would be teaching for long but wanted to try it and Columbia CS seemed like a good place to do that.

What do you like about teaching?

There’s a feeling you get when your students understand something new for the first time. I’ve heard some teachers call it a “teacher’s high.” It’s a thing and I like it. Then there’s something specific to STEM and that’s bringing people in that never really considered a career in STEM before. Broadening participation in CS and more generally in STEM is something that resonates with me.

What are your tips for students so they can pass your classes?

1. Go to class

2. Do the homework.

3. Do the reading

That’s it.

Tony Dear

Classes this semester/year:

COMS W3203 Discrete Math

ORCS 4200 Data-Driven Decision Modeling

About research, are you working on anything now?

I am investigating using deep reinforcement learning for robot locomotion.

What types of projects have you worked on with your students?

In an ongoing project, students have investigated wheeled and swimming snake robots in simulation to endow them with the ability to learn how to locomote. Other projects have covered using a combination of reinforcement learning with genetic algorithms to enable humanoid robot locomotion and using real-time replanning algorithms to coordinate motion planning for multiple robots.

Why did you decide to become a professor?

I am driven by the pursuit and dissemination of knowledge. My job allows me to be colleagues with experts who are authorities in their field. And I get to interact regularly with bright students who teach me new things all the time and have the potential to do great work.

What do you like about teaching?

Guiding students from the very beginning of the course to the end and seeing them make all the little connections. Continuing to interact with talented students when they work with me as TAs and seeing them solidify their knowledge. Learning about students’ diverse backgrounds and experiences, and how they’ve applied their knowledge outside the classroom.

Do you have a favorite story about your students that you tell people about?

I’ve taught a wide array of students, some of whom are older than myself. One such student had a couple of decades of industry experience before coming back to school for his graduate degree. He was an extremely talented student in my classes and ended up TAing with me in a later semester. After graduating, he took up an adjunct professor position to teach computer science at an even broader level, partially attributing this decision to his experiences working with me.

What are your tips for students so they can pass your classes?

Different strategies work for different students. But the most successful students in my classes tend to manage their time well, can focus without getting easily distracted, and ask questions as soon as they need help.

Eleni Drinea

Classes this semester/year:

CSOR W4246 Algorithms for Data Science in the Fall

CSOR W4231 Analysis of Algorithms in the Spring

What do you like about teaching?

What I mostly enjoy about teaching are the materials I teach and my students’ enthusiasm and brilliance. I love teaching courses on algorithms where we solve a wide range of interesting problems as efficiently as possible. I am fortunate to have hard-working students, often with diverse academic backgrounds (ranging from Computer Science, and Engineering more generally, to Statistics and Physics), who ask challenging questions and can offer insights and connections with other fields.

Do you have a favorite story about your students that you tell people about?

When I was teaching the Data Science Capstone & Ethics course (ENGI 4800) in Fall 2017, a student team I was supervising worked so diligently that they finished their semester-long project on Deep Learning in just two weeks. Their industry project mentor was very impressed: He extended the scope of the project to include a heavier research component and recruited two out of the five students in the team to their company (MediaMath).

What are your tips for students so they can pass your classes?

Students should always ask questions in class (or during office hours or on EdStem) if there is anything unclear from the lectures. I encourage students to pursue and test extensively their own ideas when solving homework problems to develop confidence in their own algorithmic thinking. I also recommend working through the optional exercises I provide in every homework. Finally, I encourage students to form study groups, which offer an effective and enjoyable way to learn.

Jae Woo Lee

Classes this semester/year:

On sabbatical this year

COMS 3157 Advanced Programming

COMS 4118 Operating Systems

Why did you decide to become a professor?

I’ll answer a slightly narrower question: why I became a teaching-focused professor, rather than a research-focused one. Midway into my PhD years, the department needed an instructor for AP at the last minute, due to an unexpected departure of the instructor who was assigned to teach it. I volunteered, thinking not much more than that it wouldn’t hurt to have an actual instructor experience on my resume. This turned out to be a life-changing experience for me. I spent all my time that semester creating my version of the course and teaching it (and did zero research…). After that, I knew that teaching was what I wanted to do.

What do you like about teaching?

I don’t really have one magical part of teaching that I love the most. I like all the normal things about teaching, especially when I try my best and end up doing them well. Some of the things that make me happy: preparing a well-developed lecture and delivering it exactly as I planned it, anticipating difficult questions and being ready to answer them, developing cool assignments with crystal-clear and well-thought-out instructions, writing sample code as carefully and beautifully as I can, coming up with sharp exam questions that are not unexpected yet challenging, and of course, hearing from students that they appreciate the efforts.

Do you have a favorite story about your students that you tell people about?

I get this question from time to time, but I never have an answer ready. I do have some stories about former students and TAs, but I don’t usually share them with others. I haven’t found a good story for which I am also certain that everyone involved would be OK with it being shared…

What are your tips for students so they can pass your classes?

It’s usually pretty easy to pass AP: Keep trying until the end and don’t cheat. A related question would be: How can one do well in AP? There are many tips, and my TAs and I share them at every chance we get. I’ll say one of them that some people find surprising: Get as little help as you can manage. Don’t run to a TA office hour at the first sign of trouble. Try to fix things yourself as much as possible. Even if you end up not going anywhere and needing some TA help eventually, the time you spent thinking and debugging is never wasted.

Ansaf Salleb-Aouissi

Ansaf Salleb-Aouissi

Classes this semester/year:

COMS 4701 Artificial Intelligence

COMS 3202 Discrete Mathematics

What are your research projects at the moment?

My current research focuses on predicting and preventing adverse pregnancy outcomes like premature birth and preeclampsia.

I am also working on predicting the consequences of spine surgery like PJK (Proximal Junctional Kyphosis). On a more fundamental aspect, I study counterfactual explanations and physics law discovery from data. I strongly believe that machine learning will be a game changer in advancing science and medicine, in the next decade or so.

My overall focus is research and applications of AI for the benefit of science and education.

What type of student should apply to work on your research projects?

In general, my projects require students with backgrounds in machine learning/artificial intelligence. Whenever possible, I also involve students without experience to work on real-world problems, do data cleaning, and just learn how research goes. I am fully staffed this Fall though!

Why did you decide to become a professor?

I loved teaching ever since I was a kid. I recall mimicking my teachers, solving problems with chalk and board at home, and explaining the solution steps to an imaginary audience.

Later in high school and college, I volunteered to tutor my little neighbors and siblings. For me, teaching is a duty, a way to give back to society. It is one of the most demanding yet satisfying activities that teaches, in return, compassion and selflessness, and pushes the frontier of one’s own knowledge.

What do you like about teaching?

I like breaking up concepts to make them accessible to all students. It is for me a way to be creative about how to present things. I also genuinely care about how students perceive a new concept; I care about their self-esteem and happiness in my class.

I believe in a stress-free and inclusive teaching environment that can reach every student in class despite their difference in backgrounds and preparation.

Do you have a favorite story about your students that you tell people about?

I have many stories of students who came to class not on good terms with mathematics but ended up doing very well. Discrete math for me is the reconciliation of many young students with math that they perceived in high school as an inaccessible subject but then they discover the beauty of proofs and critical thinking, and how math is not boring but an engaging subject that sparks creativity.

What are your tips for students so they can pass your classes?

Just sit, relax, and enjoy learning the material. Make sure you do your own homework all by yourself and get the most out of it. Feel free to reach out to me anytime if you have any questions, and I will be more than happy to help. Then, not only will you pass my class, you will excel and thrive.

Nakul Verma

Nakul Verma

Classes this semester/year:

COMS 4771 Machine Learning

COMS 4774 Unsupervised Learning

What are your research projects at the moment?

I like working on various aspects of machine learning problems and high-dimensional statistics. I am especially interested in analyzing how data geometry affects the learning problem. My theoretical projects include studying how to design effective machine learning algorithms in non-Euclidean spaces. My practical projects include developing algorithms and systems to do data analysis on domain-specific structured data, such as clustering mathematical documents or discovering patterns in neuroscience data.

What type of student should apply to work on your research projects?

Students with a strong mathematical background, who are interested in unsupervised learning or topological data analysis.

Why did you decide to become a professor?

Being a professor not only provides me the freedom to study a topic in greater depth but also gives me the opportunity to learn from and interact with world-class students and faculty. I find getting to know students and helping them achieve their goals extremely rewarding.

What do you like about teaching?

Teaching lets me share my passion for the subject with my students. It is very fulfilling to see students apply what they learned in class to their ML-related projects outside of class in creative ways.

Do you have a favorite story about your students that you tell people about?

I occasionally get students who are very stressed about taking my class because of “all the math” that is involved. I once had a student who was very underprepared to take my class. They let me know their concerns at the beginning of the semester, so we developed a plan to strengthen their math skills alongside doing their regular assignments. It was a struggle, and at times I really thought that the student would not be able to succeed. But to my pleasant surprise, this student ended up doing very well in class. I was truly impressed by what they were able to accomplish over the semester. I like to share this story to remind everyone, including myself, that planning ahead, communicating, and devoting time can and does make a remarkable difference.

What are your tips for students so they can pass your classes?

I think “passing” my class is easy, but to excel I recommend getting a solid understanding of the basics and doing consistent work throughout the semester.

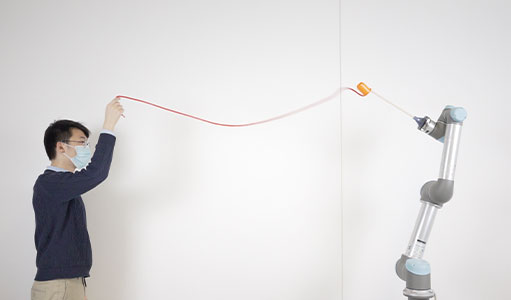

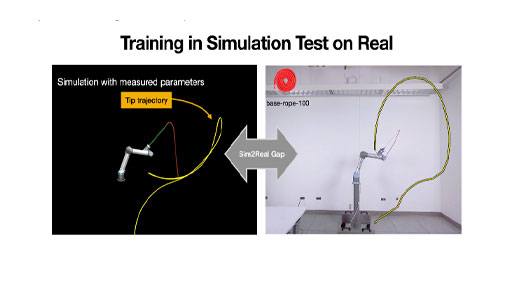

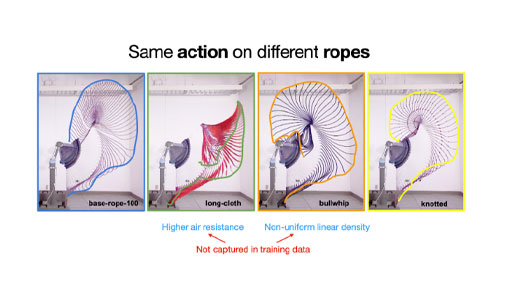

In the Columbia Artificial Intelligence and Robotics (CAIR) Lab, Cheng Chi stands in front of a robotic arm. At the end of the arm sits a yellow plastic cup. His goal at the moment is to use a piece of rope to hit the cup to the ground.