AI in Elections: How Should Society — and Engineers — Respond?

Eugene Wu and Brian Smith share their thoughts on how to ensure that emerging technologies benefit humanity.

Brian Smith received an NSF CAREER Award to develop a framework that will improve digital imagery so that blind and low-vision (BLV) individuals can better perceive and interact with visual content in the digital realm.

The third-year PhD student is creating tools to help people with vision impairments navigate the world.

Imagine walking to your office from the subway station on a Monday morning. You notice a new café on the way, so you decide to take a detour and try a latté. That sounds like a normal way to start the week, right?

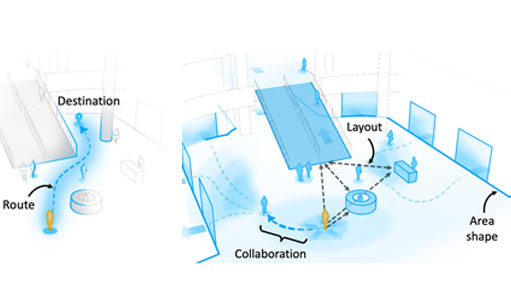

But for people who are blind or have low vision (BLV), this kind of spontaneous exploration outdoors is challenging. Current navigation assistance systems (NAS) provide turn-by-turn instructions, but they do not allow users to deviate from the shortest path to their destination or make decisions on the fly. As a result, BLV people often miss out on the freedom to go out and navigate on their own terms.

In a paper published at the ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW ’23), computer science researchers introduced the concept of “Exploration Assistance,” which is an evolution of current NASs that can support BLV people’s exploration in unfamiliar environments. Led by Gaurav Jain, the researchers investigated how NASs should be designed by interviewing BLV people, orientation and mobility instructors, and leaders of blind-serving organizations, to understand their specific needs and challenges. Their findings highlight the types of spatial information required for exploration beyond turn-by-turn instructions and the difficulties faced by BLV people when exploring alone or with the help of others.

Jain, who is advised by Assistant Professor Brian Smith, is a PhD student in the Computer-Enabled Abilities Laboratory (CEAL Lab), where researchers develop computers that help people perceive and interact with the world around them. Their paper presents the results of interviews with BLV people and other stakeholders to identify the types of spatial information BLV people need for exploration and the challenges BLV people face when exploring unfamiliar environments. The paper offers insights into the design and development of new navigation assistance systems that can support BLV people in exploring unfamiliar environments with greater spontaneity and agency.

Based on their findings, they presented several instances of NASs that support the exploration assistance paradigm and identified several challenges that need to be overcome to make these systems a reality. Jain hopes that his research will ultimately enable BLV people to experience greater agency and independence as they navigate and explore their environments. We sat down with Jain to learn more about his research, doing qualitative research, and the thought processes behind writing research papers.

This research is incredibly exciting for the blind and low vision (BLV) community, as it represents a significant step towards equal access and agency in exploring unfamiliar environments. For BLV people, the ability to navigate and explore independently is essential to daily life, and current navigation assistance systems often limit their ability to do so. By introducing the concept of exploration assistance, this research opens up new possibilities for BLV people to explore and discover their surroundings with greater spontaneity and freedom. This research has the potential to significantly improve the quality of life for BLV people and is a major development in the ongoing pursuit of accessibility and inclusion for all.

This was my first project as a PhD student in the CEAL lab. The project was initiated as a camera-based wearable NAS for BLV people, and we conducted several formative studies with BLV people.

As we progressed, we realized that there was a significant gap in the research community’s understanding of how NASs could support BLV people’s exploration in navigation. Based on these findings, we shifted our focus toward investigating this gap, and the paper I worked on was the result of this pivot. The paper is titled “I Want to Figure Things Out”: Supporting Exploration in Navigation for People with Visual Impairments.

Over the course of approximately one year, I had the opportunity to work on this project that challenged me to step outside of my comfort zone as a human-computer interaction (HCI) researcher. Before this project, my research experience had primarily focused on computer vision and deep learning. I was more at ease with HCI systems research, which involved designing, building, and evaluating tools and techniques to solve user problems.

This project, however, was a qualitative research study that aimed to gain a deeper understanding of user needs, behaviors, challenges, and attitudes toward technology through in-depth interviews, observations, and other qualitative data collection methods. To prepare for this project, I had to immerse myself in the field of accessibility and navigation assistance for BLV people and read extensively on papers that employed qualitative research methods.

Although it took some time for me to shift my mindset towards qualitative research, this project helped me become a more well-rounded researcher, as I now feel comfortable with both qualitative and systems research. Overall, this project was a significant personal and professional growth experience, as I was able to expand my research expertise and contribute to a worthy cause.

Writing the paper was a critical stage in the research process, and I approached it by first organizing my thoughts and drafting a clear outline. I started by creating an outline of the paper with section and subsection headers, accompanied by a brief summary of what I intended to discuss in each section. This process allowed me to see the overall structure of the paper and ensure that I covered all the essential elements.

Once I had a clear structure in mind, I began to tackle each section of the paper one by one, starting with the introduction and then moving on to the methods, results, and discussion sections. I iteratively refined my writing based on feedback from my advisor, lab mates, and friends.

Throughout the writing process, I also ensured that my writing was clear, concise, and easy to follow. I paid close attention to the flow of ideas and transitions between sections, making sure that each paragraph and sentence contributed to the overall argument and was well-supported by the evidence.

Overall, the process of writing the paper was challenging but rewarding. It allowed me to synthesize the research findings and present them in a compelling way, showcasing the impact of our work on the lives of BLV people.

Throughout the research process, I encountered various challenges that both surprised and tested me. Interviewing participants, in particular, proved to be an intriguing yet difficult task. Initially, I struggled to guide conversations naturally toward my research questions without leading participants toward a certain answer. However, with each interview, I became more confident and began to enjoy the process. Hearing firsthand from BLV people that our work could make a real impact on their lives was also incredibly rewarding.

Analyzing and synthesizing the interview data was another major challenge. Unlike quantitative data, conversations are often open-ended and context-dependent, making it difficult to separate my own biases from the interviewee’s responses. I spent a considerable amount of time reviewing the interview transcripts and identifying emerging themes. To facilitate this process, I leveraged tools like NVivo to better organize the interview data, and our team held several discussions to refine these themes. To ensure the accuracy of our interpretation, we sought feedback from two BLV interns who worked with us over the summer on another project.

Overall, this research experience pushed me to become more adaptable. While it presented its own unique set of challenges, I am proud to have contributed to a project that has the potential to create meaningful change in the lives of BLV people.

Yes, my experience with this research project has certainly changed my view on how to approach research. It has taught me the importance of keeping the paper in mind from the beginning of a project.

Now, I make a conscious effort to think about how I want to present my work and what story I want to tell with the research. This helps me gain more clarity on the direction of the project and how to steer it toward producing meaningful results. As part of my workflow, I now write early drafts of paper introductions even before developing any tools or systems. This allows me to zoom out from the day-to-day technical challenges and see the big picture, which is crucial in making sure that the research is both impactful and well-presented.

Writing a research paper can be a challenging task, but here are a few tips that have helped me make the process smoother:

Finally, one resource that I would totally recommend to every PhD student at Columbia is Adjunct Professor Janet Kayfetz’s class on Technical Writing. Her class is an excellent way to deeply understand research writing.

I am currently working on two exciting projects that further my research goal of developing inclusive physical and digital environments for BLV people. The first project involves enhancing the capabilities of smart streets, streets with sensors like cameras and computing power, to help BLV people navigate street intersections safely.

This project is part of the NSF Engineering Research Center for Smart Streetscapes’ application thrust. The second project is focused on making videos accessible to BLV people by creating high-quality audio descriptions available at scale.

My exposure to research during my undergrad was invaluable, as it allowed me to work on diverse projects utilizing computer vision for various applications such as biometric security and medical imaging. These experiences instilled in me a passion for the research process. It was fulfilling to be able to identify problems that I care about, explore solutions, and disseminate new knowledge.

While I knew I enjoyed research, it was the summer research fellowship at the Indian Institute of Science, where I collaborated with Professor P. K. Yalavarthy in the Medical Imaging Group, that crystallized my decision to pursue a PhD. The opportunity to work in a research lab, lead a project, and receive mentorship from an experienced advisor provided a glimpse of what a PhD program entails. I was excited by the prospect of being able to make a real-world impact by solving complex problems, and it was then that I decided to pursue a career in research.

I am interested in building Human-AI systems that embed AI technologies (e.g., computer vision) into human interactions to help BLV people better experience the world around them. My work on exploration assistance informs the design of future navigation assistance systems that enable BLV people to experience the physical world with more agency and spontaneity during navigation.

In addition to the physical world, I’ve also broadened my research focus to enhance BLV people’s experiences within the digital world. For example, I developed a system that makes it possible for BLV people to visualize the action in sports broadcasts rather than relying on other people’s descriptions of the game.

Accessibility research has traditionally focused on aiding daily-life activities and providing access to digital information for productivity and work, but there’s an increasing realization that providing access to everyday cultural experiences is equally important for inclusion and well-being.

This encompasses various forms of entertainment and recreation, such as watching TV, exploring museums, playing video games, listening to music, and engaging with social media. Ensuring that everyone has equal opportunities to enjoy these experiences is an emerging challenge. My goal is to design human-AI systems that enhance such experiences.

I was drawn to Columbia CS because of the type of problems my advisor works on. His research focused on creating systems that have a direct impact on people’s lives, where evaluating the user’s experience with the system is a key component.

This was a departure from my undergraduate research, where I focused on building systems to achieve high accuracy and efficiency. I found this user-centered approach to be extremely exciting, especially in the context of his project “RAD,” which aimed to make video games accessible to blind gamers. It was a super exciting prospect to be working on similar problems where you can see firsthand how people react to and benefit from your solutions. This still remains one of the most fulfilling aspects of HCI research for me. In the end, this is what led me to choose Columbia and work with Brian Smith.

The first thing that comes to mind is the people that I have had the pleasure of working with and meeting. I am grateful for the opportunity to learn from my advisor and appreciate the incredible atmosphere he has created for me to thrive.

Additionally, I have been fortunate enough to make some amazing friends here at Columbia who have become a vital support system. Balancing work with passions outside of work has also been important to me, and I am grateful for the chance to engage with student clubs such as the dance team, Columbia Bhangra, and meet some amazing people there as well. Overall, the community at Columbia has been a highlight for me.

One thing that students wanting to do research should know is that research involves a lot of uncertainty and ambiguity. In fact, dealing with uncertainty can be one of the most challenging aspects of research, even more so than learning the technical skills required to complete a project.

In my own experience, staying motivated about the problem statement has been key to powering through those uncertain moments. Therefore, it is important to be true to yourself about what you are really excited about and work on those problems. Ultimately, this approach can go a long way in helping you navigate your time at Columbia and make the most of your research opportunities.

CS researchers had a strong showing at the ACM CHI Conference on Human Factors in Computing Systems (CHI 2023), with seven papers and two posters accepted. The premier international conference of Human-Computer Interaction (HCI) brings together researchers and practitioners who have an overarching goal to make the world a better place with interactive digital technologies.

Memento Player: Shared Multi-Perspective Playback of Volumetrically-Captured Moments in Augmented Reality

Yimeng Liu UC Santa Barbara, Jacob Ritchie Stanford University, Sven Kratz Snap Inc., Misha Sra UC Santa Barbara, Brian A. Smith Columbia University, Andrés Monroy-Hernández Princeton University, Rajan Vaish Snap Inc.

Abstract:

Capturing and reliving memories allow us to record, understand and share our past experiences. Currently, the most common approach to revisiting past moments is viewing photos and videos. These 2D media capture past events that reflect a recorder’s first-person perspective. The development of technology for accurately capturing 3D content presents an opportunity for new types of memory reliving, allowing greater immersion without perspective limitations. In this work, we adopt 2D and 3D moment-recording techniques and build a moment-reliving experience in AR that combines both display methods. Specifically, we use AR glasses to record 2D point-of-view (POV) videos, and volumetric capture to reconstruct 3D moments in AR. We allow seamless switching between AR and POV videos to enable immersive moment reliving and viewing of high-resolution details. Users can also navigate to a specific point in time using playback controls. Control is synchronized between multiple users for shared viewing.

Towards Accessible Sports Broadcasts for Blind and Low-Vision Viewers

Gaurav Jain Columbia University, Basel Hindi Columbia University, Connor Courtien Hunter College, Xin Yi Therese Xu Pomona College, Conrad Wyrick University of Florida, Michael Malcolm SUNY at Albany, Brian A. Smith Columbia University

Abstract:

Blind and low-vision (BLV) people watch sports through radio broadcasts that offer a play-by-play description of the game. However, recent trends show a decline in the availability and quality of radio broadcasts due to the rise of video streaming platforms on the internet and the cost of hiring professional announcers. As a result, sports broadcasts have now become even more inaccessible to BLV people. In this work, we present Immersive A/V, a technique for making sports broadcasts —in our case, tennis broadcasts— accessible and immersive to BLV viewers by automatically extracting gameplay information and conveying it through an added layer of spatialized audio cues. Immersive A/V conveys players’ positions and actions as detected by computer vision-based video analysis, allowing BLV viewers to visualize the action. We designed Immersive A/V based on results from a formative study with BLV participants. We conclude by outlining our plans for evaluating Immersive A/V and the future implications of this research.

Supporting Piggybacked Co-Located Leisure Activities via Augmented Reality

Samantha Reig Carnegie Mellon University, Erica Principe Cruz Carnegie Mellon University, Melissa M. Powers New York University, Jennifer He Stanford University, Timothy Chong University of Washington, Yu Jiang Tham Snap Inc., Sven Kratz Independent, Ava Robinson Snap Inc., Brian A. Smith Columbia University, Rajan Vaish Snap Inc., Andrés Monroy-Hernández Princeton University

Abstract:

Technology, especially the smartphone, is villainized for taking meaning and time away from in-person interactions and secluding people into “digital bubbles”. We believe this is not an intrinsic property of digital gadgets, but evidence of a lack of imagination in technology design. Leveraging augmented reality (AR) toward this end allows us to create experiences for multiple people, their pets, and their environments. In this work, we explore the design of AR technology that “piggybacks” on everyday leisure to foster co-located interactions among close ties (with other people and pets). We designed, developed, and deployed three such AR applications, and evaluated them through a 41-participant and 19-pet user study. We gained key insights about the ability of AR to spur and enrich interaction in new channels, the importance of customization, and the challenges of designing for the physical aspects of AR devices (e.g., holding smartphones). These insights guide design implications for the novel research space of co-located AR.

Towards Inclusive Avatars: Disability Representation in Avatar Platforms

Kelly Mack University of Washington, Rai Ching Ling Hsu Snap Inc., Andrés Monroy-Hernández Princeton University, Brian A. Smith Columbia University, Fannie Liu JPMorgan Chase

Abstract:

Digital avatars are an important part of identity representation, but there is little work on understanding how to represent disability. We interviewed 18 people with disabilities and related identities about their experiences and preferences in representing their identities with avatars. Participants generally preferred to represent their disability identity if the context felt safe and platforms supported their expression, as it was important for feeling authentically represented. They also utilized avatars in strategic ways: as a means to signal and disclose current abilities, access needs, and to raise awareness. Some participants even found avatars to be a more accessible way to communicate than alternatives. We discuss how avatars can support disability identity representation because of their easily customizable format that is not strictly tied to reality. We conclude with design recommendations for creating platforms that better support people in representing their disability and other minoritized identities.

ImageAssist: Tools for Enhancing Touchscreen-Based Image Exploration Systems for Blind and Low-Vision Users

Vishnu Nair Columbia University, Hanxiu ‘Hazel’ Zhu Columbia University, Brian A. Smith Columbia University

Abstract:

Blind and low vision (BLV) users often rely on alt text to understand what a digital image is showing. However, recent research has investigated how touch-based image exploration on touchscreens can supplement alt text. Touchscreen-based image exploration systems allow BLV users to deeply understand images while granting a strong sense of agency. Yet, prior work has found that these systems require a lot of effort to use, and little work has been done to explore these systems’ bottlenecks on a deeper level and propose solutions to these issues. To address this, we present ImageAssist, a set of three tools that assist BLV users through the process of exploring images by touch — scaffolding the exploration process. We perform a series of studies with BLV users to design and evaluate ImageAssist, and our findings reveal several implications for image exploration tools for BLV users.

Improving Automatic Summarization for Browsing Longform Spoken Dialog

Daniel Li Columbia University, Thomas Chen Microsoft, Alec Zadikian Google, Albert Tung Stanford University, Lydia B. Chilton Columbia University

Abstract:

Longform spoken dialog delivers rich streams of informative content through podcasts, interviews, debates, and meetings. While production of this medium has grown tremendously, spoken dialog remains challenging to consume as listening is slower than reading and difficult to skim or navigate relative to text. Recent systems leveraging automatic speech recognition (ASR) and automatic summarization allow users to better browse speech data and forage for information of interest. However, these systems intake disfluent speech which causes automatic summarization to yield readability, adequacy, and accuracy problems. To improve navigability and browsability of speech, we present three training agnostic post-processing techniques that address dialog concerns of readability, coherence, and adequacy. We integrate these improvements with user interfaces which communicate estimated summary metrics to aid user browsing heuristics. Quantitative evaluation metrics show a 19% improvement in summary quality. We discuss how summarization technologies can help people browse longform audio in trustworthy and readable ways.

Social Dynamics of AI Support in Creative Writing

Katy Ilonka Gero Columbia University, Tao Long Columbia University, Lydia Chilton Columbia University

Abstract:

Recently, large language models have made huge advances in generating coherent, creative text. While much research focuses on how users can interact with language models, less work considers the social-technical gap that this technology poses. What are the social nuances that underlie receiving support from a generative AI? In this work we ask when and why a creative writer might turn to a computer versus a peer or mentor for support. We interview 20 creative writers about their writing practice and their attitudes towards both human and computer support. We discover three elements that govern a writer’s interaction with support actors: 1) what writers desire help with, 2) how writers perceive potential support actors, and 3) the values writers hold. We align our results with existing frameworks of writing cognition and creativity support, uncovering the social dynamics which modulate user responses to generative technologies.

AngleKindling: Supporting Journalistic Angle Ideation with Large Language Models

Savvas Petridis Columbia University, Nicholas Diakopoulos Northwestern University, Kevin Crowston Syracuse University, Mark Hansen Columbia University, Keren Henderson Syracuse University, Stan Jastrzebski Syracuse University, Jeffrey V. Nickerson Stevens Institute of Technology, Lydia B. Chilton Columbia University

Abstract:

News media often leverage documents to find ideas for stories, while being critical of the frames and narratives present. Developing angles from a document such as a press release is a cognitively taxing process, in which journalists critically examine the implicit meaning of its claims. Informed by interviews with journalists, we developed AngleKindling, an interactive tool which employs the common sense reasoning of large language models to help journalists explore angles for reporting on a press release. In a study with 12 professional journalists, we show that participants found AngleKindling significantly more helpful and less mentally demanding to use for brainstorming ideas, compared to a prior journalistic angle ideation tool. AngleKindling helped journalists deeply engage with the press release and recognize angles that were useful for multiple types of stories. From our findings, we discuss how to help journalists customize and identify promising angles, and extending AngleKindling to other knowledge-work domains.

PopBlends: Strategies for Conceptual Blending with Large Language Models

Sitong Wang Columbia University, Savvas Petridis Columbia University, Taeahn Kwon Columbia University, Xiaojuan Ma Hong Kong University of Science and Technology, Lydia B. Chilton Columbia University

Abstract:

Pop culture is an important aspect of communication. On social media people often post pop culture reference images that connect an event, product, or other entity to a pop culture domain. Creating these images is a creative challenge that requires finding a conceptual connection between the users’ topic and a pop culture domain. In cognitive theory, this task is called conceptual blending. We present a system called PopBlends that automatically suggests conceptual blends. The system explores three approaches that involve both traditional knowledge extraction methods and large language models. Our annotation study shows that all three methods provide connections with similar accuracy, but with very different characteristics. Our user study shows that people found twice as many blend suggestions as they did without the system, and with half the mental demand. We discuss the advantages of combining large language models with knowledge bases for supporting divergent and convergent thinking.

Elias Bareinboim, Brian Smith, and Shuran Song join the department.

Elias Bareinboim

Associate Professor, Computer Science

Director, Causal Artificial Intelligence Lab

Member, Data Science Institute

PhD, Computer Science, University of California, Los Angeles (UCLA), 2014

BS & MS, Computer Science, Federal University of Rio de Janeiro (UFRJ), 2007

Elias Bareinboim’s research focuses on causal and counterfactual inference and its application to data-driven fields in the health and social sciences as well as artificial intelligence and machine learning. His work was the first to propose a general solution to the problem of “causal data fusion,” providing practical methods for combining datasets generated under heterogeneous experimental conditions and plagued with various biases. This theory and methods constitute an integral part of the discipline called “causal data science,” which is a principled and systematic way of performing data analysis with the goal of inferring cause and effect relationships.

More recently, Bareinboim has been investigating how causal inference can help to improve decision-making in complex systems (including classic reinforcement learning settings), and also how to construct human-friendly explanations for large-scale societal problems, including fairness analysis in automated systems.

Bareinboim is the recipient of the NSF Faculty Early Career Development (CAREER) Award, IEEE AI’s 10 to Watch, and a number of best paper awards. Later this year, he will be teaching a causal inference class intended to train the next generation of causal inference researchers and data scientists. Bareinboim directs the Causal Artificial Intelligence Lab, which currently has open positions for Ph.D. students and Postdoctoral scholars.

Brian Smith

Assistant Professor

PhD, Computer Science, Columbia University, 2018

MPhil, Computer Science, Columbia University, 2015

MS, Computer Science, Columbia University, 2011

BS, Computer Science, Columbia University, 2009

Brian Smith’s interests lie in human-computer interaction (HCI) and creating computers that can help people better experience the world. His past research on video games for the visually impaired was featured in Quartz, TechCrunch, and the Huffington Post, among others.

Smith has spent the last year at Snap Research (Snap is best known for Snapchat) developing new concepts in human–computer interaction (HCI), games, social computing, and augmented reality. He will continue to work on projects with Snap while at Columbia.

He comes back to the department as an assistant professor and is set to teach a class on user interface design this fall. The class had a waitlist of 235 students. Smith shared that back when he was a student, there were only 35 students in the class he was enrolled in. “There is definitely more interest in computer science now compared to even five years ago,” he said.

Smith hopes to start an HCI group and is looking for PhD students. He encourages students from underrepresented groups to apply.

Shuran Song

Assistant Professor

PhD, Computer Science, Princeton University, 2018

MS, Computer Science, Princeton University, 2015

BEng, Computer Engineering, Hong Kong University of Science and Technology, 2013

Shuran Song is interested in artificial intelligence with an emphasis on computer vision and robotics. The goal of her research is to enable machines to perceive and understand their environment in a way that allows them to intelligently operate and assist people in the physical world.

Previously, Song worked at Google Brain Robotics as a researcher and developed TossingBot, a robot that learns how to accurately throw arbitrary objects through self-supervised learning.

This fall, she is teaching a seminar class on robot learning. Song currently has one PhD student who is working on active perception — enabling robots to learn from their interactions with the physical world, and autonomously acquire the perception and manipulation skills necessary to execute complex tasks. She is looking for more students who are interested in machine learning for vision and robotics.

President Bollinger announced that Columbia University along with many other academic institutions (sixteen, including all Ivy League universities) filed an amicus brief in the U.S. District Court for the Eastern District of New York challenging the Executive Order regarding immigrants from seven designated countries and refugees. Among other things, the brief asserts that “safety and security concerns can be addressed in a manner that is consistent with the values America has always stood for, including the free flow of ideas and people across borders and the welcoming of immigrants to our universities.”

This recent action provides a moment for us to collectively reflect on our community within Columbia Engineering and the importance of our commitment to maintaining an open and welcoming community for all students, faculty, researchers and administrative staff. As a School of Engineering and Applied Science, we are fortunate to attract students and faculty from diverse backgrounds, from across the country, and from around the world. It is a great benefit to be able to gather engineers and scientists of so many different perspectives and talents – all with a commitment to learning, a focus on pushing the frontiers of knowledge and discovery, and with a passion for translating our work to impact humanity.

I am proud of our community, and wish to take this opportunity to reinforce our collective commitment to maintaining an open and collegial environment. We are fortunate to have the privilege to learn from one another, and to study, work, and live together in such a dynamic and vibrant place as Columbia.

Sincerely,

Mary C. Boyce

Dean of Engineering

Morris A. and Alma Schapiro Professor