Year: 2017

Henning Schulzrinne named to North American Numbering Council

Henning Schulzrinne, the Julian Clarence Levi Professor of Mathematical Methods and Computer Science at Columbia University, has been appointed to the North American Numbering Council (NANC), a federal committee that advises the Federal Communications Commission (FCC) on the efficient and impartial administration of telephone numbering resources in North America. Among other responsibilities, the NANC recommends creating new area codes when demand depletes the supply of numbers, and it advises on policy and technical issues involving numbering resources. On the Council, he hopes to accelerate the transition to a more capable, Internet-based system for assigning and managing telephone numbers, one that would make it possible to prevent caller ID spoofing and to port numbers nationwide.

Schulzrinne, who last month completed his second term as Chief Technology Officer at the FCC, has worked with the FCC in a number of positions over the past seven years, helping shape public policy and providing guidance on technology and engineering issues. He played a major role in the FCC’s decision to require mobile carriers to let customers contact 911 by text message. He has also been active in developing technology to limit phone spam (“robocalls”) and to enable relay services for people who are deaf or hard of hearing.

As a researcher in applied networking, Schulzrinne is particularly known for his contributions to developing the Session Initiation Protocol (SIP) and the Real-Time Transport Protocol (RTP), the key protocols that enable Voice-over-IP (VoIP) and other multimedia applications. Each is now an Internet standard, and together they have had an immense impact on telecommunications, both by greatly reducing consumer costs and by providing a flexible alternative to the traditional, expensive public switched telephone network.

Last year Schulzrinne received the 2016 IEEE Internet Award for “formative contributions to the design and standardization of Internet multimedia protocols and applications.” He was named an ACM Fellow in 2015, the same year he received an Outstanding Service Award from the Internet Technical Committee (ITC), of which he was the founding chair. In 2013, Schulzrinne was inducted into the Internet Hall of Fame. Other notable awards include the New York City Mayor’s Award for Excellence in Science and Technology and the VON Pioneer Award. Active in serving the broader technology community, Schulzrinne is a past member of the Board of Governors of the IEEE Communications Society and a former vice chair of ACM SIGCOMM. He has served on the editorial boards of several key publications, chaired important conferences, and published more than 250 journal and conference papers and more than 86 Internet Requests for Comments (RFCs). He was recently appointed to the Intelligent Infrastructure Task Force of the Computing Community Consortium.

Schulzrinne continues to work on VoIP and other multimedia and networking applications. He is currently investigating an overall architecture for the Internet of Things, including new user-friendly means of authentication, as well as ways to protect the electric grid against cyberattacks.

Schulzrinne received his undergraduate degree in economics and electrical engineering from the Darmstadt University of Technology, Germany, his MSEE degree as a Fulbright scholar from the University of Cincinnati, Ohio, and his PhD from the University of Massachusetts in Amherst, Massachusetts.

Posted 11/20/2017

Computer Science and Interdisciplinary Collaboration at Columbia: Projects in Two Labs

Interdisciplinary collaborations are needed today because the hard problems—in medicine, environmental science, biology, security and privacy, and software engineering—are interdisciplinary. Too complex to fit within one or even two disciplines, they require the collective efforts of those with different types of expertise and different perspectives.

Computer scientists are in high demand as collaborators, and not just because the computer is indispensable in virtually all fields today. The computational, or algorithmic, approach—where a task is systematically decomposed into its component parts—is itself a powerful problem-solving technique that transfers across disciplines. New techniques in machine learning, natural language processing, robotics, computer graphics, visualization, and augmented reality make it possible to present and think about information in ways not possible before.

The benefits flow both ways. Collaborations offer computer scientists the chance to work on new problems they might not otherwise consider. In some cases, collaborations can change the direction of their own research.

“The most successful collaborations revolve around problems that interest everyone involved,” says Julia Hirschberg, chair of Columbia’s Computer Science Department. She adds that collaborations often require time and negotiation. “It might take a while to find out what’s interesting to you, what’s interesting to them, but in the end you figure out how to make the collaboration relevant to both of you.”

In higher education, more effort is going into promoting faculty collaborations across departments while also preparing students to reach across disciplinary boundaries. At Columbia, the Data Science Institute (DSI) brings together researchers from 11 of Columbia’s 20 schools—including the School of International and Public Affairs, Columbia University Medical Center, and Columbia Law School—to work on problems in smart cities, new media, health analytics, financial analytics, and cybersecurity. Fully 80% of Columbia’s computer science faculty are DSI members.

Other interdisciplinary efforts are supported by provost awards intended to encourage collaborations among schools and departments.

The Computer Science Department also plays a role, whether through individuals informally connecting people or through more structured programs such as the NSF-funded IGERT Data to Solutions program, which trains PhD students to take a multidisciplinary approach to integrating data collections. As part of its mission, IGERT sponsors talks in which researchers from outside the department present interesting problems from their own fields.

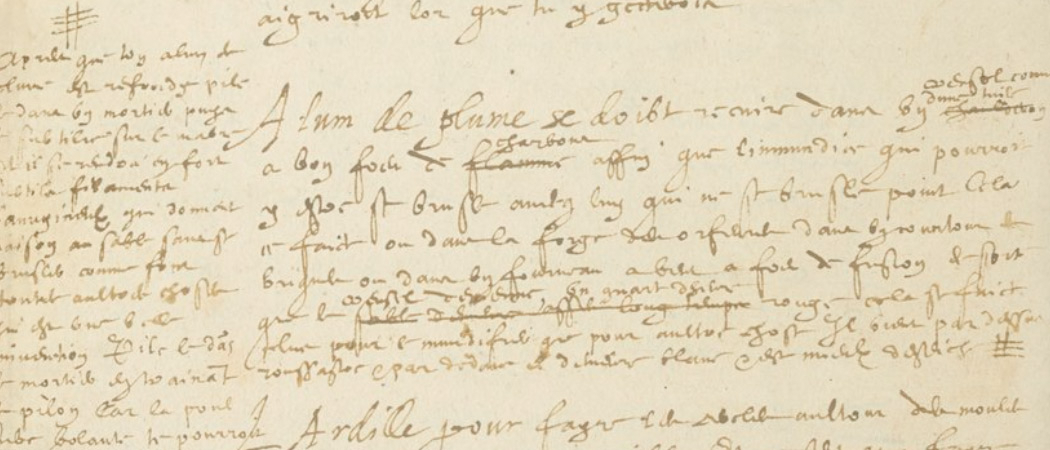

In spring 2015, one of those speakers was Pamela Smith, a professor specializing in the history of science and early modern European history, with particular attention to historical crafts and techniques. Her talk was on the Making and Knowing Project, which seeks to replicate 16th-century methods for constructing pigments, colored metals, coins, jewelry, guns, and decorative objects.

After the talk, Hirschberg suggested Smith contact Steven Feiner, director of the Computer Graphics and User Interfaces Lab.

Updating the reading experience

For the Making and Knowing Project, Smith and her students recreate historical techniques by following recipes contained in a one-of-a-kind, 340-page, 16th-century French manuscript. (The original, BnF Ms. Fr. 640, is housed in the Bibliothèque Nationale in Paris. Selected entries of the ongoing English translation are available online.) It’s a process of trial and error: the recipes have no precise measurements and often skip details, so it can take several iterations to get a recipe right. Because even early attempts that “fail” can be highly informative, Smith has her students document every step and record any objects that are produced.

The result is a substantial collection of artifacts, including photos, videos, texts, translations, and objects recreated using 16th-century technology. The challenge for Smith is making this content easily accessible to others.

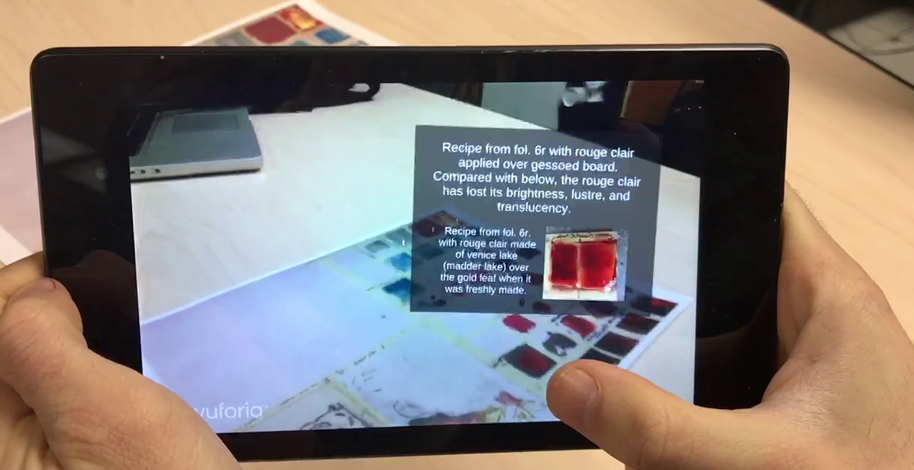

Steven Feiner works in the decidedly 21st century areas of augmented reality, virtual reality, and 3D user interfaces. Together he and Smith are collaborating on how to use the technologies from Feiner’s lab to effectively present historical content in a way that is dynamic and convincing to people without access to the original manuscript.

Their joint solution is to make virtual representations of the content viewable in 3D space and, when available, in the context of physical copies of artifacts from Smith’s lab, all seen through a smart device running the appropriate software. The content (texts, images, videos, and 3D simulations of objects) is naturally organized around the recipes.

The devices used by Feiner’s lab range from ordinary smartphones and tablets to devices specifically designed to support augmented reality, in which virtual content can be experienced as if it were physically present in the real world. These higher-end devices include smartphones with Google’s Tango technology and the Microsoft HoloLens stereoscopic head-worn computer, both of which have sensors that are used to model the surrounding world. To view the combination of virtual and physical content, a user looks through a smart device; with a smartphone or tablet, the physical world is seen through the device’s camera. Software on the device blends the virtual content with the user’s physical environment, taking into account the device’s current position and orientation in space, so that the virtual content appears to actually exist in the real environment. If the virtual content includes a 3D object, the user can move around the object to see it from any perspective. Virtual content can be attached to physical objects, such as a printed photo, or even to Smith’s lab itself, either the physical room or a virtual model that the researchers are creating.
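
The step in which software accounts for the device’s position and orientation can be illustrated with a minimal sketch. It assumes a simplified pose (a device position plus a single yaw rotation about the vertical axis, whereas real AR devices track full 3D orientation) and a pinhole camera with hypothetical focal length and image center. The function names are illustrative only and are not part of any AR framework’s API.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def world_to_camera(point: Vec3, device_pos: Vec3, yaw: float) -> Vec3:
    """Express a world-space point in the device's camera frame.

    Simplified, hypothetical pose: the device sits at device_pos and is
    rotated by `yaw` radians about the vertical (y) axis. At yaw = 0 it
    looks along +z. Real AR devices track full 3D orientation.
    """
    # Shift the origin to the device's position.
    dx, dy, dz = (p - d for p, d in zip(point, device_pos))
    # Undo the device's rotation: rotate by -yaw about the y axis.
    c, s = math.cos(-yaw), math.sin(-yaw)
    return (c * dx + s * dz, dy, -s * dx + c * dz)


def project(p_cam: Vec3, focal: float = 500.0,
            cx: float = 320.0, cy: float = 240.0) -> Optional[Tuple[float, float]]:
    """Pinhole projection to pixel coordinates (hypothetical intrinsics)."""
    x, y, z = p_cam
    if z <= 0:
        return None  # behind the camera, so nothing is drawn
    # Image rows grow downward, hence the minus sign on y.
    return (cx + focal * x / z, cy - focal * y / z)


# A virtual object anchored at a fixed world position (e.g., above a printed photo).
anchor = (0.5, 0.0, 2.0)
# Re-run for every frame with the latest tracked pose.
for pos, yaw in [((0.0, 0.0, 0.0), 0.0), ((0.5, 0.0, 1.0), 0.0)]:
    print(project(world_to_camera(anchor, pos, yaw)))
```

As the user walks toward the anchor, the same world point lands at a different pixel; repeating this transform each frame with the tracked pose is what makes the virtual object appear to stay put in the room.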

The two labs are working together to convert Smith’s artifacts into digital content that can be linked together and arranged in 3D in ways that elucidate their interrelationships. Both sides benefit as learning occurs in the two labs: Smith and her students gain new tools, and the digital proficiency to use them, to better study their own field; Feiner and his students get the chance to work on a problem they might never have considered and to better understand how to present and interact with information effectively in 3D, one of their lab’s themes. As the project progresses, Feiner and his students will take what they learn from working with Smith’s students to further improve the tools and make them more general-purpose, so that others can adapt them for completely different projects.

More information about the collaboration can be found on the Making and Knowing Project’s Digital page, and an extensive photo repository on the project’s Flickr account shows lab reconstruction experiments. The latest project and class updates are posted to Twitter.

It’s just one collaboration in Feiner’s lab. In another, the new media artist Amir Baradaran is incorporating augmented reality technology into two art pieces, one exploring the parallels between code and poetry, and the other examining the implications for authorship when audience members can immerse themselves in an artwork and affect its content. Such issues don’t necessarily enter the thinking of computer scientists focused on the technical aspects of augmented reality. Says Feiner, “Having Baradaran here in the lab is a chance for us to work with others who bring different perspectives. It makes us better engineers if we’re more cognizant of how these technologies will change the way people interact with the world.”

This project in particular points to another reason for computer scientists to seek collaborations: With technology so ingrained in modern life, others from outside computer science, especially those focused on aesthetic, ethical, and communication issues, can contribute to making technology more human-oriented and easier to use in everyday life.

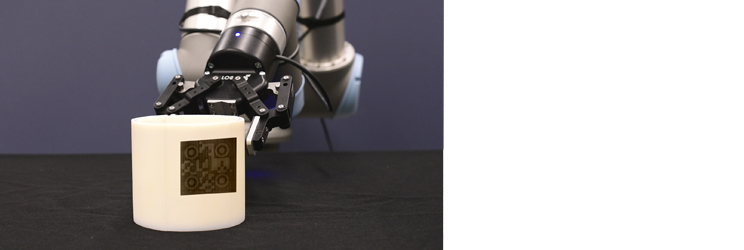

A robotic grasping system to assist people with severe upper extremity disabilities

Robotics is by nature interdisciplinary, requiring expertise in computer science and in electrical and mechanical engineering. Peter Allen, director of Columbia’s Robotics Group, is often approached by people seeking technical solutions; over the years, he has collaborated on a broad range of projects in different disciplines, from art and archaeology—where he helped construct 3D models of archaeological sites from 2D images—to biology and medicine, where he works with colleagues at the medical school to develop surgical robots.

One particularly fruitful collaboration began after a colleague encouraged Allen to attend a talk in which Sanjay Joshi (Professor of Mechanical and Aerospace Engineering at UC Davis) described a noninvasive brain-muscle-computer interface that could be used to control two dimensions of space.

That rang a bell for Allen. In robotic grasping, one difficulty is constraining choices. Jointed digits can fold and bend into an almost unlimited number of positions to grasp an object, which means an almost unfathomable number of micro-decisions: Which fingers should be used? How should each one bend and be positioned? Where on the object should the digits make contact? After much research into simplifying robotic motions, Allen was eventually able to break the task down into two motions, or two parameters, that could be combined to achieve 80% of grasps.

Joshi’s interface could also control two parameters: two dimensions of space. Out of this overlap, and working with Joel Stein of Columbia University Medical Center, who specializes in physical medicine and rehabilitation, the three are now developing a robotic grasping system to help people with severe upper extremity disabilities pick up and manipulate objects. (A video of the system is available online.)
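
The article does not spell out how Allen’s two parameters are defined. In the grasping literature, one well-known way to express the idea is to describe every hand posture as an average posture plus a weighted combination of a few coordinated joint motions (sometimes called synergies or eigengrasps). The sketch below assumes that formulation, with a hypothetical four-joint hand and made-up synergy vectors, to show why a two-dimensional control signal can be enough to steer a many-jointed hand.

```python
from typing import List

# Hypothetical 4-joint hand; every number here is illustrative (radians).
MEAN_POSTURE = [0.3, 0.3, 0.3, 0.3]
SYNERGY_1 = [0.5, 0.5, 0.5, 0.5]    # assumed: all joints close together
SYNERGY_2 = [0.4, -0.4, 0.4, -0.4]  # assumed: alternate joints flex vs. extend
JOINT_LIMITS = [(0.0, 1.5)] * 4     # assumed (min, max) angle per joint


def hand_posture(a1: float, a2: float) -> List[float]:
    """Map two control parameters to a full set of joint angles.

    Each joint angle is the mean posture plus a1 times the first synergy
    plus a2 times the second, clamped to that joint's limits.
    """
    posture = []
    for mean, e1, e2, (lo, hi) in zip(MEAN_POSTURE, SYNERGY_1, SYNERGY_2, JOINT_LIMITS):
        angle = mean + a1 * e1 + a2 * e2
        posture.append(min(max(angle, lo), hi))
    return posture


# Sweep a1 from more open to more closed while holding a2 fixed.
for a1 in (-0.5, 0.0, 1.0, 2.0):
    print(a1, [round(q, 2) for q in hand_posture(a1, 0.0)])
```

Because the whole hand is driven by just `a1` and `a2`, any input that provides two continuous values, such as a two-dimensional interface like Joshi’s, could in principle steer it; the clamping keeps every commanded angle within the (assumed) joint limits.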

The system interprets and acts on an intent to grasp. The user signals intent by activating a small muscle behind the ear, the posterior auricular. (This muscle, which most people can be trained to control, is driven by nerves that come directly from the brain stem rather than from the spinal cord, so even individuals with the most severe spinal cord paralysis can still use it.)

A noninvasive sensor (for sEMG, or surface electromyography) placed behind a patient’s ear detects activation of the posterior auricular, and from there the robotic system carries out a series of automated tasks, culminating in selecting the most appropriate grasp from a collection of preplanned grasps to pick up the desired object.
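
The article does not describe the project’s signal processing. A common, simple way to turn a raw sEMG trace into an on/off activation event is to rectify the signal, smooth it into an envelope, and require the envelope to stay above a threshold for a minimum time. The sketch below assumes that approach; the window length, threshold, and minimum duration are hypothetical values in arbitrary units, not parameters from the project.

```python
from collections import deque
from typing import List, Sequence


def detect_activations(samples: Sequence[float], window: int = 50,
                       threshold: float = 0.2, min_samples: int = 25) -> List[int]:
    """Return the sample index at which each sustained activation begins.

    Assumed pipeline (hypothetical parameters): full-wave rectify, smooth
    with a moving average over `window` samples, and report an onset once
    the envelope has stayed above `threshold` for `min_samples` samples.
    """
    recent = deque()
    running_sum = 0.0
    run_length = 0
    onsets = []
    for i, x in enumerate(samples):
        r = abs(x)  # full-wave rectification
        recent.append(r)
        running_sum += r
        if len(recent) > window:
            running_sum -= recent.popleft()
        envelope = running_sum / len(recent)  # moving-average envelope
        if envelope > threshold:
            run_length += 1
            if run_length == min_samples:
                onsets.append(i - min_samples + 1)  # first sample of the run
        else:
            run_length = 0
    return onsets


# Synthetic trace: rest, a sustained contraction, rest, then a brief twitch.
trace = [0.0] * 200 + [1.0, -1.0] * 100 + [0.0] * 200 + [1.0] * 5 + [0.0] * 100
print(detect_activations(trace))
```

Requiring a sustained crossing, rather than reacting to any single sample above the threshold, is what keeps the brief twitch at the end of the synthetic trace from being read as an intent to grasp.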

The purpose of the project is to restore the ability to carry out simple daily tasks to people with the most limited motor function, including those with tetraplegia, multiple sclerosis, stroke, or amyotrophic lateral sclerosis (ALS).

“Interdisciplinary work is so important for the future of robotics, especially for human-robot interfaces,” says Allen. “If robots are capable and ubiquitous, humans have to figure out how to interact with them, whether through voice or gestures or brain interfaces—it’s an extremely complex issue. But the rewards can be very high. It’s exciting to see your technology in real clinical use where it can impact and help others.”

In this case, the complexity requires the collective efforts of researchers with expertise in signal processing, robotic grasping, and rehabilitative medicine.

Each collaboration is different, of course, but common to all successful collaborations is a shared purpose in solving a problem while at the same time having the challenge of extending knowledge in one’s own field. In the best cases, the benefits extend far beyond those working in the collaboration.

Posted 11/16/2017

– Linda Crane

Columbia team earns 2nd place at NYU cybersecurity applied research competition

“NEZHA: Efficient Domain-Independent Differential Testing” won second place at the 14th Cyber Security Awareness Week (CSAW). Organized by NYU, CSAW is the world’s largest student-run cybersecurity event, featuring competitions, workshops, and industry events.

Changxi Zheng part of team creating a cheap way to boost and secure Wi-Fi networks

Researchers create an algorithm that computes a reflector shape that boosts signals for desired indoor areas while weakening them elsewhere. The reflector can be 3D-printed for $35. Limiting signal coverage also helps prevent nearby cyberattacks.

A New Way to Find Bugs in Self-Driving AI Could Save Lives

What is the cyber kill chain? Why it’s not always the right approach to cyber attacks

Ansaf Salleb-Aouissi to give keynote at Artificial Intelligence & Inclusion Symposium

The symposium, to be held in Rio de Janeiro, November 8-10, examines the enormous promise of AI technologies as well as the risk that uneven access to AI may amplify digital inequalities across the world.

Phone sensors can save lives by revealing what floor you are on

Dingzeyu Li, lead author of best paper at UIST, selected to UIST Doctoral Symposium

Dingzeyu Li was one of eight PhD students selected for the Doctoral Symposium at the ACM Symposium on User Interface Software and Technology (UIST), held last month in Quebec City. Li is a fifth-year PhD candidate advised by Changxi Zheng.

The daylong symposium brought together eight doctoral candidates, who presented their ongoing work to their peers and to a panel of faculty members who gave feedback on both the material and the manner of presentation. An informal setting allowed students to meet one another and learn about one another’s research. Students selected for the symposium received a grant covering travel, hotel, and registration.

“Preparing for this symposium gave me an opportunity to rethink my past research projects and connect the dots together,” says Li. “I had a lot of inspiring conversations with the diverse faculty panel and students. It is also a great honor to present and share my research to the HCI (human computer interaction) community.”

Li presented his thesis work on incorporating physics-based simulation into two relatively new research areas: computer-generated acoustics and computational fabrication. The work has particular implications for animation, immersive environments, and fabricating and tagging 3D objects.

In projects involving sound simulation, Li has worked to closely integrate visual and audible components so that one is a natural extension of the other. Algorithms and tools he developed automatically generate sounds from the animation itself, rather than relying on pre-recorded sounds created separately from the animation. For virtual reality environments, he has developed a real-time sound engine that responds to user interactions with realistic, synchronized 3D audio, making the virtual environment more convincing.

Li’s research into simulated sound is also enabling new design tools for 3D printing. In a well-received paper from last year, Li and his coauthors describe a computational approach for designing acoustic filters, or voxels, that fit within an arbitrary 3D shape. At a fundamental level, acoustic voxels demonstrate the connection between shape and sound; at a practical level, they allow for uniquely identifying 3D-printed objects through each object’s acoustic properties. For this same work, which pushed the boundaries of 3D printing, Li was named a recipient of the Shapeways Fall 2016 Educational Grant.

In his computational fabrication research, Li used physics-based simulation of light scattering to enable the AirCode system, which uniquely identifies printed objects through carefully designed air pockets embedded just below an object’s surface. Manipulating the size and configuration of these air pockets causes light to scatter beneath the surface in a distinctive way that can be exploited to encode information. Information encoded this way allows fabricated objects to be tracked, linked to online content, tagged with metadata, and embedded with copyright or licensing information. Because they sit beneath the surface, AirCode tags are invisible to the human eye but easily readable using off-the-shelf digital cameras and projectors.
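
The article does not describe how AirCode lays out its air pockets or decodes them, and real decoding relies on imaging subsurface light scattering. As a loose illustration of the underlying idea of storing data as a pattern of present or absent features, the toy sketch below writes bytes into a grid of hypothetical pocket/no-pocket cells, with one even-parity cell per row so that a misread row can be detected. It is not AirCode’s actual scheme.

```python
from typing import List

Grid = List[List[bool]]


def encode_bits(payload: bytes, cols: int = 8) -> Grid:
    """Lay out payload bits row by row; True marks a hypothetical air pocket.

    Each row ends with one even-parity cell so a reader can flag a misread
    row. Toy scheme for illustration only, not AirCode's real layout.
    """
    bits = [((byte >> (7 - k)) & 1) == 1 for byte in payload for k in range(8)]
    grid = []
    for start in range(0, len(bits), cols):
        row = bits[start:start + cols]
        row += [False] * (cols - len(row))  # pad a short final row
        row.append(sum(row) % 2 == 1)       # parity cell makes each row's count even
        grid.append(row)
    return grid


def decode_bits(grid: Grid, n_bytes: int) -> bytes:
    """Check each row's parity, then reassemble the first n_bytes bytes."""
    bits = []
    for i, row in enumerate(grid):
        data, parity = row[:-1], row[-1]
        if (sum(data) % 2 == 1) != parity:
            raise ValueError(f"parity mismatch in row {i}")
        bits.extend(data)
    out = bytearray()
    for b in range(n_bytes):
        value = 0
        for bit in bits[8 * b:8 * b + 8]:
            value = (value << 1) | int(bit)
        out.append(value)
    return bytes(out)


grid = encode_bits(b"ID42")
print(decode_bits(grid, 4))
```

A single parity cell per row can only detect an odd number of misread cells in that row, not correct them; a practical tag would need stronger error handling, which this toy deliberately leaves out.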

The AirCode paper, which Li wrote with coauthors Avinash S. Nair, Shree K. Nayar, and Changxi Zheng, was named best paper at UIST.

Li expects to graduate in 2018 and looks forward to exciting future research challenges in audio/visual computing and computational design tools. He is also interested in integrating his research into real-world products and applications.

Dingzeyu Li entered the PhD program in Columbia’s Computer Science Department in 2013 after graduating in the top 1% of his class at the Hong Kong University of Science and Technology (HKUST), where he received a Bachelor of Engineering in Computer Engineering.

Posted 11/02/2017

– Linda Crane