Dingzeyu Li, lead author of best paper at UIST, selected to UIST Doctoral Symposium


Dingzeyu Li was one of eight PhD students selected for the Doctoral Symposium at the ACM Symposium on User Interface Software and Technology (UIST), held last month in Quebec City. Li is a fifth-year PhD candidate advised by Changxi Zheng.

The daylong symposium brought together the eight doctoral candidates, who presented their ongoing work to their peers and to a faculty panel that gave feedback on both the content and the delivery of each presentation. An informal setting allowed students to meet one another and learn about one another’s research. Students selected for the symposium received a grant covering travel, hotel, and registration costs.

“Preparing for this symposium gave me an opportunity to rethink my past research projects and connect the dots together,” says Li. “I had a lot of inspiring conversations with the diverse faculty panel and students. It is also a great honor to present and share my research to the HCI (human-computer interaction) community.”

Li presented his thesis work on incorporating physics-based simulation into two relatively new research areas: computer-generated acoustics and computational fabrication. The work has particular implications for animation, immersive environments, and fabricating and tagging 3D objects.

In projects involving sound simulation, Li has worked to closely integrate visual and audible components so that one is a natural extension of the other. Algorithms and tools he developed automatically generate sounds from the animation itself, rather than relying on pre-recorded sounds created separately from the animation. For virtual reality environments, he has developed a real-time sound engine that responds to user interactions with synchronized 3D audio, making the virtual environment more realistic.

Li’s research into simulated sound is also enabling new design tools for 3D printing. In a well-received paper from last year, Li and his coauthors describe a computational approach for designing acoustic filters, assembled from modular units called acoustic voxels, that fit within an arbitrary 3D shape. At a fundamental level, acoustic voxels demonstrate the connection between shape and sound; at a practical level, they allow 3D-printed objects to be uniquely identified by their acoustic properties. For this same work, which pushed the boundaries of 3D printing, Li was named a recipient of the Shapeways Fall 2016 Educational Grant.

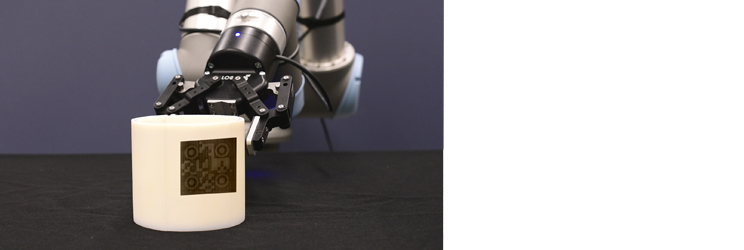

In his computational fabrication research, Li used physics-based simulation of light scattering to enable the AirCode system, which uniquely identifies printed objects through carefully designed air pockets embedded just below an object’s surface. Varying the size and configuration of these air pockets causes light to scatter beneath the surface in a distinctive pattern that can be used to encode information. Information encoded this way allows fabricated objects to be tracked, linked to online content, tagged with metadata, and embedded with copyright or licensing information. Because they lie beneath the surface, AirCode tags are invisible to the human eye yet easily read using off-the-shelf digital cameras and projectors.

The AirCode paper, which Li wrote with coauthors Avinash S. Nair, Shree K. Nayar, and Changxi Zheng, was named best paper at UIST.

Li expects to graduate in 2018 and looks forward to tackling new research challenges in audio/visual computing and computational design tools. He is also interested in bringing his research into real-world products and applications.

Dingzeyu Li entered the PhD program in Columbia’s Computer Science Department in 2013 after graduating in the top 1% of his class at the Hong Kong University of Science and Technology (HKUST), where he received a Bachelor of Engineering in Computer Engineering.

Posted 11/02/2017

– Linda Crane