Getting Ready for the New Economy of Heterogeneous Computing

With multiple grants, Professor Luca Carloni works toward developing design methodologies and system architectures for heterogeneous computing. He foresees that computing systems, both in the cloud and at the edge of the cloud, will become more heterogeneous.

For a while, many systems in the cloud, in servers, and in computers were based on homogeneous multi-core architectures, where multiple processors are combined on a chip and multiple chips on a board. To a first approximation, however, all of these processors are copies of the same type.

“Now, it is not the case,” said Carloni, who has worked on heterogeneous computing for the past 10 years. “And we have been one of the first groups to really, I think, understand this transition from a research viewpoint and change first our research focus and then our teaching efforts to address all the issues.”

Heterogeneous means that a system is made of components and each component has a different nature. Some of these components are processors that execute software, both application software and system software, while other components are accelerators. An accelerator is a hardware module specialized to execute a particular function. Specialization provides major benefits in terms of performance and energy efficiency.

Heterogeneous computing, however, is more difficult. Compared to their homogeneous counterparts, heterogeneous systems bring new challenges in terms of hardware-software interactions, access to shared resources, and diminished regularity of the design.

Another aspect of heterogeneity is that components often come from different sources. Let’s say that a company builds a new system-on-chip (SoC), a pervasive type of integrated circuit that is highly heterogeneous. Some parts may be designed anew inside the company, some reused from previous designs, while others may be licensed from other companies. Integrating these parts efficiently requires new design methods.

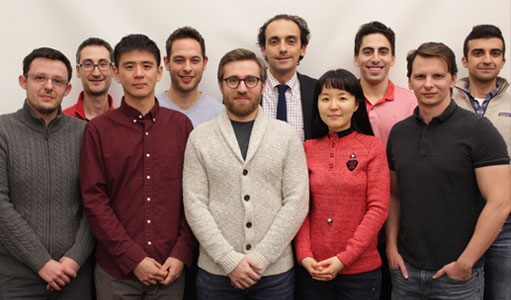

Front row (left to right): Luca Piccolboni, Kuan-Lin Chiu, Davide Giri, Jihye Kwon, Maico Cassel

Back row (left to right): Paolo Mantovani, Guy Eichler, Luca Carloni, Joseph Zuckerman, Giuseppe Di Guglielmo

Carloni’s lab, the System-Level Design Group, tackles these challenges with the concept of embedded scalable platforms (ESP). A platform combines a flexible computer architecture and a companion computer-aided design methodology. The architecture defines how the computation is organized among multiple components, how the components are interconnected, and how to establish the interface between what is done in software and what is done in hardware. Because of the complexity of these systems, many important decisions must be made early, while room must be left to make adjustments later. The methodology guides software programmers and hardware engineers to design, optimize, and integrate their novel solutions.

By leveraging ESP, the SLD Group is developing many SoC prototypes, particularly with field-programmable gate arrays (FPGAs). With FPGAs, the hardware in the system can be configured in the field. This makes it possible to explore several optimizations for the target heterogeneous system before committing to the fabrication of expensive silicon.

All of these topics are covered in System-on-Chip Platforms, a class Carloni teaches each fall semester. Students have to design an accelerator, not just for one particular system but with a good degree of reusability, so that it can be leveraged across multiple systems. Earlier this year, Carloni presented a paper describing the course at the 2019 Workshop on Computer Architecture Education.

“In developing System-on-Chip Platforms we put particular emphasis on SoC architectures for high-performance embedded applications,” he said. “But we believe that the course provides a broad foundation on the principles and practices of heterogeneous computing.”