CS Student to Present at Google Cloud Next ’19

Tomer Aharoni (GS ’19) will present “Nagish,” software that helps people with hearing and speech difficulties communicate over the phone, at the Google Cloud Next ’19 conference in April.

It all started with a voice message and a follow-up text to Aharoni. His friend needed a reply, but Aharoni was in class and could not use his phone. Curious about what was so important, he came up with a hack: he redirected his computer’s sound output into its input, opened a Google Doc, selected the dictation option, and played the voice message so that the app transcribed the audio into text. He was finally able to read his friend’s message.

“I realized that I might be onto something,” said Aharoni, a veteran of the Israel Defense Forces and a general studies student majoring in computer science. “The next week I teamed up with friends to join the DevFest hackathon and we won second place.”

The decision to join DevFest, Columbia’s annual hackathon, opened doors for Nagish. Aside from the second-place win, the team also won the Best Hack for Social Good award and caught the eye of Google Cloud.

For the first iteration of Nagish, the team stayed up all night to create a product that worked with only one phone number, which they had to hard-code and link to Facebook Messenger. When Google showed interest and gave them a grant through the Google Cloud credits program, they focused on using Google’s APIs and added other messenger apps to reach more users.

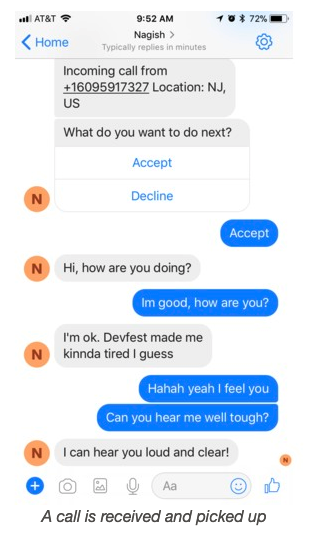

Each call begins with a text message informing the registered user that they have an incoming call. The user can either pick up or decline the call. If the user picks up the call, the caller is informed that this is a special phone call for someone with hearing or speech difficulties so they know that they should wait on the line.

From then on, the caller can speak and whatever they say is transcribed into text for the registered user, who replies by text that is converted into speech for the caller. One side of the call types and reads while the other side speaks and listens. The reverse is also true – registered users can place calls with the help of the software.
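The call flow described above can be sketched as a small state machine. This is only an illustration under stated assumptions, not the team's implementation: the names `RelayCall`, `CallState`, and `NOTICE` are hypothetical, and speech recognition and speech synthesis are represented by plain strings rather than real API calls.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class CallState(Enum):
    RINGING = auto()   # the registered user has been texted about the call
    ACTIVE = auto()    # the user picked up; the caller has heard the notice
    DECLINED = auto()  # the user declined the call


# Hypothetical wording of the notice played to the caller.
NOTICE = ("This is a special call for someone with hearing or speech "
          "difficulties. Please stay on the line.")


@dataclass
class RelayCall:
    state: CallState = CallState.RINGING
    # Text messages delivered to the registered user.
    to_user: list = field(
        default_factory=lambda: ["You have an incoming call. Pick up or decline?"])
    # Phrases that would be spoken aloud to the caller.
    to_caller: list = field(default_factory=list)

    def answer(self, accept: bool) -> None:
        if self.state is not CallState.RINGING:
            raise RuntimeError("call was already answered")
        if accept:
            self.state = CallState.ACTIVE
            self.to_caller.append(NOTICE)
        else:
            self.state = CallState.DECLINED

    def caller_speaks(self, transcript: str) -> None:
        # Assumes speech recognition already produced `transcript`.
        self._require_active()
        self.to_user.append(transcript)

    def user_types(self, text: str) -> None:
        # Assumes a speech synthesizer would read `text` to the caller.
        self._require_active()
        self.to_caller.append(text)

    def _require_active(self) -> None:
        if self.state is not CallState.ACTIVE:
            raise RuntimeError("call is not active")
```

Keeping the two message queues separate mirrors the description: one side only types and reads, the other only speaks and listens.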

“The first time we built it, it was like a fraction of a second of delay,” shared Aharoni. “It sounds like nothing but the delay is super annoying when you speak with someone over the phone.”

Since then they have worked on making the software more efficient and faster in time for beta testing set for March. Right now the team is looking for 10 people with hearing or speech difficulties to participate in a day of testing.

One of the things holding them back at the moment is the cost of using Nagish. While they have a grant from Google, not all of the APIs they use are from that suite of products. To connect calls to Nagish they use Twilio, a third-party telephony API, which is costly: each incoming or outgoing call costs 20 cents per minute. Even though they plan to release Nagish for free, users would still have to pay the Twilio charges to use it.
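To get a feel for how the quoted rate adds up, here is a rough cost estimate. It is a sketch, not Twilio's actual billing logic: the 20-cents-per-minute figure comes from the article, while the function name `call_cost_cents` and the rule that every started minute is billed in full are assumptions made for illustration.

```python
RATE_CENTS_PER_MINUTE = 20  # per-minute figure quoted in the article


def call_cost_cents(duration_seconds: int,
                    rate_cents: int = RATE_CENTS_PER_MINUTE) -> int:
    """Estimate the cost of one call in whole cents."""
    if duration_seconds < 0:
        raise ValueError("duration cannot be negative")
    # Assumption: each started minute is billed in full (round up).
    billed_minutes = (duration_seconds + 59) // 60
    return billed_minutes * rate_cents


# A ten-minute call at this rate: 10 * 20 = 200 cents.
ten_minute_call = call_cost_cents(10 * 60)
```

Under these assumptions, a user who makes one ten-minute call a day would pay about 60 dollars over a 30-day month.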

In the beginning, Aharoni had little programming experience, and he calls the past year of working on Nagish invaluable. “I learned a lot from my classes but it is different to actually work on a project and build my skills that way,” he said. Since DevFest he has worked continuously with Alon Ezer, who also gave him a lot of guidance early on. Now he knows the whole process, from project conception through implementation to testing. He hopes to release Nagish, which means “accessible” in Hebrew, for free sometime this year.

After the hackathon, Bloomberg also approached him about applying for an internship. He spent last summer as an intern with the equities group in the engineering department and will join the company as an employee after graduating this May.

“We’ve spent many hours working on Nagish to help others but all that hard work has been fulfilling in more ways than one,” said Aharoni.