DevFest draws 1300 beginner, intermediate, and experienced coders

Unequal parts hackathon and learnathon—the emphasis is solidly on learning—the annual DevFest took place last month with 1300 participants attending. One of Columbia’s largest tech events and hosted annually by ADI (Application Development Initiative), DevFest is a week of classes, mini-lectures, workshops, and sponsor tech talks, topped off by an 18-hour hackathon—all interspersed with socializing, meetups, free food, and fun events.

Open to any student from Columbia College, Columbia Engineering, General Studies, and Barnard, DevFest had something for everyone.

Beginners, even those with zero coding experience, could take introductory classes in Python, HTML, and JavaScript. Those already coding had the opportunity to expand their programming knowledge through micro-lectures on iOS, data science, and civic data, and through workshops on geospatial visualization, UI/UX, web development, Chrome extensions, and more. DevFest sponsor Google gave tech talks on TensorFlow and Google Cloud Platform, with Google engineers onsite giving hands-on instruction and valuable advice.

Every evening, DevSpace offered self-paced, online tutorials to guide DevFest participants through the steps of building a fully functioning project. Four tracks were offered: Beginner Development (where participants set up a working website), Web Development, iOS Development, and Data Science. TAs, mentors, and peers were on hand to provide more help.

This emphasis on learning within a supportive community made for a diverse group and not the usual hackathon mix: 60% were attending their first hackathon, 50% were women, and 25% identified as people of color.

DevFest events were kicked off on Monday, February 12, with talks by Computer Science professor Lydia Chilton (an HCI researcher) and Jenn Schiffer, a pixel design artist and tech satirist; events concluded with an 18-hour hackathon starting Saturday evening and continuing through Sunday.

Ends with a hackathon

Thirty teams competed in the DevFest hackathon. Limited to a few members only, teams either arrived ready-made or coalesced during a team-forming event where students pitched ideas to attract others with the needed skills.

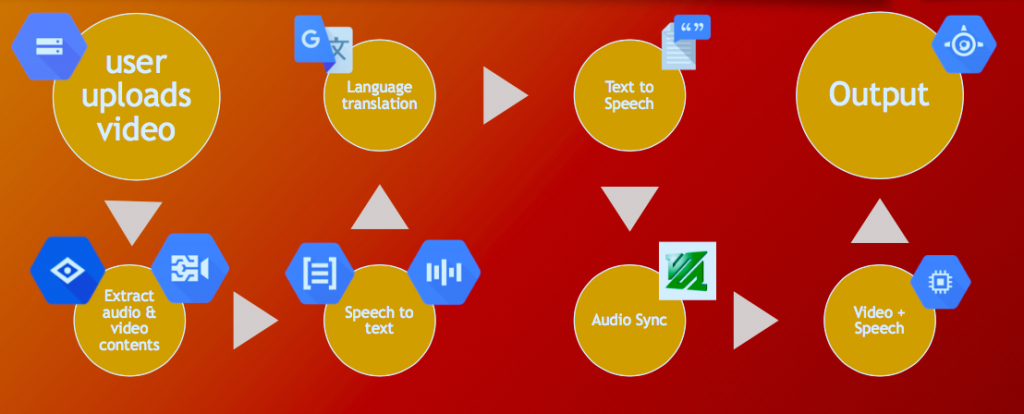

The $1500 first prize went to Eyes and Ears, a video service aimed at making information within video more widely accessible, both by translating it for those who don’t speak the language in a video and by providing audio descriptions of a scene’s content for people with visual impairments. The aim was to remove the barriers that keep people from the growing amount of information available in video. Someone using the service simply chooses a video, selects a language, and then waits for an email delivering the translated video complete with audio scene descriptions.

Eyes and Ears is the project of four computer science MS students—Siddhant Somani, Ishan Jain, Shubham Singhal, Amit Bhat—who came to DevFest with the intent to compete as a team in the hackathon. The idea for a project came after they attended Google’s Cloud workshop, learning there about an array of Google APIs. The question then became finding a way to combine APIs to create something with an impact for good.

The initial idea was to “simply” translate a video from any language to any other language supported by Google Translate (roughly 80% of the world’s languages). However, having built a translation pipeline, the team realized the pipeline could be extended to include audio descriptions of a video’s visual scenes, either when a scene changes or at a user’s request.

That such a service is even possible—let alone buildable in 18 hours—is due to the power of APIs to perform complex technology tasks.

It was the spaces between the many APIs, and the sequential ordering of those APIs, that required engineering effort. The team had to shepherd the output of one API to the input of another, sync pauses to the audio (which required an algorithm for detecting the start and stop of speech), and sync the pace of one language to the pace of the other (taking into account a differing number of words). Because Google Video Intelligence API, which is designed for indexing video only, outputs sparse single words (mostly nouns like “car” or “dog”), the team had to construct full, semantically correct sentences. All in 18 hours.
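To give a sense of what detecting the start and stop of speech can involve, here is a minimal sketch in Python. It is not the team's code, which the article does not describe; it assumes a simple energy-threshold approach, and the function name `detect_speech_segments` and all of its parameters and default values are hypothetical choices made for illustration.

```python
from typing import List, Tuple


def detect_speech_segments(
    samples: List[float],
    sample_rate: int,
    frame_ms: int = 20,             # assumed frame size, in milliseconds
    energy_threshold: float = 0.01,  # assumed cutoff for "this frame has speech"
    min_silence_frames: int = 5,     # assumed pause length that ends a segment
) -> List[Tuple[float, float]]:
    """Return (start_sec, end_sec) spans where mean frame energy is above threshold.

    Illustrative only: an energy-threshold detector, not the Eyes and Ears algorithm.
    """
    frame_len = max(1, sample_rate * frame_ms // 1000)
    n_frames = len(samples) // frame_len
    segments: List[Tuple[float, float]] = []
    start = None      # frame index where the current speech run began
    silent_run = 0    # consecutive quiet frames seen inside the current run

    for i in range(n_frames):
        frame = samples[i * frame_len:(i + 1) * frame_len]
        energy = sum(s * s for s in frame) / frame_len
        if energy >= energy_threshold:
            if start is None:
                start = i
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run >= min_silence_frames:
                # Speech ended at the first quiet frame of this pause.
                end = i - silent_run + 1
                segments.append(
                    (start * frame_len / sample_rate, end * frame_len / sample_rate)
                )
                start = None
                silent_run = 0

    if start is not None:
        # Audio ended mid-segment; trim any trailing quiet frames.
        end = n_frames - silent_run
        segments.append(
            (start * frame_len / sample_rate, end * frame_len / sample_rate)
        )
    return segments
```

With a 1000 Hz signal made of 0.2 seconds of silence, 0.4 seconds of sound, and 0.2 seconds of silence, this sketch reports one segment running from 0.2 to 0.6 seconds. Pauses shorter than `min_silence_frames` are treated as part of the same stretch of speech, which is one way to keep translated audio from being chopped up at every breath.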

The project earned praise from Google engineers for its imaginative and ambitious use of APIs. In addition to its first place prize, Eyes and Ears was named best use of Google Cloud API.

The team will look to continue work on Eyes and Ears in future hackathons.

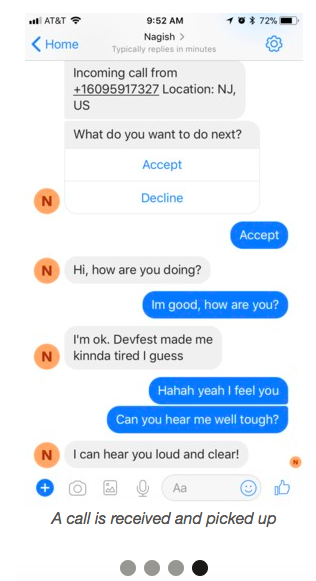

The $1000 second-place prize went to Nagish (Hebrew for “accessible”), a platform that makes it simple for people with hearing or speaking difficulties to make and receive phone calls using their smartphone. For incoming and outgoing calls, Nagish converts text to speech and speech to text in real time, so voice calls can be read or generated via Facebook Messenger and conversations stay seamless and natural.

The Nagish team (computer science majors Ori Aboodi, Roy Prigat, Ben Arbib, Tomer Aharoni, and Alon Ezer) are five veterans who were motivated to help fellow veterans as well as others with hearing and speech impairments.

To do so required a fairly complex environment made up of several APIs (Google’s text-to-speech and speech-to-text as well as a Twilio API for generating phone numbers for each user and retrieving the mp3 files of the calls), all made to “talk” to one another over custom Python programs. Additionally, the team created a chatbot to connect Nagish to the Facebook platform. For providing a needed service to those with hearing and speech impairments, the team won the Best Hack for Social Good. Of course, potential users don’t have to be hearing- or speech-impaired to appreciate how Nagish makes it possible to unobtrusively take an important, or not so important, phone call during a meeting or perhaps even during class.

Taking the $750 third-place prize was Three a Day, a platform that matches restaurants or individuals wanting to donate food with those in need of food donations. The goal is to make sure every individual gets three meals a day. The two-person team (computer science majors Kanishk Vashisht and Sambhav Anand) built Three a Day using Firebase as the database and React as the front end, with the back-end computing supplied primarily through Google Cloud Functions. A DigitalOcean server runs cron jobs to schedule the matching of restaurants and charities. The team also won for the best use of DigitalOcean products.

Posted March 22, 2018

– Linda Crane