2021

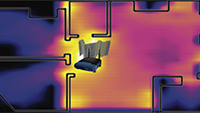

Neural network applications have become popular in both enterprise and personal settings. Network solutions are tuned meticulously for each task, and designs that can robustly resolve queries end up in high demand. As the commercial value of accurate and performant machine learning models increases, so too does the demand to protect neural architectures as confidential investments. We explore the vulnerability of neural networks deployed as black boxes across accelerated hardware through electromagnetic side channels. We examine the magnetic flux emanating from a graphics processing unit's power cable, as acquired by a cheap $3 induction sensor, and find that this signal betrays the detailed topology and hyperparameters of a black-box neural network model. The attack acquires the magnetic signal for one query with unknown input values, but known input dimensions. The network reconstruction is possible due to the modular layer sequence in which deep neural networks are evaluated. We find that each layer component's evaluation produces an identifiable magnetic signal signature, from which layer topology, width, function type, and sequence order can be inferred using a suitably trained classifier and a joint consistency optimization based on integer programming. We study the extent to which network specifications can be recovered, and consider metrics for comparing network similarity. We demonstrate the potential accuracy of this side channel attack in recovering the details for a broad range of network architectures, including random designs. We consider applications that may exploit this novel side channel exposure, such as adversarial transfer attacks. In response, we discuss countermeasures to protect against our method and other similar snooping techniques.

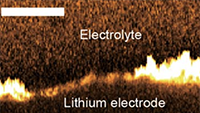

Lithium metal batteries are attractive for next-generation energy storage because of their high energy density. A major obstacle to their commercialization is the uncontrollable growth of lithium dendrites, which arises from complicated but poorly understood interactions at the electrolyte/electrode interface. In this work, we use a machine learning-based artificial neural network (ANN) model to explore how the lithium growth rate is affected by local material properties, such as surface curvature, ion concentration in the electrolyte, and the lithium growth rates at previous moments. The ion concentration in the electrolyte was acquired by Stimulated Raman Scattering Microscopy; this quantity is often missing from past data-driven models built on experimental measurements. The ANN model reached a high correlation coefficient of 0.8 between predicted and experimental values. Further sensitivity analysis based on the ANN model demonstrated that the salt concentration and concentration gradient, as well as the prior lithium growth rate, have the highest impacts on the lithium dendrite growth rate at the next moment. This work shows the potential of the ANN model to forecast lithium growth rate and to unveil the inner dependency of the lithium dendrite growth rate on various factors.
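
The abstract reports model accuracy as a correlation coefficient between predicted and experimental growth rates. Assuming this is the standard Pearson coefficient (the abstract does not say which coefficient was used), a minimal sketch of the computation follows; the sample values are placeholders, not data from the study.

```python
import math
from typing import Sequence


def pearson_r(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(predicted) != len(observed) or len(predicted) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_o = sum(observed) / n
    # Covariance and variances (the common 1/n factors cancel in the ratio).
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(predicted, observed))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    return cov / math.sqrt(var_p * var_o)


# Placeholder predicted vs. measured growth rates (illustrative only).
print(pearson_r([0.9, 1.4, 2.1, 2.8], [1.0, 1.2, 2.3, 2.6]))
```

A value of 1 means predictions rise and fall in perfect linear step with the measurements; 0.8 indicates a strong but imperfect linear relationship.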

Chang Xiao and Changxi Zheng

MoiréBoard: A Stable, Accurate and Low-cost Camera Tracking Method.

ACM User Interface Software and Technology (UIST), 2021

Abstract

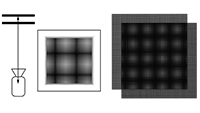

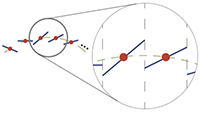

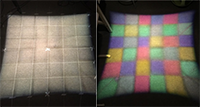

Camera tracking is an essential building block in a myriad of HCI applications. For example, commercial VR devices are equipped with dedicated hardware, such as laser-emitting beacon stations, to enable accurate tracking of VR headsets. However, this hardware remains costly. On the other hand, low-cost solutions such as IMU sensors and visual markers exist, but they suffer from large tracking errors. In this work, we bring high accuracy and low cost together to present MoiréBoard, a new 3-DOF camera position tracking method that leverages a seemingly irrelevant visual phenomenon, the moiré effect. Based on a systematic analysis of the moiré effect under camera projection, MoiréBoard requires neither power nor camera calibration. It can be easily made at a low cost (e.g., through 3D printing) and is ready to use with any stock mobile device with a camera. Its tracking algorithm is computationally efficient and able to run at a high frame rate. Although it is simple to implement, it tracks devices with high accuracy, comparable to state-of-the-art commercial VR tracking systems.
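
The abstract does not spell out the tracking math. As background only, a standard property of the moiré effect is that overlaying two parallel line gratings with periods p1 and p2 produces a coarse beat pattern with period p1·p2 / |p1 − p2|, so a small change in one apparent period (for example, from camera projection) produces a large change in the visible pattern. The sketch below computes that beat period; the function name and numbers are illustrative assumptions, not MoiréBoard's algorithm.

```python
def moire_period(p1: float, p2: float) -> float:
    """Beat period of two overlaid parallel line gratings with periods p1 and p2.

    Equivalent to a beat spatial frequency of |1/p1 - 1/p2|.
    """
    if p1 <= 0 or p2 <= 0:
        raise ValueError("grating periods must be positive")
    if p1 == p2:
        raise ValueError("equal periods produce no finite beat period")
    return p1 * p2 / abs(p1 - p2)


# Halving the mismatch between the gratings roughly doubles the moiré period.
print(moire_period(1.0, 1.10))  # about 11
print(moire_period(1.0, 1.05))  # about 21
```

The amplification shown here (a 5% change in one grating moves the beat period from about 11 to about 21 units) is one intuition for why a moiré pattern can be a sensitive visual cue.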

Rundi Wu, Chang Xiao, and Changxi Zheng

DeepCAD: A Deep Generative Network for Computer-Aided Design Models.

International Conference on Computer Vision (ICCV) 2021

Paper Project Page Abstract

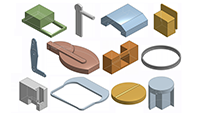

Deep generative models of 3D shapes have received a great deal of research interest. Yet, almost all of them generate discrete shape representations, such as voxels, point clouds, and polygon meshes. We present the first 3D generative model for a drastically different shape representation: describing a shape as a sequence of computer-aided design (CAD) operations. Unlike meshes and point clouds, CAD models encode the user's creation process of 3D shapes and are widely used in numerous industrial and engineering design tasks. However, the sequential and irregular structure of CAD operations poses significant challenges for existing 3D generative models. Drawing an analogy between CAD operations and natural language, we propose a CAD generative network based on the Transformer. We demonstrate the performance of our model for both shape autoencoding and random shape generation. To train our network, we create a new CAD dataset consisting of 178,238 models and their CAD construction sequences. We have made this dataset publicly available to promote future research on this topic.

We present an inverse design method for achieving unprecedented performance and ultra-wide bandwidth based on direct adaptive refinement of the device geometry. We experimentally demonstrate a 90/10 splitter with more than 200 nm bandwidth.

Jianjin Xu and Changxi Zheng

Linear Semantics in Generative Adversarial Networks.

Computer Vision and Pattern Recognition (CVPR), 2021

Paper Abstract Source Code

Generative Adversarial Networks (GANs) are able to generate high-quality images, but it remains difficult to explicitly specify the semantics of synthesized images. In this work, we aim to better understand the semantic representation of GANs, and thereby enable semantic control in the GAN's generation process. Interestingly, we find that a well-trained GAN encodes image semantics in its internal feature maps in a surprisingly simple way: a linear transformation of feature maps suffices to extract the generated image semantics. To verify this simplicity, we conduct extensive experiments on various GANs and datasets; and thanks to this simplicity, we are able to learn a semantic segmentation model for a trained GAN from a small number (e.g., 8) of labeled images. Last but not least, leveraging our findings, we propose two few-shot image editing approaches, namely Semantic-Conditional Sampling and Semantic Image Editing. Given a trained GAN and as few as eight semantic annotations, the user is able to generate diverse images subject to a user-provided semantic layout, and control the synthesized image semantics. We have made the code publicly available.
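
The finding that "a linear transformation of feature maps suffices" amounts to a per-pixel linear classifier (equivalently, a 1×1 convolution) over the generator's features. The sketch below applies such a classifier to a toy feature grid. The function name, the nested-list shapes, and the assumption that features are already resampled to image resolution are ours; learning W and b from a few labeled images is not shown.

```python
from typing import List, Sequence


def linear_segment(features: Sequence[Sequence[Sequence[float]]],
                   weights: Sequence[Sequence[float]],
                   biases: Sequence[float]) -> List[List[int]]:
    """Label each pixel with argmax_k (W_k . f + b_k): a per-pixel linear classifier.

    features: H x W grid of C-dimensional feature vectors (hypothetical GAN features)
    weights:  K x C matrix, one row per semantic class
    biases:   K class biases
    """
    labels = []
    for row in features:
        out_row = []
        for f in row:
            # One linear score per semantic class for this pixel's feature vector.
            scores = [sum(w_c * f_c for w_c, f_c in zip(w, f)) + b
                      for w, b in zip(weights, biases)]
            out_row.append(max(range(len(scores)), key=scores.__getitem__))
        labels.append(out_row)
    return labels


# A 1 x 2 image with 2-D features and two classes.
print(linear_segment([[[1.0, 0.0], [0.0, 1.0]]], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))
```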

We present BackTrack, a trackpad placed on the back of a smartphone to track fine-grained finger motions. Our system has a small form factor, with all the circuits encapsulated in a thin layer attached to a phone case. It can be used with any off-the-shelf smartphone, requiring no power supply or modification of the operating system. BackTrack simply extends the finger tracking area of the front screen without interrupting the use of the front screen. It also provides a switch to prevent unintentional touches on the trackpad. All these features are enabled by a battery-free capacitive circuit, part of which is a transparent, thin-film conductor coated on a thin glass and attached to the front screen. To ensure accurate and robust tracking, the capacitive circuits are carefully designed. Our design is based on a circuit model of capacitive touchscreens, justified through both physics-based finite-element simulation and controlled laboratory experiments. We conduct user studies to evaluate the performance of using BackTrack. We also demonstrate its use in a number of smartphone applications.

2020

Ziwei Zhu and Changxi Zheng

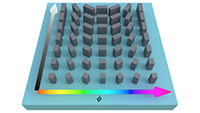

Differentiable scattering matrix for optimization of photonic structures.

Optics Express, 28 (25), 2020

Paper (PDF) Abstract Source Code

The scattering matrix, which quantifies the optical reflection and transmission of a photonic structure, is pivotal for understanding the performance of the structure. In many photonic design tasks, one also needs to know how the structure's optical performance changes with respect to design parameters, that is, the scattering matrix's derivatives (or gradient). Here we address this need. We present a new algorithm for computing scattering matrix derivatives accurately and robustly. In particular, we focus on the computation in semi-analytical methods (such as rigorous coupled-wave analysis). To compute the scattering matrix of a structure, these methods must solve an eigen-decomposition problem. However, when it comes to computing scattering matrix derivatives, differentiating the eigen-decomposition poses significant numerical difficulties. We show that the differentiation of the eigen-decomposition problem can be completely sidestepped, and thereby propose a robust algorithm. To demonstrate its efficacy, we use our algorithm to optimize metasurface structures and reach various optical design goals.

Ruilin Xu, Rundi Wu, Yuko Ishiwaka, Carl Vondrick, and Changxi Zheng

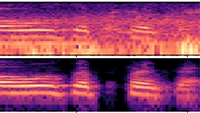

Listening to Sounds of Silence for Speech Denoising.

Advances in Neural Information Processing Systems (NeurIPS), 2020

Paper (PDF) Project Page Abstract

We introduce a deep learning model for speech denoising, a long-standing challenge in audio analysis arising in numerous applications. Our approach is based on a key observation about human speech: there is often a short pause between sentences or words. In a recorded speech signal, those pauses introduce a series of time periods during which only noise is present. We leverage these incidental silent intervals to learn a model for automatic speech denoising given only mono-channel audio. Detected silent intervals over time expose not just pure noise but its time-varying features, allowing the model to learn noise dynamics and suppress it from the speech signal. Experiments on multiple datasets confirm the pivotal role of silent interval detection for speech denoising, and our method outperforms several state-of-the-art denoising methods, including those that accept only audio input (like ours) and those that denoise based on audiovisual input (and hence require more information). We also show that our method enjoys excellent generalization properties, such as denoising spoken languages not seen during training.
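
To illustrate why silent intervals are useful, the sketch below estimates a noise floor from frames judged to be silent. Note the swap: the work above learns to detect silent intervals with a neural network, whereas this sketch uses a simple energy threshold (the `ratio` parameter and all function names are hypothetical) only to show how silent frames expose the noise on their own.

```python
from typing import List, Sequence


def frame_energies(signal: Sequence[float], frame_len: int) -> List[float]:
    """Mean power of consecutive non-overlapping frames (a trailing partial frame is dropped)."""
    return [sum(s * s for s in signal[i:i + frame_len]) / frame_len
            for i in range(0, len(signal) - frame_len + 1, frame_len)]


def silent_mask(energies: Sequence[float], ratio: float = 0.1) -> List[bool]:
    """Hypothetical energy-threshold detector: a frame is silent if its power < ratio * peak."""
    threshold = ratio * max(energies)
    return [e < threshold for e in energies]


def noise_power(energies: Sequence[float], mask: Sequence[bool]) -> float:
    """Average power over silent frames, i.e. an estimate of the noise floor."""
    quiet = [e for e, m in zip(energies, mask) if m]
    return sum(quiet) / len(quiet) if quiet else 0.0


# Toy signal: low-level noise, a loud "speech" burst, then noise again.
signal = [0.01, -0.01] * 2 + [1.0, -1.0] * 2 + [0.01, -0.01] * 2
energies = frame_energies(signal, frame_len=4)
mask = silent_mask(energies)
print(mask)  # [True, False, True]
print(noise_power(energies, mask))
```

A fixed threshold like this breaks down when noise is loud or non-stationary, which is part of the motivation for learning the detector instead.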

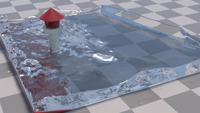

Wei Li, Yixin Chen, Mathieu Desbrun, Changxi Zheng, Xiaopei Liu

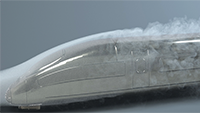

Fast and Scalable Turbulent Flow Simulation with Two-Way Coupling.

ACM Transactions on Graphics (SIGGRAPH 2020)

Paper (PDF) Project Page Abstract Video

Despite their cinematic appeal, turbulent flows involving fluid-solid coupling remain a computational challenge in animation. At the root of this current limitation is the numerical dispersion from which most accurate Navier-Stokes solvers suffer: proper coupling between fluid and solid often generates artificial dispersion in the form of local, parasitic trains of velocity oscillations, eventually leading to numerical instability. While successive improvements over the years have led to conservative and detail-preserving fluid integrators, the dispersive nature of these solvers is rarely discussed despite its dramatic impact on fluid-structure interaction. In this paper, we introduce a novel low-dissipation and low-dispersion fluid solver that can simulate two-way coupling in an efficient and scalable manner, even for turbulent flows. In sharp contrast with most current CG approaches, we construct our solver from a kinetic formulation of the flow derived from statistical mechanics. Unlike existing lattice Boltzmann solvers, our approach leverages high-order moment relaxations as a key to controlling both dissipation and dispersion of the resulting scheme. Moreover, we combine our new fluid solver with the immersed boundary method to easily handle fluid-solid coupling through time-adaptive simulations. Our kinetic solver is highly parallelizable by nature, making it ideally suited for implementation on single- or multi-GPU computing platforms. Extensive comparisons with existing solvers on synthetic tests and real-life experiments are used to highlight the multiple advantages of our work over traditional and more recent approaches, in terms of accuracy, scalability, and efficiency.

Ziwei Zhu, Utsav D. Dave, Michal Lipson, and Changxi Zheng

Inverse Geometric Design of Fabrication-Robust Nanophotonic Waveguides.

Conference on Lasers and Electro-Optics (CLEO), May 2020

(Oral presentation)

Paper (PDF) Abstract

We present an inverse design method for making waveguides with high performance and high robustness to fabrication errors. As an example, we show a 1-to-4 mode converter with > -1.5 dB conversion efficiency under geometric variations within fabrication tolerances.
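
Conversion efficiency quoted in decibels is, by the usual convention for optical power (assumed here, since the abstract does not define it), 10·log10 of the output-to-input power ratio. The helper names below are hypothetical; the conversion shows that > -1.5 dB means roughly 71% or more of the input power reaches the target mode.

```python
import math


def db_to_power_ratio(db: float) -> float:
    """Convert a power quantity in decibels to a linear ratio."""
    return 10 ** (db / 10)


def power_ratio_to_db(ratio: float) -> float:
    """Convert a linear power ratio to decibels."""
    if ratio <= 0:
        raise ValueError("power ratio must be positive")
    return 10 * math.log10(ratio)


print(round(db_to_power_ratio(-1.5), 3))  # 0.708
```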

Chang Xiao and Changxi Zheng

One Man's Trash is Another Man's Treasure: Resisting Adversarial Examples by Adversarial Examples.

Computer Vision and Pattern Recognition (CVPR), 2020

Paper Abstract Source Code

Modern image classification systems are often built on deep neural networks, which suffer from adversarial examples: images with deliberately crafted, imperceptible noise to mislead the network's classification. To defend against adversarial examples, a plausible idea is to obfuscate the network's gradient with respect to the input image. This general idea has inspired a long line of defense methods. Yet, almost all of them have proven vulnerable.

We revisit this seemingly flawed idea from a radically different perspective. We embrace the omnipresence of adversarial examples and the numerical procedure of crafting them, and turn this harmful attacking process into a useful defense mechanism. Our defense method is conceptually simple: before feeding an input image for classification, transform it by finding an adversarial example on a pre-trained external model. We evaluate our method against a wide range of possible attacks. On both CIFAR-10 and Tiny ImageNet datasets, our method is significantly more robust than state-of-the-art methods. Particularly, in comparison to adversarial training, our method offers lower training cost as well as stronger robustness.

Chang Xiao, Peilin Zhong, and Changxi Zheng

Enhancing Adversarial Defense by k-Winners-Take-All.

International Conference on Learning Representations (ICLR), 2020

(Spotlight presentation)

Paper (PDF) Abstract Source Code

We propose a simple change to existing neural network structures for better defending against gradient-based adversarial attacks. Instead of using popular activation functions (such as ReLU), we advocate the use of k-Winners-Take-All (k-WTA) activation, a C⁰ discontinuous function that purposely invalidates the neural network model's gradient at densely distributed input data points. The proposed k-WTA activation can be readily used in nearly all existing networks and training methods with no significant overhead. Our proposal is theoretically rationalized. We analyze why the discontinuities in k-WTA networks can largely prevent gradient-based search of adversarial examples and why they at the same time remain innocuous to the network training. This understanding is also empirically backed. We test k-WTA activation on various network structures optimized by a training method, be it adversarial training or not. In all cases, the robustness of k-WTA networks outperforms that of traditional networks under white-box attacks.
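
As described above, k-WTA keeps only the k largest activations of a layer and zeroes the rest. A minimal pure-Python sketch follows; treating k as a fixed integer per call is our simplification (how k is chosen for each layer is an assumption not stated in the abstract). The usage lines illustrate the discontinuity: nudging one input past another switches the winner, and the output jumps.

```python
from typing import List, Sequence


def k_wta(x: Sequence[float], k: int) -> List[float]:
    """k-Winners-Take-All: keep the k largest activations, set all others to zero."""
    if not 1 <= k <= len(x):
        raise ValueError("k must be between 1 and len(x)")
    # Indices of the k largest entries; ties are broken by position so exactly k survive.
    winners = set(sorted(range(len(x)), key=lambda i: x[i], reverse=True)[:k])
    return [x[i] if i in winners else 0.0 for i in range(len(x))]


# A tiny change in the input flips which unit wins, so the output jumps.
print(k_wta([1.0, 0.999], k=1))  # [1.0, 0.0]
print(k_wta([1.0, 1.001], k=1))  # [0.0, 1.001]
```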

2019

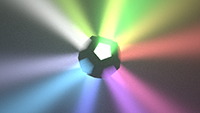

Metasurfaces are optically thin metamaterials that promise complete control of the wavefront of light but are primarily used to control only the phase of light. Here, we present an approach, simple in concept and in practice, that uses meta-atoms with a varying degree of form birefringence and rotation angles to create high-efficiency dielectric metasurfaces that control both the optical amplitude and phase at one or two frequencies. This opens up applications in computer-generated holography, allowing faithful reproduction of both the phase and amplitude of a target holographic scene without the iterative algorithms required in phase-only holography. We demonstrate all-dielectric metasurface holograms with independent and complete control of the amplitude and phase at up to two optical frequencies simultaneously to generate two- and three-dimensional holographic objects. We show that phase-amplitude metasurfaces enable a few features not attainable in phase-only holography; these include creating artifact-free two-dimensional holographic images, encoding phase and amplitude profiles separately at the object plane, encoding intensity profiles at the metasurface and object planes separately, and controlling the surface textures of three-dimensional holographic objects.
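
The abstract does not give design equations. One textbook relation, assumed here rather than quoted from the work, shows how birefringence and rotation can set amplitude and phase independently: a birefringent meta-atom with retardance δ, rotated by angle θ and illuminated by circularly polarized light, converts light into the opposite handedness with amplitude |sin(δ/2)| and geometric phase 2θ. The sketch inverts that relation at a single frequency; function names are hypothetical, and two-frequency control is not covered.

```python
import cmath
import math
from typing import Tuple


def cross_polarized_field(retardance: float, angle: float) -> complex:
    """Complex amplitude converted to the opposite circular handedness by a birefringent
    element with the given retardance (rad) rotated by angle (rad). A constant global
    phase factor and the handedness-dependent sign of the geometric phase are dropped."""
    return math.sin(retardance / 2) * cmath.exp(2j * angle)


def design_meta_atom(amplitude: float, phase: float) -> Tuple[float, float]:
    """Invert the relation above: retardance sets the amplitude, rotation sets the phase."""
    if not 0.0 <= amplitude <= 1.0:
        raise ValueError("amplitude must lie in [0, 1]")
    retardance = 2 * math.asin(amplitude)  # sin(retardance / 2) == amplitude
    angle = phase / 2                      # geometric phase is twice the rotation angle
    return retardance, angle


retardance, angle = design_meta_atom(amplitude=0.5, phase=1.2)
field = cross_polarized_field(retardance, angle)
print(abs(field), cmath.phase(field))  # approximately 0.5 and 1.2
```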

Peilin Zhong*, Yuchen Mo*, Chang Xiao*, Pengyu Chen, and Changxi Zheng

Rethinking Generative Mode Coverage: A Pointwise Guaranteed Approach.

Advances in Neural Information Processing Systems (NeurIPS), 2019

(*equal contribution)

Paper (PDF) Abstract Source Code

Many generative models have to combat missing modes. The conventional approach is to reduce, through training, a statistical distance (such as an f-divergence) between the generated distribution and the provided data distribution. But this is more of a heuristic than a guarantee. The statistical distance measures a global, but not local, similarity between two distributions. Even if it is small, it does not imply plausible mode coverage. Rethinking this problem from a game-theoretic perspective, we show that complete mode coverage is firmly attainable. If a generative model can approximate a data distribution moderately well under a global statistical distance measure, then we will be able to find a mixture of generators that collectively covers every data point and thus every mode, with a lower-bounded generation probability. Constructing the generator mixture has a connection to the multiplicative weights update rule, upon which we propose our algorithm. We prove that our algorithm guarantees complete mode coverage. Our experiments on real and synthetic datasets confirm better mode coverage than recent approaches that also use generator mixtures but rely on global statistical distances.
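
To illustrate the multiplicative weights idea mentioned above, the sketch below runs a generic reweighting loop: after each round, data points the newest generator already covers lose weight, so the next generator focuses on what is still missed. This is the textbook pattern, not the paper's algorithm; `fit`, `covers`, and the step size `eta` are hypothetical stand-ins for training a generator and testing whether it assigns a point enough probability.

```python
import math
from typing import Callable, List, Sequence


def mwu_mixture(num_points: int, rounds: int,
                fit: Callable[[Sequence[float]], int],
                covers: Callable[[int, int], bool],
                eta: float = 0.5) -> List[int]:
    """Generic multiplicative-weights loop that collects a mixture of generators."""
    weights = [1.0] * num_points
    mixture = []
    for _ in range(rounds):
        total = sum(weights)
        dist = [w / total for w in weights]
        g = fit(dist)  # hypothetical: train a generator on the reweighted data
        mixture.append(g)
        # Down-weight points this generator covers; uncovered points gain relative weight.
        for i in range(num_points):
            if covers(g, i):
                weights[i] *= math.exp(-eta)
    return mixture


def fit_heaviest(dist: Sequence[float]) -> int:
    """Toy 'generator': covers only the currently heaviest data point."""
    return max(range(len(dist)), key=dist.__getitem__)


def covers_single(g: int, i: int) -> bool:
    return g == i


print(mwu_mixture(3, rounds=3, fit=fit_heaviest, covers=covers_single))  # [0, 1, 2]
```

In the toy run no single generator covers more than one point, yet the reweighting steers successive rounds so that the mixture covers all three.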

Yun (Raymond) Fei, Christopher Batty, Eitan Grinspun, and Changxi Zheng

A Multi-Scale Model for Coupling Strands with Shear-Dependent Liquid.

ACM Transactions on Graphics (SIGGRAPH Asia 2019)

Paper (PDF) Project Page Abstract Video

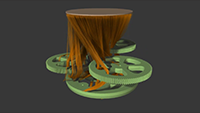

We propose a framework for simulating the complex dynamics of strands interacting with compressible, shear-dependent liquids, such as oil paint, mud, cream, melted chocolate, and pasta sauce. Our framework contains three main components: the strands modeled as discrete rods, the bulk liquid represented as a continuum (material point method), and a reduced-dimensional flow of liquid on the surface of the strands with detailed elastoviscoplastic behavior. These three components are tightly coupled together. To enable discrete strands interacting with continuum-based liquid, we develop models that account for the volume change of the liquid as it passes through strands and the momentum exchange between the strands and the liquid. We also develop an extended constraint-based collision handling method that supports cohesion between strands. Furthermore, we present a principled method to preserve the total momentum of a strand and its surface flow, as well as an analytic plastic flow approach for Herschel-Bulkley fluid that enables stable semi-implicit integration at larger time steps. We explore a series of challenging scenarios involving splashing, shaking, and agitating the liquid, which causes the strands to stick together and become entangled.
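
For reference, the Herschel-Bulkley model named above relates shear stress τ to shear rate γ̇ as τ = τ_y + K·γ̇ⁿ once the yield stress τ_y is exceeded (n < 1 is shear-thinning, n > 1 shear-thickening). The sketch evaluates the stress and the resulting effective viscosity τ/γ̇ in the flowing regime only; parameter values are illustrative, and the paper's analytic plastic flow integration scheme is not reproduced.

```python
def hb_stress(shear_rate: float, yield_stress: float,
              consistency: float, flow_index: float) -> float:
    """Herschel-Bulkley shear stress for a flowing material (shear_rate > 0)."""
    if shear_rate <= 0:
        raise ValueError("this sketch only covers the flowing regime (shear_rate > 0)")
    return yield_stress + consistency * shear_rate ** flow_index


def hb_effective_viscosity(shear_rate: float, yield_stress: float,
                           consistency: float, flow_index: float) -> float:
    """Apparent viscosity tau / shear_rate; it diverges as shear_rate -> 0 when tau_y > 0."""
    return hb_stress(shear_rate, yield_stress, consistency, flow_index) / shear_rate


# Shear-thinning example (n = 0.5): apparent viscosity drops as the shear rate rises.
print(hb_effective_viscosity(1.0, 0.0, 1.0, 0.5))
print(hb_effective_viscosity(10.0, 0.0, 1.0, 0.5))
```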

Cheng Zhang, Lifan Wu, Changxi Zheng, Ioannis Gkioulekas, Ravi Ramamoorthi, and Shuang Zhao

A Differential Theory of Radiative Transfer.

ACM Transactions on Graphics (SIGGRAPH Asia 2019)

Paper (PDF) Project Page Abstract

Physics-based differentiable rendering is the task of estimating the derivatives of radiometric measures with respect to scene parameters. The ability to compute these derivatives is necessary for enabling gradient-based optimization in a diverse array of applications: from solving analysis-by-synthesis problems to training machine learning pipelines incorporating forward rendering processes. Unfortunately, physics-based differentiable rendering remains challenging, due to the complex and typically nonlinear relation between pixel intensities and scene parameters.

We introduce a differential theory of radiative transfer, which shows how individual components of the radiative transfer equation (RTE) can be differentiated with respect to arbitrary differentiable changes of a scene. Our theory encompasses the same generality as the standard RTE, allowing differentiation while accurately handling a large range of light transport phenomena such as volumetric absorption and scattering, anisotropic phase functions, and heterogeneity. To numerically estimate the derivatives given by our theory, we introduce an unbiased Monte Carlo estimator supporting arbitrary surface and volumetric configurations. Our technique differentiates path contributions symbolically and uses additional boundary integrals to capture geometric discontinuities such as visibility changes.

We validate our method by comparing our derivative estimations to those generated using the finite-difference method. Furthermore, we use a few synthetic examples inspired by real-world applications in inverse rendering, non-line-of-sight (NLOS) and biomedical imaging, and design, to demonstrate the practical usefulness of our technique.
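
The validation described above compares derivative estimates against finite differences. A minimal sketch of that kind of check follows, using a toy scene of our own choosing (not one from the paper): Beer-Lambert transmittance through a homogeneous slab of thickness d, differentiated with respect to the extinction coefficient σ, where the exact derivative is known.

```python
import math
from typing import Callable


def central_difference(f: Callable[[float], float], x: float, h: float = 1e-5) -> float:
    """Second-order central finite-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


SLAB_THICKNESS = 2.0  # illustrative value of d


def transmittance(sigma: float) -> float:
    """Toy radiometric measure: T(sigma) = exp(-sigma * d)."""
    return math.exp(-sigma * SLAB_THICKNESS)


def transmittance_derivative(sigma: float) -> float:
    """Exact derivative dT/dsigma = -d * exp(-sigma * d)."""
    return -SLAB_THICKNESS * math.exp(-sigma * SLAB_THICKNESS)


sigma = 0.7
fd = central_difference(transmittance, sigma)
print(abs(fd - transmittance_derivative(sigma)))  # small: truncation plus round-off error
```

The step size h trades truncation error (which shrinks with h) against floating-point round-off (which grows as h shrinks), so finite differences serve as a reference check rather than a replacement for analytic derivatives.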

Zahra Montazeri, Chang Xiao, Yun (Raymond) Fei, Changxi Zheng, and Shuang Zhao

Mechanics-Aware Modeling of Cloth Appearance.

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2019

Paper (PDF) Abstract Video

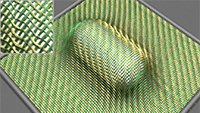

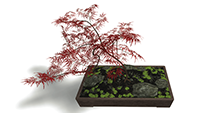

Micro-appearance models have brought unprecedented fidelity and details to cloth rendering. Yet, these models neglect fabric mechanics: when a piece of cloth interacts with the environment, its yarn and fiber arrangement usually changes in response to external contact and tension forces. Since subtle changes of a fabric's microstructures can greatly affect its macroscopic appearance, mechanics-driven appearance variation of fabrics has been a phenomenon that remains to be captured.

We introduce a mechanics-aware model that adapts the microstructures of cloth yarns in a physics-based manner. Our technique works on two distinct physical scales: using physics-based simulations of individual yarns, we capture the rearrangement of yarn-level structures in response to external forces. These yarn structures are further enriched to obtain appearance-driving fiber-level details. The cross-scale enrichment is made practical through a new parameter fitting algorithm for simulation and an augmented procedural yarn model coupled with a custom-designed regression neural network. We train the network using a dataset generated by joint simulations at both the yarn and the fiber levels. Through several examples, we demonstrate that our model is capable of synthesizing photorealistic cloth appearance in a mechanically plausible way.

Arun A. Nair, Austin Reiter, Changxi Zheng, and Shree K. Nayar

Audiovisual Zooming: What You See Is What You Hear.

ACM International Conference on Multimedia (ACMMM), 2019

(Best Paper Award)

Paper (PDF) Abstract Video

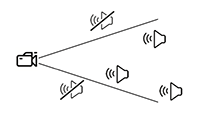

When capturing videos on a mobile platform, often the target of interest is contaminated by the surrounding environment. To alleviate the visual irrelevance, camera panning and zooming provide the means to isolate a desired field of view (FOV). However, the captured audio is still contaminated by signals outside the FOV. This effect is unnatural: for human perception, visual and auditory cues must go hand in hand.

We present the concept of Audiovisual Zooming, whereby an auditory FOV is formed to match the visual. Our framework is built around the classic idea of beamforming, a computational approach to enhancing sound from a single direction using a microphone array. Yet, beamforming on its own cannot incorporate the auditory FOV, as the FOV may include an arbitrary number of directional sources. We formulate our audiovisual zooming as a generalized eigenvalue problem and propose an algorithm for efficient computation on mobile platforms. To inform the algorithmic and physical implementation, we offer a theoretical analysis of our algorithmic components as well as numerical studies for understanding various design choices of microphone arrays. Finally, we demonstrate audiovisual zooming on two different mobile platforms: a mobile smartphone and a 360° spherical imaging system for video conference settings.
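
The abstract gives no equations. One common way a beamformer leads to a generalized eigenvalue problem, assumed here as an illustration rather than taken from the paper, is to choose weights w for an M-microphone array that maximize the ratio of power from directions inside the FOV (spatial covariance R_in) to power from directions outside it (covariance R_out). For Hermitian R_in and positive-definite R_out, this generalized Rayleigh quotient is maximized by the generalized eigenvector with the largest eigenvalue:

```latex
w^\star = \arg\max_{w \in \mathbb{C}^{M}}
  \frac{w^{\mathsf{H}} R_{\mathrm{in}}\, w}{w^{\mathsf{H}} R_{\mathrm{out}}\, w},
\qquad
R_{\mathrm{in}}\, w^\star = \lambda_{\max}\, R_{\mathrm{out}}\, w^\star .
```

Because R_in can aggregate every direction inside the FOV rather than a single steering vector, a formulation of this kind can accommodate an arbitrary number of in-FOV sources.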

Henrique Teles Maia, Dingzeyu Li, Yuan Yang, and Changxi Zheng

LayerCode: Optical Barcodes for 3D Printed Shapes.

ACM Transactions on Graphics (SIGGRAPH 2019)

Paper (PDF) Project Page Abstract Video

With the advance of personal and customized fabrication techniques, the capability to embed information in physical objects becomes ever more crucial. We present LayerCode, a tagging scheme that embeds a carefully designed barcode pattern in 3D printed objects as a deliberate byproduct of the 3D printing process. The LayerCode concept is inspired by the structural resemblance between the parallel black and white bars of the standard barcode and the universal layer-by-layer approach of 3D printing. We introduce an encoding algorithm that enables the 3D printing layers to carry information without altering the object geometry. We also introduce a decoding algorithm that reads the LayerCode tag of a physical object by just taking a photo. The physical deployment of LayerCode tags is realized on various types of 3D printers, including Fused Deposition Modeling printers as well as Stereolithography-based printers. Each offers its own advantages and tradeoffs. We show that LayerCode tags can work on complex, nontrivial shapes, on which all previous tagging mechanisms may fail. To evaluate LayerCode thoroughly, we further stress test it with a large dataset of complex shapes using virtual rendering. Among 4,835 tested shapes, we successfully encode and decode on more than 99% of the shapes.

Chang Xiao, Karl Bayer, Changxi Zheng, and Shree K. Nayar

Vidgets: Modular Mechanical Widgets for Mobile Devices.

ACM Transactions on Graphics (SIGGRAPH 2019)

Paper (PDF) Project Page Abstract Video

We present Vidgets, a family of mechanical widgets, specifically push buttons and rotary knobs, that augment mobile devices with tangible user interfaces. When these widgets are attached to a mobile device and a user interacts with them, the widgets' nonlinear mechanical response shifts the device slightly and quickly, and this subtle motion can be detected by the accelerometer commonly found in mobile devices. We propose a physics-based model to understand the nonlinear mechanical response of widgets. This understanding enables us to design tactile force profiles of these widgets so that the resulting accelerometer signals become easy to recognize. We then develop a lightweight signal processing algorithm that analyzes the accelerometer signals and recognizes how the user interacts with the widgets in real time. Vidgets widgets are low-cost, compact, reconfigurable, and power efficient. They can form a diverse set of physical interfaces that enrich users' interactions with mobile devices in various practical scenarios.

2018

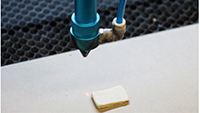

We study the application of laser-heating technology to browning dough, due to its potential for high-resolution spatial and surface color control. An important component of this process is identifying how laser parameters affect browning and baking and whether desirable results can be achieved. In this study, we analyze the performance of a carbon dioxide (CO2) mid-infrared laser (operating at a 10.6 micrometer wavelength) during the browning of dough. Dough samples, consisting of flour and water, were exposed to the infrared laser at different laser powers, beam diameters, and exposure times. At a laser energy flux of 0.32 MW m^{-2} (beam diameter of 5.7 mm) and a sample exposure time of 180 s, we observe a maximum thermal penetration of 0.77 mm and satisfactory dough browning. These results suggest that a CO2 laser is ideal for browning thin goods as well as for food layered manufacture.
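As a back-of-envelope check on the reported operating point, the sketch below converts the energy flux and beam diameter into beam power and the energy delivered over the 180 s exposure. It assumes a uniform (top-hat) flux over the stated beam diameter; a Gaussian beam with a 1/e² diameter would have a higher peak flux for the same power, so these numbers are illustrative, not values from the paper.

```python
import math

def beam_power(flux_w_per_m2, beam_diameter_m):
    """Power implied by a uniform (top-hat) flux over a circular spot."""
    area = math.pi * (beam_diameter_m / 2) ** 2
    return flux_w_per_m2 * area

power = beam_power(0.32e6, 5.7e-3)   # about 8.17 W
energy = power * 180.0               # about 1470 J over the 180 s exposure
```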

@article{blutinger2019characterization,

title={Characterization of CO2 laser browning of dough},

author={Blutinger, Jonathan David and Meijers, Yor{\'a}n and Chen, Peter Yichen and

Zheng, Changxi and Grinspun, Eitan and Lipson, Hod},

journal={Innovative Food Science and Emerging Technologies},

volume={52},

pages={145--157},

year={2019},

publisher={Elsevier}

}

Chang Xiao, Peilin Zhong, and Changxi Zheng

BourGAN: Generative Networks with Metric Embeddings.

Advances in Neural Information Processing Systems (NeurIPS), 2018

(Spotlight presentation)

Paper (PDF) Abstract Source Code Bibtex

This paper addresses mode collapse in generative adversarial networks (GANs). We view modes as a geometric structure of the data distribution in a metric space. Under this geometric lens, we embed subsamples of the dataset from an arbitrary metric space into the L2 space while preserving their pairwise distance distribution. This metric embedding not only determines the dimensionality of the latent space automatically but also enables us to construct a mixture of Gaussians from which latent-space random vectors are drawn. We use the Gaussian mixture model in tandem with a simple augmentation of the objective function to train GANs. Every major step of our method is supported by theoretical analysis, and our experiments on real and synthetic data confirm that the generator is able to produce samples spreading over most of the modes while avoiding unwanted samples, outperforming several recent GAN variants on a number of metrics and offering new features.
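The embedding step can be illustrated with a textbook Bourgain-style construction: each coordinate is the distance from a point to a random subset of the data, with subsets drawn at geometrically decreasing inclusion rates. Latent vectors are then drawn from an equal-weight Gaussian mixture centered at the embedded points. This is a simplified sketch under stated assumptions (no scaling or normalization of coordinates, a hypothetical fixed `sigma`, hypothetical function names); the paper's exact embedding and mixture parameters may differ.

```python
import numpy as np

def bourgain_embed(D, reps=None, rng=None):
    """Embed n points with pairwise distance matrix D into R^k (Bourgain-style).

    Each coordinate is the distance from a point to a random subset; subsets are
    drawn with inclusion probability 2^-i for i = 1..ceil(log2 n), `reps` times each.
    """
    rng = np.random.default_rng(rng)
    n = D.shape[0]
    levels = max(1, int(np.ceil(np.log2(n))))
    reps = reps or levels
    coords = []
    for i in range(1, levels + 1):
        for _ in range(reps):
            mask = rng.random(n) < 2.0 ** -i
            if not mask.any():                  # ensure a non-empty subset
                mask[rng.integers(n)] = True
            coords.append(D[:, mask].min(axis=1))
    return np.stack(coords, axis=1)

def sample_latent(centers, num, sigma=0.1, rng=None):
    """Draw latent vectors from an equal-weight Gaussian mixture at the embedded centers."""
    rng = np.random.default_rng(rng)
    idx = rng.integers(len(centers), size=num)
    return centers[idx] + sigma * rng.standard_normal((num, centers.shape[1]))
```

Because distance to a fixed set is 1-Lipschitz, every embedded coordinate changes by at most the original distance between two points, which is one reason this construction preserves the metric structure reasonably well.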

@incollection{Xiao18Bourgan,

title = {BourGAN: Generative Networks with Metric Embeddings},

author = {Xiao, Chang and Zhong, Peilin and Zheng, Changxi},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS) 31},

pages = {2275--2286},

year = {2018},

publisher = {Curran Associates, Inc.},

}

A data-driven model that predictively generates photorealistic RGB images of dough surface browning is proposed. This model was validated in a practical application using a CO2 laser dough-browning pipeline, confirming that it can be employed to characterize the visual appearance of browned samples, such as surface color and patterns. A supervised deep generative network takes laser speed, laser energy flux, and dough moisture as input and outputs a 64x64-pixel image of laser-browned dough. Image generation is achieved by nonlinearly interpolating high-dimensional training data. The proposed prediction framework contributes to the development of computer-aided design (CAD) software for food processing techniques by creating more accurate photorealistic models.

Ye Yuan, Changxi Zheng, and Stelian Coros

Computational Design of Transformables.

ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA), 2018

Paper (PDF) Abstract Video

We present a computational approach to designing transformables, physical characters that can shape-shift to take on vastly different forms. The design process begins with a morphological description of an input character and a target object that it should transform into. Guided by a set of objectives that model the core attributes of desirable transformable designs, the system interactively generates optimized embeddings. Intuitively, embeddings represent tightly folded character configurations that fit within the target object. From any feasible embedding, skin meshes are then generated for each body part of the character. The process for generating these 3D models is based on a segmentation of the target object, which is achieved through a growth-based model applied to a multiple level set representation of the transformable. A set of transformation-aware post-processing algorithms ensures the feasibility of the final designs. Building on this technical core, our computational design system provides many opportunities for users to inject their intuition and personal preferences into the process of creating transformables, while shielding them from tasks that are challenging and tedious. As a result, users can intuitively explore the vast space of design possibilities. We demonstrate the effectiveness of our computational approach by creating a variety of transformable designs, three of which we fabricate.

Dingzeyu Li, Timothy Langlois, and Changxi Zheng

Scene-Aware Audio for 360° Videos.

ACM Transactions on Graphics (SIGGRAPH 2018)

Paper (PDF) Project Page Abstract Video Bibtex

Although 360° cameras ease the capture of panoramic footage, it remains challenging to add realistic 360° audio that blends into the captured scene and is synchronized with the camera motion. We present a method for adding scene-aware spatial audio to 360° videos in typical indoor scenes, using only a conventional mono-channel microphone and a speaker. We observe that the late reverberation of a room's impulse response is usually diffuse, both spatially and directionally. Exploiting this fact, we propose a method that synthesizes the directional impulse response between any source and listening locations by combining a synthesized early reverberation part and a measured late reverberation tail. The early reverberation is simulated using a geometric acoustic simulation and then enhanced using a frequency modulation method to capture room resonances. The late reverberation is extracted from a recorded impulse response, with a carefully chosen time duration that separates the late reverberation from the early reverberation. In our validations, we show that our synthesized spatial audio closely matches recordings made with ambisonic microphones. Lastly, we demonstrate the strength of our method in several applications.
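The combination of a simulated early part with a measured late tail can be sketched as a splice at a mixing time, with a short crossfade so the joined impulse response has no discontinuity. The mixing time, the linear crossfade, and the function name below are assumptions for illustration; the abstract states only that the split time is carefully chosen, and the directional aspects of the method are not modeled here.

```python
import numpy as np

def splice_impulse_response(early, late, fs, mix_time_s, fade_s=0.005):
    """Join a synthesized early IR with a measured late tail at a mixing time.

    A short linear crossfade centered on `mix_time_s` avoids a discontinuity.
    Both inputs are zero-padded to a common length.
    """
    n = max(len(early), len(late))
    early = np.pad(early, (0, n - len(early)))
    late = np.pad(late, (0, n - len(late)))
    t = np.arange(n) / fs
    # weight for the late part: 0 before the fade window, ramps linearly to 1 after it
    g = np.clip((t - (mix_time_s - fade_s / 2)) / fade_s, 0.0, 1.0)
    return (1.0 - g) * early + g * late
```

In practice the mixing time would be chosen per room, for example after the early reflections have died down and the response has become diffuse.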

@article{Li2018360audio,

title={Scene-Aware Audio for 360\textdegree{} Videos},

author={Li, Dingzeyu and Langlois, Timothy R. and Zheng, Changxi},

journal={ACM Trans. Graph.},

volume={37},

number={4},

year={2018},

publisher = {ACM},

address = {New York, NY, USA},

}

Yun (Raymond) Fei, Christopher Batty, Eitan Grinspun, and Changxi Zheng

A Multi-Scale Model for Simulating Liquid-Fabric Interactions.

ACM Transactions on Graphics (SIGGRAPH 2018)

Paper (PDF) Project Page Abstract Video Bibtex

We propose a method for simulating the complex dynamics of partially and fully saturated woven and knit fabrics interacting with liquid, including the effects of buoyancy, nonlinear drag, pore (capillary) pressure, dripping, and convection-diffusion. Our model evolves the velocity fields of both the liquid and solid relying on mixture theory, as well as tracking a scalar saturation variable that affects the pore pressure forces in the fluid. We consider the porous microstructure implied by the fibers composing individual threads, and use it to derive homogenized drag and pore pressure models that faithfully reflect the anisotropy of fabrics. In addition to the bulk liquid and fabric motion, we derive a quasi-static flow model that accounts for liquid spreading within the fabric itself. Our implementation significantly extends standard numerical cloth and fluid models to support the diverse behaviors of wet fabric, and includes a numerical method tailored to cope with the challenging nonlinearities of the problem. We explore a range of fabric-water interactions to validate our model, including challenging animation scenarios involving splashing, wringing, and collisions with obstacles, along with qualitative comparisons against simple physical experiments.

@article{Fei2018MMS,

author = {Fei, Yun (Raymond) and Batty, Christopher and Grinspun, Eitan and Zheng, Changxi},

title = {A Multi-scale Model for Simulating Liquid-fabric Interactions},

journal = {ACM Trans. Graph.},

issue_date = {Aug 2018},

volume = {37},

number = {4},

month = aug,

year = {2018},

pages = {51:1--51:16},

articleno = {51},

numpages = {16},

publisher = {ACM},

address = {New York, NY, USA},

}

Gabriel Cirio, Ante Qu, George Drettakis, Eitan Grinspun, and Changxi Zheng

Multi-Scale Simulation of Nonlinear Thin-Shell Sound with Wave Turbulence.

ACM Transactions on Graphics (SIGGRAPH 2018)

Paper (PDF) Project Page Abstract Video Bibtex

Thin shells (solids that are thin in one dimension compared to the other two) often emit rich nonlinear sounds when struck. Strong excitations can even cause chaotic thin-shell vibrations, producing sounds whose energy spectrum diffuses from low to high frequencies over time, a phenomenon known as wave turbulence. These nonlinearities give shells such as cymbals and gongs their characteristic "glinting" sound. Yet simulation models that efficiently capture these sound effects remain elusive.

We propose a physically based, multi-scale reduced simulation method to synthesize nonlinear thin-shell sounds. We first split nonlinear vibrations into two scales, with a small low-frequency part simulated in a fully nonlinear way, and a high-frequency part containing many more modes approximated through time-varying linearization. This allows us to capture interesting nonlinearities in the shells' deformation, tens of times faster than previous approaches. Furthermore, we propose a method that enriches simulated sounds with wave turbulent sound details through a phenomenological diffusion model in the frequency domain, and thereby sidestep the expensive simulation of chaotic high-frequency dynamics. We show several examples of our simulations, illustrating the efficiency and realism of our model.
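The idea of energy spreading from low to high frequencies can be pictured with a toy model: a conservative, explicit finite-difference diffusion of spectral energy across frequency bins. This is only an illustration of "diffusion in the frequency domain" under stated assumptions (constant diffusivity, zero-flux boundaries, a hypothetical function name); it is not the phenomenological model from the paper.

```python
import numpy as np

def diffuse_spectrum(energy, diffusivity, dt, steps):
    """Explicit, energy-conserving diffusion of spectral energy across frequency bins.

    Zero-flux boundaries preserve the total energy; the scheme is stable and keeps
    energies non-negative if diffusivity * dt <= 0.5 (in units of bins^2).
    """
    E = np.asarray(energy, dtype=float).copy()
    for _ in range(steps):
        flux = -diffusivity * np.diff(E)   # net flux from bin k to bin k+1
        dE = np.zeros_like(E)
        dE[:-1] -= flux
        dE[1:] += flux
        E += dt * dE
    return E
```

Starting from energy concentrated in the lowest bins, repeated steps move the spectral centroid upward while the total energy stays fixed, which mimics the qualitative cascade described above.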

@article{Cirio2018MSN,

author = {Cirio, Gabriel and Qu, Ante and Drettakis, George and Grinspun, Eitan and Zheng, Changxi},

title = {Multi-scale Simulation of Nonlinear Thin-shell Sound with Wave Turbulence},

journal = {ACM Trans. Graph.},

volume = {37},

number = {4},

month = jul,

year = {2018},

pages = {110:1--110:14},

articleno = {110},

numpages = {14},

url = {http://www.cs.columbia.edu/cg/waveturb/},

address = {New York, NY, USA},

}

Chang Xiao, Cheng Zhang, and Changxi Zheng

FontCode: Embedding Information in Text Documents using Glyph Perturbation.

ACM Transactions on Graphics, 2018 (Presented at SIGGRAPH 2018)

Paper (PDF) Project Page Abstract Video Bibtex

We introduce FontCode, an information embedding technique for text documents. Given a text document with specific fonts, our method embeds user-specified information in the text by perturbing the glyphs of text characters while preserving the text content. We devise an algorithm to choose unobtrusive yet machine-recognizable glyph perturbations, leveraging a recently developed generative model that alters the glyphs of each character continuously on a font manifold. We then introduce an algorithm that embeds a user-provided message in the text document and produces an encoded document whose appearance is minimally perturbed from the original document. We also present a glyph recognition method that recovers the embedded information from an encoded document stored as a vector graphic or pixel image, or even printed on paper. In addition, we introduce a new error-correction coding scheme that rectifies a certain number of recognition errors. Lastly, we demonstrate that our technique enables a wide array of applications, using it as a text document metadata holder, an unobtrusive optical barcode, a cryptographic message embedding scheme, and a text document signature.
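One simple way to picture how a message maps onto glyph choices: if each character can be rendered with one of `k` distinguishable perturbations, a message becomes a sequence of base-`k` digits, one digit per character. The sketch below assumes a uniform `k` for every character and omits the paper's error-correction scheme entirely; the function names are hypothetical.

```python
def message_to_digits(message: bytes, k: int, length: int) -> list[int]:
    """Write a byte string as `length` base-k digits (one glyph choice per character)."""
    value = int.from_bytes(message, "big")
    digits = []
    for _ in range(length):
        value, d = divmod(value, k)
        digits.append(d)
    if value:
        raise ValueError("message does not fit in the available characters")
    return digits[::-1]          # most significant digit first

def digits_to_message(digits: list[int], k: int, num_bytes: int) -> bytes:
    """Inverse of message_to_digits, given the original message length in bytes."""
    value = 0
    for d in digits:
        value = value * k + d
    return value.to_bytes(num_bytes, "big")
```

With `k = 5`, for example, ten characters can carry any value below 5^10, enough for a two-byte message; recognition errors on individual glyphs would corrupt digits, which is what an error-correcting code is needed for.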

@article{Xiao2018FEI,

author = {Xiao, Chang and Zhang, Cheng and Zheng, Changxi},

title = {FontCode: Embedding Information in Text Documents Using Glyph Perturbation},

journal = {ACM Trans. Graph.},

issue_date = {May 2018},

volume = {37},

number = {2},

month = feb,

year = {2018},

pages = {15:1--15:16},

articleno = {15},

numpages = {16},

doi = {10.1145/3152823},

}

We report a high-efficiency dielectric metasurface with continuous and arbitrary control of both amplitude and phase of one or two colors simultaneously. We numerically and experimentally demonstrate 2D and 3D holograms using such metasurfaces.

Zhao Tian, Yu-Lin Wei, Wei-Nin Chang, Xi Xiong, Changxi Zheng, Hsin-Mu Tsai, Kate Ching-Ju Lin, and Xia Zhou

Augmenting Indoor Inertial Tracking with Polarized Light.

Conference on Mobile Systems, Applications, and Services (MobiSys), 2018

Paper (PDF) Abstract Bibtex

Inertial measurement units (IMUs) have long suffered from integration drift, where sensor noise accumulates quickly and causes fast-growing tracking errors. Existing methods for calibrating IMU tracking either require a human in the loop, need energy-consuming cameras, or suffer from coarse tracking granularity. We propose to augment indoor inertial tracking by reusing existing indoor luminaires to project a static light polarization pattern into the space. This pattern is imperceptible to human eyes, yet through a polarizer it becomes detectable by a color sensor, and thus can serve as fine-grained optical landmarks that constrain and correct IMU integration drift and boost tracking accuracy. Exploiting the birefringence of transparent tape, a low-cost and easily accessible material, we realize the polarization pattern by simply adding to the existing light cover a thin polarizer film with transparent tape stripes glued atop. When fused with IMU sensor signals, the light pattern enables robust, accurate, and low-power motion tracking. Meanwhile, our approach entails low deployment overhead by reusing existing lighting infrastructure without needing an active modulation unit. We build a prototype of our light cover and the sensing unit using off-the-shelf components. Experiments show a 4.3 cm median error for 2D tracking and 10 cm for 3D tracking, as well as robustness in diverse settings.
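Why optical landmarks help can be seen in one dimension: double-integrating a biased accelerometer makes position error grow quadratically with time, while periodic absolute position fixes bound that error. The sketch below uses a hard position reset at each fix as a deliberate simplification; the paper fuses the light landmarks with IMU signals, and a real system would more likely use a filter (e.g., a Kalman filter). The function name and the landmark format are hypothetical.

```python
import numpy as np

def track_1d(accel, dt, landmarks=None):
    """Dead-reckon position by double integration; snap to landmark fixes when available.

    `landmarks` maps a time-step index to a known absolute position (an optical fix).
    Velocity is not corrected, so drift still accumulates between fixes.
    """
    landmarks = landmarks or {}
    pos, vel = 0.0, 0.0
    out = []
    for i, a in enumerate(accel):
        vel += a * dt
        pos += vel * dt
        if i in landmarks:       # hard reset; a real system would fuse the fix instead
            pos = landmarks[i]
        out.append(pos)
    return np.array(out)
```

For a stationary device with a 0.01 m/s² accelerometer bias sampled at 100 Hz, free integration drifts about 0.5 m in 10 s, whereas a fix every second keeps the error roughly an order of magnitude smaller.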

@inproceedings{Tian2018AII,

author = {Tian, Zhao and Wei, Yu-Lin and Chang, Wei-Nin and Xiong, Xi and Zheng, Changxi and

Tsai, Hsin-Mu and Lin, Kate Ching-Ju and Zhou, Xia},

title = {Augmenting Indoor Inertial Tracking with Polarized Light},

booktitle = {Proceedings of the 16th Annual International Conference on Mobile Systems,

Applications, and Services},

series = {MobiSys '18},

year = {2018},

numpages = {14},

publisher = {ACM},

address = {New York, NY, USA},

}

The depth of heat penetration and the temperature must be precisely controlled to optimize the nutritional value, appearance, and taste of food products. These objectives can be achieved with a high-resolution blue diode laser, operating at 445 nm, by adjusting the water content of the dough and the exposure pattern of the laser. Using our laser, we successfully cooked a 1 mm thick dough sample with a 5 mm diameter ring-shaped cooking pattern, 120 repetitions, a speed of 4000 mm/min, and 2 W of laser power. Heat penetration in dough products is significantly higher with a blue laser than with an infrared laser. Coupling a blue laser with an infrared laser yields the best cooking conditions for food layered manufacture.
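The reported cooking pattern implies a total exposure time and delivered energy that can be worked out directly. The sketch assumes the laser traces the 5 mm diameter ring along its centerline at constant speed, stays on continuously, and ignores spot size; these are illustrative estimates, not figures from the paper.

```python
import math

def ring_exposure(diameter_mm, speed_mm_per_min, repetitions, power_w):
    """Time (s) and energy (J) for a laser tracing a circular path repeatedly at constant speed."""
    path_mm = math.pi * diameter_mm * repetitions
    time_s = path_mm / (speed_mm_per_min / 60.0)
    return time_s, power_w * time_s

t, e = ring_exposure(5.0, 4000.0, 120, 2.0)   # about 28.3 s and 56.5 J
```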

2017

Zhao Tian, Yu-Lin Wei, Xi Xiong, Wei-Nin Chang, Hsin-Mu Tsai, Kate Ching-Ju Lin, Changxi Zheng, and Xia Zhou

Position: Augmenting Inertial Tracking with Light.

Visible Light Communication Systems (VLCS), 2017

Paper (PDF) Bibtex

@inproceedings{Tian:2017:PAI,

author = {Tian, Zhao and Wei, Yu-Lin and Xiong, Xi and Chang, Wei-Nin and Tsai, Hsin-Mu and Lin,

Kate Ching-Ju and Zheng, Changxi and Zhou, Xia},

title = {Position: Augmenting Inertial Tracking with Light},

booktitle = {Proceedings of the 4th ACM Workshop on Visible Light Communication Systems},

series = {VLCS '17},

year = {2017},

location = {Snowbird, Utah, USA},

pages = {25--26},

numpages = {2},

publisher = {ACM}

}

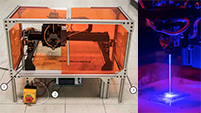

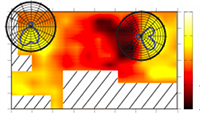

Xi Xiong, Justin Chan, Ethan Yu, Nisha Kumari, Ardalan Amiri Sani, Changxi Zheng, and Xia Zhou

Customizing Indoor Wireless Coverage via a 3D-Fabricated Reflector.

ACM Systems for Energy-Efficient Built Environments (BuildSys), 2017

Paper (PDF) Project Page Abstract Video

Judicious control of indoor wireless coverage is crucial in built environments. It enhances signal reception, reduces harmful interference, and raises the barrier for malicious attackers. Existing methods are either costly, vulnerable to attacks, or hard to configure. We present a low-cost, secure, and easy-to-configure approach that uses an easily accessible, 3D-fabricated reflector to customize wireless coverage. Given a coarse-grained description of the environment and the preferred coverage (e.g., areas where signals should be strengthened or weakened), the system computes an optimized reflector shape tailored to the given environment. The user simply 3D prints the reflector and places it around a Wi-Fi access point to realize the target coverage. We conduct experiments to examine the efficacy and limits of optimized reflectors in different indoor settings. Results show that optimized reflectors coexist with a variety of Wi-Fi APs and correctly weaken or enhance signals in target areas by up to 10 or 6 dB, resulting in throughput changes of up to -63.3% or +55.1%.
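To put the reported signal changes in linear terms, the short conversion below turns decibels into received-power ratios using the standard definition ratio = 10^(dB/10).

```python
def db_to_power_ratio(db):
    """Convert a change in decibels to a linear power ratio."""
    return 10 ** (db / 10)

weaken = db_to_power_ratio(-10)   # 0.1: received power cut to one tenth
enhance = db_to_power_ratio(6)    # about 3.98: roughly four times the power
```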

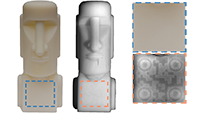

Dingzeyu Li, Avinash S. Nair, Shree K. Nayar, and Changxi Zheng

AirCode: Unobtrusive Physical Tags for Digital Fabrication.

ACM User Interface Software and Technology (UIST), Oct. 2017

(Best Paper Award)

Paper (PDF) Project Page Abstract Video Bibtex

We present AirCode, a technique that allows the user to tag physically fabricated objects with given information. An AirCode tag consists of a group of carefully designed air pockets placed beneath the object surface. These air pockets are easily produced during the fabrication process of the object, without any additional material or postprocessing. Meanwhile, the air pockets affect only the light transport scattered beneath the surface, and thus are hard to notice with the naked eye. With a computational imaging method, however, the tags become detectable. We present a tool that automates the design of air pockets for the user to encode information. The AirCode system also allows the user to retrieve the information from captured images via a robust decoding algorithm. We demonstrate our tagging technique with applications for metadata embedding, robotic grasping, and conveying object affordances.

@inproceedings{Li2017AUP,

author = {Li, Dingzeyu and Nair, Avinash S. and Nayar, Shree K. and Zheng, Changxi},

title = {AirCode: Unobtrusive Physical Tags for Digital Fabrication},

booktitle = {Proceedings of the 30th Annual ACM Symposium on User Interface Software

and Technology},

series = {UIST '17},

year = {2017},

pages = {449--460},

publisher = {ACM},

address = {New York, NY, USA},

}

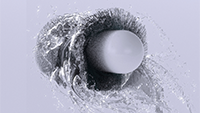

Yun (Raymond) Fei, Henrique Teles Maia, Christopher Batty, Changxi Zheng, and Eitan Grinspun

A Multi-Scale Model for Simulating Liquid-Hair Interactions.

ACM Transactions on Graphics (SIGGRAPH 2017), 36(4)

Paper (PDF) Project Page Abstract Video Bibtex

The diverse interactions between hair and liquid are complex and span multiple length scales, yet are central to the appearance of humans and animals in many situations. We therefore propose a novel multi-component simulation framework that treats many of the key physical mechanisms governing the dynamics of wet hair. The foundations of our approach are a discrete rod model for hair and a particle-in-cell model for fluids. To treat the thin layer of liquid that clings to the hair, we augment each hair strand with a height field representation. Our contribution is to develop the necessary physical and numerical models to evolve this new system and the interactions among its components. We develop a new reduced-dimensional liquid model to solve the motion of the liquid along the length of each hair, while accounting for its moving reference frame and influence on the hair dynamics. We derive a faithful model for surface tension-induced cohesion effects between adjacent hairs, based on the geometry of the liquid bridges that connect them. We adopt an empirically-validated drag model to treat the effects of coarse-scale interactions between hair and surrounding fluid, and propose new volume-conserving dripping and absorption strategies to transfer liquid between the reduced and particle-in-cell liquid representations. The synthesis of these techniques yields an effective wet hair simulator, which we use to animate hair flipping, an animal shaking itself dry, a spinning car wash roller brush dunked in liquid, and intricate hair coalescence effects, among several additional scenarios.

@article{Fei:2017:liquidhair,

title={A Multi-Scale Model for Simulating Liquid-Hair Interactions},

author={Fei, Yun (Raymond) and Maia, Henrique Teles and Batty, Christopher and Zheng, Changxi

and Grinspun, Eitan},

journal={ACM Trans. Graph.},

volume={36},

number={4},

year={2017},

}

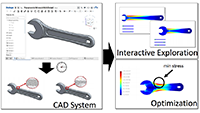

Adriana Schulz, Jie Xu, Bo Zhu, Changxi Zheng, Eitan Grinspun, and Wojciech Matusik

Interactive Design Space Exploration and Optimization for CAD Models.

ACM Transactions on Graphics (SIGGRAPH 2017), 36(4)

Paper (PDF) Project Page Abstract Video Bibtex

Computer-Aided Design (CAD) is a multibillion-dollar industry used by almost every mechanical engineer in the world to create practically every existing manufactured shape. CAD models are not only widely available but also extremely useful in the growing field of fabrication-oriented design because they are parametric by construction and capture the engineer's design intent, including manufacturability. Harnessing this data, however, is challenging, because generating the geometry for a given parameter value requires time-consuming computations. Furthermore, the resulting meshes have different combinatorics, making the mesh data inherently discontinuous with respect to parameter adjustments. In our work, we address these challenges and develop tools that allow interactive exploration and optimization of parametric CAD data. To achieve interactive rates, we use precomputation on an adaptively sampled grid and propose a novel scheme for interpolating in this domain where each sample is a mesh with different combinatorics. Specifically, we extract partial correspondences from CAD representations for local mesh morphing and propose a novel interpolation method for adaptive grids that is both continuous/smooth and local (i.e., the influence of each sample is constrained to the local regions where mesh morphing can be computed). We show examples of how our method can be used to interactively visualize and optimize objects with a variety of physical properties.

@article{Schulz:2017,

author = {Schulz, Adriana and Xu, Jie and Zhu, Bo and Zheng, Changxi

and Grinspun, Eitan and Matusik, Wojciech},

title = {Interactive Design Space Exploration and Optimization for CAD Models},

journal = {ACM Transactions on Graphics},

year = {2017},

month = jul,

volume = {36},

number = {4},

}

We report a high-efficiency dielectric metasurface with continuous and arbitrary control of both amplitude and phase. We experimentally demonstrated the advantages of complete wavefront control by comparing amplitude-phase modulation metasurface holograms to phase-only metasurface holograms.

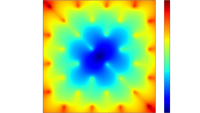

Shuang Zhao, Frédo Durand, and Changxi Zheng

Inverse Diffusion Curves using Shape Optimization.

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2017

Paper (PDF) Abstract Video Bibtex

The inverse diffusion curve problem concerns the automatic creation of diffusion curve images that resemble user-provided color fields. This problem is challenging because the 1D curves have a nonlinear and global impact on the resulting color fields via a partial differential equation (PDE). We introduce a new approach, complementary to previous methods, that optimizes curve geometry. In particular, we propose a novel iterative algorithm based on the theory of shape derivatives. The resulting diffusion curves are clean and well-shaped, and the final image closely approximates the input. Our method provides a user-controlled parameter to regularize curve complexity, and generalizes to handle input color fields represented in a variety of formats.
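The global coupling mentioned above comes from the forward problem: a diffusion curve image is obtained by solving Laplace's equation with the curve colors held fixed, so moving any curve changes colors everywhere. The sketch below shows that forward rendering with plain Jacobi iteration on a pixel grid. It is a simplification under stated assumptions (a single color channel, constraint values stored directly at pinned pixels rather than on the two sides of each curve, zero-gradient image borders, a hypothetical function name) and does not implement the paper's inverse shape optimization.

```python
import numpy as np

def render_diffusion(colors, fixed, iters=2000):
    """Fill an image by solving Laplace's equation with pinned pixels (Jacobi iteration).

    colors: H x W array holding constraint values where `fixed` is True.
    Image borders use replicated (zero-gradient) boundaries.
    """
    u = np.where(fixed, colors, colors[fixed].mean())   # initial guess for free pixels
    for _ in range(iters):
        p = np.pad(u, 1, mode="edge")
        avg = 0.25 * (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:])
        u = np.where(fixed, colors, avg)                 # re-impose the constraints
    return u
```

Pinning the left column to 0 and the right column to 1, for instance, converges to a linear horizontal ramp. Production renderers typically use multigrid or other fast Poisson solvers instead of Jacobi iteration.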

@article{Zhao:2017:IDC,

title={Inverse Diffusion Curves using Shape Optimization},

author={Shuang Zhao and Fredo Durand and Changxi Zheng},

journal={IEEE Trans Vis Comput Graph. (TVCG)},

volume={PP},

number={99},

year={2017},

}

2016

Xiang Chen, Changxi Zheng, and Kun Zhou

Example-Based Subspace Stress Analysis for Interactive Shape Design.

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2016

Paper (PDF) Abstract Video Bibtex

Stress analysis is a crucial tool for designing structurally sound shapes. However, its high computational cost has hampered its use in interactive shape editing tasks. We augment existing example-based shape editing tools and propose a fast subspace stress analysis method to enable stress-aware shape editing. In particular, we construct a reduced stress basis from a small set of shape exemplars and possible external forces. This stress basis is automatically adapted on the fly to the shape currently being edited by the user, and thereby offers reliable stress estimation. We then introduce a new finite element discretization scheme that uses the reduced basis for fast stress analysis. Our method runs up to two orders of magnitude faster than full-space finite element analysis, with average L2 estimation errors below 2% and maximum L2 errors below 6%. Furthermore, we build an interactive stress-aware shape editing tool to demonstrate its performance in practice.
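The general principle behind subspace analysis can be sketched with a generic model-reduction recipe: build an orthonormal basis from exemplar solutions (proper orthogonal decomposition via the SVD), then solve a small Galerkin-projected system instead of the full one. This is a textbook technique named plainly here, not the authors' stress basis or discretization; it assumes a linear elasticity system `K u = f` and omits the on-the-fly adaptation to the edited shape. Function names are hypothetical.

```python
import numpy as np

def pod_basis(snapshots, k):
    """Orthonormal basis from the k leading left singular vectors of the snapshot matrix."""
    U, _, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :k]

def reduced_solve(K, f, B):
    """Galerkin projection: solve (B^T K B) q = B^T f and return the full-space B q."""
    q = np.linalg.solve(B.T @ K @ B, B.T @ f)
    return B @ q
```

The reduced system is only k x k, which is where the speedup comes from; when the true solution lies in the span of the exemplars, the reduced solve reproduces it exactly, and otherwise it returns the best approximation in the energy norm of `K`.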

@article{Chen:2016:stress,

title={Example-Based Subspace Stress Analysis for Interactive Shape Design},

author={Xiang Chen and Changxi Zheng and Kun Zhou},

journal={IEEE Trans Vis Comput Graph. (TVCG)},

volume={PP},

number={99},

year={2016},

}

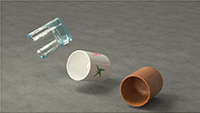

Gabriel Cirio, Dingzeyu Li, Eitan Grinspun, Miguel A. Otaduy, and Changxi Zheng

Crumpling Sound Synthesis.

ACM Transactions on Graphics (SIGGRAPH Asia 2016), 35(6)

Paper (PDF) Project Page Abstract Video Bibtex

Crumpling a thin sheet produces a characteristic sound, composed of distinct clicking sounds corresponding to buckling events. We propose a physically based algorithm that automatically synthesizes crumpling sounds for a given thin shell animation. The resulting sound is a superposition of individually synthesized clicking sounds corresponding to visually significant and visually insignificant buckling events. We identify visually significant buckling events on the dynamically evolving thin surface mesh, and instantiate visually insignificant buckling events via a stochastic model that seeks to mimic the power-law distribution of buckling energies observed in many materials.

In either case, the synthesis of a buckling sound employs linear modal analysis of the deformed thin shell. Because different buckling events in general occur at different deformed configurations, the question arises whether the calculation of linear modes can be reused. We amortize the cost of the linear modal analysis by dynamically partitioning the mesh into nearly rigid pieces: the modal analysis of a rigidly moving piece is retained over time, and the modal analysis of the assembly is obtained via Component Mode Synthesis (CMS). We illustrate our approach through a series of examples and a perceptual user study, demonstrating the utility of the sound synthesis method in producing realistic sounds at practical computation times.
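Two generic building blocks mentioned in this abstract can be sketched in isolation: inverse-transform sampling of event energies from a power law p(E) ∝ E^(-α) on E ≥ E_min, and modal sound synthesis, where each vibration mode (frequency f_i from the eigenproblem K u = ω² M u) rings as an exponentially damped sinusoid. This is a minimal sketch under stated assumptions: the exponent `alpha`, cutoff `e_min`, mode frequencies, damping rates, gains, and the sqrt(energy) amplitude scaling are all hypothetical, and neither the paper's buckling detection nor its Component Mode Synthesis is reproduced.

```python
import math
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Mode:
    freq_hz: float    # f_i = omega_i / (2*pi), from K u = omega^2 M u (assumed precomputed)
    damping: float    # exponential decay rate d_i in 1/s (hypothetical value)
    amplitude: float  # modal gain a_i (hypothetical value)


def sample_power_law(n: int, alpha: float, e_min: float, rng: random.Random) -> List[float]:
    """Draw n energies from p(E) ~ E**(-alpha), E >= e_min, by inverse-transform sampling.

    CDF: F(E) = 1 - (E / e_min)**(1 - alpha), so E = e_min * (1 - u)**(-1 / (alpha - 1)).
    """
    if alpha <= 1.0 or e_min <= 0.0:
        raise ValueError("need alpha > 1 and e_min > 0 for a normalizable power law")
    # rng.random() is in [0, 1), so 1 - u is in (0, 1] and the power stays finite
    return [e_min * (1.0 - rng.random()) ** (-1.0 / (alpha - 1.0)) for _ in range(n)]


def synthesize_click(modes: List[Mode], duration_s: float,
                     sample_rate: int = 44100, gain: float = 1.0) -> List[float]:
    """Sum of exponentially damped sinusoids, one per vibration mode."""
    n = int(duration_s * sample_rate)
    out = [0.0] * n
    for m in modes:
        w = 2.0 * math.pi * m.freq_hz
        for k in range(n):
            t = k / sample_rate
            out[k] += gain * m.amplitude * math.exp(-m.damping * t) * math.sin(w * t)
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    modes = [Mode(1200.0, 40.0, 1.0), Mode(3100.0, 90.0, 0.5)]
    for energy in sample_power_law(3, alpha=2.5, e_min=1e-3, rng=rng):
        # hypothetical choice: click amplitude scales with sqrt(energy)
        click = synthesize_click(modes, duration_s=0.02, gain=math.sqrt(energy))
        print(f"E={energy:.2e}  peak={max(abs(s) for s in click):.3f}")
```

The power-law sampler produces many weak events and few strong ones, which is the qualitative behavior the stochastic model targets; the median of this distribution is e_min · 2^(1/(α-1)).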

@article{Cirio:2016:crumpling_sound_synthesis,

title={Crumpling Sound Synthesis},

author={Cirio, Gabriel and Li, Dingzeyu and Grinspun, Eitan and Otaduy, Miguel A. and Zheng, Changxi},

journal={ACM Trans. Graph.},

volume={35},

number={6},

year={2016},

url = {http://www.cs.columbia.edu/cg/crumpling/}

}

Tianjia Shao, Dongping Li, Yuliang Rong, Changxi Zheng, and Kun Zhou

Dynamic Furniture Modeling Through Assembly Instructions.

ACM Transactions on Graphics (SIGGRAPH Asia 2016), 35(6)

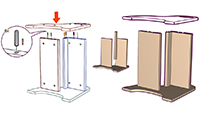

We present a technique for parsing widely used furniture assembly instructions and reconstructing the 3D models of furniture components and their dynamic assembly process. Our technique takes as input a multi-step assembly instruction in a vector graphic format and first groups the vector graphic primitives into semantic elements representing individual furniture parts, mechanical connectors (e.g., screws, bolts, and hinges), arrows, visual highlights, and numbers. To reconstruct the dynamic assembly process depicted over multiple steps, our system identifies previously built 3D furniture components when parsing a new step, and uses them to address the challenge of occlusions while generating new 3D components incrementally. With a wide range of examples covering a variety of furniture types, we demonstrate the use of our system to animate the 3D furniture assembly process and, beyond that, to support semantic-aware furniture editing and the fabrication of personalized furniture.

@article{Shao:2016:furnitures,

title={Dynamic Furniture Modeling Through Assembly Instructions},

author={Tianjia Shao and Dongping Li and Yuliang Rong and Changxi Zheng and Kun Zhou},

journal = {ACM Transactions on Graphics (SIGGRAPH Asia 2016)},

volume={35},

number={6},

year={2016}

}

Dingzeyu Li, David I.W. Levin, Wojciech Matusik, and Changxi Zheng

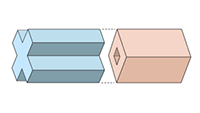

Acoustic Voxels: Computational Optimization of Modular Acoustic Filters.

ACM Transactions on Graphics (SIGGRAPH 2016), 35(4)

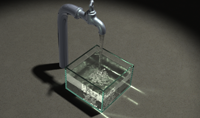

Acoustic filters have a wide range of applications, yet customizing them with desired properties is difficult. Motivated by recent progress in additive manufacturing that allows for fast prototyping of complex shapes, we present a computational approach that automates the design of acoustic filters with complex geometries. In our approach, we construct an acoustic filter composed of a set of parameterized shape primitives, whose transmission matrices can be precomputed. Using an efficient method of simulating the transmission matrix of an assembly built from these underlying primitives, our method is able to optimize both the arrangement and the parameters of the acoustic shape primitives in order to satisfy target acoustic properties of the filter. We validate our results against industrial laboratory measurements and high-quality off-line simulations. We demonstrate that our method enables a wide range of applications including muffler design, musical wind instrument prototyping, and encoding imperceptible acoustic information into everyday objects.
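The idea of composing precomputed transmission matrices can be illustrated with the textbook one-dimensional plane-wave model: each straight duct segment has a 2×2 transfer matrix relating pressure and volume velocity at its two ends, an assembly is the product of its element matrices, and the transmission loss follows from the assembled matrix. This sketch is far simpler than the paper's precomputed 3D primitives; the air properties, function names, and the muffler dimensions are assumptions, and the loss formula assumes an anechoic termination with equal inlet and outlet pipe areas.

```python
import math
from typing import List

Matrix = List[List[complex]]

RHO = 1.21  # air density in kg/m^3 (assumed)
C = 343.0   # speed of sound in m/s (assumed)


def duct_matrix(length: float, area: float, freq: float) -> Matrix:
    """Plane-wave transfer matrix mapping (pressure, volume velocity) across a straight duct."""
    k = 2.0 * math.pi * freq / C  # wavenumber
    z = RHO * C / area            # characteristic acoustic impedance
    ckl, skl = math.cos(k * length), math.sin(k * length)
    return [[complex(ckl), 1j * z * skl],
            [1j * skl / z, complex(ckl)]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[a[i][0] * b[0][j] + a[i][1] * b[1][j] for j in range(2)] for i in range(2)]


def cascade(elements: List[Matrix]) -> Matrix:
    """Assemble a filter by multiplying element matrices in order from inlet to outlet."""
    total: Matrix = [[1 + 0j, 0j], [0j, 1 + 0j]]
    for m in elements:
        total = matmul(total, m)
    return total


def transmission_loss_db(t: Matrix, pipe_area: float) -> float:
    """Transmission loss with anechoic termination and equal inlet/outlet pipe areas."""
    z0 = RHO * C / pipe_area
    return 20.0 * math.log10(abs(t[0][0] + t[0][1] / z0 + z0 * t[1][0] + t[1][1]) / 2.0)


if __name__ == "__main__":
    pipe, chamber = 0.002, 0.02  # cross-sections in m^2 (hypothetical muffler)
    for f in (100.0, 300.0, 500.0):
        filt = cascade([duct_matrix(0.05, pipe, f),
                        duct_matrix(0.3, chamber, f),
                        duct_matrix(0.05, pipe, f)])
        print(f"{f:.0f} Hz: TL = {transmission_loss_db(filt, pipe):.2f} dB")
```

For a single expansion chamber with area ratio m and length L, the cascaded result matches the closed form TL = 10 log10(1 + ¼(m − 1/m)² sin²(kL)), which makes a convenient sanity check before moving to more complex primitives.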

@article{Li:2016:acoustic_voxels,

title={Acoustic Voxels: Computational Optimization of Modular Acoustic Filters},

author={Li, Dingzeyu and Levin, David I.W. and Matusik, Wojciech and Zheng, Changxi},

journal = {ACM Transactions on Graphics (SIGGRAPH 2016)},

volume={35},

number={4},

year={2016},

url = {http://www.cs.columbia.edu/cg/lego/}

}

Timothy R. Langlois, Changxi Zheng, and Doug L. James

Toward Animating Water with Complex Acoustic Bubbles.

ACM Transactions on Graphics (SIGGRAPH 2016), 35(4)

This paper explores methods for synthesizing physics-based bubble sounds directly from two-phase incompressible simulations of bubbly water flows. By tracking fluid-air interface geometry, we identify bubble geometry and topological changes due to splitting, merging and popping. A novel capacitance-based method is proposed that can estimate volume-mode bubble frequency changes due to bubble size, shape, and proximity to solid and air interfaces. Our acoustic transfer model is able to capture cavity resonance effects due to near-field geometry, and we also propose a fast precomputed bubble-plane model for cheap transfer evaluation. In addition, we consider a bubble forcing model that better accounts for bubble entrainment, splitting, and merging events, as well as a Helmholtz resonator model for bubble popping sounds. To overcome frequency bandwidth limitations associated with coarse resolution fluid grids, we simulate micro-bubbles in the audio domain using a power-law model of bubble populations. Finally, we present several detailed examples of audiovisual water simulations and physical experiments to validate our frequency model.
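A capacitance-based frequency estimate generalizes the classic Minnaert frequency of a spherical bubble, f₀ = (1/(2πr))·√(3γp₀/ρ). The sketch below computes Minnaert's formula and a capacitance form f = (1/2π)·√(4πγp₀C/(ρV)), which we assume here as the classical relation for non-spherical bubbles rather than quote from the paper, and checks that the two agree for a sphere, where the capacitance in length units is C = r. The physical constants are assumed values for air bubbles in water, and the paper's corrections for nearby solid and air interfaces are not modeled.

```python
import math

GAMMA = 1.4        # heat-capacity ratio of air, adiabatic (assumed)
P0 = 101325.0      # ambient pressure in Pa (assumed)
RHO_WATER = 998.0  # water density in kg/m^3 (assumed)


def minnaert_frequency(radius_m: float) -> float:
    """Volume-mode frequency (Hz) of a spherical bubble in unbounded liquid."""
    return math.sqrt(3.0 * GAMMA * P0 / RHO_WATER) / (2.0 * math.pi * radius_m)


def capacitance_frequency(capacitance_m: float, volume_m3: float) -> float:
    """Assumed capacitance form: f = sqrt(4*pi*gamma*p0*C / (rho*V)) / (2*pi).

    C is the electrostatic capacitance of the bubble shape in length units (C = r for a sphere).
    """
    omega_sq = 4.0 * math.pi * GAMMA * P0 * capacitance_m / (RHO_WATER * volume_m3)
    return math.sqrt(omega_sq) / (2.0 * math.pi)


if __name__ == "__main__":
    r = 1e-3  # 1 mm bubble radius
    sphere_volume = 4.0 / 3.0 * math.pi * r ** 3
    print(round(minnaert_frequency(r)), round(capacitance_frequency(r, sphere_volume)))
```

The inverse dependence on radius is why coarse fluid grids, which cannot resolve small bubbles, miss the high-frequency content that the abstract recovers with its micro-bubble model.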

@article{Langlois:2016:Bubbles,

author = {Timothy R. Langlois and Changxi Zheng and Doug L. James},

title = {Toward Animating Water with Complex Acoustic Bubbles},

journal = {ACM Transactions on Graphics (SIGGRAPH 2016)},

year = {2016},

volume = {35},

number = {4},

month = Jul,

url = {http://www.cs.cornell.edu/projects/Sound/bubbles}

}

Changxi Zheng, Timothy Sun and Xiang Chen

Deployable 3D Linkages with Collision Avoidance.

ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA), July, 2016 (Best Paper Award)

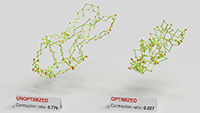

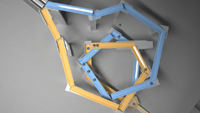

We present a pipeline that allows ordinary users to create deployable scissor linkages in arbitrary 3D shapes, with mechanisms inspired by Hoberman's Sphere. From an arbitrary 3D model and a few user inputs, our method generates a fabricable scissor linkage that resembles the shape and aims to save as much space as possible in its most contracted state. Self-collisions are the primary obstacle to this goal, and prior work does not address them. One key component of our algorithm is a succinct parameterization of these types of linkages. The fast continuous collision detection that arises from this parameterization serves as the foundation for the discontinuous optimization procedure that automatically improves joint placement to avoid collisions. While linkages are usually composed of straight bars, we consider curved bars as a means of improving contractibility. To that end, we describe a continuous optimization algorithm for locally deforming the bars.

@inproceedings{Zheng16:Deployable,

author = {Changxi Zheng and Timothy Sun and Xiang Chen},

title = {Deployable 3D Linkages with Collision Avoidance},

booktitle = {Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation},

series = {SCA '16},

year = {2016},

month = Jul,

url = {http://www.cs.columbia.edu/cg/deployable}

}

Menglei Chai, Changxi Zheng, and Kun Zhou

Adaptive Skinning for Interactive Hair-Solid Simulation.

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2016

Reduced hair models have proven successful for interactively simulating a full head of hair strands. They build upon a fundamental assumption: only a small set of guide hairs needs explicit simulation, while the rest of the hair moves coherently and thus can be interpolated from the guide hairs. Unfortunately, hair-solid interactions are a pathological case for traditional reduced hair models, as the motion coherence between hair strands can be arbitrarily broken by interacting with solids.

In this paper, we propose an adaptive hair skinning method for interactive hair simulation with hair-solid collisions. We precompute many eligible sets of guide hairs and the corresponding interpolation relationships that are represented using a compact strand-based hair skinning model. At runtime, we simulate only guide hairs; for interpolating every other hair, we adaptively choose its guide hairs, taking into account motion coherence and potential hair-solid collisions. Further, we introduce a two-way collision correction algorithm to allow sparsely sampled guide hairs to resolve collisions with solids that can have small geometric features. Our method enables interactive simulation of more than 150K hair strands interacting with complex solid objects, using 400 guide hairs. We demonstrate the efficiency and robustness of the method with various hairstyles and user-controlled arbitrary hair-solid interactions.
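The coherence assumption behind reduced hair models can be made concrete with their basic interpolation step: a non-guide strand follows a weighted average of the per-vertex displacements of its guide strands. This is a minimal sketch with hypothetical names and weights; it is a plain weighted blend, not the paper's strand-based skinning model, and it omits the adaptive, collision-aware choice of guide hairs. When a solid separates a strand from its guides, this fixed blend is precisely the step that fails.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Strand = List[Vec3]


def interpolate_strand(rest: Strand,
                       guide_rest: Dict[int, Strand],
                       guide_now: Dict[int, Strand],
                       weights: Dict[int, float]) -> Strand:
    """Move a non-guide strand by the weighted average of its guides' vertex displacements.

    Assumes all strands share the same vertex count and weights are non-negative.
    """
    total = sum(weights.values())
    if total <= 0.0:
        raise ValueError("weights must have a positive sum")
    out: Strand = []
    for v, (px, py, pz) in enumerate(rest):
        dx = dy = dz = 0.0
        for g, w in weights.items():
            (rx, ry, rz), (nx, ny, nz) = guide_rest[g][v], guide_now[g][v]
            dx += w * (nx - rx)
            dy += w * (ny - ry)
            dz += w * (nz - rz)
        out.append((px + dx / total, py + dy / total, pz + dz / total))
    return out


if __name__ == "__main__":
    guide_rest = {0: [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)],
                  1: [(1.0, 0.0, 0.0), (1.0, -1.0, 0.0)]}
    # guide 0 swings +0.2 in x at its tip; guide 1 stays put
    guide_now = {0: [(0.0, 0.0, 0.0), (0.2, -1.0, 0.0)],
                 1: [(1.0, 0.0, 0.0), (1.0, -1.0, 0.0)]}
    strand = [(0.5, 0.0, 0.0), (0.5, -1.0, 0.0)]
    print(interpolate_strand(strand, guide_rest, guide_now, {0: 0.5, 1: 0.5}))
```

With equal weights the in-between strand's tip moves half as far as guide 0's tip; if an obstacle sat between the two guides, this blend would push the strand straight through it, which motivates choosing guides adaptively.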

@article{chai2016adaptive,

title={Adaptive Skinning for Interactive Hair-Solid Simulation},

author={Chai, Menglei and Zheng, Changxi and Zhou, Kun},

year={2016},

publisher={IEEE Trans Vis Comput Graph. (TVCG)}

}

2015

Gaurav Bharaj, David Levin, James Tompkin, Yun Fei, Hanspeter Pfister, Wojciech Matusik and Changxi Zheng

Computational Design of Metallophone Contact Sounds.

ACM Transactions on Graphics (SIGGRAPH Asia 2015), 34(6)
