Roxana Geambasu and Daniel Hsu Chosen for Google Faculty Research Awards Program

The award is given to faculty at top universities to support research that is relevant to Google’s products and services. The program is structured as a gift that funds a year of study for a graduate student.

Certified Robustness to Adversarial Examples with Differential Privacy

Principal Investigator: Roxana Geambasu, Computer Science Department

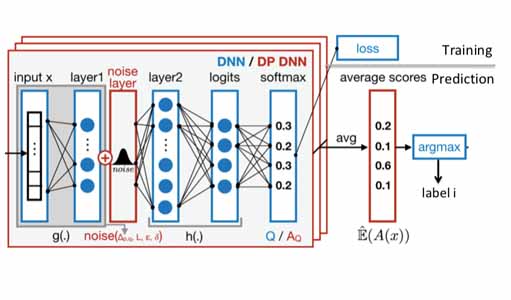

The proposal builds on PixelDP, Geambasu’s recent work on providing a “guaranteed” level of robustness for machine learning models against attackers who try to fool their predictions. PixelDP randomizes a model’s prediction so as to bound the maximum change an attacker can make to the probability of any label using only a small change to the image (measured in some norm).

Imagine that a building rolls out a face recognition-based authorization system. People are automatically recognized as they approach the door and are let into the building if they are labeled as someone with access to that building.

The face recognition system is most likely backed by a machine learning model, such as a deep neural network. These models have been shown to be extremely vulnerable to “adversarial examples,” where an adversary finds a very small change in their appearance that causes the models to classify them incorrectly – wearing a specific kind of hat or makeup can cause a face recognition model to misclassify even if the model would have been able to correctly classify without these “distractions.”

The bound the researchers enforce is then used to assess, for each prediction on an image, whether any attack up to a given norm size could have changed the prediction on that image. If no such attack could have changed it, the prediction is deemed “certifiably robust” against attacks up to that size.

A sample PixelDP DNN: original architecture in blue; the changes introduced by PixelDP in red.

This robustness certificate for an individual prediction is the key piece of functionality that their defense provides, and it can serve two purposes. First, a building authentication system can use it to decide whether a prediction is robust enough to rely on the face recognition model for an automated decision, or whether additional authentication is required. If the face recognition model cannot certify its prediction for a particular person, that person may be required to use their key to get into the building. Second, a model designer can use robustness certificates for predictions on a test set to obtain a lower bound on the model’s accuracy under attack. They can use this certified accuracy to compare model designs and choose the one that is most robust for deployment.
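The certification check and the certified accuracy described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers’ implementation: it assumes the bound takes the (ε, δ) form used in differential privacy, so that an attack within the norm budget can move an expected label score p to at most e^ε·p + δ and to no less than (p − δ)/e^ε. The function names, the parameters `eps` and `delta`, and the example scores are all hypothetical, and the scores are treated as exact expectations rather than estimates.

```python
import math

def is_certified(scores, eps, delta):
    """Return (predicted_label, certified) for one input.

    scores: expected per-label probabilities of the randomized model
            (assumed exact here; a real system would have to estimate them).
    eps, delta: parameters of the assumed (eps, delta)-style bound.
    """
    k = max(range(len(scores)), key=lambda i: scores[i])
    # Worst case for the predicted label: its score drops as far as the bound allows.
    lower_k = (scores[k] - delta) / math.exp(eps)
    # Worst case for every other label: its score rises as far as the bound allows.
    upper_rest = max(math.exp(eps) * s + delta
                     for i, s in enumerate(scores) if i != k)
    # Certified only if the predicted label still wins in the worst case.
    return k, lower_k > upper_rest

def certified_accuracy(all_scores, labels, eps, delta):
    """Fraction of test inputs whose prediction is both correct and certified."""
    hits = 0
    for scores, y in zip(all_scores, labels):
        k, ok = is_certified(scores, eps, delta)
        hits += int(k == y and ok)
    return hits / len(labels)

# Hypothetical scores for two test images, both with true label 0.
test_scores = [[0.90, 0.05, 0.05], [0.50, 0.45, 0.05]]
test_labels = [0, 0]
accuracy = certified_accuracy(test_scores, test_labels, eps=0.1, delta=0.01)
```

In this sketch the first prediction is certified because its winning margin survives the worst-case shift, while the second is correct but too close to call, which is the situation in which the building would fall back to a key.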

“Our defense is currently the most scalable defense that provides a formal guarantee of robustness to adversarial example attacks,” said Roxana Geambasu, principal investigator of the research project.

The project is joint work with Mathias Lecuyer, Daniel Hsu, and Suman Jana. It will develop new training and prediction algorithms for PixelDP models to increase certified accuracy for both small and large attacks. The Google funds will support PhD student Mathias Lecuyer, the primary author of PixelDP, to develop these directions and evaluate them on large networks in diverse domains.

The role of over-parameterization in solving non-convex problems

Principal Investigators: Daniel Hsu, Computer Science Department; Arian Maleki, Department of Statistics

One of the central computational tasks in data science is fitting statistical models to large and complex data sets. These models allow people to reason about the data and draw conclusions from it.

For example, such models have been used to discover communities in social network data and to uncover human ancestry structure from genetic data. To make accurate inferences, the model must fit the data well. This is a challenge because the predominant approach to fitting models to data requires solving complex optimization problems that are computationally intractable in the worst case.

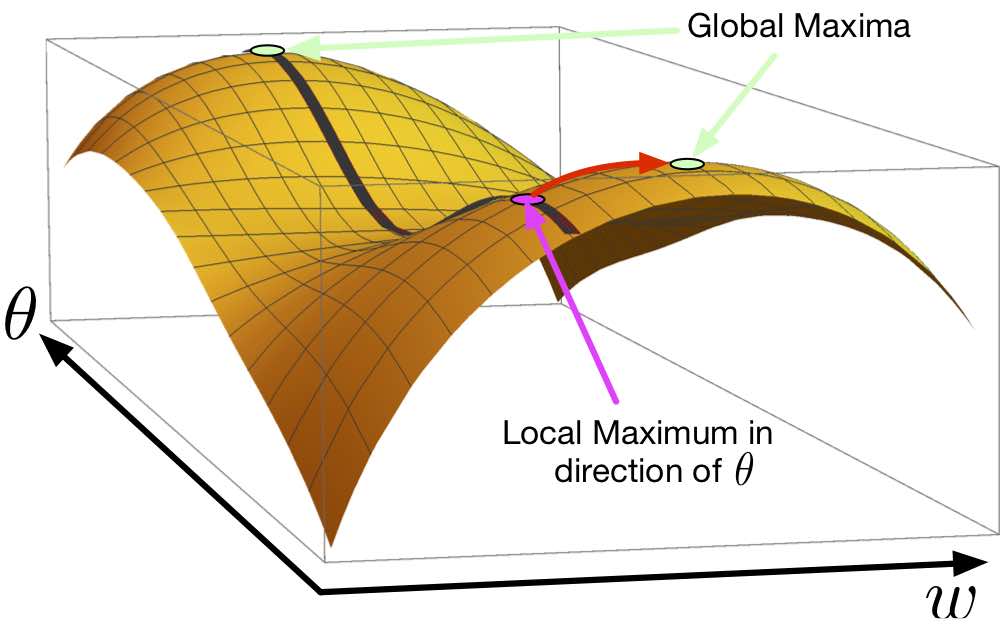

“Our research considers a surprising way to alleviate the computational burden, which is to ‘over-parameterize’ the statistical model,” said Daniel Hsu, one of the principal investigators. “By over-parameterization, we mean introducing additional ‘parameters’ to the statistical model that are unnecessary from the statistical point-of-view.”

One way to over-parameterize a model is to take some prior information about the data and instead treat it as a variable parameter to fit. For instance, in the social network case, the sizes of the communities to be discovered may already be known; the model can be over-parameterized by treating those sizes as parameters to be estimated. This over-parameterization would seem to make the model fitting task more difficult. However, the researchers proved that, for a particular statistical model called a Gaussian mixture model, over-parameterization can be computationally beneficial in a very strong sense.
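To make the idea concrete, here is a minimal sketch of over-parameterization in a Gaussian mixture model. It is an illustration under stated assumptions rather than the setting of the researchers’ theorem: it uses a one-dimensional mixture of two unit-variance Gaussians fitted with the standard expectation-maximization (EM) algorithm, and the function name, the `fit_weights` flag, and the synthetic data are all hypothetical. With `fit_weights=False` the known mixing weights are held fixed; with `fit_weights=True` they are treated as extra parameters to estimate.

```python
import math
import random

def em_two_gaussians(data, means, weights, fit_weights, iters=50):
    """EM for a 1-D mixture of two unit-variance Gaussians.

    fit_weights=False keeps the mixing weights at the values passed in;
    fit_weights=True is the over-parameterized variant that re-estimates them.
    """
    means, weights = list(means), list(weights)
    for _ in range(iters):
        # E-step: posterior probability that each point came from component 0.
        resp = []
        for x in data:
            a = weights[0] * math.exp(-0.5 * (x - means[0]) ** 2)
            b = weights[1] * math.exp(-0.5 * (x - means[1]) ** 2)
            resp.append(a / (a + b))
        # M-step: responsibility-weighted means.
        n0 = sum(resp)
        n1 = len(data) - n0
        means[0] = sum(r * x for r, x in zip(resp, data)) / n0
        means[1] = sum((1 - r) * x for r, x in zip(resp, data)) / n1
        if fit_weights:
            # Over-parameterized step: also update the mixing weights.
            weights = [n0 / len(data), n1 / len(data)]
    return means, weights

# Synthetic data: 70% of points centered at +2, 30% centered at -2.
rng = random.Random(0)
true_weights = [0.7, 0.3]
data = [rng.gauss(2.0 if rng.random() < 0.7 else -2.0, 1.0) for _ in range(2000)]

# Standard fit: weights known and held fixed.
fixed = em_two_gaussians(data, [1.0, -1.0], true_weights, fit_weights=False)
# Over-parameterized fit: weights start at a guess and are estimated.
free = em_two_gaussians(data, [1.0, -1.0], [0.5, 0.5], fit_weights=True)
```

The two calls differ only in whether the weights are updated, which makes it easy to compare how each variant behaves from different starting points. The sketch does not by itself demonstrate the computational benefit the researchers proved.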

This result is important because it suggests a way around computational intractability that data scientists may face in their work of fitting models to data.

The aim of the proposed research project is to understand this computational benefit of over-parameterization in the context of other statistical models. The researchers have empirical evidence of this benefit for many variants of the Gaussian mixture model beyond the one to which their theorem applies. The Google funds will support PhD student Ji Xu, who is jointly advised by Daniel Hsu and Arian Maleki.