
Voices of CS: Zachary Huang

PhD student Zachary Huang shares how his research helps track data from smallholder farms to fill “data voids” in Africa

Extreme weather and climate hazards negatively affect the food production and income of smallholder farmers across the world. One of the risk reduction strategies employed is index insurance for drought, which allows smallholder farmers to reduce their risk of losing money in the event of a drought. Computing the insurance claims requires data on the drought severity and timing. The problem is that these smallholder farmers do not have the infrastructure to accurately collect data, causing a “data void”. This, in turn, makes it hard for them to receive fair and timely relief.

Over the past decade, Columbia Earth Institute’s Daniel Osgood has led the Financial Instruments Sector Team (FIST), which partners with farmers in Ethiopia, Zambia, and Senegal to develop an insurance index design process. The data was collected manually and in person, but when COVID hit, the researchers realized they needed to develop scalable and easy-to-use digital tools.

Under a recently funded NSF grant, Osgood collaborated with Assistant Professor Lydia Chilton and Associate Professor Eugene Wu to create systems that accurately collect data, tools that clean and visualize the data, and ultimately an “open insurance toolkit” that any organization or government can use. One of the major tools created for the collaboration was Reptile, an easy-to-use app developed by third-year PhD student Zachary Huang that helps clean and cross-verify farmer drought reports. Reptile utilizes the predictive power of satellite data readily available at the International Research Institute’s data library as well as data collected from the farmers themselves. Farmer drought reports are critical for the FIST team to make high-stakes data-driven decisions in index insurance design. The insurance could protect hundreds of millions of farmers in the face of climate hazards and extreme weather events.

Huang is a PhD student in Eugene Wu’s Wu Lab where researchers are addressing three bottlenecks in the future of data analysis: data cleaning, creating interactive data exploration and visualization interfaces, and understanding analysis results. Even though Huang’s background is in data analytics and database management systems, he shared he was not concerned about diving into the index insurance project. He learned about index insurance through a “very friendly” index insurance tutorial made by the FIST team. He also reviewed previous research papers and it was enough for him to work on the project. He likened himself to a blacksmith who builds tools for swordsmen. Said Huang, “I just need to know what swordsmen care about when using swords, but I do not have to be a swordmaster.”

We caught up with Huang to talk about his research, working on the Reptile app, and how his research focus has evolved.

Q: What was your role in the project? What did you do?

I mainly had two roles. My first role is as a software engineer, where I developed many useful data exploration tools to help FIST clean farmer drought reports and track the progress of data cleaning.

My second role is as a researcher. I studied the problem of data cleaning, data exploration, and data integration in-depth. I found there was a gap between what data cleaning research focused on and the problems the FIST team actually had. So I formalized the problem of cleaning farmer drought data and solved many hard technical problems while building Reptile. Our paper has been accepted by the ACM SIGMOD International Conference on Management of Data (SIGMOD 2022).

Q: What kinds of data did you have to work with and how did you manage it?

The main data I worked with is farmer drought data collected through questionnaires and a large volume of satellite data available from the International Research Institute’s data library. The data library compiles raw climate, geophysical, health, and agriculture data from numerous providers and formats it into a common framework that is publicly available.

These data from the data library are very predictive of drought severity. For example, the rainfall data are negatively correlated with the drought data. However, I was surprised by how laborious and frustrating it was to merge these data with the drought data from farmers and fully exploit their predictive power. This “data integration” problem is a hard problem even in industry.
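The merge described above can be sketched in a few lines. This is a minimal illustration, not Reptile's code: the district names, years, severity scores, and rainfall figures are made up, and keying both sources on `(district, year)` is an assumption about how the two datasets line up. The helpers `merge_on_key` and `pearson` are hypothetical names introduced here.

```python
from math import sqrt

# Hypothetical farmer reports: (district, year) -> drought severity, 0 (none) to 10 (severe).
farmer_reports = {
    ("A", 2015): 8.0,
    ("A", 2016): 3.0,
    ("B", 2015): 9.0,
    ("B", 2016): 2.0,
    ("C", 2016): 4.0,  # no satellite record for this key
}

# Hypothetical satellite rainfall in mm, keyed the same way.
rainfall_mm = {
    ("A", 2015): 210.0,
    ("A", 2016): 540.0,
    ("B", 2015): 180.0,
    ("B", 2016): 600.0,
    ("D", 2016): 400.0,  # no farmer report for this key
}


def merge_on_key(left, right):
    """Inner-join two dicts on their shared keys, returning paired values."""
    shared = sorted(left.keys() & right.keys())
    return [(left[k], right[k]) for k in shared]


def pearson(pairs):
    """Pearson correlation coefficient of a list of (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    var_y = sum((y - mean_y) ** 2 for _, y in pairs)
    return cov / sqrt(var_x * var_y)


pairs = merge_on_key(farmer_reports, rainfall_mm)
r = pearson(pairs)
print(f"{len(pairs)} matched rows, correlation = {r:.2f}")
```

Even in this toy case, rows that exist in only one source silently drop out of the merge, which hints at why aligning real questionnaire data with satellite data takes so much effort.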

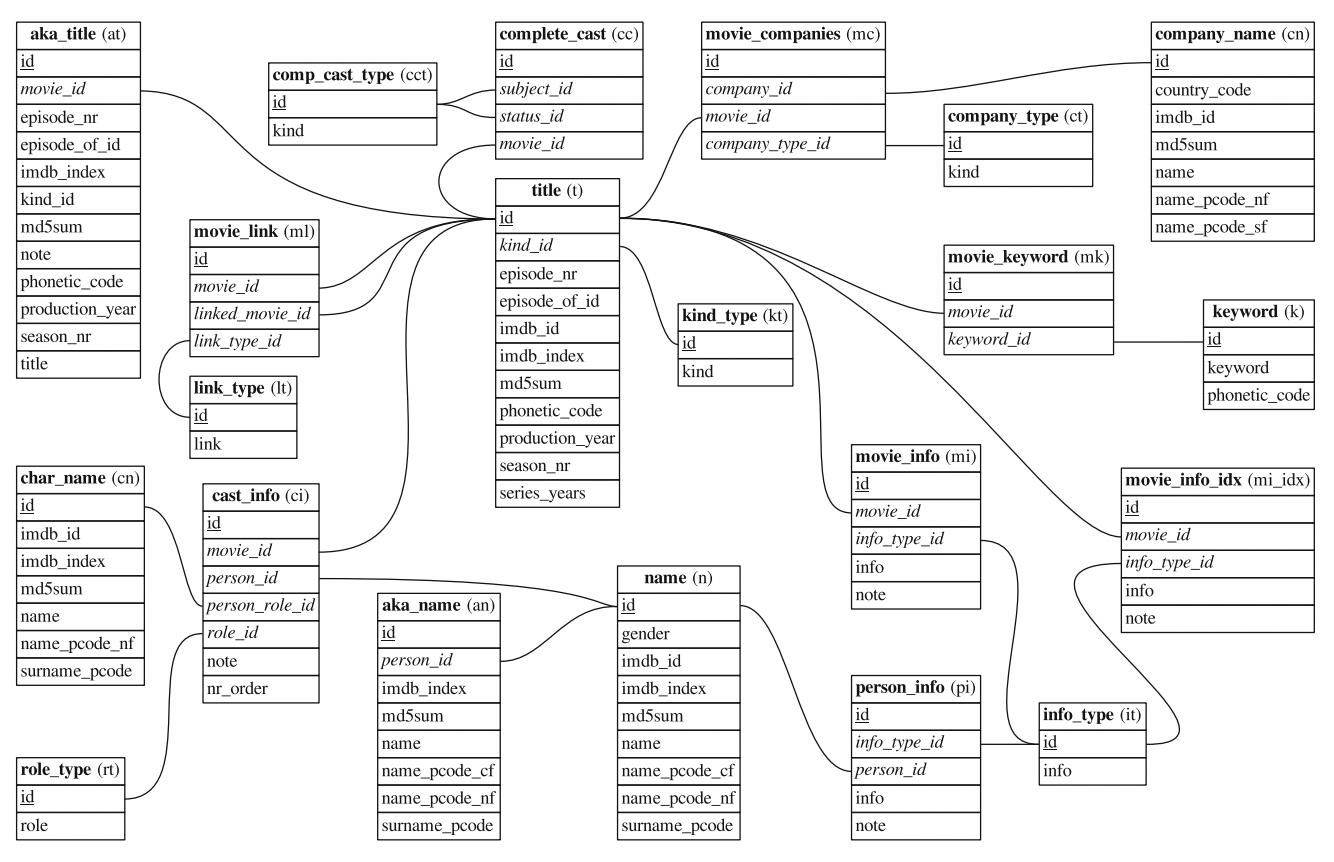

The problem of data analytics across different data sources is very common across areas and challenging. To give you a sense of the difficulty, let’s take a look at the structure of public data from IMDB, the Internet Movie Database website. The information about movies, actors, companies, etc. is distributed across so many tables. Unfortunately, traditional data analytics tools are typically designed for a single table at a time. As a result, analysts have to manually “join” these tables together, which is confusing for non-experts, slow, and generally painful. FIST is facing the same problem: there are so many valuable tables, but how to take advantage of them?

Q: What does Reptile do?

Farmer reports can often have errors from wrong data entry, misremembering historical events, and bias. It’s not realistic to examine and assess each report individually, so Reptile helps the FIST team identify abnormalities at the district or national level, cross-verify these patterns with satellite data, and quickly fix the errors. FIST and local partners then rely on these cleaned data to design the index insurance.
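A rough sketch of the district-level cross-check idea follows. It is not Reptile's algorithm: the records, the `flag_districts` helper, and the simple rule (a district reports severe drought although satellite rainfall was at or above average) are all hypothetical, chosen only to show how aggregating first narrows down which reports to inspect.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical individual reports for one year: (district, village, severity 0-10).
reports = [
    ("North", "v1", 8), ("North", "v2", 9), ("North", "v3", 7),
    ("South", "v4", 9), ("South", "v5", 8),
    ("East", "v6", 2), ("East", "v7", 1),
]

# Hypothetical satellite rainfall per district, as a fraction of the long-term average.
rainfall_ratio = {"North": 0.55, "South": 1.20, "East": 1.05}


def flag_districts(reports, rainfall_ratio, severe=7.0, wet=1.0):
    """Return districts whose mean reported severity is high although rainfall was not low."""
    by_district = defaultdict(list)
    for district, _village, severity in reports:
        by_district[district].append(severity)

    flagged = []
    for district, severities in sorted(by_district.items()):
        avg = mean(severities)
        # Assumed rule: severe reports in a wet year are suspicious.
        if avg >= severe and rainfall_ratio[district] >= wet:
            flagged.append((district, avg))
    return flagged


for district, avg in flag_districts(reports, rainfall_ratio):
    print(f"{district}: mean severity {avg:.1f} despite rainfall at or above average")
```

A flagged district is only a starting point for review. Deciding whether its reports are actually wrong still takes domain knowledge.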

Q: You mentioned that you decided to make the system simple and much easier to use. Why is that?

Initially, I built many different features per the requests from FIST. The end result was a monolithic system that tried to do everything but did nothing well. Users had to go to different systems even when the tasks were similar and overlapped heavily, which caused confusion.

About why I decided to make the system simple, let me quote Steve Jobs, “Simple can be harder than complex: You have to work hard to get your thinking clean to make it simple. But it’s worth it in the end because once you get there, you can move mountains.” I invested most of my time thinking about the project and trying to think of creative ways to solve problems. I read many papers, studied the problems in abstraction, and solved research problems. As a result, I aggressively simplified the system and made it much more useful for users.

Q: Can you talk about your background and why did you decide to pursue a PhD?

In undergrad, I had a decent mathematical background and transferred to computer science during my junior year. I initially intended to apply for some software engineering jobs. However, I learned from a friend who was a software engineer in a big tech company that his job is “an endless routine of crushing monotony”, and he also wanted to apply for a PhD to do something more intellectual.

I enjoy doing projects. But if you are hired as a junior software engineer, chances are that you are assigned some tedious tasks in the beginning and you need to slowly climb the career ladder before you can become a project lead. I very much wanted to be an entrepreneur, but I was totally not ready at that time. Doing a PhD sounded like a cool option, as it is intellectual and I can take initiative with research projects.

Q: What is your research focus?

I am interested in data analytics in cloud databases. I pursue this type of research because I believe the cloud is the future. It is the most profitable service in big tech companies like Microsoft and Amazon. The momentum keeps going with a large growth rate. I believe in the near future almost all data will be stored in the cloud, people will never want to move data outside of the cloud, and all these data analytics and machine learning tasks will be done inside the cloud.

With great power comes great responsibility. However, current cloud databases only store large volumes of data, but leave it to users to figure out how to use them. People call those data in cloud databases a “data swamp” – they just dump tons of tables but no one understands them.

Before Reptile, my research interest was to automate data cleaning. Given that there is so much data in the cloud, it is a huge waste not to use it. However, while designing Reptile, I realized that data cleaning is a human problem, not a system problem. There are so many errors that are domain-specific and cannot be automated. For instance, farmer reports could be wrong because they confused drought with a flood, mixed up planting and harvesting seasons, misremembered the year, etc. While any of these issues sounds trivial, discovering and understanding all of them requires domain knowledge, experience, and common sense. We call these types of problems “AI-complete” problems; they are the most difficult problems in AI and can’t be solved until we can make computers as intelligent as people.

After Reptile, I shifted my attention from data cleaning to algorithmic optimization of data analytics over multiple tables. I utilized theories from probabilistic graphical models to aggressively save computations and significantly accelerate the process. We have an active project that shows how this can enable practical “data markets”, which are platforms where people can trade and monetize data across and within organizations, such that people have huge incentives to clean and improve the utility of data so that they are more valuable to potential buyers.

Q: What are you working on now?

My theoretical and algorithmic work has led to many exciting applications.

For instance, I’m collaborating with the Microsoft Azure team to build an innovative in-database machine learning system. Currently, if customers want to apply machine learning to data in cloud databases, they have to move data “outside” of the database to a machine learning system, which is slow, wasteful, and not secure. We implement all the machine learning algorithms “inside” cloud databases so that customers can conduct data analytics directly in cloud databases.

Another application is to support data analytics and machine learning over hundreds of tables without the need to “join” them. For example, FIST can use this system to directly combine the predictive power of hundreds of satellite datasets in the data library with the drought reports from farmers. Our preliminary results show orders-of-magnitude performance improvements over traditional machine learning systems.
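To give a flavor of how an aggregate can be computed over several tables without building the joined table, here is a small sketch. It is a textbook aggregate-pushdown trick, not the system described above, and the table contents and function names are invented for illustration. The sum of a column over a join equals, for each join key, the sum on one side multiplied by the row count on the other.

```python
from collections import Counter, defaultdict

# Hypothetical tables sharing the join key `district`.
# reports: (district, severity); satellite: (district, sensor_reading)
reports = [("A", 8), ("A", 6), ("B", 3), ("C", 5)]
satellite = [("A", 0.2), ("A", 0.3), ("A", 0.4), ("B", 0.9), ("D", 0.5)]


def sum_severity_naive(reports, satellite):
    """Enumerate every joined row pair, then sum severity over the join result."""
    return sum(sev for d1, sev in reports for d2, _ in satellite if d1 == d2)


def sum_severity_pushed_down(reports, satellite):
    """Aggregate each table by key first, then combine the per-key summaries."""
    sev_sum = defaultdict(int)
    for district, sev in reports:
        sev_sum[district] += sev
    # Counter returns 0 for districts that have no satellite rows.
    sat_count = Counter(district for district, _ in satellite)
    return sum(total * sat_count[district] for district, total in sev_sum.items())


naive = sum_severity_naive(reports, satellite)
fast = sum_severity_pushed_down(reports, satellite)
print(naive, fast)
```

The naive version compares every pair of rows, while the pushed-down version makes one pass over each table. That gap widens quickly as more tables join in.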

Q: How long did it take you to complete the work? How was it?

Reptile took about two years to complete. It took a lot longer than I expected! I started the initial draft in the first semester of my PhD. However, figuring out the details, conducting experiments, polishing the writing, and revising based on reviewers’ feedback…there were so many things to attend to, which made the project long. Luckily, the whole process is a cumulative learning experience and inspired many research ideas for my future projects. Plus, I think I will be able to finish any future research projects much more efficiently moving forward.

Q: How did your previous experiences prepare you for a PhD?

I did research projects when I was an undergraduate – one on data cleaning and another on database storage. These projects helped me understand the research process and what the critical problems in databases are today. Ultimately, having research experience strengthened my PhD application.

Q: What are some things you wish you knew before starting your PhD?

Things take time and research projects especially take time! We all want overnight success, but success happens because we have prepared for it for a long time. It is important not to worry if your research papers are not accepted at conferences and published. There are too many factors that are out of our control. If you have good ideas and decent work, being published is just a matter of time.

Also, it is better to focus on learning. Because research projects take such a long period, it is easy for us to get lost in monotonous and repetitive routines. However, you do not improve by just working hard. Do something that is cumulative in the long term, like learning! Small things will add up and make a huge difference in the long term.

Q: What is your advice for students on how to navigate their PhD? If they want to do research what should they know?

They should definitely work on some new areas and get a competitive advantage that is unique to them. The aim of your PhD research is to innovate in a specific area and push the boundaries of human knowledge. However, if you are working in an area that has been well-studied by so many smart people for decades, chances are there is no room for further innovation. For instance, if you want to improve system performance based on your coding skills, there are so many talented people who can code, so it’s unlikely for you to beat them. Your PhD life will be much happier if you can find something that not too many people know but is quite useful, and then become an expert in it.

I invest a lot of time learning probabilistic graphical models and graph theory. These statistical techniques seem irrelevant to database systems but they solve a similar problem – how to conduct analytics over multiple tables. This competitive advantage lets me easily design algorithms that are orders of magnitude faster than previous work.

Q: What else do you think is important for PhD students to think about?

I think it is good to periodically check if your research direction is useful. Committing to a research project and spending huge amounts of time on it requires a certain kind of fooling yourself – you need to convince yourself that your research project is useful and it is worthwhile to work so hard on.

However, you need to periodically jump out of your comfort zone and verify if your research direction is really useful. It is disappointing to devote five years to a research direction only to later find out that no one cares. One way to verify the usefulness of a research topic is to find some users or collaborators, like the FIST team for my Reptile project.

You should also ask your advisors for help, as they have a much deeper understanding of the area. My advisor, Eugene Wu, helped me a lot in finding the real-world applications of my theoretical ideas. To find applications, we have connected with professors in different domains, research departments in different companies, and even venture capitalists.