Christos Papadimitriou and Mihalis Yannakakis Awarded The John von Neumann Theory Prize

Christos Papadimitriou and Mihalis Yannakakis were honored by INFORMS for their significant contributions to the field of operations research and analytics.

The paper “‘I Want to Figure Things Out’: Supporting Exploration in Navigation for People with Visual Impairments” and three other papers from the Graphics & User Interfaces group will be presented at the 26th ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW 2023).

“I Want to Figure Things Out”: Supporting Exploration in Navigation for People with Visual Impairments

Gaurav Jain Columbia University, Yuanyang Teng Columbia University, Dong Heon Cho Columbia University, Yunhao Xing Columbia University, Maryam Aziz University of Connecticut, and Brian Smith Columbia University

Navigation assistance systems (NASs) aim to help visually impaired people (VIPs) navigate unfamiliar environments. Most of today’s NASs support VIPs via turn-by-turn navigation, but a growing body of work highlights the importance of exploration as well. It is unclear, however, how NASs should be designed to help VIPs explore unfamiliar environments. In this paper, we perform a qualitative study to understand VIPs’ information needs and challenges with respect to exploring unfamiliar environments to inform the design of NASs that support exploration. Our findings reveal the types of spatial information that VIPs need as well as factors that affect VIPs’ information preferences. We also discover specific challenges that VIPs face that future NASs can address, such as orientation and mobility education and collaborating effectively with others. We present design implications for NASs that support exploration, and we identify specific research opportunities and discuss open socio-technical challenges for making such NASs possible. We conclude by reflecting on our study procedure to inform future approaches in research on ethical considerations that may be adopted while interacting with the broader VIP community.

Social Wormholes: Exploring Preferences and Opportunities for Distributed and Physically-Grounded Social Connections

Joanne Leong MIT Media Lab, Yuanyang Teng Columbia University, Xingyu Liu University of California Los Angeles, Hanseul Jun Stanford University, Sven Kratz Snap, Inc., Yu Jiang Tham Snap, Inc., Andrés Monroy-Hernández Snap, Inc. and Princeton University, Brian Smith Snap, Inc. and Columbia University, and Rajan Vaish Snap, Inc.

Ubiquitous computing encapsulates the idea of technology being interwoven into the fabric of everyday life. As computing blends into everyday physical artifacts, powerful opportunities open up for social connection. Prior connected media objects span a broad spectrum of design combinations. Such diversity suggests that people have varying needs and preferences for staying connected to one another. However, since these designs have largely been studied in isolation, we do not have a holistic understanding of how people would configure and behave within a ubiquitous social ecosystem of physically-grounded artifacts. In this paper, we create a technology probe called Social Wormholes that lets people configure their own home ecosystem of connected artifacts. Through a field study with 24 participants, we report on patterns of behaviors that emerged naturally in the context of their daily lives and shine a light on how ubiquitous computing could be leveraged for social computing.

Exploring Immersive Interpersonal Communication via AR

Kyungjun Lee University of Maryland, College Park, Hong Li Snap, Inc., Muhammad Rizky Wellytanto University of Illinois at Urbana-Champaign, Yu Jiang Tham Snap, Inc., Andrés Monroy-Hernández Snap, Inc. and Princeton University, Fannie Liu Snap, Inc. and JPMorgan Chase, Brian A. Smith Snap, Inc. and Columbia University, and Rajan Vaish Snap, Inc.

A central challenge of social computing research is to enable people to communicate expressively with each other remotely. Augmented reality has great promise for expressive communication since it enables communication beyond texts and photos and towards immersive experiences rendered in recipients’ physical environments. Little research, however, has explored AR’s potential for everyday interpersonal communication. In this work, we prototype an AR messaging system, ARwand, to understand people’s behaviors and perceptions around communicating with friends via AR messaging. We present our findings under four themes observed from a user study with 24 participants, including the types of immersive messages people choose to send to each other, which factors contribute to a sense of immersiveness, and what concerns arise over this new form of messaging. We discuss important implications of our findings on the design of future immersive communication systems.

Perspectives from Naive Participants and Social Scientists on Addressing Embodiment in a Virtual Cyberball Task

Tao Long Cornell University and Columbia University, Swati Pandita Cornell University and California Institute of Technology, Andrea Stevenson Won Cornell University

We describe the design of an immersive virtual Cyberball task that included avatar customization, and user feedback on this design. We first created a prototype of an avatar customization template and added it to a Cyberball prototype built in the Unity3D game engine. Then, we conducted in-depth user testing and feedback sessions with 15 Cyberball stakeholders: five naive participants with no prior knowledge of Cyberball and ten experienced researchers with extensive experience using the Cyberball paradigm. We report the divergent perspectives of the two groups on the following design insights: designing for intuitive use, inclusivity, and realistic experiences versus minimalism. Participant responses shed light on how system design problems may contribute to or perpetuate negative experiences when customizing avatars. They also demonstrate the value of considering multiple stakeholders’ feedback in the design process for virtual reality, presenting a more comprehensive view in designing future Cyberball prototypes and interactive systems for social science research.

Research papers from the Computer Vision Group were accepted to the International Conference on Computer Vision (ICCV ’23), the premier international computer vision conference, which includes workshops and tutorials.

ViperGPT: Visual Inference via Python Execution for Reasoning

Dídac Surís Columbia University, Sachit Menon Columbia University, Carl Vondrick Columbia University

Answering visual queries is a complex task that requires both visual processing and reasoning. End-to-end models, the dominant approach for this task, do not explicitly differentiate between the two, limiting interpretability and generalization. Learning modular programs presents a promising alternative, but has proven challenging due to the difficulty of learning both the programs and modules simultaneously. We introduce ViperGPT, a framework that leverages code-generation models to compose vision-and-language models into subroutines to produce a result for any query. ViperGPT utilizes a provided API to access the available modules, and composes them by generating Python code that is later executed. This simple approach requires no further training, and achieves state-of-the-art results across various complex visual tasks.
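
The idea of composing modules by generating and then executing Python code can be illustrated with a toy sketch. Everything here is a hypothetical stand-in and not ViperGPT's actual API: `find` plays the role of a vision module, the "image" is just a list of labeled objects, and `GENERATED_PROGRAM` is a hard-coded string standing in for what a code-generation model might return for the query "How many muffins can each kid have?".

```python
def find(image, name):
    """Toy 'vision module' (hypothetical): return objects in `image` labeled `name`."""
    return [obj for obj in image if obj["label"] == name]


# The API exposed to generated programs. Only `find` exists in this toy setup.
API = {"find": find}

# Stand-in for the output of a code-generation model. In a real system this
# string would be produced from the query and the API description.
GENERATED_PROGRAM = '''
def answer(image):
    muffins = find(image, "muffin")
    kids = find(image, "kid")
    return len(muffins) // len(kids)
'''


def run_generated(program, image):
    """Execute the generated source with the API in scope, then call `answer`."""
    namespace = dict(API)
    exec(program, namespace)  # defines `answer` inside `namespace`
    return namespace["answer"](image)


# A toy "image": four muffins and two kids.
image = [{"label": "muffin"}] * 4 + [{"label": "kid"}] * 2
result = run_generated(GENERATED_PROGRAM, image)
print(result)
```

Because the modules are called from ordinary Python, the intermediate values (`muffins`, `kids`) can be inspected directly, which is one way to read the interpretability claim in the abstract.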

Zero-1-to-3: Zero-shot One Image to 3D Object

Ruoshi Liu Columbia University, Rundi Wu Columbia University, Basile Van Hoorick Columbia University, Pavel Tokmakov Toyota Research Institute, Sergey Zakharov Toyota Research Institute, Carl Vondrick Columbia University

We introduce Zero-1-to-3, a framework for changing the camera viewpoint of an object given just a single RGB image. To perform novel view synthesis in this under-constrained setting, we capitalize on the geometric priors that large-scale diffusion models learn about natural images. Our conditional diffusion model uses a synthetic dataset to learn controls of the relative camera viewpoint, which allow new images to be generated of the same object under a specified camera transformation. Even though it is trained on a synthetic dataset, our model retains a strong zero-shot generalization ability to out-of-distribution datasets as well as in-the-wild images, including impressionist paintings. Our viewpoint-conditioned diffusion approach can further be used for the task of 3D reconstruction from a single image. Qualitative and quantitative experiments show that our method significantly outperforms state-of-the-art single-view 3D reconstruction and novel view synthesis models by leveraging Internet-scale pre-training.

Muscles in Action

Mia Chiquier Columbia University, Carl Vondrick Columbia University

Human motion is created by, and constrained by, our muscles. We take a first step at building computer vision methods that represent the internal muscle activity that causes motion. We present a new dataset, Muscles in Action (MIA), to learn to incorporate muscle activity into human motion representations. The dataset consists of 12.5 hours of synchronized video and surface electromyography (sEMG) data of 10 subjects performing various exercises. Using this dataset, we learn a bidirectional representation that predicts muscle activation from video, and conversely, reconstructs motion from muscle activation. We evaluate our model on in-distribution subjects and exercises, as well as on out-of-distribution subjects and exercises. We demonstrate how advances in modeling both modalities jointly can serve as conditioning for muscularly consistent motion generation. Putting muscles into computer vision systems will enable richer models of virtual humans, with applications in sports, fitness, and AR/VR.

SurfsUp: Learning Fluid Simulation for Novel Surfaces

Arjun Mani Columbia University, Ishaan Preetam Chandratreya Columbia University, Elliot Creager University of Toronto, Carl Vondrick Columbia University, Richard Zemel Columbia University

Modeling the mechanics of fluid in complex scenes is vital to applications in design, graphics, and robotics. Learning-based methods provide fast and differentiable fluid simulators; however, most prior work is unable to accurately model how fluids interact with genuinely novel surfaces not seen during training. We introduce SURFSUP, a framework that represents objects implicitly using signed distance functions (SDFs), rather than an explicit representation of meshes or particles. This continuous representation of geometry enables more accurate simulation of fluid-object interactions over long time periods while simultaneously making computation more efficient. Moreover, SURFSUP trained on simple shape primitives generalizes considerably out-of-distribution, even to complex real-world scenes and objects. Finally, we show we can invert our model to design simple objects to manipulate fluid flow.
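
For readers unfamiliar with signed distance functions, the following minimal sketch shows what such a representation looks like. It is not the SURFSUP model, only an illustration of the SDF concept under a common sign convention (negative inside the object, zero on the surface, positive outside). The function names and the two-sphere scene are illustrative assumptions.

```python
import math


def sphere_sdf(point, center, radius):
    """Signed distance from `point` to a sphere's surface.

    Negative inside the sphere, zero on the surface, positive outside.
    """
    return math.dist(point, center) - radius


def union_sdf(*distances):
    """SDF value of the union of several shapes: the smallest distance wins."""
    return min(distances)


def scene_sdf(point):
    """Hypothetical scene made of two spheres."""
    return union_sdf(
        sphere_sdf(point, (0.0, 0.0, 0.0), 1.0),
        sphere_sdf(point, (3.0, 0.0, 0.0), 0.5),
    )


# A fluid particle can query the geometry at any continuous location.
inside = scene_sdf((0.0, 0.0, 0.0))   # at the center of the first sphere
outside = scene_sdf((2.0, 0.0, 0.0))  # in the gap between the two spheres
print(inside, outside)
```

Because the function is defined at every point in space, a simulator can ask how far a particle is from the nearest surface without referring to a mesh.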

Landscape Learning for Neural Network Inversion

Ruoshi Liu Columbia University, Chengzhi Mao Columbia University, Purva Tendulkar Columbia University, Hao Wang Rutgers University, Carl Vondrick Columbia University

Many machine learning methods operate by inverting a neural network at inference time, which has become a popular technique for solving inverse problems in computer vision, robotics, and graphics. However, these methods often involve gradient descent through a highly non-convex loss landscape, causing the optimization process to be unstable and slow. We introduce a method that learns a loss landscape where gradient descent is efficient, bringing massive improvement and acceleration to the inversion process. We demonstrate this advantage on a number of methods for both generative and discriminative tasks, including GAN inversion, adversarial defense, and 3D human pose reconstruction.
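
To make "inverting a neural network at inference time" concrete, here is a minimal sketch of the baseline procedure the abstract describes: gradient descent on the input of a fixed network until its output matches a target. It does not implement the paper's learned loss landscape. The one-parameter "network" `forward` and all constants are illustrative assumptions.

```python
import math


def forward(z, w=2.0, b=0.5):
    """Toy stand-in for a fixed, pretrained network: y = tanh(w * z + b)."""
    return math.tanh(w * z + b)


def invert(target, z0=0.0, steps=500, lr=0.1, w=2.0, b=0.5):
    """Recover an input z whose output matches `target` by gradient descent.

    Minimizes the squared error (forward(z) - target)^2 with respect to z.
    """
    z = z0
    for _ in range(steps):
        y = forward(z, w, b)
        # Chain rule: d/dz (y - target)^2 = 2 * (y - target) * (1 - y^2) * w
        grad = 2.0 * (y - target) * (1.0 - y * y) * w
        z -= lr * grad
    return z


target = forward(0.3)       # output produced by a "hidden" input of 0.3
recovered = invert(target)  # start from z0 = 0.0 and descend
print(recovered)
```

In this one-dimensional toy the loss has a single minimum, so plain gradient descent converges easily. The difficulty the abstract refers to arises in real networks, where the loss over the input is highly non-convex.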

The paper “An Empirical Study of API Stability and Adoption in the Android Ecosystem” was recognized as the Most Impactful Paper among the papers published at ICSME ’13.

ARNI Director Zemel goes to Washington to explain to Congress how Columbia’s new AI institute will connect major progress made in AI systems to our understanding of the brain.

The paper “Predictable Programming on a Precision Timed Architecture” from the CASES 2008 Conference was honored with a Test of Time Award.

Alum Raghav Poddar created Superorder, a tool for businesses to set up their restaurant’s online presence, create digital storefronts, and receive more online sales.

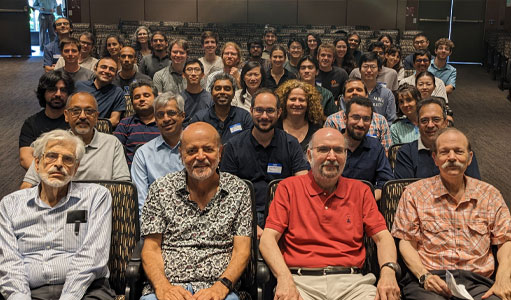

The Theory Group recently hosted a three-day workshop in honor of Professor Mihalis Yannakakis’ 70th birthday.

The workshop, dubbed Mihalis Fest, invited 18 computer science researchers and professors who gave talks about the various research areas that Yannakakis’ work has strongly influenced. Among the speakers were Professor Toniann Pitassi and Turing Award winner Jeffrey Ullman, who was Yannakakis’ PhD advisor at Princeton University.

“Mihalis is universally recognized as one of the true giants of our field. He’s made major contributions all over the intellectual map of theoretical computer science, in too many areas to list. He’s also a much-beloved figure in the research community, whose wisdom and kindness have impacted countless colleagues and students,” said Professor Rocco Servedio. “The CS department was delighted to host a celebratory workshop in honor of his milestone birthday!”

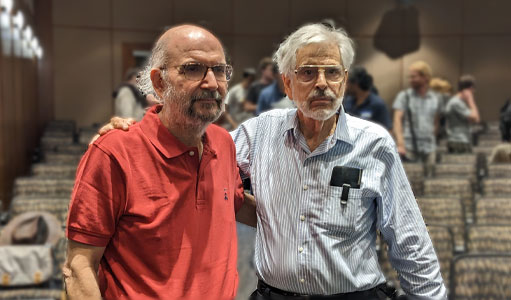

Professor Christos Papadimitriou closed out the workshop and shared how he and Yannakakis first met while PhD students at Princeton. Said Papadimitriou, “I introduced computer science theory to Mihalis. I should’ve retired after that accomplishment.”

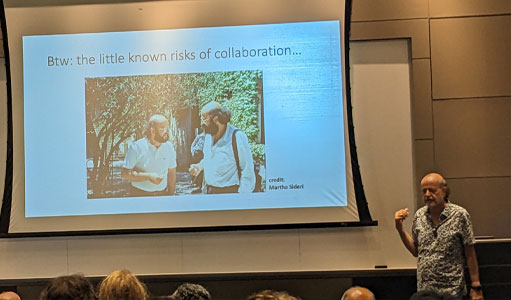

Papadimitriou and Yannakakis have collaborated on many papers over the years, and their 1988 paper, “Optimization, Approximation, and Complexity Classes,” introduced a whole range of new complexity classes and notions of approximation that continue to be studied to this day. They are also good friends, and colleagues have noted that the two hardly need to talk but understand each other instantly. “At one point, we started to look alike, too,” joked Papadimitriou.

Many presenters, plus former and current PhD students, shared personal stories of their time working with Yannakakis. Their tributes showed a common theme: how Yannakakis is a brilliant computer scientist who also knows how to support and nurture those around him.

President Bollinger announced that Columbia University along with many other academic institutions (sixteen, including all Ivy League universities) filed an amicus brief in the U.S. District Court for the Eastern District of New York challenging the Executive Order regarding immigrants from seven designated countries and refugees. Among other things, the brief asserts that “safety and security concerns can be addressed in a manner that is consistent with the values America has always stood for, including the free flow of ideas and people across borders and the welcoming of immigrants to our universities.”

This recent action provides a moment for us to collectively reflect on our community within Columbia Engineering and the importance of our commitment to maintaining an open and welcoming community for all students, faculty, researchers and administrative staff. As a School of Engineering and Applied Science, we are fortunate to attract students and faculty from diverse backgrounds, from across the country, and from around the world. It is a great benefit to be able to gather engineers and scientists of so many different perspectives and talents – all with a commitment to learning, a focus on pushing the frontiers of knowledge and discovery, and with a passion for translating our work to impact humanity.

I am proud of our community, and wish to take this opportunity to reinforce our collective commitment to maintaining an open and collegial environment. We are fortunate to have the privilege to learn from one another, and to study, work, and live together in such a dynamic and vibrant place as Columbia.

Sincerely,

Mary C. Boyce

Dean of Engineering

Morris A. and Alma Schapiro Professor