Distinguished Lecture Series 2023

The Distinguished Lecture series brings computer scientists to Columbia to discuss current issues and research that are affecting their particular research fields.

Cognitive Workforce Revolution with Trustworthy and Self-Learning Generative AI

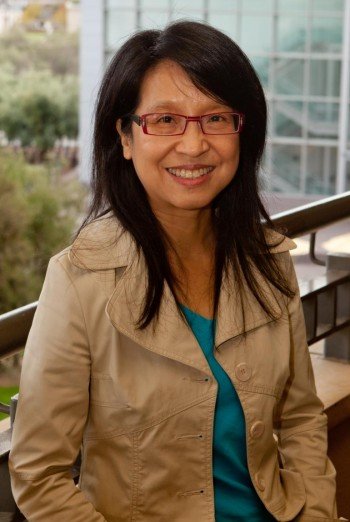

Monica Lam, Stanford University

CS Auditorium (CSB 451)

November 15, 2023

11:40 AM to 12:40 PM

Generative AI, and in particular Large Language Models (LLMs), have already changed how we work and study. To truly transform the cognitive workforce, however, LLMs need to be trustworthy so they can operate autonomously without human oversight. Unfortunately, language models are not grounded and have a tendency to hallucinate.

Our research hypothesis is that we can turn LLMs into useful workers across different domains if we (1) teach them how to acquire and apply knowledge in external corpora such as written documents, knowledge bases, and APIs; and (2) have them self-learn through model distillation of simulated conversations. We showed that by supplying different external corpora to our Genie assistant framework, we can readily create trustworthy agents that can converse about topics in open domains from Wikidata, Wikipedia, or StackExchange; help navigate services and products such as restaurants or online stores; persuade users to donate to charities; and improve the social skills of people with autism spectrum disorder.

Watch the Video of the Lecture

Causal Representation Learning and Optimal Intervention Design

Caroline Uhler, MIT

CS Auditorium (CSB 451)

November 8, 2023

11:40 AM to 12:40 PM

Massive data collection holds the promise of a better understanding of complex phenomena and, ultimately, of better decisions. Representation learning has become a key driver of deep learning applications since it allows learning latent spaces that capture important properties of the data without requiring any supervised annotations. While representation learning has been hugely successful in predictive tasks, it can fail miserably in causal tasks, including predicting the effect of an intervention. This calls for a marriage between representation learning and causal inference. An exciting opportunity in this regard stems from the growing availability of interventional data (in medicine, advertisement, education, etc.). However, these datasets are still minuscule compared to the action spaces of interest in these applications (e.g. interventions can take on continuous values like the dose of a drug or can be combinatorial as in combinatorial drug therapies). In this talk, we will present initial ideas towards building a statistical and computational framework for causal representation learning and discuss its applications to optimal intervention design in the context of drug design and single-cell biology.

Watch the Video of the Lecture

SmartBook: an AI Prophetess for Disaster Reporting and Forecasting

Heng Ji, University of Illinois at Urbana-Champaign

CS Auditorium (CSB 451)

November 1, 2023

11:40 AM to 12:40 PM

Abstract:

We propose SmartBook, a novel framework for a task that ChatGPT cannot solve: situation report generation. SmartBook consumes large volumes of news data to produce a structured situation report in which multiple hypotheses (claims) are summarized and grounded with rich links to factual evidence through claim detection, fact checking, misinformation detection, and factual error correction. Furthermore, SmartBook can also serve as a novel news event simulator, or an intelligent prophetess. Given “What-if” conditions and dimensions elicited from a domain expert user concerning a disaster scenario, SmartBook will induce schemas from historical events and automatically generate a complex event graph along with a timeline of news articles that describe new simulated events, based on a new Λ-shaped attention mask that can generate text with infinite length. By effectively simulating disaster scenarios in both event graph and natural language format, we expect SmartBook will greatly help humanitarian workers and policymakers exercise reality checks (what would the next disaster look like under these given conditions?), and thus better prevent and respond to future disasters.

Watch the Video of the Lecture

Enabling the Era of Immersive Computing

Sarita Adve, University of Illinois at Urbana-Champaign

CS Auditorium (CSB 451)

October 25, 2023

11:40 AM to 12:40 PM

Computing is on the brink of a new immersive era. Recent innovations in virtual/augmented/mixed reality (extended reality or XR) show the potential for a new immersive modality of computing that will transform most human activities and change how we design, program, and use computers. There is, however, an orders-of-magnitude gap between the power/performance/quality-of-experience attributes of current and desirable immersive systems. Bridging this gap requires an interdisciplinary research agenda that spans end-user devices, edge, and cloud, is based on hardware-software-algorithm co-design, and is driven by end-to-end human-perceived quality of experience.

The ILLIXR (Illinois Extended Reality) project has developed an open source end-to-end XR system to enable such a research agenda. ILLIXR is being used in academia and industry to quantify the research challenges for desirable immersive experiences and provide solutions to address these challenges. To further push the interdisciplinary frontier for immersive computing, we recently established the IMMERSE center at Illinois to bring together research, education, and infrastructure activities in immersive technologies, applications, and human experience. This talk will give an overview of IMMERSE and a deeper dive into the ILLIXR project, including the ILLIXR infrastructure, its use to identify XR systems research challenges, and cross-system solutions to address several of these challenges.

Watch the Video of the Lecture

Protecting Human Users from Misused AI

Ben Zhao, University of Chicago

CS Auditorium (CSB 451)

October 9, 2023

11:40 AM to 12:40 PM

Abstract:

Recent developments in machine learning and artificial intelligence have taken nearly everyone by surprise. The arrival of arguably the most transformative wave of AI did not bring us smart cities full of self-driving cars, or robots that do our laundry and mow our lawns. Instead, it brought us over-confident token predictors that hallucinate, deepfake generators that produce realistic images and video, and ubiquitous surveillance. In this talk, I’ll describe some of our recent efforts to warn about, and later defend against, some of the darker sides of AI.

In particular, I will tell the story of how our efforts to disrupt unauthorized facial recognition models led unexpectedly to Glaze, a tool to defend human artists against art mimicry by generative image models. I will share some of the ups and downs of implementing and deploying an adversarial ML tool to a global user base, and reflect on mistakes and lessons learned.

Watch the Video of the Lecture