Katy Gero Wins Best Paper Award at CHI 2020

Katy Gero, a third-year PhD student, has won a Best Paper Award at the ACM Conference on Human Factors in Computing Systems (CHI 2020).

Mental Models of AI Agents in a Cooperative Game Setting

Katy Ilonka Gero (Columbia University), Zahra Ashktorab (IBM Research AI), Casey Dugan (IBM Research AI), Qian Pan (IBM Research AI), James Johnson (IBM Research AI), Werner Geyer (IBM Research AI), Maria Ruiz (IBM Watson), Sarah Miller (IBM Watson), David R Millen (IBM Watson), Murray Campbell (IBM Research AI), Sadhana Kumaravel (IBM Research AI), Wei Zhang (IBM Research AI)

As more forms of artificial intelligence (AI) become prevalent, it becomes increasingly important to understand how people develop mental models of these systems. To find out, the researchers studied people’s mental models of an AI agent in a cooperative word-guessing game.

A mental model is how a person “thinks” a system works. For instance, someone’s mental model of driving a car might be that when they push on the gas pedal, more gas is pumped into the engine, which makes the car go faster. That may not be how a car “actually” works; it’s just one view a person who is not a car expert may have. (In reality, the gas pedal lets more air into the engine, and a series of sensors then adjusts the amount of gas to match the amount of air.)

“Mental models are known to be incomplete, unstable, and often wrong,” said Katy Gero, a third-year PhD student and the study’s lead author. “Though that doesn’t mean they’re not useful!”

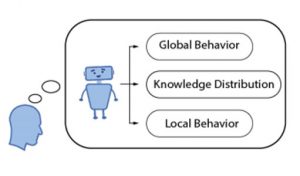

To study mental models of AI agents, the researchers had participants play Passcode, a word-guessing game, with an AI agent. The AI agent had a secret word, like “tomato”, and would give one-word hints, like “salad” or “red”, to get the participant to guess the secret word. The researchers learned that people’s mental models had three components:

– Global Behavior: How does the AI agent behave over time?

– Local Behavior: How does the AI agent decide on a single action?

– Knowledge Distribution: How much does the AI agent know about different parts of the world?

Participants often misunderstood local behavior. Sometimes the AI agent gave a hint that a participant didn’t understand. For instance, it sometimes gave the hint “hectic” when the participant was meant to guess the word “calm”. A participant might think that the hint didn’t make any sense, as if the AI agent had made a mistake. In reality, the AI agent was giving an antonym hint. This is an example of an incorrect mental model that a person corrected over the course of several games.

The researchers conducted two studies: (1) a think-aloud study in which people played the game with an AI agent, followed by a thematic analysis that identified features of the mental models participants developed; and (2) a large-scale study in which participants played the game with the AI agent online and completed a post-game survey that probed their mental models.

In the first study, thematic analysis was used to develop a set of codes that describe the types of concerns participants had when playing with the AI agent. The most prevalent codes show what people think about most when playing with the AI agent: they worry about why it did something unexpected, they try to find patterns in its behavior, and they wonder what kinds of knowledge it has.

In the second study, the researchers found that the people who best understood how the AI agent behaved also played the game best. However, as is expected with complex systems, no one had a perfect understanding of how the AI agent worked.

This work provides a new framework for modeling AI systems from a user-centered perspective: models should detail the global behavior, knowledge distribution, and local behavior of the AI agent. These studies also have implications for the design of AI systems that attempt to explain themselves to the user, especially AI agents that want to explain why they behave in certain ways.