Query logs put to new uses in data analysis: democratizing interfaces and tracking sources of errors

![]()

![]()

Researchers led by Eugene Wu are presenting two papers next week in Chicago at SIGMOD, a leading international forum for database research. “Precision Interfaces” proposes a semi-automatic method for generating customized interfaces for real user tasks by analyzing logs of programs that are actually used for analysis. “QFix: Diagnosing Errors through Query Histories” is a data cleaning method that determines how errors entered a database, making it possible to diagnosis the reasons for the errors, uncover other possible errors, and help prevent their reoccurrence. Though very different applications, both papers rely on a unique and underutilized source of information: program and query logs.

An interface for every task

Data analysis is critical today to an increasing number of tasks. Modern data exploration and analysis tools (think visualization systems like Tableau, or form-based querying) make data analysis easier by providing interactive interfaces complete with buttons, sliders, checkboxes, and other widgets, rather than typing programs manually. Existing interfaces are designed for general tasks performed by many, but they often ignore the long tail of tasks and analyses that are less common but important for small number of people; for these long tail tasks, users either learn how to build interfaces for themselves, rely on existing tools ill-suited for the task, or make do without.

“Precision Interfaces,” which is being presented at the Human In the Loop Data Analysis (HILDA) workshop, is intended to address this long tail by automatically generating data analysis interfaces that are “precise” to user tasks. The aim, according to Eugene Wu and his coauthors Haoci Zhang and Thibault Sellam, is to make data analysis accessible to more users so they can do exactly the data operations they want, and on the data they want.

The question of course is figuring out what people want to do when they themselves may have trouble articulating what they want. Operations on data constitute a potentially huge space, but query and program logs serve to constrain the possibilities by capturing what subsets of data that users are most interested in, what tasks they perform most often, and the sequence of those tasks.

In most cases, these logs show that people use data in fairly predictable and limited ways. Says Wu, “If you look at what people are doing it is not totally fancy new things. Any given task is usually associated with a small set of operations. The hard part identifying these task by perusing the history of what everyone has done, and providing interface that matches these tasks.”

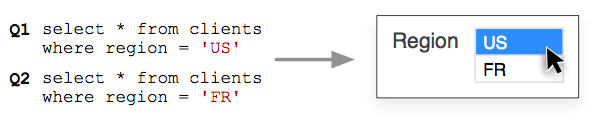

Whether users are entering a range of numeric values (where a slider is appropriate), or simply toggling an option (when a checkbox suffices), can be gleaned from analyzing the program logs. By modeling each program (an SQL query or Python code that performs a task) as a node in an interaction graph, and drawing edges between nodes that differ in simple structural changes, researchers can detect similar changes and generate interfaces to express them.

Aesthetics and ease of use are considerations also. Says Sellam, “An interface with only a text box (a console) is visually simple but requires users to basically program. Oppositely, an interface with one button for each program is too complicated for any non-trivial program log. How easily users can interact with the widgets matters too. Ultimately, the optimal design depends on the use case.”

Information in program logs helps constrain otherwise ill-defined, open ended problems. A similar observation is applied by Wu and coauthors Xiaolan Wang and Assistant Professor Alexandra Meliou (University of Massachusetts, Amherst) in analyzing database query logs to identify and repair errors in databases and data-driven applications.

Tracking the source of data errors

Data errors pose a huge cost to business and other entities, especially local governments. Transposing two digits in a numeric value—an income level, a gas meter reading, a phone number—can set in motion a slew of problems as customers or clients get taxed or billed for the wrong amount. One error—an incorrect tax rate, for example—begets others as later queries retrieve the erroneous rate to calculate taxes for perhaps thousands of people, thus propagating the original error throughout records in the database while obscuring the source of the original error.

The usual fix—manually intervening to change individual errors after (and if) they are discovered—is ad hoc and superficial, and it fails to address the root cause.

QFix is designed to understand how an error entered and impacted the database so it can suggest repairs (e.g., fix the incorrect tax rate). Once a problem is reported, QFix examines the query log to analyze past queries that modified the database and then isolates the query that most likely introduced the initial error. The naive approach would be roll back to a given query, change it, and re-run the rest of the queries and see if it fixed the errors. This approach will not likely to finish within a lifetime for any realistic database.

The researchers’ approach is to think of each query as a set of linear equations that compute the database’s new values from the current values. By turning the queries in the query log into a large set of equations, they can be solved using modern linear constraint solvers. Says Wu, “If your database is a single value, say 10, and you know the incorrect linear equation (query) changed its value to 100 instead of 200, then we know how the linear equation (query) should be changed to fix the error.” QFix simply does this for millions of intertwined equations.

To generate the repairs quickly, the paper describes a set of optimizations to filter out queries, database records, and attributes that are unrelated to the data errors. This lets QFix identify erroneous queries in less than a second on standard industry database benchmark applications. QFix then suggests these queries and possible repairs to an administrator, who repair those queries and propagate the repairs to erase downstream errors—errors that might otherwise go unnoticed.

In using the information contained in query logs, QFix offers a fundamentally different method of data cleaning than anomaly/outlier detection, dimensionality reduction, and other traditional methods.

Both QFix and Precision Interfaces demonstrate how program and query logs are a rich resource for critical data science applications such as data cleaning, creating customized interfaces, and understanding in general what users want and need in their data analysis applications.

Posted 5/11/2017