Leveraging cue selection in RGBD images for more accurate and faster segmentation

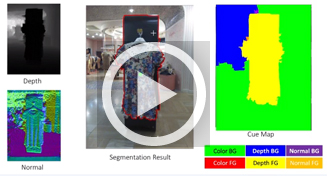

Researchers at Columbia University and Adobe Research have developed a new interactive image segmentation method that leverages both color and depth information in RGBD images to separate target objects from the background more cleanly and quickly. The method, described in "Interactive Segmentation on RGBD Images via Cue Selection," a paper to be presented later this month at the Computer Vision and Pattern Recognition conference (June 26 to July 1), expands on previous RGB and RGBD segmentation methods in two ways: it derives normal vectors from depth information to serve as an additional cue, and it uses a single cue for each pixel, rather than a combination of all cues (color, depth, normal), to distinguish foreground objects from the background. The insight is that a single cue usually carries most of the discriminative power within a local region, a power that may be diluted when that cue is combined with less predictive ones. The resulting cue map offers a new perspective on how each cue is used to produce the segmentation. The approach is supported by experiments showing that the single-cue method outperforms multiple-cue methods for both RGB and RGBD images, requiring fewer user inputs to achieve higher accuracy.

Separating objects from the background has many applications in image and video processing as well as image compression. It is also a well-known feature of popular consumer image editing programs like Photoshop, which offer a quick selection tool (magic wand) so users can indicate with clicks or brush strokes an object to isolate from the rest of the image. Image segmentation works generally by evaluating similarity or dissimilarity of neighboring pixels, usually on the basis of color, texture, and other such cues, and then establishing a border that encompasses similar pixels and excludes dissimilar ones.

Color or intensity, however, is often not enough to distinguish an object from the background. Foreground and background colors can overlap, colors can be affected by illumination, and other objects may obscure an object’s borders. As a result, users often have to spend a lot of time finessing the selection, zooming in to select first one pixel and then another until the object is fully separated.

Another, more promising cue is becoming available to make image segmentation more accurate. Depth information is increasingly being incorporated into images (the RGBD format) courtesy of the Microsoft Kinect and similar depth-sensing devices; some new smartphones already include cameras that likewise perceive depth. Because depth measures the distance of each pixel from the camera and is not affected by illumination changes, it is often more accurate and reliable than color or other currently used cues at distinguishing foreground objects from the background.

A few segmentation methods are starting to take advantage of depth, using it as another channel of information that can be combined with color, texture, and other cues to more accurately isolate selected objects.

Rather than simply viewing depth as an additional cue to be linearly mixed with others, researchers from Columbia and Adobe looked more carefully at how depth information might be used in image segmentation, and also reused. Besides using depth information to establish the relative distances of pixels from the background, the researchers went one step further and computed normal vectors from it. Normal vectors describe the orientation of an object’s surface, making it possible to distinguish an object’s geometry and thus better draw its edges.

Depth information, thus used twice, can distinguish two objects even when they are at the same depth.
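To illustrate how normals can be derived from depth, here is a minimal sketch rather than the paper's actual procedure. It assumes unit pixel spacing, ignores camera intrinsics, and uses simple finite differences; the function name `normals_from_depth` is hypothetical.

```python
import numpy as np

def normals_from_depth(depth):
    """Estimate a unit surface normal per pixel from a depth map.

    Assumptions (not from the paper): unit pixel spacing, no camera
    intrinsics, and finite-difference gradients.
    """
    depth = np.asarray(depth, dtype=float)
    # Depth gradients along image rows (y) and columns (x).
    dz_dy, dz_dx = np.gradient(depth)
    # A surface z = f(x, y) has the unnormalized normal (-dz/dx, -dz/dy, 1).
    normals = np.dstack((-dz_dx, -dz_dy, np.ones_like(depth)))
    # Scale every normal to unit length.
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    return normals

# A flat wall and a tilted ramp that share the same depth at column 2.
wall = np.full((4, 4), 2.0)
ramp = np.tile(np.arange(4.0), (4, 1))
print(normals_from_depth(wall)[0, 2])  # normal of the wall at that pixel
print(normals_from_depth(ramp)[0, 2])  # normal of the ramp at that pixel
```

The two surfaces have the same depth value at the printed pixel, but their normals point in different directions, which is the kind of difference a normal cue can pick up when a depth cue alone cannot.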

Nor is depth, or any other cue, treated as simply one element of a combination. In experimenting with how best to combine depth and normal information with other cues, the researchers found that a single cue works better than a linear combination of many cues. “Our insight was that among neighboring pixels, one cue is usually enough to explain why one pixel is foreground or why it is background,” says Jie Feng, lead author of the paper. “Combining many cues could dilute the predictive power of a strong signal within the local region.” A single cue has the added benefit of providing explanatory power, something lacking in a combination of multiple cues.

The single cue might be depth or it might be another cue; it all depends on what the user wants. Each time a user selects a pixel, the algorithm dynamically adapts a cue map, evaluating each pixel to determine the most predictive cue for that pixel. (The optimization uses a modified Markov random field formulation.)

Different cues take effect in different areas of an image.

Top row: Clicking the dress once picks depth as best cue, isolating the entire dress.

Bottom row: Clicking the white part of the dress separates the dress into two parts with color as cue.
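The idea of choosing one cue per pixel, and the way averaging can dilute a strong cue, can be sketched as follows. This is a simplified, greedy stand-in and not the paper's Markov random field optimization: it assumes each cue has already produced a foreground probability for every pixel, and it simply picks the cue whose probability is farthest from 0.5. The names `select_cues` and `average_cues` and all the numbers are hypothetical.

```python
import numpy as np

CUES = ("color", "depth", "normal")

def select_cues(fg_prob):
    """Pick one cue per pixel and label the pixel with that cue alone.

    fg_prob: array of shape (n_cues, H, W) holding each cue's estimated
    foreground probability (assumed given; not computed here).
    Returns (cue_map, mask): the chosen cue index and foreground label.
    """
    fg_prob = np.asarray(fg_prob, dtype=float)
    # Treat distance from 0.5 as a crude measure of how decisive a cue is.
    confidence = np.abs(fg_prob - 0.5)
    cue_map = confidence.argmax(axis=0)
    # Read out the probability of the selected cue at each pixel.
    chosen = np.take_along_axis(fg_prob, cue_map[None], axis=0)[0]
    return cue_map, chosen > 0.5

def average_cues(fg_prob):
    """Baseline: equal-weight linear combination of all cues."""
    return np.asarray(fg_prob, dtype=float).mean(axis=0) > 0.5

# Two pixels; rows are the color, depth, and normal cues.
probs = np.array([[[0.30, 0.90]],
                  [[0.90, 0.60]],
                  [[0.25, 0.55]]])
cue_map, mask = select_cues(probs)
print([CUES[i] for i in cue_map.ravel()], mask.ravel())
print(average_cues(probs).ravel())
```

In the first pixel, depth is strongly confident that the pixel is foreground, but two weakly dissenting cues pull the average below 0.5. Selecting depth alone keeps the strong signal and also records which cue explained the decision.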

In extensive testing, the single-cue method, compared to other segmentation methods, required far fewer clicks to achieve the same accuracy, a result that holds for both RGB and RGBD images. Test results are demonstrated in the following video.

The general concept of using a single strong cue has application beyond segmenting images and may enhance performance of other tasks such as semantic or video segmentation, which also involve multiple features for single pixels. The researchers are also looking at extending their method to automatic image segmentation.

Posted: 6/15/2016

– Linda Crane