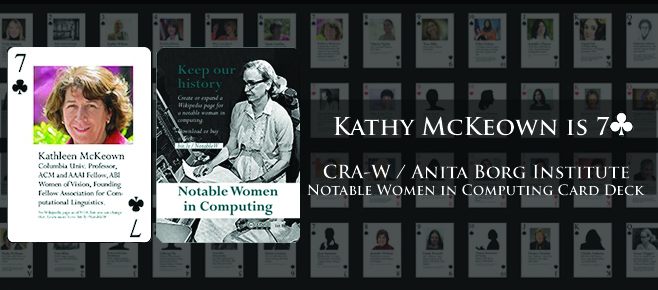

A deck of playing cards honors 54 notable women in computing. Produced by Duke University and Everwise (in conjunction with CRA-W and the Anita Borg Institute Wikipedia Project), the first decks were distributed at this year’s Grace Hopper conference. (A Kickstarter campaign is raising money for a second printing.)

One of the women to be honored with her own card is Kathy McKeown, a computer scientist working in the field of natural language processing. The first female full-time professor in Columbia’s school of engineering, she was also the first woman to serve as department chair. Currently the Henry and Gertrude Rothschild Professor of Computer Science, she is also the inaugural director of Columbia’s multidisciplinary Data Science Institute. And now she is the seven of clubs.

Question: Congratulations on being included among the Notable Women of Science card deck. What does it feel like to see your picture on the seven of clubs?

Kathy McKeown: It is really exciting to be part of such a distinguished group of computer scientists.

You joined Columbia’s engineering school in 1982 and were for a time its only full-time female professor. Having seen other women follow soon after, what do you think is most helpful for women who want to advance in computer science?

KM: Just having other women around makes a big difference. Women can give one another advice and together support issues that women in particular care about. Having a woman in a senior position is especially helpful. When I was department chair, several undergraduate women approached me about starting a Women in Computer Science group. As a woman, I understood the need for such a group, and in my position it was easier for me to make the case.

Of course, getting women into engineering and computer science requires making sure girls remain interested in these subjects as they approach college, and I think one way to do that is to show them how stimulating and gratifying it can be to design and build something that helps others.

Speaking of interesting work that helps others, you recently received an NSF grant for “Describing Disasters and the Ensuing Personal Toll.” What work will the grant fund?

KM: The goal is to build a system that automatically generates a comprehensive description of a disaster, one that combines objective, factual information (the specific sequence of events, what happened when) with compelling, emotional first-person accounts from people affected by the disaster. We will use natural language processing techniques to find the relevant articles and stories and then weave them together into a single resource that people can query for the information they need, both as the disaster unfolds and months or years later.

It might be emergency responders or relief workers who need to know where to direct their efforts first; it might be journalists reporting current facts or researching similar past disasters. It might be urban planners comparing how two similar neighborhoods fared in the disaster, perhaps discovering why one escaped heavy damage while the other did not. Or it might be someone who lived through the event coming back years later to remember what it was like.

It’s not a huge grant (it’s enough for me and two graduate students for three years), but it’s a very appealing one, and it allows undergraduates to work with us and do research. We already have seven students working on it: the two funded graduate students and five undergraduates.

It makes sense that journalists and emergency responders would want an objective account of a disaster or event. Why include personal stories?

KM: Because it’s the personal stories that let others fully understand what is going on. Numbers tell one side of the story, but emotions tell another. During Katrina, there were reports of people marooned in the Superdome in horrendous conditions, reports that authorities initially dismissed. It wasn’t until reporters went there, interviewed people, and posted their stories with pictures and videos that the rest of us could see the true plight and desperation of these people and realize that it could have been us. After that it wasn’t possible to ignore what was happening, and rescue efforts accelerated.

What was the inspiration?

KM: For us it was Hurricane Sandy, since many of us were here in New York or nearby when Sandy struck. One student, whose family on the Jersey Shore was hard-hit, was particularly motivated to look at what could be done to help people.

But more importantly, Sandy as an event has interesting characteristics. It was large-scale, it played out over multiple days and multiple areas, and it triggered other events. Describing an event of this magnitude is hard and poses an interesting problem. The project is meant to cover any type of disaster where the impact is long-lasting and generates sub-events: earthquakes, terror attacks, floods, mass shootings.

How will it work?

KM: The underlying system will tap into streaming social media and news to collect information, using natural language processing and artificial intelligence techniques to find the articles and stories pertinent to a specific disaster and the sub-events it spawns. Each type of disaster is associated with a distinct vocabulary, and we’ll build language models to capture this information.
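To make the idea of a per-disaster vocabulary concrete, here is a minimal sketch, not the project’s actual models: one add-one-smoothed unigram language model per disaster type, each trained on a few invented sentences, with a new text assigned to whichever model gives it the highest log-likelihood. The training sentences and the function names `train`, `log_likelihood`, and `classify` are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and keep alphabetic tokens only (digits and punctuation are dropped).
    return re.findall(r"[a-z]+", text.lower())

def train(docs):
    # A unigram model here is just token counts over all training sentences.
    return Counter(tok for doc in docs for tok in tokenize(doc))

def log_likelihood(counts, tokens, vocab_size):
    # Add-one (Laplace) smoothing so unseen words get a small nonzero probability.
    total = sum(counts.values())
    return sum(math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens)

def classify(text, models):
    tokens = tokenize(text)
    # Shared vocabulary: every word seen in training plus the words in this text.
    vocab = set(tokens).union(*(m.keys() for m in models.values()))
    return max(models, key=lambda name: log_likelihood(models[name], tokens, len(vocab)))

# Hypothetical training sentences, invented for illustration only.
models = {
    "hurricane": train([
        "storm surge flooded the coast",
        "hurricane winds knocked out power",
        "evacuation ordered before the hurricane made landfall",
    ]),
    "earthquake": train([
        "the earthquake shook buildings",
        "aftershocks followed the magnitude 7 quake",
        "collapsed buildings after the tremor",
    ]),
}

print(classify("power out and streets flooded by the storm surge", models))  # hurricane
```

A real system would need far more data and richer models; the sketch only shows that differences in word frequency between disaster types are enough to drive a crude classifier.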

Obviously we’ll look at established news sites for factual information. To include first-person stories, it’s not yet entirely clear where to look since there aren’t well-defined sites for this type of content. We will be searching blogs and discussion boards and wherever else we can discover personal accounts.

For users (and the intent is for anyone to be able to use the system), we currently envision some kind of browser-based interface. It will probably be visual and may be organized by the locations where things happened. Clicking on a location will bring up descriptions and a timeline of what happened there at different times, and each sub-event will be accompanied by a personal account.

Newsblaster already finds articles that cover the same event. Will you be building on top of Newsblaster?

KM: Yes. After all, Newsblaster represents 11 years of experience in automatically generating summaries, and it contains years of data, though we will modernize it to include social media, which Newsblaster doesn’t currently do. We also need to expand the scope of how Newsblaster uses natural language processing. Currently it relies on common language between articles both to find articles about the same event and to produce summaries. I’m simplifying here, but Newsblaster works by extracting nouns and other important words from articles and then measuring the statistical similarity of their vocabulary to determine which articles cover the same topic.
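The vocabulary-overlap approach described here can be sketched in a few lines. This is a minimal illustration, not Newsblaster’s code: a small stopword filter stands in for noun and keyword extraction, cosine similarity over word counts stands in for the statistical similarity measure, and the 0.3 threshold, the function names, and the example headlines are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list standing in for real noun/keyword extraction.
STOPWORDS = {"a", "an", "the", "of", "in", "on", "and", "to", "is", "are",
             "was", "by", "for", "as", "at", "after", "over"}

def content_words(text):
    """Bag of lowercase alphabetic words with stopwords removed."""
    return Counter(w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS)

def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0

def group_articles(articles, threshold=0.3):
    """Greedy single-link grouping: an article joins the first group that already
    contains an article at least `threshold` similar to it, else starts a new group."""
    vectors = [content_words(text) for text in articles]
    groups = []
    for i, vec in enumerate(vectors):
        for group in groups:
            if any(cosine(vec, vectors[j]) >= threshold for j in group):
                group.append(i)
                break
        else:
            groups.append([i])
    return groups

# Hypothetical headlines.
articles = [
    "Hurricane Sandy floods lower Manhattan subway tunnels",
    "Sandy floods subway tunnels as hurricane hits Manhattan",
    "City council approves new budget for schools",
    "Storm-related water closes several downtown stations",
]
print(group_articles(articles))  # [[0, 1], [2], [3]]
```

The last headline also describes storm flooding, but because it shares no content words with the first two it ends up in a group of its own, which is the limitation of relying on common language between articles.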

In a disaster spanning multiple days with multiple sub-events, the articles we want to capture are going to share a lot less common language and vocabulary. A news item about flooding might not refer to Sandy by name; it may describe the flooding only as “storm-related,” but we have to tie it back to the hurricane even when two articles don’t share common language. There’s also going to be more paraphrasing, as journalists and writers change up their sentences to avoid being repetitive after days of writing about the same topic. That makes it harder for language tools that look for the same words and phrases.

Determining semantic relatedness is obviously the key, but we’re going to need to build new language tools and approaches that don’t rely on the explicit presence of shared terms.

How will “Describing Disasters” find personal stories?

KM: That’s one question, but the more interesting question is how do you recognize a good, compelling story people would want to hear? Not a lot of people have looked at this. While there is work on what makes scientific writing good, recognizing what makes a story compelling is new research.

We’re starting by investigating a number of theories drawn from linguistics and from literature on the kinds of structure and features typically found in narratives. We’ll be looking especially at the theory of the sociolinguist William Labov of the University of Pennsylvania, who has studied the language people use when telling stories. There is often an orientation at the beginning that tells you something about the location, and a sequence of complicating actions that culminates in what Labov calls the most reportable event: something shocking, or involving life and death, that tends to draw a lot of evaluative material. One student is now designing a classifier for the most reportable event, but in a way that is not disaster-specific. We don’t want to have to enumerate it for different events, to have to state explicitly that the most reportable event for a hurricane is flooding and that it’s something different for a tornado or a mass shooting.

What are the hard problems?

KM: Timelines that summarize changes over time, describing how what happened today differs from what we knew yesterday. In general that’s a hard problem. On one hand, everything is different, but most of what is different isn’t important. So how do we know what’s new and important enough to include? Some things that happen on later days may be connected to the initial event, but not necessarily. How do we tell?
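A naive way to find “what’s new today” is to flag sentences whose content words mostly did not appear in yesterday’s coverage. The sketch below does exactly that, using hypothetical sentences, a hypothetical stopword list, and an arbitrary 0.5 threshold. It is not the project’s method, and it deliberately shows the gap: it measures novelty only, not importance or connection to the original event.

```python
import re

# Hypothetical minimal stopword list.
STOPWORDS = {"a", "an", "the", "of", "in", "on", "and", "to", "at"}

def content_words(text):
    """Set of lowercase alphabetic words with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def novel_sentences(yesterday, today, min_new_fraction=0.5):
    """Return today's sentences in which at least min_new_fraction of the
    content words never appeared in yesterday's sentences."""
    seen = set().union(*(content_words(s) for s in yesterday))
    novel = []
    for sentence in today:
        words = content_words(sentence)
        if words and len(words - seen) / len(words) >= min_new_fraction:
            novel.append(sentence)
    return novel

# Hypothetical coverage from two consecutive days.
yesterday = [
    "Hurricane Sandy makes landfall near Atlantic City",
    "Power outages reported across Manhattan",
]
today = [
    "Power outages continue across Manhattan",
    "Crane dangles over Midtown street",
    "Traffic backs up on Midtown avenues",
]
for s in novel_sentences(yesterday, today):
    print(s)  # prints the crane sentence, then the traffic sentence
```

Both later items are flagged as new, but nothing in the sketch says whether the traffic item matters or has anything to do with the hurricane; that judgment is the hard part.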

Connecting sub-events to the initial event that triggered them can be hard for a program to do automatically, because the correlation isn’t necessarily visible to it. We’re going to have to build in more intelligence for this to happen.

Here’s one example. There was a crane hanging over Midtown Manhattan after Hurricane Sandy came in. Going by our normal expectations of hurricane-related events, we wouldn’t think of a crane dangling from a skyscraper over a city street. This crane sub-event spawned its own sub-event, a terrible traffic jam. But traffic jams happen in New York City all the time. How would we know that this particular traffic jam was a result of Hurricane Sandy and not an everyday occurrence?

It does seem like an interesting problem. How will you solve it?

KM: We don’t know, at least not now. Clearly geographic and temporal information is important, but large-scale disasters can encompass enormous areas and the effects go on for weeks. We need to find clues in language to draw the connections between different events.
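Geographic and temporal cues on their own can be sketched as a filter that links a report to a disaster when it falls within some distance and time window. This is a hypothetical illustration, not a proposed solution: the coordinates are approximate, all report times are invented, and the 250 km radius and 21-day window are arbitrary. It shows why such cues are not enough, since an ordinary traffic jam inside the window passes the filter just as easily as a storm-caused one.

```python
import math
from datetime import datetime, timedelta

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))

def plausibly_linked(disaster, report, max_km=250.0, max_days=21):
    """True if the report lies within max_km of the disaster and within max_days after it."""
    distance = haversine_km(disaster["lat"], disaster["lon"], report["lat"], report["lon"])
    elapsed = report["time"] - disaster["time"]
    return distance <= max_km and timedelta(0) <= elapsed <= timedelta(days=max_days)

# Approximate coordinates (near Atlantic City and Midtown Manhattan); times are hypothetical.
disaster = {"lat": 39.36, "lon": -74.42, "time": datetime(2012, 10, 29, 20, 0)}
storm_traffic = {"lat": 40.76, "lon": -73.98, "time": datetime(2012, 10, 30, 8, 0)}
routine_traffic = {"lat": 40.76, "lon": -73.98, "time": datetime(2012, 11, 9, 17, 30)}
months_later = {"lat": 40.76, "lon": -73.98, "time": datetime(2013, 3, 1, 9, 0)}

for name, report in [("storm_traffic", storm_traffic),
                     ("routine_traffic", routine_traffic),
                     ("months_later", months_later)]:
    print(name, plausibly_linked(disaster, report))
```

Both traffic reports come back as linked, and only the one far outside the time window is rejected, so distance and time narrow the candidates without telling the program which ones the disaster actually caused.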

We’re just getting started. And that’s the fun of research, the challenge of the difficult. You start with a question or a goal without an easy answer and you work toward it.

There are other problems, of course. The amount of data surrounding a large-scale disaster is enormous. Somehow we’ll have to wade through massive amounts of data to find just what we need. Validation is another issue. How do we know if we’ve developed something that is correct? We’ll need metrics to evaluate how well the system is working.

Another issue will be reining in everything we will want to do. There is so much opportunity with this project, particularly for multidisciplinary studies. We can easily see pulling in journalism students and those working in visualization. Presenting the information in an appealing way will make the system usable by a wider range of people, and it may generate new ways of looking at the data. We see this project as a start; there is a lot of potential for something bigger.