Invisible, machine-readable AirCode tags make tagging objects part of the 3D-fabrication process

To uniquely identify and encode information in printed objects, Columbia researchers Dingzeyu Li, Avinash S. Nair, Shree K. Nayar, and Changxi Zheng have invented a process that embeds carefully designed air pockets, or AirCode tags, just below the surface of an object. By manipulating the size and configuration of these air pockets, the researchers cause light to scatter below the object's surface in a distinctive profile that can be exploited to encode information. Information encoded this way allows 3D-fabricated objects to be tracked, linked to online content, tagged with metadata, and embedded with copyright or licensing information. Because they sit beneath an object's surface, AirCode tags are invisible to the human eye yet easily read with off-the-shelf digital cameras and projectors.

The AirCode system has several advantages over existing tagging methods such as highly visible barcodes, QR codes, and RFID circuits. AirCode tags are generated during the 3D printing process itself, eliminating post-processing steps to apply tags. Because the tags are built into a printed object, they cannot be removed, whether inadvertently or intentionally, nor do they obscure any part of the object or detract from its appearance. Their invisibility also means that the very presence of encoded information can remain hidden.

“With the increasing popularity of 3D printing, it’s more important than ever to personalize and identify objects,” says Changxi Zheng, who helped develop the method. “We were motivated to find an easy, unobtrusive way to link digital information to physical objects. Among their many uses, AirCode tags provide a way for artists to authenticate their work and for companies to protect their branded products.”

One additional use for AirCode tags is robotic grasping. By encoding both an object’s 3D model and its orientation into an AirCode tag, a robot would just need to read the tag rather than rely on visual or other sensors to locate the graspable part of an object (such as the handle of a mug), which might be occluded depending on the object’s orientation.

AirCode tags, which work with existing 3D printers and with almost every 3D printing material, are easy to incorporate into 3D object fabrication. A user would install the AirCode software and supply a bitstring of the information to be encoded. From this bitstring, the AirCode software automatically generates air pockets of the right size and configuration to encode the supplied information, inserting them at the precise depth needed to be invisible yet still readable with a conventional camera-projector setup.
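The article does not say how the information is turned into a bitstring. As a minimal sketch, assuming the payload is plain text encoded as UTF-8 with 8 bits per byte, the conversion in both directions could look like this; `text_to_bits` and `bits_to_text` are hypothetical helpers, not part of the AirCode software.

```python
def text_to_bits(text: str) -> str:
    """Encode text as a string of '0'/'1' characters, 8 bits per UTF-8 byte (assumed format)."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def bits_to_text(bits: str) -> str:
    """Invert text_to_bits; the bit count must be a multiple of 8."""
    if len(bits) % 8 != 0:
        raise ValueError("bitstring length must be a multiple of 8")
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


bits = text_to_bits("Hi")
print(bits)                # 0100100001101001
print(bits_to_text(bits))  # Hi
```

A real deployment would likely add error-correcting bits before embedding, since the tag is read from a noisy image; that step is not shown here.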

How the AirCode system works

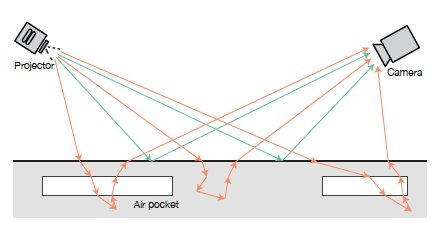

The AirCode system takes advantage of the scattering of light that occurs when light penetrates an object. Subsurface scattering in 3D-printed materials, which people do not normally notice, differs depending on whether the light hits an air pocket or solid material.

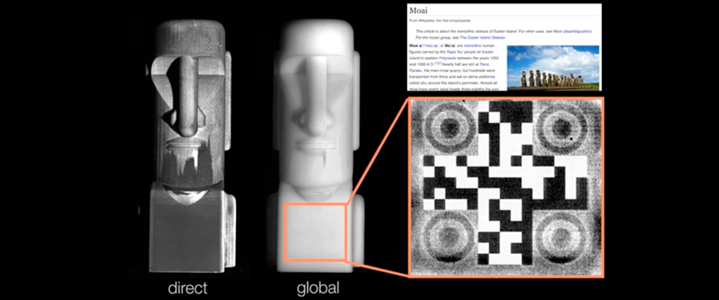

Computational imaging techniques can distinguish reflected light from scattered light and decompose a photo into two separate components: a direct component produced by light reflected off the surface, and a global component produced by light that first penetrates the object and scatters beneath its surface. It's this global component that AirCode tags manipulate to encode information.
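One well-known way to perform this decomposition, from earlier work by Nayar and colleagues, projects a high-frequency pattern in which half the pixels are lit and shifts it so every scene point is directly lit in some frames and unlit in others. Where a point is lit, the camera sees the direct light plus half of the global light; where it is unlit, it sees only that half of the global light. The sketch below assumes this is close to the technique the article refers to and keeps only the idealized case (no projector black level, noise, or defocus); `separate_direct_global` is a hypothetical name.

```python
import numpy as np


def separate_direct_global(frames: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a stack of images into direct and global components (idealized).

    frames: shape (n_frames, height, width), captured while a pattern with half
    of its pixels lit is shifted so each point is lit in some frames, dark in others.
    """
    l_max = frames.max(axis=0)  # point directly lit: direct + half the global light
    l_min = frames.min(axis=0)  # point not directly lit: half the global light only
    direct = l_max - l_min
    global_ = 2.0 * l_min       # trailing underscore avoids the `global` keyword
    return direct, global_


# Synthetic check with two complementary checkerboard patterns.
rng = np.random.default_rng(0)
true_direct = rng.uniform(0.0, 1.0, (4, 4))
true_global = rng.uniform(0.0, 1.0, (4, 4))
checker = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)
frames = np.stack([true_direct * checker + true_global / 2,
                   true_direct * (1 - checker) + true_global / 2])
direct, global_ = separate_direct_global(frames)
print(np.allclose(direct, true_direct), np.allclose(global_, true_global))  # True True
```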

AirCode represents the first use of subsurface light scattering to relay information, and the paper detailing the method, *AirCode: Unobtrusive Physical Tags for Digital Fabrication*, was named Best Paper at this year's ACM Symposium on User Interface Software and Technology (UIST), the premier forum for innovations in human-computer interfaces.

The method's other innovations are algorithmic and fall into three main steps:

**Analyzing the density and optical properties of a material.** Most plastic 3D printer materials exhibit strong subsurface scattering, which differs from material to material and thus affects the ideal depth, size, and geometry of an AirCode structure. For each printing material, the researchers analyze the optical properties to model how light penetrates the surface and interacts with air pockets.

“The technical difficulty here was to create a physics-based model that can predict the light scattering properties of 3D printed materials especially when air pockets are present inside of the materials,” says PhD student Dingzeyu Li, a coauthor on the paper. “It was only by doing these analyses that we were able to determine the size and depth of individual air pockets.”

This optical analysis needs to be done only once per material; the results are stored in a database.
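Because the analysis runs once per material and is then reused, the database behaves like a cache keyed by material. A minimal sketch, assuming the stored result can be summarized by an air-pocket depth and size (the two quantities Li mentions); `MaterialProfile`, `MaterialDatabase`, and the stand-in analysis function are hypothetical, and the numbers are placeholders rather than measured values.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MaterialProfile:
    """Hypothetical summary of the one-time optical analysis for one material."""
    pocket_depth_mm: float  # depth below the surface at which to place air pockets
    pocket_size_mm: float   # edge length of one air pocket / tag cell


class MaterialDatabase:
    """Run the expensive analysis once per material, then reuse the stored result."""

    def __init__(self, analyze: Callable[[str], MaterialProfile]) -> None:
        self._analyze = analyze
        self._profiles: dict[str, MaterialProfile] = {}

    def get(self, material: str) -> MaterialProfile:
        if material not in self._profiles:
            self._profiles[material] = self._analyze(material)
        return self._profiles[material]


calls = []


def fake_analysis(material: str) -> MaterialProfile:
    # Stand-in for the physics-based simulation; numbers are placeholders only.
    calls.append(material)
    return MaterialProfile(pocket_depth_mm=1.0, pocket_size_mm=2.0)


db = MaterialDatabase(fake_analysis)
db.get("PLA")
db.get("PLA")
print(len(calls))  # 1
```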

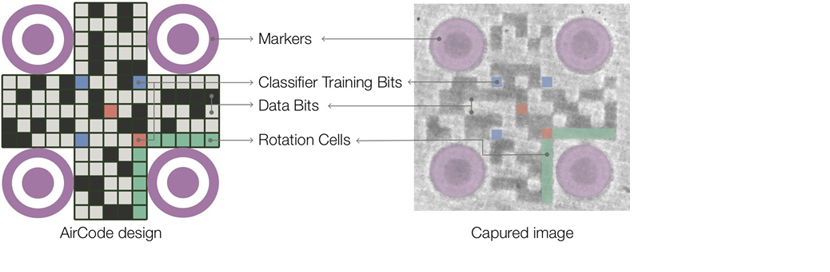

**Constructing the AirCode tag for a given material.** Like QR codes, AirCode tags are made up of cells arranged on a grid, with each cell representing either a 1 (air-filled) or a 0 (filled with printing material), according to the user-supplied bitstring. Circular markers, easily detected by computer vision algorithms, help orient the tag and locate different cell regions.
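Filling the grid from the bitstring can be sketched as a row-by-row layout. This assumes row-major order and zero padding, neither of which the article specifies, and it leaves out the circular markers and predefined calibration bits a real tag also contains; `bits_to_grid` is a hypothetical helper.

```python
def bits_to_grid(bits: str, width: int) -> list[list[int]]:
    """Lay a bitstring onto a grid row by row: 1 = air pocket, 0 = solid material.

    The last row is padded with 0s (solid) if the bit count is not a multiple of width.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    if any(b not in "01" for b in bits):
        raise ValueError("bits must contain only '0' and '1'")
    cells = [int(b) for b in bits]
    cells += [0] * (-len(cells) % width)  # pad to a whole number of rows
    return [cells[i:i + width] for i in range(0, len(cells), width)]


for row in bits_to_grid("1011001", width=3):
    print(row)
# [1, 0, 1]
# [1, 0, 0]
# [1, 0, 0]
```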

Unlike QR codes, which are clearly visible with sharp distinctions between white and black, AirCode tags often appear noisy and blurred, with many levels of gray. To overcome this, the researchers insert predefined bits that serve as training data for calibrating, in real time, the threshold used to classify cells as 0s or 1s.
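The article does not give the calibration rule. One simple choice, assumed here, is to set the threshold midway between the average intensity of the predefined 0 cells and that of the predefined 1 cells, and to learn from those same cells whether 1s appear brighter or darker. `calibrate` and `classify` are hypothetical names.

```python
from statistics import mean


def calibrate(known_values: list[float], known_bits: list[int]) -> tuple[float, bool]:
    """Learn (threshold, ones_brighter) from cells whose bit values are predefined.

    Polarity is learned rather than assumed, since whether air cells look brighter
    or darker may depend on the material and lighting.
    """
    zeros = [v for v, b in zip(known_values, known_bits) if b == 0]
    ones = [v for v, b in zip(known_values, known_bits) if b == 1]
    if not zeros or not ones:
        raise ValueError("need at least one known 0 cell and one known 1 cell")
    mean0, mean1 = mean(zeros), mean(ones)
    return (mean0 + mean1) / 2, mean1 > mean0


def classify(values: list[float], threshold: float, ones_brighter: bool) -> list[int]:
    """Turn measured cell intensities into bits using the calibrated threshold."""
    return [int((v > threshold) == ones_brighter) for v in values]


threshold, ones_brighter = calibrate([0.20, 0.25, 0.60, 0.55], [0, 0, 1, 1])
print(classify([0.3, 0.5, 0.45, 0.1], threshold, ones_brighter))  # [0, 1, 1, 0]
```

Recomputing the threshold from each captured image is what lets the decoder adapt to lighting and material changes.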

**Detecting and decoding the AirCode tag.** To read the tag, a projector shines light on the object (multiple tags may be embedded so that at least one is easily found) and a camera captures images of the object. A computer vision algorithm previously created by coauthor Shree Nayar and adapted for AirCode tags separates each image into its direct and global components, making the AirCode tag clearly visible so it can be decoded.
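Once the global component is available and the circular markers have been used to locate the grid, each cell can be reduced to a single intensity for classification. A minimal sketch, assuming the global-component image has already been cropped and warped so the tag grid fills it exactly (that step is omitted); `cell_means` and its `margin` parameter are hypothetical. Trimming a margin from each cell is a design choice meant to reduce the influence of blur from neighboring cells.

```python
import numpy as np


def cell_means(global_img: np.ndarray, rows: int, cols: int, margin: float = 0.25) -> np.ndarray:
    """Average the global-component intensity inside each cell of a rectified tag image.

    `margin` is the fraction of each cell's height/width trimmed from every side.
    """
    if not 0.0 <= margin < 0.5:
        raise ValueError("margin must be in [0, 0.5)")
    h, w = global_img.shape
    out = np.empty((rows, cols))
    for r in range(rows):
        for c in range(cols):
            y0, y1 = r * h / rows, (r + 1) * h / rows
            x0, x1 = c * w / cols, (c + 1) * w / cols
            dy, dx = (y1 - y0) * margin, (x1 - x0) * margin
            patch = global_img[int(y0 + dy):int(np.ceil(y1 - dy)),
                               int(x0 + dx):int(np.ceil(x1 - dx))]
            out[r, c] = patch.mean()
    return out


img = np.zeros((4, 4))
img[:, :2] = 1.0  # left half bright, right half dark
print(cell_means(img, rows=2, cols=2))  # left cells average 1.0, right cells 0.0
```

The resulting per-cell values are what a threshold calibrated from the predefined bits would then split into 0s and 1s.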

The AirCode system has certain limitations: it requires materials to be homogeneous and semitransparent (though most 3D printer materials fit this description), and the tags become unreadable when covered by opaque paint. Still, tagging printed objects during fabrication has substantial benefits in cost, aesthetics, and function.

Posted 10/24/2017

– Linda Crane