Devices shown to be vulnerable via remote CLKSCREW attack on energy management system

In this interview, Adrian Tang describes how the CLKSCREW attack exploits the design of energy management systems to breach hardware security mechanisms.

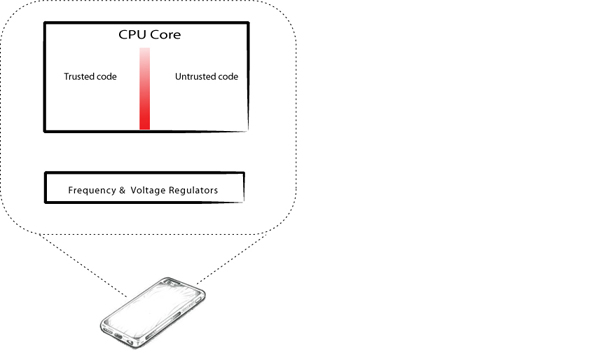

Security on smartphones is maintained by isolating sensitive data such as cryptographic keys in zones that can’t be accessed by unauthorized systems, apps, and other components running on the device. This hardware-imposed security sets a high barrier: it presumes an attacker would have to gain physical access to the device and alter its behavior through physical attacks, typically involving soldering and complex equipment, all without damaging the phone.

Now three Columbia researchers have found they can bypass the hardware-imposed isolation without resorting to such hardware attacks. They do so by leveraging the energy management system, which conserves battery power on small devices loaded with systems and apps. Ubiquitous because they keep power consumption low, energy management systems work by carefully regulating voltage levels across the device, adjusting upward for an active component and downward otherwise. Knowing which components are idle and which are active requires access to every component on the device, no matter what zone it occupies.
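As a rough illustration of why scaling voltage and frequency saves power, the sketch below uses the standard first-order model for dynamic power in CMOS circuits, P ≈ αCV²f. It is not taken from the paper: the function names, the capacitance, and the two operating points are hypothetical values chosen only to show the trend.

```python
# First-order model of dynamic (switching) power: P = activity * C * V^2 * f.
# All numbers below are illustrative assumptions, not measurements of any device.

def dynamic_power_watts(capacitance_farads, voltage_volts, freq_hz, activity=1.0):
    """Estimate switching power for one component at a given operating point."""
    return activity * capacitance_farads * voltage_volts ** 2 * freq_hz


# Hypothetical (voltage, frequency) pairs an energy manager might choose between.
OPERATING_POINTS = {
    "idle": (0.7, 300e6),    # low voltage, low clock
    "active": (1.1, 2.0e9),  # high voltage, high clock
}


def pick_operating_point(is_active):
    """Scale up for an active component, down otherwise."""
    return OPERATING_POINTS["active" if is_active else "idle"]


if __name__ == "__main__":
    capacitance = 1e-9  # assumed effective switched capacitance, in farads
    for state in (True, False):
        volts, hertz = pick_operating_point(state)
        watts = dynamic_power_watts(capacitance, volts, hertz)
        print(f"active={state}: {volts} V, {hertz / 1e6:.0f} MHz, {watts:.3f} W")
```

Because power grows with the square of the voltage and linearly with frequency, even a modest drop in both for idle components adds up to a large saving, which is why this kind of regulation appears on virtually every battery-powered device.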

The researchers exploited this design feature to create a new type of fault attack, CLKSCREW. Using this attack, they were able to infer cryptographic keys and load their own unauthorized code onto a phone without having physical access to the device. In experiments, the researchers ran their attacks against the ARMv7 architecture on a Nexus 6 smartphone, but the attack would likely succeed on similar devices, since the need to conserve energy is universal among them. The paper describing the attack, “CLKSCREW: Exposing the Perils of Security-Oblivious Energy Management,” was named most distinguished paper at last month’s USENIX Security Symposium.

In this interview, lead author Adrian Tang describes the genesis of the idea for CLKSCREW and the engineering effort required to implement it.

What made you think energy management systems might have important security flaws?

We asked ourselves what technologies are ubiquitous, are so complex that any vulnerability would be hard to spot, but are nevertheless little studied from a security perspective. Energy management filled all the checkboxes. It seemed like an area ripe for exploitation, especially considering that devices are made up of many different components, all designed by different people. And in manufacturers’ relentless pursuit of smaller, faster, and more energy-efficient devices, security unfortunately is bound to be relegated to the backburner during the design of these devices.

When we looked further, we saw that the energy management features, to be effective, reach almost everything running on a device. Normally, sensitive data on a device is protected through a hardware design that isolates execution environments, so if you have lower-level privileges, you can’t touch the higher-privileged stuff. It’s like a safe; non-authorized folks outside the safe can’t see or touch what’s inside.

But the hardware voltage and frequency regulators, which are part of the energy management system, do work across what’s inside and what’s outside this safe, and are thus able to affect the environment within the safe from the outside. Under the right conditions, this has serious implications for the integrity of the cryptography protecting the safe.

The unfortunate kicker is that software controls these regulators. If software can affect aspects of the underlying hardware, that gives us a way into the processors without having to have physical access.

How does the CLKSCREW attack work?

It’s a type of fault attack that pushes the regulators to operate outside the suggested operating parameters, which are publicly documented. This destabilizes the system so it doesn’t operate correctly and doesn’t follow its normal checks, like requiring digital signatures to verify that code is trusted. So we were able to break cryptography to infer secret keys and even bypass cryptographic checks to load our own self-signed code into the trusted environment, tricking the system into thinking our code was coming from a trusted company, in this case, us.

A fault attack is a known type of attack that’s been around awhile. However, fault attacks typically require physical access to the device to bypass the hardware isolation. Our attack does not require physical access because we can use software to abuse the energy management mechanisms and control parts of the system we are not supposed to be able to reach. The assumption, of course, is that we have already gained software access to the device. To achieve that, an attacker can get the device owner to install malware, maybe by clicking an email attachment or downloading a malware-laden app.

Why the name CLKSCREW?

This name is an oblique reference to the operating feature on the device we are exploiting – the clock. All digital circuits require a common clock signal to coordinate their functions. The faster the clock operates, the higher the operating frequency and thus the faster your device runs. When you increase the frequency, more operations need to take place over the same time period, so of course that also reduces the amount of time allowed for an operation. CLKSCREW over-stretches the frequency to the extent that operations cannot complete in time. Unexpected operations happen and the system becomes unstable, providing an avenue for an attacker to breach the security of the device.
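The timing argument above can be made concrete with a minimal sketch of the usual setup-time constraint for a clocked circuit: the clock period must be at least as long as the time data needs to leave one register, travel through the logic, and settle at the next. This is an editorial illustration, not code from the paper; the function names and all delay values are hypothetical.

```python
# Setup-time constraint for a clocked circuit:
#   clock_period >= launch_delay + path_delay + setup_time + margin
# If the clock runs faster than this allows, the receiving register may
# capture a value that has not finished settling, i.e. a fault.
# All delay values used here are made-up illustrative numbers.

def min_clock_period_ns(launch_delay_ns, path_delay_ns, setup_ns, margin_ns=0.0):
    """Shortest clock period at which data still arrives in time."""
    return launch_delay_ns + path_delay_ns + setup_ns + margin_ns


def is_timing_safe(freq_ghz, launch_delay_ns, path_delay_ns, setup_ns, margin_ns=0.0):
    """Return True if one clock cycle is long enough for the operation to complete."""
    period_ns = 1.0 / freq_ghz  # 1 GHz corresponds to a 1 ns period
    required_ns = min_clock_period_ns(launch_delay_ns, path_delay_ns, setup_ns, margin_ns)
    return period_ns >= required_ns


if __name__ == "__main__":
    delays = dict(launch_delay_ns=0.05, path_delay_ns=0.25, setup_ns=0.05)
    for freq_ghz in (2.0, 4.0):
        status = "safe" if is_timing_safe(freq_ghz, **delays) else "timing violation"
        print(f"{freq_ghz} GHz: {status}")
```

With these assumed delays, the circuit needs roughly 0.35 ns per cycle, so a 2 GHz clock (0.5 ns period) leaves enough time while a 4 GHz clock (0.25 ns period) does not. Overclocking past that point is what produces the "unexpected operations" described above.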

How difficult was it to create this attack?

Quite difficult. Because energy management features do not exist in just one layer of the computing stack, we had to do a deep dive into every single stack layer to figure out the different ways software in the upper layers can influence the hardware on lower layers. We looked at the drivers, the applications above them, and the hardware below them, studying the physical aspects of the software and the different parameters in each case. Furthermore, pulling this attack off requires knowledge from many disciplines: electrical engineering, computer science, computer engineering, and cryptography.

Were you surprised your attack worked as well as it did?

Yes. While we were somewhat surprised there were no limits on the hardware regulators, we were more flabbergasted at the fact that the regulators operate across sensitive compartments without any security controls. While the absence of such controls lets energy management work as fast as possible, keeping users happy, security takes a back seat.

Can someone take the attack as you describe it in your paper and carry out their own attack?

Maybe with some work; however, I intentionally left out some key details of the attacks, such as the parameter values we use to time the attacks.

As part of responsible disclosure in this line of attack work, we contacted the vendors of the devices before publishing, and they were very receptive, acknowledging the seriousness of the problem, which they were able to reproduce. They are in the process of working on mitigations for existing devices, as well as future ones. It is not an easy problem to fix. Any potential fixes may involve changes to multiple layers in the stack.

We hope the paper will convince the industry as well as academia to not neglect security while designing all parts of the systems. If history is any indication, any component in the computing stack is fair game for a determined attacker. Energy management features, as we have shown in this work, are certainly no exception.

Adrian Tang is a fifth-year PhD student advised by Simha Sethumadhavan and Salvatore Stolfo. He expects to defend his thesis on rethinking security issues occurring at software-hardware interfaces later this year.

Posted 10/02/17

– Linda Crane