Back to analog computing: Columbia researchers merge analog and digital computing on a single chip

Digital computers have had a remarkable run, giving rise to the modern computing and communications age and perhaps ushering in a new one of artificial intelligence and the Internet of things. But speed bumps are starting to appear. As digital chips get smaller and hotter, limits to future gains in speed and performance are coming into view, and researchers are looking for alternative computing methods. In any case, the discrete, step-by-step methodology of digital computing was never a good fit for dynamic or continuous problems, such as modeling plasmas or running neural networks, or for robots and other systems that react in real time to real-world inputs. A better approach may be analog computing, which directly solves the ordinary differential equations at the heart of continuous problems. Essentially mothballed since the 1970s for being inaccurate and hard to program, analog computing is being updated by Columbia researchers, who merge analog and digital on a single chip to gain the advantages of analog while working around its problems.

What is a computer? The answer would seem obvious: an electronic device performing calculations and logical operations on binary data. Millions of simple, discrete operations carried out one after another at lightning speed (adding two numbers, comparing two others, fetching a third from memory) are responsible for the modern computing age, an achievement made all the more impressive considering that the world is not digital.

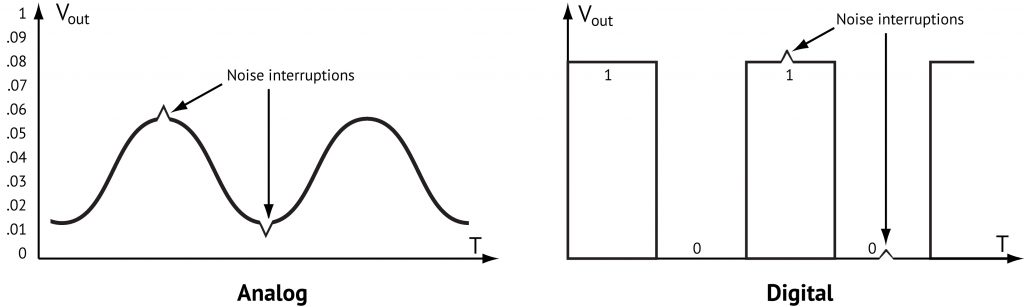

It is analog. To accommodate the continuous time of the real world, digital computers subdivide time into ever smaller discrete steps, calculating at each step the values that describe the world at a particular instant before advancing to the next.

This discretization of time has computational costs, but each new chip generation, faster and more powerful than the last, could be counted on to make up for the inefficiencies. As chips shrink and the resulting heat becomes harder to dissipate, however, continued improvements are less and less guaranteed.
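To make the cost of discretization concrete, here is a minimal Python sketch (not taken from the researchers' work) that steps the simple decay equation dx/dt = -kx forward in time with the forward Euler method. The function name `euler_decay` and the chosen values are illustrative assumptions. Because forward Euler is a first-order method, each tenfold reduction in step size cuts the error roughly tenfold, but it also multiplies the number of steps, and thus the work, tenfold.

```python
import math

def euler_decay(x0: float, k: float, t_end: float, n_steps: int) -> float:
    """Approximate x(t_end) for dx/dt = -k*x using n_steps forward-Euler steps."""
    dt = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        # Evaluate the rate of change at the current instant, then jump
        # ahead by one discrete time step.
        x += dt * (-k * x)
    return x

exact = math.exp(-1.0)  # true x(1) for x0 = 1, k = 1
for n in (10, 100, 1_000, 10_000):
    err = abs(euler_decay(1.0, 1.0, 1.0, n) - exact)
    print(f"{n:>6} steps -> error {err:.1e}")
```

The trade-off is the point: accuracy is bought with ever more steps, which is exactly the overhead that faster chips were expected to absorb.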

Some problems, especially those posed by highly dynamic systems, have never fit snugly into the discretization model, no matter how small the steps. Plasmas are one example. Often modeled at a basic level as a fluid, like air or water, a plasma is also electrically charged: its atoms have come apart, leaving electrons and nuclei floating free, all of them sensitive to electromagnetic fields. With so many variables interacting at the same time, modeling plasmas is intrinsically difficult.

Neural networks, perhaps key to true artificial intelligence, are also highly dynamic, relying on an enormous number of simultaneous calculations before converging on an answer.

The analog alternative

“At the core of these types of continuous problems are ordinary differential equations. Some number changes in time; an equation describes this change mathematically using calculus,” explains Yipeng Huang, a computer architecture PhD candidate working in the lab of Simha Sethumadhavan. “Computers in the 1950s were designed to solve ordinary differential equations. People took these equations that explained a physical phenomenon and built computer circuits to describe them.”

“Analog” derives from “analogy” for the way the early computer pioneers approached computing: rather than decompose a problem into discrete steps, they set up a small-scale version of a physical problem and studied it that way. A wind tunnel is a (non-electronic) analog computer. An automobile or plane is placed inside, the wind tunnel is turned on, and researchers observe how the wind flows around the object, writing equations to describe what they see. By adjusting wind direction or speed, they can draw analogies from what happens in the tunnel to what would happen in the real world.

Electronic analog computers are an abstraction of this process: circuits embody an equation, which the computer solves directly and in real time. With no notion of a step, no need to translate between analog and digital, and no power-hungry clock to keep everything in sync, analog computing can also be much more energy efficient than digital.
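On a classic analog computer, an equation such as x'' = -ω²x is typically wired as a loop of two integrators and an inverting gain: the gain forms the acceleration from x, the first integrator turns acceleration into velocity, and the second turns velocity into position, which feeds back into the gain. The Python sketch below mimics that wiring. Python cannot run in continuous time, so it approximates each integrator with a tiny fixed time step (`dt`), the very discretization a real analog circuit avoids. The function name `oscillator_patch` and its parameters are hypothetical.

```python
import math

def oscillator_patch(omega: float, x0: float, v0: float,
                     t_end: float, dt: float = 1e-4) -> float:
    """Emulate a two-integrator analog patch for x'' = -omega**2 * x.

    A real analog computer integrates continuously; the fixed step dt is
    only a digital stand-in for that behavior.
    """
    x, v = x0, v0                    # outputs of the two integrators
    for _ in range(round(t_end / dt)):
        a = -omega**2 * x            # inverting gain block fed by x
        v += a * dt                  # integrator 1: acceleration -> velocity
        x += v * dt                  # integrator 2: velocity -> position
    return x

# The patch should reproduce the known solution x(t) = cos(omega * t).
t = math.pi
print(oscillator_patch(1.0, 1.0, 0.0, t), math.cos(t))
```

The structure, not the arithmetic, is what carries over to hardware: each line in the loop corresponds to one circuit block, and the feedback from `x` into the gain is a physical wire.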

This energy efficiency opens possibilities in robotics and is critical if small, lightweight robots are to operate in the field untethered from a power source. Though robots incorporate computing, they are unlike general-purpose computers that receive, operate on, and output mostly digital data. Robots might operate in an entirely analog context, receiving inputs from the environment and responding with an analog output, as when a robot senses a solid surface and mechanically changes direction. When inputs and outputs are both analog, translating from analog to digital and back to analog is pure overhead. The same is true for cyber-physical systems, such as the smart grid, autonomous automobile systems, and the emerging Internet of things, where sensors continuously take readings of the physical environment.

Analog computing would seem to make sense, but analog computers have major downsides; after all, there’s a reason they fell out of favor. Susceptible to noise, analog computers are prone to error, lack precision and accuracy, and are difficult to program.

Analog in a digital world

In a project funded by the National Science Foundation, Huang has been working with Ning Guo, a PhD student in electrical engineering advised by Yannis Tsividis, to design and build hardware to support analog computing. The project started five years ago, with both researchers immersing themselves in the literature from the 1950s. Says Guo, “Reading those old books, when we could even find them, helped us get inside how people used analog computing, the kinds of simulation they were able to run, how powerful analog computing was 50 years ago.”

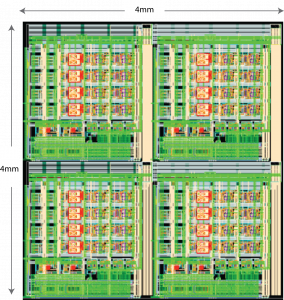

While most people picture analog computers as they were in the 1950s (big and heavy, difficult to use, and less powerful than an iPhone), Huang and Guo’s analog hardware is nothing like that. It is a silicon chip measuring 4 mm by 4 mm, fabricated with 65 nm transistor features, and containing four copies of a design block that can be replicated over and over. It follows conventional architectures and includes digital building blocks. The materials and the VLSI design are fully up to date.

“The same forces that miniaturized digital computers, we applied here,” says Huang.

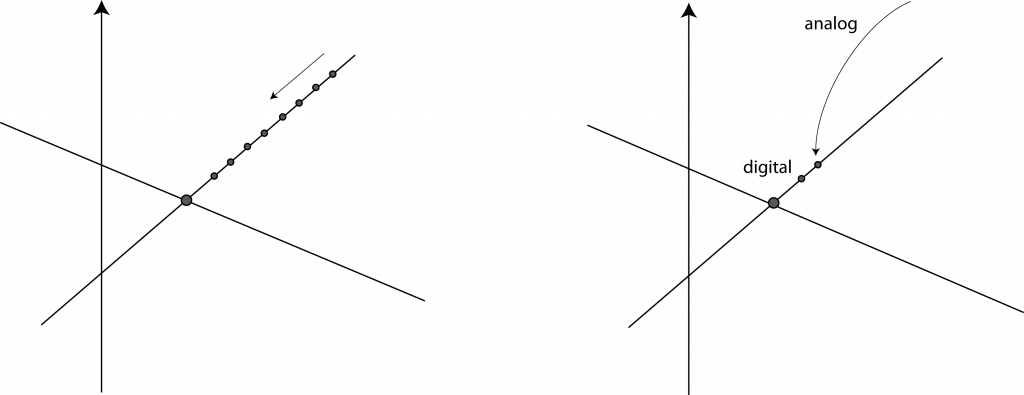

The architecture itself is conceived as a digital host with an analog accelerator; computations that are more efficiently done through analog computing get handed off by the host to the analog accelerator.

The idea is to interleave analog and digital processing within a single problem, applying each method to what it does best. Analog processing, for instance, might quickly produce an initial estimate, which is then fed to a digital component that iteratively homes in on an accurate answer. The speed of the analog stage shortens the computation by skipping the initial iterations, while the incremental digital approach zeroes in on the most accurate answer.
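The hybrid scheme can be illustrated with a small Python sketch. Here the digital refinement is plain Jacobi iteration, an assumed choice since the article does not say which iterative method the researchers use, and the "analog estimate" is a hard-coded vector about 1% off the true solution, standing in for the accelerator's low-precision output. The names `jacobi` and `analog_estimate` are hypothetical. Seeding the iteration with the estimate reaches the same tolerance in fewer iterations than starting from zero.

```python
def jacobi(A, b, x0, tol=1e-10, max_iter=1000):
    """Solve A x = b by Jacobi iteration from x0; return (x, iterations).

    Assumes A is strictly diagonally dominant, which guarantees convergence.
    """
    n = len(b)
    x = list(x0)
    for k in range(1, max_iter + 1):
        # Each unknown is recomputed from the previous iterate's other entries.
        x_new = [(b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
                 for i in range(n)]
        if max(abs(new - old) for new, old in zip(x_new, x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")

A = [[4.0, 1.0, 0.0],
     [1.0, 4.0, 1.0],
     [0.0, 1.0, 4.0]]
b = [6.0, 12.0, 14.0]  # chosen so that the exact solution is [1, 2, 3]

# Hypothetical low-precision analog output: each entry about 1% off.
analog_estimate = [1.01, 1.98, 3.02]

_, cold_iters = jacobi(A, b, [0.0, 0.0, 0.0])
x, warm_iters = jacobi(A, b, analog_estimate)
print(f"digital only: {cold_iters} iterations; analog-seeded: {warm_iters}")
```

Because Jacobi shrinks the error by a roughly constant factor per step, the iterations saved depend on how much closer the starting point is to the answer; a more precise analog stage would skip more of them.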

If hardware looked different in the 1950s, so did programming. Early programmers had to work out both the physics of a problem and how to program the machine, an approach at odds with the modern practice of using abstractions to spare programmers the nitty-gritty details of the hardware. Here again, Huang adapts analog practices to modern expectations, employing solvers that abstract away the mathematics so that a programmer need only describe the problem at a high level while the program selects the appropriate solver.

The analog computations on the inside may be messy, but from a software perspective, a solver is just like any other software module that gets inserted at the appropriate place.
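One way to picture such a solver layer is a common interface behind which either an analog or a digital back end can sit. The Python sketch below is purely illustrative: `ODEProblem`, `DigitalEulerSolver`, `AnalogSolverStub`, and `select_solver` are hypothetical names, the analog back end is a placeholder rather than a real hardware driver, and the selection rule (use analog only when the caller accepts approximate results) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol

Vector = List[float]

@dataclass
class ODEProblem:
    """High-level description of dx/dt = f(x), integrated from x0 to t_end."""
    f: Callable[[Vector], Vector]
    x0: Vector
    t_end: float
    needs_high_precision: bool = True

class Solver(Protocol):
    def solve(self, problem: ODEProblem) -> Vector: ...

class DigitalEulerSolver:
    """Digital back end: fixed-step forward Euler."""
    def __init__(self, dt: float = 1e-4) -> None:
        self.dt = dt

    def solve(self, problem: ODEProblem) -> Vector:
        x = list(problem.x0)
        for _ in range(round(problem.t_end / self.dt)):
            dx = problem.f(x)
            x = [xi + self.dt * dxi for xi, dxi in zip(x, dx)]
        return x

class AnalogSolverStub:
    """Hypothetical placeholder for a driver that would configure the analog chip."""
    def solve(self, problem: ODEProblem) -> Vector:
        raise NotImplementedError("no analog hardware in this sketch")

def select_solver(problem: ODEProblem, analog_available: bool) -> Solver:
    # Assumed policy: hand approximation-tolerant problems to the accelerator.
    if analog_available and not problem.needs_high_precision:
        return AnalogSolverStub()
    return DigitalEulerSolver()

# The caller only describes the problem; it never touches hardware details.
decay = ODEProblem(f=lambda x: [-x[0]], x0=[1.0], t_end=1.0)
solver = select_solver(decay, analog_available=True)
print(type(solver).__name__, solver.solve(decay))
```

Whichever back end is chosen, the calling code stays the same, which is what lets a solver drop in like any other software module.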

The challenge of linear equations

Among researchers, analog computing is drawing renewed interest for its energy efficiency and for being able to efficiently solve dynamic and other complex problems.

For broader acceptance, analog computing will have to prove itself by solving the far simpler linear equations that digital computers solve all the time. Because these problems are so easy to solve computationally, programmers go to great lengths to reduce high-level problems to linear algebra.

If analog computing can solve linear equations, and do so faster than digital computers, others will be forced to take notice. So far, Huang and Guo’s linear algebra experiments with their hybrid chip show promise, achieving 10 times the performance of digital methods while consuming one-third less energy (“Evaluation of an Analog Accelerator for Linear Algebra”). There are caveats, to be sure, but also opportunities to improve: as the door closes on future efficiency gains in the digital world, it is cracking open in the analog one, with major implications for future applications.
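How can a circuit "solve" Ax = b at all? One common formulation, assumed here rather than taken from the researchers' papers, wires integrators so that the circuit state obeys dx/dt = b - Ax. When A is symmetric positive definite, the state settles where dx/dt = 0, which is exactly the solution. The Python sketch below emulates that settling digitally with a small time step; `analog_linear_solve`, `t_end`, and `dt` are hypothetical, and real hardware would also need to scale values to fit the circuit's signal range.

```python
def analog_linear_solve(A, b, t_end=10.0, dt=1e-3):
    """Digitally emulate integrators whose state obeys dx/dt = b - A x.

    For symmetric positive-definite A the state settles where dx/dt = 0,
    i.e. where A x = b. The fixed step dt stands in for continuous time.
    """
    n = len(b)
    x = [0.0] * n                      # integrators start from zero
    for _ in range(round(t_end / dt)):
        residual = [b[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [xi + dt * ri for xi, ri in zip(x, residual)]
    return x

A = [[4.0, 1.0, 0.0],
     [1.0, 4.0, 1.0],
     [0.0, 1.0, 4.0]]
b = [6.0, 12.0, 14.0]                  # exact solution is [1, 2, 3]
print(analog_linear_solve(A, b))
```

In this formulation the settling time is governed by the smallest eigenvalue of A: the error decays roughly like e^(-λ_min·t), so poorly conditioned systems take longer to settle.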

At this point, it’s all research and prototyping. Says Huang, “We’re just trying to show that analog computing works. True integration with digital requires many steps ahead. It has to scale, it has to be more accurate, but the immediate goal is to give concrete examples of problems solvable by analog computing, categorized by type and with all pitfalls spelled out. Many modern problems didn’t exist when analog computers were widespread and so haven’t yet been looked at on analog computers; we’re the first to do it.”

Focused on the future of computing, both Huang and Guo are quietly confident that analog computing integrated with digital will someday eclipse current forms of digital computing. Says Guo, “Robotics, artificial intelligence, ubiquitous sensing (whether for health monitoring, weather and climate data-gathering, electric and smart grids) may require low-power signal processing and computing with real-time data, and they will operate more smoothly and efficiently if computing is lightweight. Increasingly these future applications may be more and more the ones we come to rely on.”

Technical details are contained in two papers, “Evaluation of an Analog Accelerator for Linear Algebra” and “Continuous-Time Hybrid Computing with Programmable Nonlinearities,” and in “Energy-Efficient Hybrid Analog/Digital Approximate Computation in Continuous Time,” an article that appeared in the July issue of the IEEE Journal of Solid-State Circuits.

About the researchers:

Yipeng Huang received a B.S. degree in computer engineering in 2011, and M.S. and M.Phil. degrees in computer science in 2013 and 2015, respectively, all from Columbia University. He previously worked at Boeing, in the area of computational fluid dynamics and engineering geometry. In addition to analog computing applications, he researches performance and efficiency benchmarking of robotic systems. Advised by Simha Sethumadhavan, Huang expects to complete his PhD in 2017.

Ning Guo is finishing his PhD in electrical engineering under the guidance of Yannis Tsividis. He received a bachelor’s degree in IC design from Dalian University of Technology (Dalian, Liaoning, China) in 2010 before attending Columbia University, where he received an MS in electrical engineering (2011) and an MPhil (2015). His research interests include ultra-low-power analog/mixed-signal circuit design, analog/hybrid computation, and analog acceleration for numerical methods. He is also developing an interest in wearable technology and devices.

Posted: 11/22/2016, Linda Crane