Lifelike hair in Moana relies on animation techniques developed by Eitan Grinspun

Technique models geometry of individual hair strands to predict how soft, curly hair will react to a character’s motions or the external forces of wind and water.

Two professors in the Computer Science Department at Columbia University have been named Fellows of the Institute of Electrical and Electronics Engineers (IEEE): Julia Hirschberg for “contributions to text-to-speech synthesis and spoken language understanding,” and Luca Carloni for “contributions to system-on-chip design automation and latency-insensitive design.” Recognizing an individual’s outstanding record of accomplishments, the IEEE Fellow is the highest grade of IEEE membership, limited every year to one-tenth of one percent of the total voting membership.

Julia Hirschberg is the Percy K. and Vida L. W. Hudson Professor of Computer Science and Chair of the Computer Science Department. She is also a member of the Data Science Institute. Her main area of research is computational linguistics, with a focus on the relationship between intonation and discourse. Current projects include deceptive speech, spoken dialogue systems, entrainment in dialogue, speech synthesis, speech search in low-resource languages, and hedging behaviors.

“I’m very pleased to receive this honor from my friends and colleagues,” says Hirschberg.

Upon receiving her PhD in Computer and Information Science from the University of Pennsylvania, Hirschberg went to work at AT&T Bell Laboratories, where in the 1980s and 1990s she pioneered techniques in text analysis for prosody assignment in text-to-speech synthesis, developing corpus-based statistical models that incorporate syntactic and discourse information, models that are in general use today. She joined Columbia University faculty in 2002 as a Professor in the Department of Computer Science and has served as department chair since 2012.

As of November 2016, her publications have been cited 15,628 times, and she has an h-index of 62.

Among many honors, Hirschberg is a 2015 Fellow of the Association for Computing Machinery (ACM), and a Fellow also of the Association for Computational Linguistics (2011), of the International Speech Communication Association (2008), and of the Association for the Advancement of Artificial Intelligence (1994). In 2011, she was honored with the IEEE James L. Flanagan Speech and Audio Processing Award and also the ISCA Medal for Scientific Achievement. In 2007, she was granted an Honorary Doctorate from the Royal Institute of Technology, Stockholm, and in 2014 was elected to the American Philosophical Society.

Hirschberg serves on numerous technical boards and editorial committees, including the IEEE Speech and Language Processing Technical Committee, and is co-chair of CRA-W. Previously she served as editor-in-chief of Computational Linguistics and co-editor-in-chief of Speech Communication, and was on the Executive Board of the Association for Computational Linguistics (ACL); on the Executive Board of the North American ACL; on the CRA Board of Directors; on the AAAI Council; on the Permanent Council of the International Conference on Spoken Language Processing (ICSLP); and on the board of the International Speech Communication Association (ISCA). She is also noted for her leadership in promoting diversity, both at AT&T and Columbia, and in broadening participation in computing.

Luca Carloni is an Associate Professor within the Computer Science Department, where he leads the System-Level Design Group. His research centers on next-generation chip design, including methodologies and tools for system-on-chip (SoC) platforms, multi-core architectures, embedded systems, computer-aided design, hardware-software integration, and cyber-physical systems. He is also a member of Columbia’s Data Science Institute.

“I am very honored to have been elected an IEEE fellow,” says Carloni. “The IEEE is a great organization that supports the work and research of many electrical and computer engineers worldwide.”

For his seminal contributions to system-level design, Carloni received a Faculty Early Career Development (CAREER) Award in 2006 from the National Science Foundation. In 2008 he was named an Alfred P. Sloan Research Fellow and two years later received the Office of Naval Research (ONR) Young Investigator Award.

In November 2015, Carloni was one of four guest editors of a special issue of the Proceedings of the IEEE on the evolution of Electronic Design Automation and its future developments; in the same issue, his article “From Latency-Insensitive Design to Communication-Based System-Level Design” addressed the challenges of engineering system-on-chip platforms while proposing a new design paradigm to cope with their complexity.

Carloni is coauthor of Photonic Network-on-Chip Design (Integrated Circuits and Systems) and over one hundred and thirty refereed papers. He received the best paper award at DATE’12 for the paper “Compositional System-Level Design Exploration with Planning of High-Level Synthesis” and at CloudCom’12 for the paper “A Broadband Embedded Computing System for MapReduce Utilizing Hadoop.” He coauthored “A Methodology for Correct-by-Construction Latency-Insensitive Design” (1999), which was selected for The Best of ICCAD, a collection of the best papers published at the IEEE International Conference on Computer-Aided Design from 1982 to 2002. Carloni holds two patents.

As of November 2016, his publications have been cited 5,000 times, and he has an h-index of 36.

Currently an associate editor of the IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Carloni previously was associate editor of the ACM Transactions on Embedded Computing Systems (2006-2013). He is active in his research community, serving on numerous technical program committees. In 2010 he was technical program co-chair of the International Conference on Embedded Software, the International Symposium on Networks-on-Chip (NOCS), and the International Conference on Formal Methods and Models for Codesign. He was also vice general chair (in 2012) and general chair (in 2013) of Embedded Systems Week, the premier event covering all aspects of embedded systems and software. Carloni is also co-leader of the Platform Architectures theme in the Gigascale Systems Research Center and participates in the Center for Future Architectures Research.

At Columbia since 2004, Carloni holds a Laurea Degree Summa cum Laude in Electronics Engineering from the University of Bologna, Italy, a Master of Science in Engineering from the University of California at Berkeley, and a Ph.D. in Electrical Engineering and Computer Sciences from the University of California at Berkeley, where he was the 2002 recipient of the Demetri Angelakos Memorial Achievement Award in recognition of altruistic attitude towards fellow graduate students.

Posted 11/29/2016

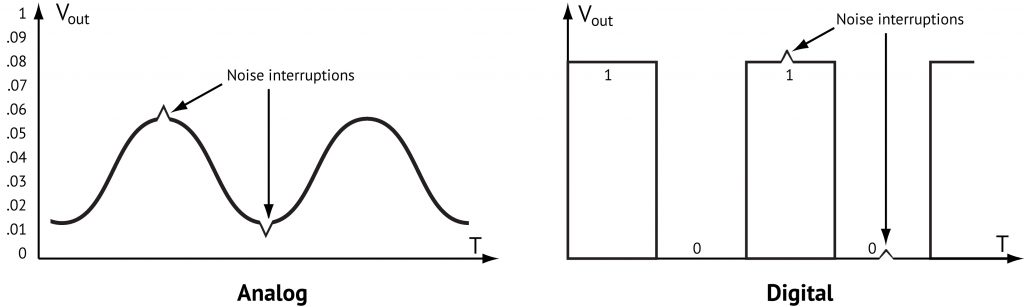

Digital computers have had a remarkable run, giving rise to the modern computing and communications age and perhaps leading to a new one of artificial intelligence and the Internet of things. But speed bumps are starting to appear. As digital chips get smaller and hotter, limits to increases in future speed and performance come into view, and researchers are looking for alternative computing methods. In any case, the discrete step-by-step methodology of digital computing was never a good fit for dynamic or continuous problems—modeling plasmas or running neural networks—or for robots and other systems that react in real time to real-world inputs. A better approach may be analog computing, which directly solves the ordinary differential equations at the heart of continuous problems. Essentially mothballed since the 1970s for being inaccurate and hard to program, analog computing is being updated by Columbia researchers; by merging analog and digital on a single chip, they gain the advantages of analog and bypass its problems.

What is a computer? The answer would seem obvious: an electronic device performing calculations and logical operations on binary data. Millions of simple discrete operations carried out at lightning speed one after another (adding two numbers, comparing two others, fetching a third from memory) are responsible for the modern computing age, an achievement all the more impressive considering the world is not digital.

It is analog; so to adjust for the continuous time of the real world, digital computers subdivide time into smaller and smaller discrete steps, calculating at each step the values that describe the world at a particular instant before advancing to the next step.
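To make the idea of subdividing time concrete, here is a minimal sketch of step-by-step integration. It assumes the forward Euler method and a simple decay equation, dy/dt = -y; both are chosen purely for illustration and are not taken from the researchers' work. The function and variable names are hypothetical.

```python
import math

def euler_solve(f, y0, t_end, steps):
    """Approximate y(t_end) for dy/dt = f(t, y) with forward Euler steps."""
    dt = t_end / steps          # size of each discrete time step
    t, y = 0.0, y0
    for _ in range(steps):
        y += dt * f(t, y)       # advance the state by one step
        t += dt
    return y

def decay(t, y):
    """Illustrative equation (an assumption): dy/dt = -y, so y(t) = exp(-t)."""
    return -y

exact = math.exp(-1.0)
for steps in (10, 100, 1000):
    approx = euler_solve(decay, 1.0, 1.0, steps)
    print(f"{steps:5d} steps: y(1) ~ {approx:.6f}, error {abs(approx - exact):.6f}")
```

Smaller steps reduce the error but require proportionally more arithmetic, which is the computational cost of discretization.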

This discretization of time has computational costs, but each new chip generation—faster and more powerful than the last—could be expected to make up for the inefficiencies. But as chips shrink in size and it becomes harder to dissipate the resulting heat, continued improvements are less and less guaranteed.

Some problems, especially those presented by highly dynamic systems, never fit snugly into the discretization model, no matter how infinitesimally small the steps. Plasmas are one example. Modeled usually at a basic level like air and water, a plasma is also electrically charged. Atoms have separated, and electrons and nuclei float free, all of it sensitive to electromagnetics; with so many variables at play at the same time, modeling plasmas is intrinsically difficult.

Neural networks, perhaps key to real artificial intelligence, are also highly dynamic, relying on a very large number of simultaneous calculations before converging on an answer.

“At the core of these types of continuous problems are ordinary differential equations. Some number changes in time; an equation describes this change mathematically using calculus,” explains Yipeng Huang, a computer architecture PhD candidate working in the lab of Simha Sethumadhavan. “Computers in the 1950s were designed to solve ordinary differential equations. People took these equations that explained a physical phenomena and built computer circuits to describe them.”

“Analog” derives from “analogy” for the way the early computer pioneers approached computing: rather than decompose a problem into discrete steps, they set up a small-scale version of a physical problem and studied it that way. A wind tunnel is a (non-electronic) analog computer. An automobile or plane is placed inside, the wind tunnel is turned on, and researchers observe how the wind flows around the object, writing equations to describe what they see. By adjusting wind direction or speed, they can draw analogies from what happens in the tunnel to what would happen in the real world.

Electronic analog computers are the abstraction of this process, with circuits describing an equation that the computer solves directly and in real time. With no notion of a step, no need to translate between analog and digital (or power-hungry clock to keep everything in sync), analog is also much more energy efficient than digital.

This energy efficiency opens possibilities in robotics and is critical if small, lightweight robots are to operate in the field untethered from a power source. Though robots incorporate computing, they are unlike general-purpose computers that receive, operate on, and output mostly digital data. Robots might operate in an entirely analog context, receiving inputs from the environment and responding with an analog output such as when, sensing a solid surface, a robot reacts in a mechanical way to change direction. When inputs and outputs are both analog, translating from analog to digital and back to analog becomes only so much overhead. The same is true for cyber-physical systems (such as the smart grid, autonomous automobile systems, and the emerging Internet of things), where sensors continuously take readings of the physical environment.

Analog computing would seem to make sense but analog computers have major downsides; after all, there’s a reason they fell out of favor. Prone to error due to noise susceptibility, analog computers are not precise or accurate, and they are difficult to program.

In a project funded by the National Science Foundation, Huang has been working with Ning Guo, a PhD electrical engineering student advised by Yannis Tsividis, to design and build hardware to support analog computing. The project started five years ago with both researchers immersing themselves in the literature from the 50s. Says Guo, “Reading those old books, when we could even find them, helped us get inside how people used analog computing, the kinds of simulation they were able to run, how powerful analog computing was 50 years ago.”

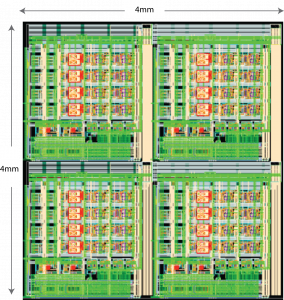

While most people picture analog computers as they were in the 1950s—big and heavy, difficult to use, and not as powerful as an iPhone—Huang and Guo’s analog hardware is not that. It is a chip made of silicon, measures 4mm by 4mm, has transistor features the size of 65nm, and has four copies of a design that can be copied over and over. It follows conventional architectures and has digital building blocks. The materials and the VLSI design are all up to the moment.

“The same forces that miniaturized digital computers, we applied here,” says Huang.

The architecture itself is conceived as a digital host with an analog accelerator; computations that are more efficiently done through analog computing get handed off by the host to the analog accelerator.

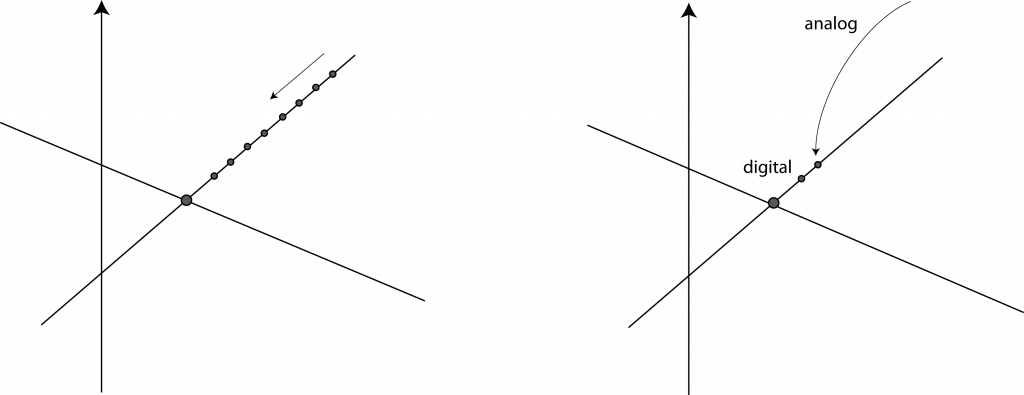

The idea is to interleave analog and digital processing within a single problem, applying each method according to what it does best. Analog processing for instance might be used to quickly produce an initial estimate, which is then fed to a digital processing component that iteratively homes in on the accurate answer. The high performance of the analog computing speeds the computation by skipping initial iterations, while the incremental digital approach zeroes in on the most accurate answer.
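The effect of seeding a digital iteration with a rough estimate can be sketched in a few lines. This is an illustration only: it assumes Jacobi iteration as the digital refinement step and stands in for the analog accelerator with a hand-written, slightly inaccurate guess. The matrix, the guess, and all names are hypothetical and do not come from the researchers' chip or papers.

```python
def jacobi(A, b, x0, tol=1e-10, max_iter=10_000):
    """Solve A x = b by Jacobi iteration; return (solution, iterations used)."""
    n = len(b)
    x = list(x0)
    for iteration in range(1, max_iter + 1):
        x_new = [
            (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)
        ]
        # Stop once successive iterates agree to within the tolerance.
        if max(abs(x_new[i] - x[i]) for i in range(n)) < tol:
            return x_new, iteration
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")

# Small diagonally dominant system whose exact solution is x = [1, 2, 3].
A = [[4.0, 1.0, 0.0],
     [1.0, 5.0, 2.0],
     [0.0, 2.0, 6.0]]
b = [6.0, 17.0, 22.0]

cold_start = [0.0, 0.0, 0.0]        # no prior knowledge
rough_estimate = [1.02, 1.97, 3.01] # stand-in for a low-precision analog result

cold_solution, cold_iters = jacobi(A, b, cold_start)
warm_solution, warm_iters = jacobi(A, b, rough_estimate)
print("iterations from zero start:    ", cold_iters)
print("iterations from rough estimate:", warm_iters)
```

Both runs converge to the same answer; the run that starts from the rough estimate needs fewer iterations, which is the saving the hybrid approach aims for.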

If hardware looked different in the 50s, so did the programming. Early programmers had to work both the physics and program the chip, a top-down approach at odds with the modern practice of using abstractions to relieve programmers of having to learn nitty-gritty hardware details. Here again, Huang adjusts analog practices to modern expectations, employing solvers that abstract the mathematics so a programmer need only describe the problem at a high level, while the program selects the appropriate solver.

The analog computations on the inside may be messy, but from a software perspective, a solver is just like any other software module that gets inserted at the appropriate place.

Among researchers, analog computing is drawing renewed interest for its energy efficiency and for being able to efficiently solve dynamic and other complex problems.

For broader acceptance, analog computing will have to prove itself by solving the far simpler linear equations that digital computers solve all the time. So easy are these problems to solve computationally that programmers go to great lengths to boil high-level problems down to linear algebra.

If analog computing can solve linear equations and do it faster than digital computers, others will be forced to take notice. So far, Huang and Guo’s linear algebra experiments using their hybrid chip show promise, achieving a performance 10 times better than what digital methods can do while consuming 1/3 less energy (Evaluation of an Analog Accelerator for Linear Algebra). There are caveats to be sure, but there are also opportunities to improve; as the door closes for future efficiency gains in the digital world, it is cracking open in the analog one, with major implications for future applications.

At this point, it’s all research and prototyping. Says Huang, “We’re just trying to show that analog computing works. True integration with digital requires many steps ahead. It has to scale, it has to be more accurate, but the immediate goal is to give concrete examples of problems solvable by analog computing, categorized by type and with all pitfalls spelled out. Many modern problems didn’t exist when analog computers were widespread and so haven’t yet been looked at on analog computers; we’re the first to do it.”

Focused on the future of computing, both Huang and Guo are quietly confident that analog integrated with digital computing will some day eclipse current forms of digital computing. Says Guo, “Robotics, artificial intelligence, ubiquitous sensing—whether for health monitoring, weather and climate data-gathering, electric and smart grids—may require low-power signal processing and computing with real-time data, and they will operate more smoothly and efficiently if computing is lightweight. Increasingly these future applications may be more and more the ones we come to rely on.”

Technical details are contained in two papers: Evaluation of an Analog Accelerator for Linear Algebra and Continuous-Time Hybrid Computing with Programmable Nonlinearities, and in “Energy-Efficient Hybrid Analog/Digital Approximate Computation in Continuous Time,” an article that appeared in July in the IEEE Journal of Solid-State Circuits.

Yipeng Huang received a B.S. degree in computer engineering in 2011, and M.S. and M.Phil. degrees in computer science in 2013 and 2015, respectively, all from Columbia University. He previously worked at Boeing, in the area of computational fluid dynamics and engineering geometry. In addition to analog computing applications, he researches performance and efficiency benchmarking of robotic systems. Advised by Simha Sethumadhavan, Huang expects to complete his PhD in 2017.

Ning Guo is finishing his PhD in electrical engineering under the guidance of Yannis Tsividis. He received a Bachelor’s Degree in IC design from Dalian University of Technology (Dalian, Liaoning, China) in 2010, before attending Columbia University, where he received first an MS in Electrical Engineering (2011) followed by an M.Phil. (2015). His research interests include ultra low-power analog/mixed-signal circuit design, analog/hybrid computation, and analog acceleration for numerical methods. He is also developing an interest in wearable technology and devices.

Posted: 11/22/2016, Linda Crane

Says Junfeng Yang, the paper’s senior author, “Shuffler instantly lets us build a stronger defense.”

It describes how to coordinate communication among ultra-low power devices (e.g., Internet of things) that must operate under stringent power constraints to maximize throughput rates among these devices.

The Office of Naval Research (ONR) has awarded a three-year, $2.5M grant to three Columbia University researchers to build a security architecture to protect cyber-physical systems (CPSs) from failures and cyber-attacks. Suman Jana will lead the research effort, working with Salvatore Stolfo and Simha Sethumadhavan, each of whom will oversee a particular aspect of the architecture. All three are Columbia University computer scientists working in the area of security.

The large grant amount reflects the growing need to harden CPSs. Similar to embedded systems, CPSs encompass a wide number and variety of devices and sensors as well as the underlying software, communications, and networks that link components. What distinguishes CPSs from embedded systems is the tight coupling between computing resources—the cyber space—and the physical environment. At the heart of CPSs are sensors that take readings of the physical environment and relay the data to other components that monitor this data or act on it in some way.

A large ship with steering and engine control, electric power, hydraulics, and other systems that respond to real-time input from the environment is a prime example of a CPS. Other systems that can qualify as CPSs—depending on the degree to which they merge the cyber and physical worlds—are the Internet of Things, smart grids, autonomous airline and automobile systems, medical monitoring, and robotics systems.

Securing CPSs poses special challenges. Says Jana, “CPS systems often contain a large number of diverse, resource-constrained, and physically distributed components. The resource-constrained nature of the components makes it harder to run heavyweight security software while the physically distributed nature makes it easier for the attacker to compromise a component by either damaging or replacing it with one of its own.”

Such a large, complex attack surface is one security concern; another is the redundancy built into CPSs to ensure that the failure of a single component does not affect critical systems; from a security perspective, this redundancy means having to secure not a single component but all other components that replicate its function.

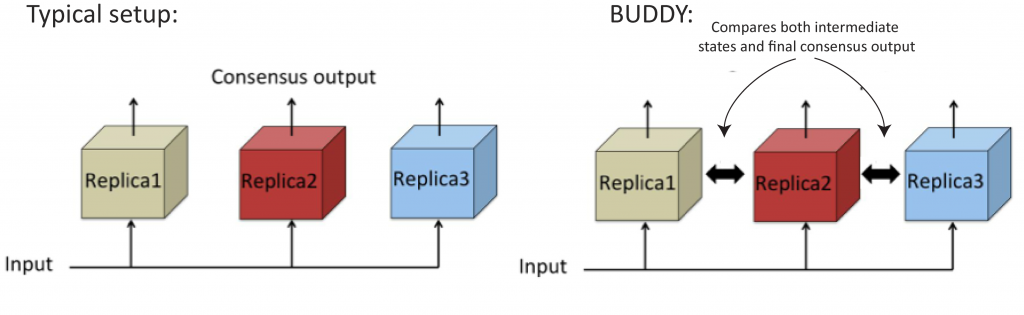

However, the redundancy meant for fault tolerance may also be key to preventing malicious attacks. With BUDDY, a security architecture being designed for CPSs, Jana and his colleagues propose leveraging existing redundancy to increase the amount of data shared among components so that, like buddies in a buddy system, components can more closely monitor one another for signs any are under attack. For example, rather than crosscheck only final results as is done now, BUDDY would randomly compare intermediate results as well, thus preventing sophisticated attacks that might otherwise avoid detection by making the output look correct.

More data, same structure. Rather than checking only final computations, BUDDY (randomly) compares intermediate computations among components to detect and report intrusions. This buddy approach works for checking run-time software and hardware processes and during software development to prevent bugs.
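The difference between checking only final results and sampling intermediate ones can be sketched as follows. This is a toy illustration, not BUDDY itself: the replicas, the running-sum computation, and the attacker model are all assumptions made for the example, and every function name is hypothetical.

```python
import random

def honest_replica(inputs):
    """Return the running sums of the inputs; the last entry is the final result."""
    trace, total = [], 0
    for value in inputs:
        total += value
        trace.append(total)
    return trace

def compromised_replica(inputs):
    """Hypothetical attacker: corrupts intermediate states but restores the final output."""
    trace = honest_replica(inputs)
    return [state + 1000 for state in trace[:-1]] + [trace[-1]]

def final_outputs_agree(traces):
    """Conventional crosscheck: compare only the final result of each replica."""
    return len({trace[-1] for trace in traces}) == 1

def sampled_states_agree(traces, sample_size, rng):
    """Compare a random sample of intermediate checkpoints across replicas."""
    last = len(traces[0]) - 1
    checkpoints = rng.sample(range(last), sample_size)
    return all(len({trace[i] for trace in traces}) == 1 for i in checkpoints)

inputs = [3, 1, 4, 1, 5, 9, 2, 6]
traces = [honest_replica(inputs), honest_replica(inputs), compromised_replica(inputs)]
rng = random.Random(0)  # fixed seed so the example is repeatable

print("final outputs agree:", final_outputs_agree(traces))
print("sampled intermediate states agree:", sampled_states_agree(traces, 3, rng))
```

In this toy setup the tampered replica passes the final-output check but is exposed as soon as any intermediate checkpoint is compared. A real attacker might corrupt only some states, which is where random sampling matters: the attacker cannot know in advance which checkpoints will be compared.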

Having components more closely monitor one another also distributes the security task, removing a single point of failure. Any attacker is faced with the difficult task of simultaneously attacking many components.

More data results from comparing many intermediate states of redundant components, and one key requirement of BUDDY, according to Jana, will be managing the data overhead without impairing performance. “By performing most checks asynchronously, without blocking the main execution path, computations will be able to proceed in parallel with the checks.”

Comparing intermediate computations is only one aspect of BUDDY, which will use redundancy across three levels of security: (1) runtime monitoring of software for malicious activity, (2) runtime monitoring of hardware to detect backdoors and supply chain attacks, and (3) detecting bugs during software development to prevent vulnerabilities that can later be exploited by attackers.

This last level is the responsibility of Jana, who will automatically extract error specifications by running multiple programs that provide similar functionality and observing the common ways the programs function. “As long as the majority of the redundant components are not buggy in the same way, one can compare their behaviors for the same inputs and infer the behavior demonstrated by the majority of components to be the correct specification. Specifications captured in this way can then be used to automatically find bugs.” It’s a scalable technique that frees developers from the tedious and error-prone task of manually writing specifications.
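The majority-vote idea in the quote above can be sketched in a few lines. This is an illustration under stated assumptions, not the project's actual tooling: the three "redundant implementations" are made-up functions that clamp a number to the range 0 to 100, and the helper names are hypothetical.

```python
from collections import Counter

def infer_spec(implementations, test_inputs):
    """Map each input to the behavior shown by a strict majority of implementations."""
    spec = {}
    for x in test_inputs:
        votes = Counter(impl(x) for impl in implementations)
        behavior, count = votes.most_common(1)[0]
        if count > len(implementations) / 2:   # keep only clear majorities
            spec[x] = behavior
    return spec

def find_deviations(impl, spec):
    """Return the inputs on which an implementation departs from the inferred spec."""
    return [x for x, expected in spec.items() if impl(x) != expected]

# Three hypothetical implementations of "clamp a value to the range 0..100".
def clamp_a(x):
    return max(0, min(100, x))

def clamp_b(x):
    if x < 0:
        return 0
    if x > 100:
        return 100
    return x

def clamp_c(x):
    return min(100, x)   # bug: forgets the lower bound

implementations = [clamp_a, clamp_b, clamp_c]
spec = infer_spec(implementations, [-5, 0, 50, 100, 150])
for impl in implementations:
    print(impl.__name__, "deviates on:", find_deviations(impl, spec))
```

No one wrote down the expected behavior by hand; it is inferred from what most implementations do, and the outlier is flagged automatically. As the quote notes, this only works while the majority of implementations are not buggy in the same way.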

While specification mining has not previously been used in CPSs, it fits in well with BUDDY’s overall approach of looking at existing redundancies for ways to insert new protections. Redundancy is both the means to build security mechanisms within a CPS and what makes it possible to deploy in such large, distributed systems. “The nice thing about leveraging redundancy in a CPS is that you don’t have to change the system that much to add security.” The redundancy needed for security is already there.

– Linda Crane

Posted 11/17/2016

Part 1: Liberal arts students and computer science. The flow of liberal arts students into computer science is forcing changes in the way computer science is being taught at Columbia.

College computer science classes are packed and overflowing. The trend is nationwide and especially strong at Columbia, which last year saw a 30% increase in the number of computer science majors (over five years, the increase is 230%). These increases don’t account for the number of students enrolling in computer science classes, many of them liberal arts students with little intention of majoring in the field and who even five years ago would not have considered taking a computer science class. But as computational methods turn texts, images, and even video into data analyzable by computer, more students see computing skills as important in their fields. It all adds up to surging enrollments and more diverse classes. Hiring more faculty addresses the numbers, but the different backgrounds and expectations students bring to the classroom are forcing Columbia to find new ways to teach computer science while addressing broader questions of how computer science fits in with other disciplines.

Enrollments in computer science classes are surging. All US universities and colleges are seeing it, and most are on a tear to hire additional faculty to teach computer science, competing with one another to do so. While previous surges in interest in computer science eventually faltered—the first in the 80s as PCs came onto the scene, and another in the 90s preceding the dot.com bubble—this latest wave of students looks to last. Driven not only by majors seeking jobs, the current generation is made up also of students from across the university; in the lead are liberal arts students studying humanities—literature, art, and history—and those studying the social and natural sciences of biology, physics, and chemistry.

Why? Because almost every field is being transformed by data, and data is everywhere. As machine learning and data mining techniques extract meaning from unstructured texts, images, audio recordings, and even video, almost every liberal arts discipline is seeing a fundamental shift from purely qualitative analysis to quantitative analysis. The changes are profound.

If social scientists once assembled small focus groups, today they can mine millions of social media posts. English majors once limited to reading a handful of novels and drawing conclusions from anecdotal evidence today can use topic modeling techniques to analyze large collections of 19th century novels, looking for broad patterns amidst representative data. Linguistics majors who once needed to speak several languages to compare grammatical structures can today apply computational modeling techniques across any number of languages. Biology too is being radically altered by computational methods that make possible the analysis of large genomic data sets.

The story is the same across the liberal arts: Those who have computational skills to sort through large-scale data can look at their fields more broadly and from new perspectives.

Julia Hirschberg, chair of the Computer Science department, has a PhD in computer science; it is her second PhD. The first is in sixteenth-century Mexican social history.

While an assistant professor in history at Smith College, Hirschberg was creating a socio-economic map of the city of Puebla de los Angeles for the years 1531-1560, correlating where settlers lived within the city according to familial relationships and economic and other factors. The records (land grants and baptismal and notarial records), however, were incomplete, and the multi-dimensionality of the data precluded her from easily visualizing the problem as a graph or map. A computer science friend recognized it as an artificial intelligence problem and put Hirschberg in touch with someone who had written software for a similar problem with 14th century French land holdings. Hirschberg worked initially with a student to adapt the code and ended up learning to program so she could finish the code herself. Fascinated with programming, she took a semester off to take computer science classes at the University of Pennsylvania. Once back at Smith she took math classes and applied to UPenn for an MS degree. Taking some NLP and linguistics classes convinced her to get her PhD in computer science.

Computer skills are critical, and students—majors and nonmajors alike—are signing up for computer science classes in numbers never seen before. Says Julia Hirschberg, chair of Columbia’s Computer Science Department, “We are very happy to see more and more nonmajors taking an interest in computer science as computer science becomes more and more relevant to so many other disciplines.”

For her department, it means hiring more teaching-oriented faculty (three new lecturers this year so far), adding more sections and teaching assistants, and adopting the flipped classroom approach to accommodate more students.

The real challenge is the diversity represented by liberal arts students. Social science, biology, business, and economics all require different computational proficiencies. The students themselves are diverse. Compared to computer science majors, liberal arts students have different backgrounds and varying levels of previous exposure to computer science; some may have none at all. As a result, they may harbor misconceptions about what computer science entails or what they need to know, or they may feel out of place in a large class with students who have been confidently programming for years.

This diversity is forcing computer science departments to rethink how they teach computer science. At Columbia, the challenge is to make computer science relevant to all students while maintaining a high level of rigor so students have the computational tools they need to effect changes in their own fields.

While students often take computer science to gain practical computing and programming skills, at its core, computer science is much more than coding; it is about structuring a problem into individual component parts that can be solved by computer and then representing those components formally and applying algorithms to solve them. This way of decomposing a larger task entails an explicitness of thought and critical thinking that is by itself a powerful method of organizing and analyzing information.

It also requires thinking about a problem in the abstract, stripping away the unimportant details to focus on the essential aspects of a problem, to see perhaps analogies between a problem in one domain and a seemingly unrelated problem in another.

Computational thinking is the bread and butter of computer scientists, and for many students, computational thinking is key to “getting” computer science and understanding how it fits with their interests.

To help students take a more expansive and comprehensive view of computer science and to expose them to its many subfields, the department offers the Emerging Scholars Program, a noncoding, once-a-week seminar focused particularly on the collaborative aspects of computer science. Students work in small, peer-led groups and discuss together algorithmic solutions to a set of problems. There is no grading or homework; the focus is entirely on the high-level aspects of computer science. It’s an approach that has been effective in increasing the variety of students who pursue a degree in computer science.

In computer science perhaps more than in any other discipline, the best way to learn is by doing. Projects are what get students excited and help them imagine the possibilities of using computational skills in their own classes.

What projects are most instructive depends of course on the student’s own interest. Projects designed for majors might not make sense to liberal arts students, or even engineering majors. For this reason, Columbia has long offered introductory classes geared for specific groups of students. Alongside a general introduction to computer science (1004) are specific ones for engineers (1006) and for social science and other students interested in data processing (1005). While all teach the same basic computer science skills, they differ by programming language—Java for majors, Python for engineers, MATLAB for data processing students—and the types of projects offered.

Computing in Context (1002) is computer science reimagined for liberal arts students. The class is the brainchild of Adam Cannon, who has been teaching introductory computer science for 15 years and has seen the number of nonmajors in his classes climb steadily. Recognizing that classes and projects meant for computer science majors lacked context for students whose next class might be a lecture on Jane Austen or the evolution of liberation theology in Latin America, Cannon radically altered the class structure to accommodate the different computational proficiencies required by different liberal arts disciplines.

Rather than by a single professor, Computing in Context is taught by a team of professors. A computer science professor lectures to all students on basic computer and programming skills, and professors in the humanities, social sciences, and other departments show through lectures (some live, some recorded) and projects how those skills and methods apply to a specific liberal arts discipline, or track. All students sit in on lectures on computer science and programming, but then break into different groups for the track lectures. Two students on different tracks might have completely different experiences.

It’s a modular format designed to scale as more departments look to insert computer science into their students’ curriculum. The class debuted in 2015 with three tracks: social science, digital humanities, and economics and finance, and has since added a fourth to teach computer science in the context of public policy. More tracks are in the planning stage.

Says Hirschberg, “Computing in Context is a way for us to work with collaborators from disciplines as divergent as English, History, the Department of Industrial Engineering & Operations Research, and now international affairs, and teach students ‘real’ computer science in the context of problems in their own discipline.”

Suzen Fylke came late to computer science, taking Computing in Context in her last semester as a senior. She had more than once signed up for introductory computer science classes, but never followed through. “I didn’t feel programming was for me, so the regular class was a little intimidating. Computing in Context offered an easier entry point since half was analysis on topics familiar to me. Maybe I wouldn’t be good at the computer science part, but I knew I could do the analysis part.” For her the class was life changing. “I always wanted to do linguistics. In my free time I was testing different language apps and looking for ways to make language learning easier. While taking Computing in Context, I started realizing different ways of manipulating text, that analyzing a text could be so much easier by computer or by writing a computer program. It came together for me in a way that was both fun and practical.” After graduating with a degree in American Studies, Fylke enrolled the next fall at Hunter College to pursue a degree in Computational Linguistics.

The entry of computer science into the liberal arts is still relatively new, but is already responsible for many technological advances: computational statistical methods applied to linguistics have made accurate and reliable automatic speech recognition possible; network theory applied to social science helps map societal relations and interactions; computational methods for studying the behavior of systems also illustrate how complex biological interactions arise from collections of cells.

Jenny Ni took her first computer science class as a junior, a bit late for someone planning to double major in computer science and archaeology. Then again, studying computational archaeology—a field she had not known existed—wasn’t the original plan. But the connection between computation and archaeology soon became obvious as she constantly encountered references to computer programs developed for archaeologists; many were for analyzing GIS and drone data and analyzing images for human habitation.

Now taking data structures, she is embracing computer science. “I liked the logic, and I saw a lot of comparisons between archaeology and computer science in a way I didn’t expect. Lévi-Strauss’s theory of structuralism, where every object is defined by what it is not, can be visualized as a tree of binary choices: raw/cooked, light/dark, yes/no.” She hopes that she will be able to incorporate computing skills into her senior thesis. “My adviser is working on a rock art project in the Southwest and there is a possibility that drones may be used in mapping the area. I hope to work with drone data in possibly building programs that can handle and organize the data efficiently so that others can search for information they need.”

Liberal arts students have a unique vantage point from which to imagine what innovations might come next, and computer scientists are increasingly looking to connect with those in the liberal arts to find problems solvable through computational methods.

Discovering innovations isn’t the only reason more liberal arts students should study computer science; as researchers focus intently on technological advances, others must critically examine whether the outcomes are desirable. Advances in artificial intelligence, ubiquitous sensing, and precision medicine—to name only a few—are outpacing people’s ability to understand the legal, social, and ethical implications. Self-driving cars, robots that cause injury or put people out of work, and privacy in an age of mass surveillance are all complex issues that require computer-literate lawyers, policy-makers, philosophers, educators, consumer advocates, and ethicists.

Laura Zhang, a philosophy major now in law school, first encountered the world of computer science while helping out at a friend’s startup. She was immediately drawn to the rigorous analysis required for the field, something she missed from her undergrad days studying formal logic and building proofs. As an intern at a legal technology startup, she learned enough Python on the job to contribute simple software components for a program to analyze law firm contracts. She found it tremendous fun. Now enrolled in a data structures class (covered in her law school tuition), she wants to carve out a role advising technology startups, helping them grow while giving business strategy advice from the legal perspective.

“How a lawyer thinks and solves problems is very different from how an engineer thinks and solves problems, and a lot of miscommunication arises when the two sides are not speaking the same language. I feel I could contribute by making programming analogies to explain legal content, and help evaluate the legal risks or difficulty of going down a particular technology path. Best career roles are interdisciplinary. I’m not exactly sure how my skills will fit together, but I know I’m interested in computer science.”

It is those outside computer science who have called attention to algorithms that absorb biases and older stereotypes latent in the data or in the programmers themselves. As computers become ever more embedded in all aspects of life, it becomes increasingly important that students from all fields of study understand the basics of computational methods.

It’s not too much of a stretch to imagine that 90% of all nonmajors might someday enroll in at least one rigorous computer science class. As incoming liberal arts students and those in the social and natural sciences see the inroads computational methods are making in their fields, developing computer science skills may seem less revolutionary and more and more the smart thing to do. The current surge may soon be a new baseline, and the new reality for computer science departments.

Part 2: Women and computer science

– Daniel Bauer and Linda Crane

Posted: 11/9/2016

Study coauthored by David Blei and published in Nature Genetics describes using TeraStructure, an algorithm capable of analyzing up to 1 million genomes, to infer ancestral makeup of 10,000 individuals.