|

I am a PhD student in EECS at the Massachusetts Institute of Technology, where I am part of the Embodied Intelligence collective in CSAIL; I am fortunate to be advised by Prof. Phillip Isola and Prof. Vincent Sitzmann. In short, I am an AI researcher. More specifically, I am interested in developing machine learning algorithms that enable machines to perceive and act in the open 3D world. I believe that such algorithms should capitalize on the rich intrinsic structures available in the 3D world and have a modular and robust understanding of dynamics. Since such algorithms are most useful to embodied agents, I believe that these properties should emerge from structures available through embodiment. To this end, my research often lies at the intersection of machine learning, computer vision, natural language, and robotics. Previously, I was an undergraduate and later a research associate (Staff Associate 1) at Columbia University, where I graduated from the Fu Foundation Engineering school with a B.S. in Computer Science (magna cum laude), focusing on intelligent systems for computer vision and robotics. At Columbia, I was immensely fortunate to be advised by Prof. Carl Vondrick as part of CV Lab, where I was first introduced to AI research. I also spent a wonderful summer as a machine learning intern at Toyota Research Institute. Email / |

|

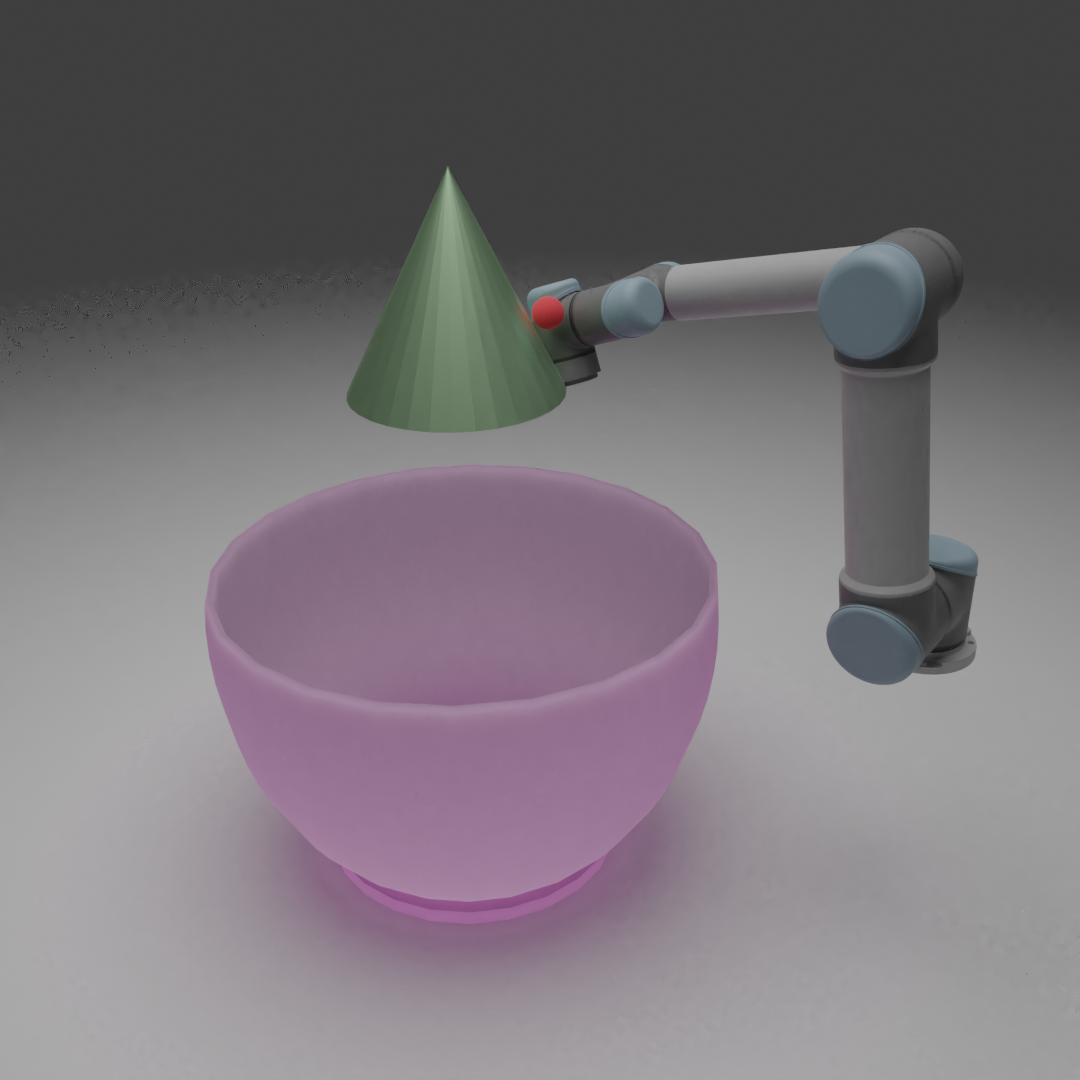

Ishaan Chandratreya, Huy Ha, Simon Stent, Pavel Tokmakov, Shuran Song, Carl Vondrick In Submission arXiv coming soon! Object trajectories in a physical world often have chaotic properties as a result of the stochastic nature of the environment. Modeling the dynamics of even simple objects in such worlds is challenging because, while parts of the motion (e.g., during free fall or motion at low velocities) are still highly predictable, the trajectories may have bifurcation points (e.g., during contact) that make the motion unpredictable very quickly. We introduce a model for predicting the motion of a rigid, dynamic object in static environments that exhibit these properties of the physical world. By using a simple but effective architecture that conditions on point clouds of the scene, we show that it is possible to learn a 3D model for contact that generalizes across environments. We further show that our model is actionable and can be used for a downstream application of predictive models in uncertain environments: robotic planning for moving objects. |

|

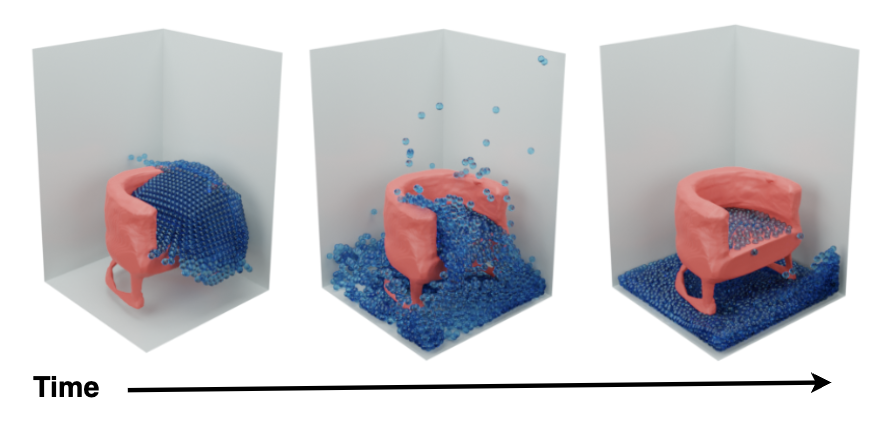

Arjun Mani*, Ishaan Chandratreya*, Elliot Creager, Carl Vondrick, Richard Zemel In Submission arXiv / Website Accurate simulation of fluids is vital for designing 3D objects, tools, and structures across engineering applications. Recently, graph neural networks have been employed to learn fast and differentiable fluid simulators, suitable for solving inverse problems using gradient-based optimization. For this optimization to be useful in design contexts, however, learned simulators must be capable of accurately modeling how fluids interact with genuinely novel objects not seen during training. To promote this type of generalization, we introduce a framework that represents objects implicitly using signed distance functions (SDFs), rather than the usual explicit representation as a collection of meshes or particles. |

|

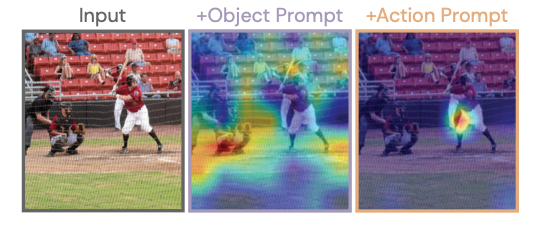

Sachit Menon*, Ishaan Chandratreya*, Carl Vondrick In Submission arXiv We conduct an in-depth exploration of the CLIP model, and find that the representation produced for a given image tends to be strongly biased towards features for a particular task over others, primarily containing information selectively relevant to that task. Moreover, which task a particular image will be biased towards is unpredictable, with little consistency across images; for instance, strongly preferring text features when an image has small watermark text, object features when there is no prominent object, and more. We call this tendency task bias, and construct a dataset of images where each image has labels for multiple semantic recognition tasks to evaluate its prevalence. To resolve this task bias, we aim to provide tools that allow a user to guide the representation towards features relevant to their task of interest. |

|

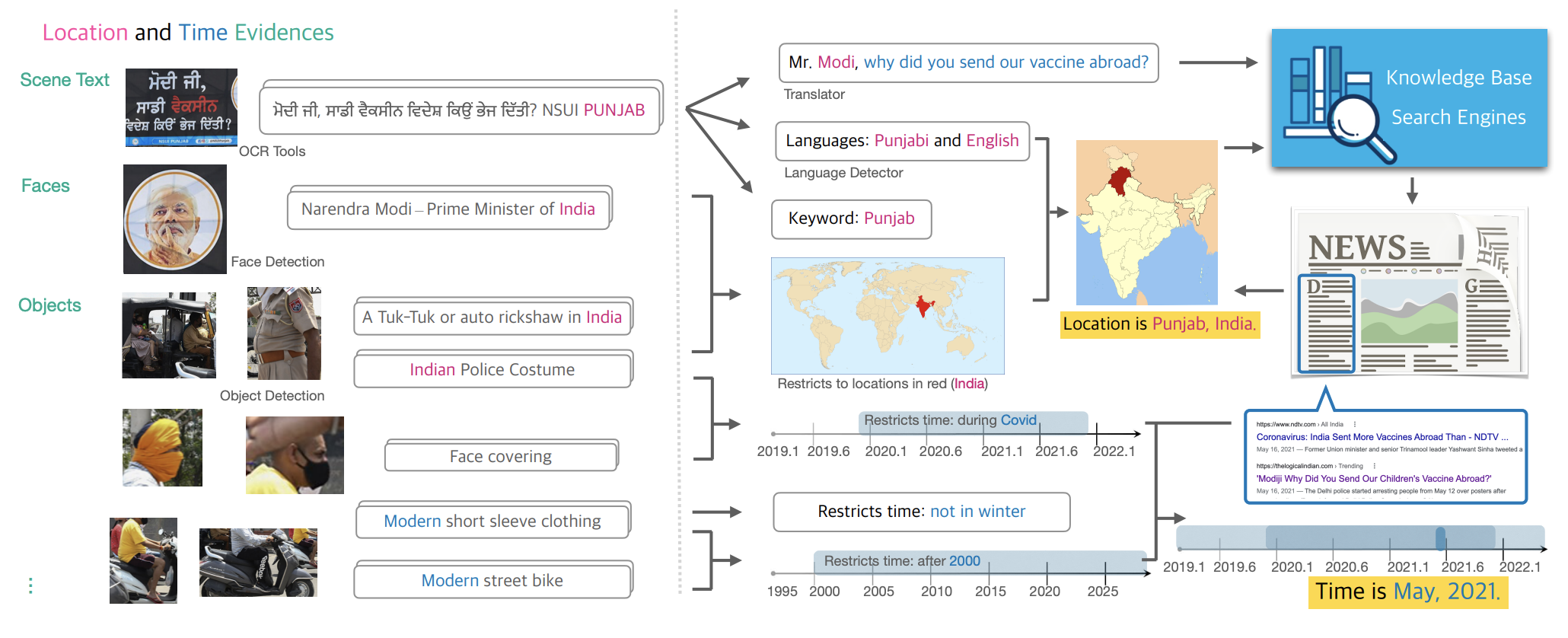

Xingyu Fu, Ben Zhou*, Ishaan Chandratreya*, Carl Vondrick, Dan Roth ACL 2022 (Oral) arXiv / Code+Models In this work, we formulate this problem and introduce TARA: a dataset of 16k images with their associated news, time, and location automatically extracted from the New York Times (NYT), and an additional 61k examples as distant supervision from WIT. We show that there exists a 70% gap between a state-of-the-art joint model and human performance, which is slightly narrowed by our proposed model that uses segment-wise reasoning, motivating higher-level vision-language joint models that can conduct open-ended reasoning with world knowledge. |

|

Boyuan Chen, Kuang Huang, Sunand Raghupathi, Ishaan Chandratreya, Qiang Du, Hod Lipson Nature Computational Science Project Page / arXiv / Video / Code+Models Most data-driven methods for modeling physical phenomena still assume that observed data streams already correspond to relevant state variables. A key challenge is to identify the possible sets of state variables from scratch, given only high-dimensional observational data. Here we propose a new principle for determining how many state variables an observed system is likely to have. |

|

At Columbia, I've completed some further exploratory projects, either independently or as part of a course. You can find some of my key projects here. |

|

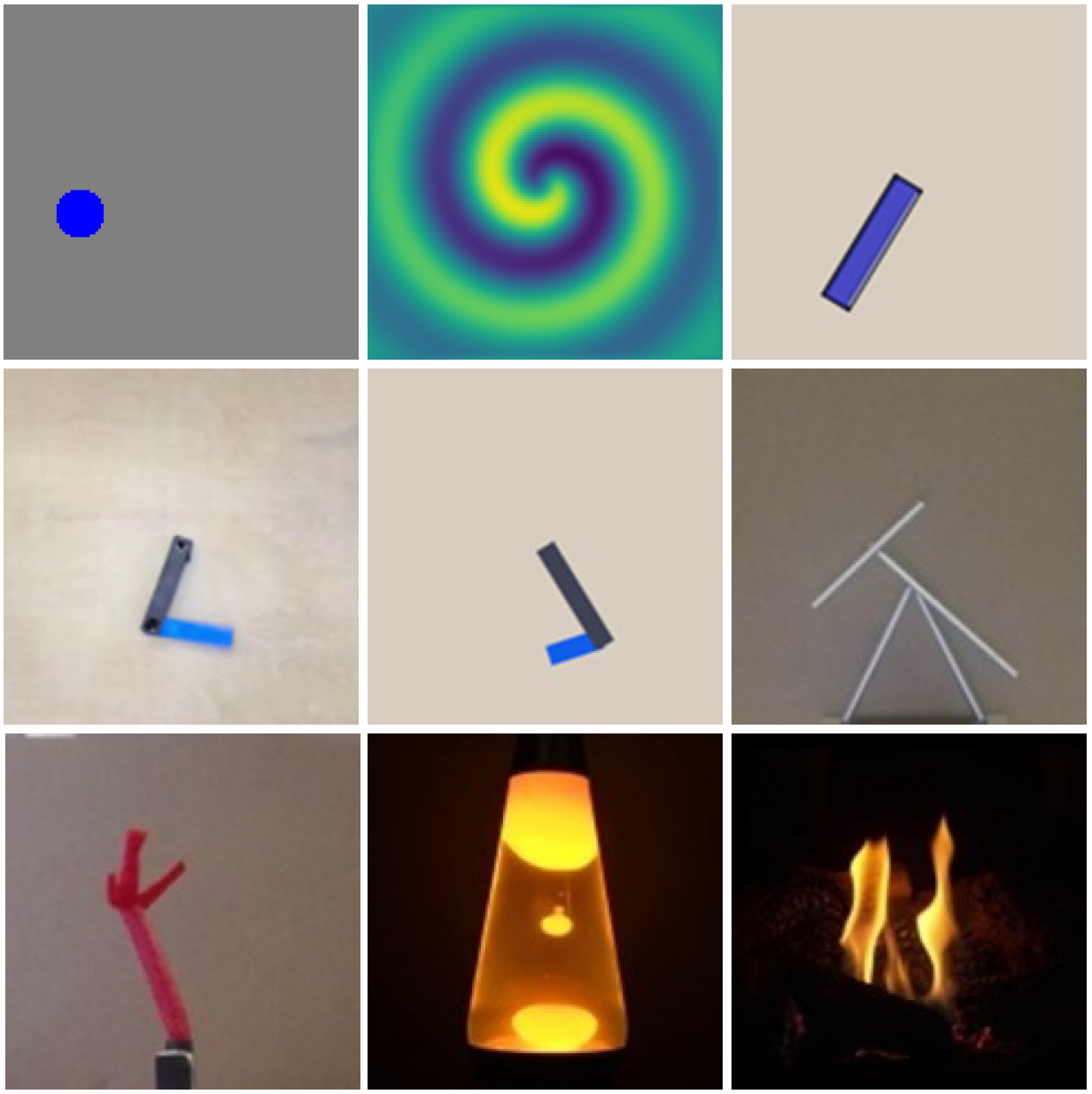

Ishaan Chandratreya Independent Project, Summer 2021 Code PHYRE is a benchmark for physical reasoning which involves placing a single object inside a 2D scene to achieve some goal. As such, an action in PHYRE is taken only at the beginning. We extend PHYRE to a continuous-control setting, and introduce a Gym interface for it. We then test the success of several "world models" in building representations of the dynamics inside PHYRE scenes. |

|

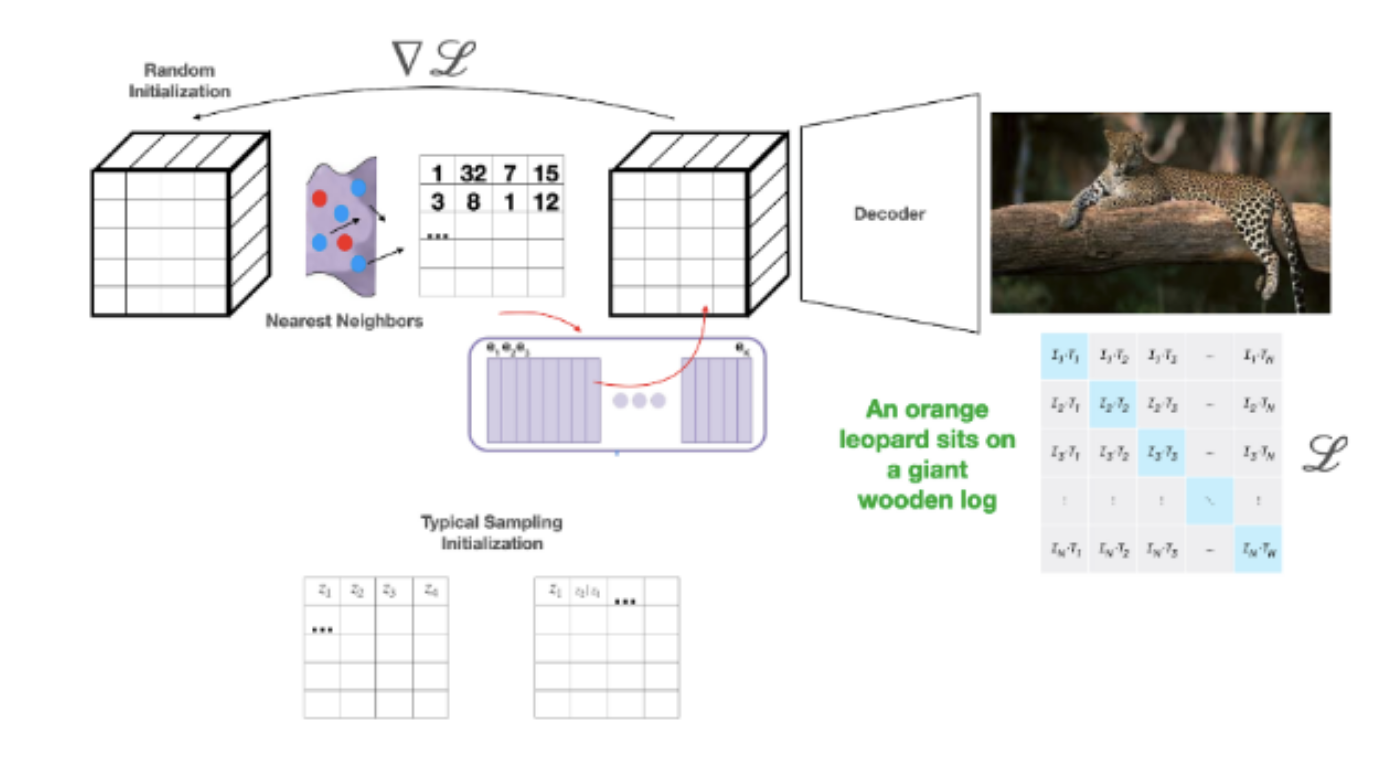

Ishaan Chandratreya Self Supervised Learning, Spring 2022 (Prof. Richard Zemel) Many methods seek to better understand the latent space of image synthesis models (e.g., VAEs, GANs) in order to dissect the generative process and to enable easy latent space search: the task of navigating the latent space of a model such that the image it produces matches some preconceived notion. It is not obvious how to carry search methods for continuous latent spaces over to autoencoders that have discrete latent spaces. In this paper, we provide an empirical analysis of the latent space of Vector Quantized Variational Autoencoders (VQVAE), and propose a framework to extend continuous distribution search to the latent space of discrete models. |

|

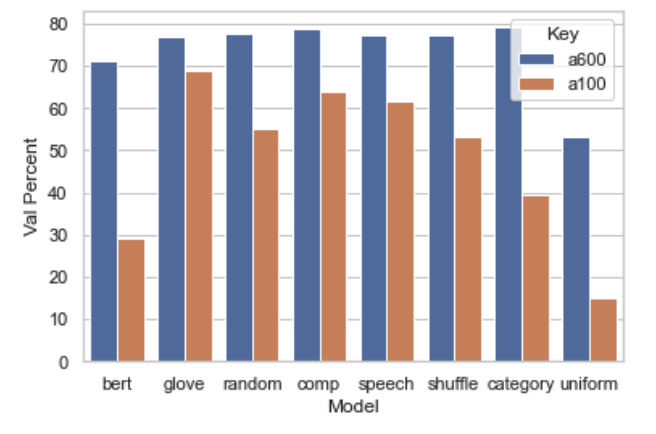

Ishaan Chandratreya, Katon Luaces Unsupervised Machine Learning, Fall 2021 (Prof. Nakul Verma) Poster We investigate how using a range of unsupervised methods to organize the space in which the label representations reside affects the performance and training dynamics of a supervised image classification task. We present a range of possibilities to learn a "label" space prior to image classification, including imposing ordinal constraints and geometric inductive biases. |

|

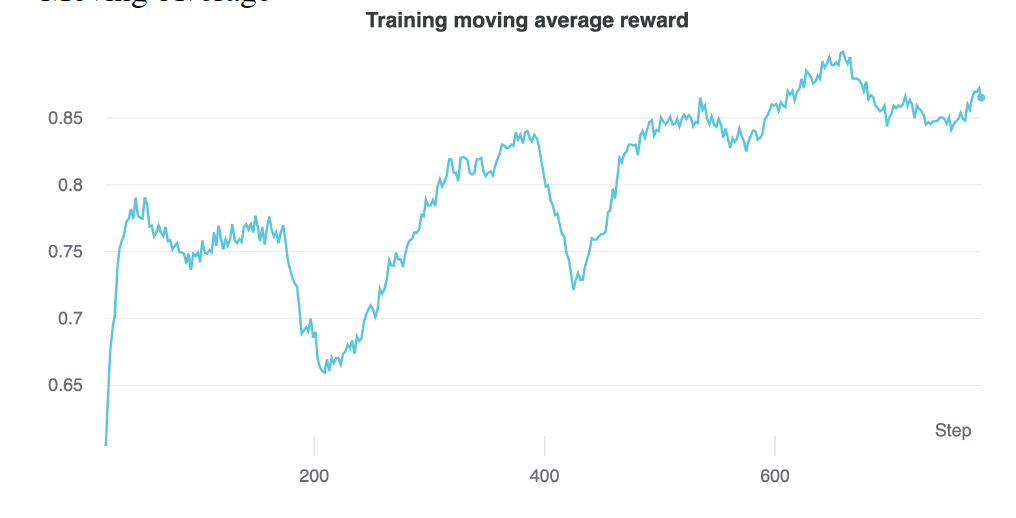

Ishaan Chandratreya Reinforcement Learning: Theory and Applications, Spring 2021 (Prof. Shipra Agrawal) Report Selecting cuts in cutting-plane methods for solving integer programming (IP) problems can be framed as a Markov Decision Process, and hence the policy for selecting these cuts can be learnt using policy gradient methods. |

|

At Columbia, I've assisted classes that cover the theoretical and applied aspects of building intelligent systems. I am fortunate to have Prof. Nakul Verma as my guide here. |

|

The links to the personal part of my website are under construction, but will hopefully be updated soon! In my free time, I like to read, travel, cook, watch sports, and play racket sports and games of incomplete information. I am also a PADI-certified Advanced Open Water Diver, and like to use my vacation time to scuba dive around the world. |

|

Credits to Jon Barron for the website template.

|