
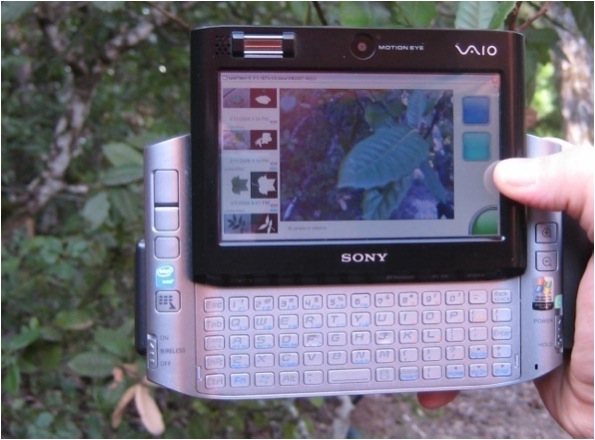

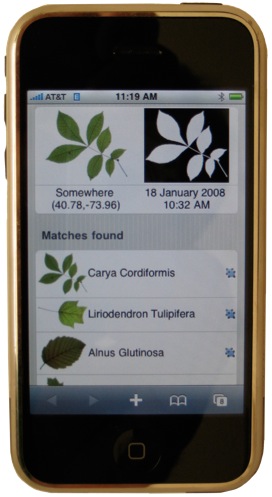

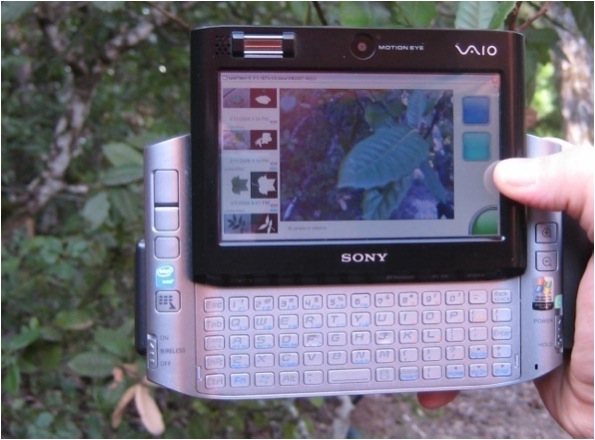

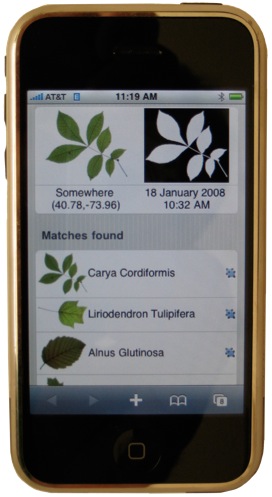

LeafView UMPC and iPhone is an ongoing project to explore additional interfaces and form factors, such as mobile phones and Ultra Mobile PCs, for the Electronic Field Guide, described in more detail below. By offering smaller, more mobile form factors, we hope to bring botanical species identification to a wider population. The system is based on our original LeafView interface to the Electronic Field Guide.

Project Web Site | New York Times Article


Shape Identification on the Microsoft Surface. Surfaces pose a variety of new challenges for user interfaces that must capture, match, and interact with shapes such as leaves. We are experimenting with techniques and algorithms that support natural interaction with shape identification: placing a leaf directly on the surface initiates shape recognition, and the resulting images of matching species can then be directly manipulated for comparison and inspection.



Site

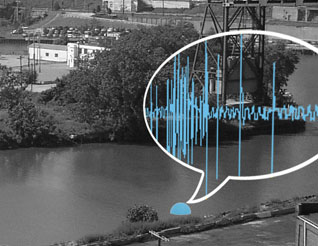

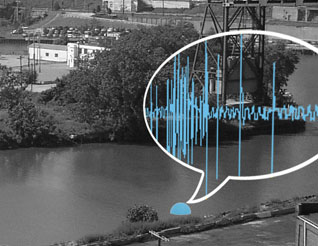

Visit by Situated Visualization. We are exploring innovative ways to use visualization in urban

design and urban planning site visits. The project explores situated visualization

as the emergent product of the progress through site, data, and

perception. For example, visualizing geocoded CO data in the physical world (image on left). In collaboration with faculty from the Graduate School of Architecture, Planning, and Preservation, we are developing prototypes for the Manhattanville

site in New York City using augmented reality techniques. These

ideas were initially explored during Spring 2007 and 2008 classes on 3DUI and at a CHI 2008 workshop. Our ongoing research expands situated visualization techniques developed in a prototype, called SiteLens.

CHI 2009 | CHI 2008 workshop poster and abstract


Shake Menus. Menus play an important role in both information presentation and system control. We explore the design space of shake menus, which are intended for use in tangible augmented reality. Shake menus are radial menus that are displayed centered on a physical object and activated by shaking that object. We present several applications for situated visualization and in-situ authoring. The coordinate system in which a menu is presented is of particular interest: we conducted a within-subjects user study to compare the speed and efficacy of several alternative methods for presenting shake menus in augmented reality.

ISMAR 2009
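The two ingredients of a shake menu, detecting the shake and laying out the radial menu around the object, can be sketched in a few lines. This is a minimal illustration, not the implementation used in the study. It assumes the tracker reports the object's position along one axis as a list of samples, treats a shake as several back-and-forth swings larger than a threshold, and places menu items evenly on a circle around the object's position. The names `count_swings`, `is_shake`, and `radial_layout` and the default thresholds are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def count_swings(xs: Sequence[float], min_amplitude: float) -> int:
    """Count completed swings of at least min_amplitude in a 1-D position trace."""
    if len(xs) < 2:
        return 0
    swings = 0
    anchor = peak = xs[0]  # start of the current swing and farthest point reached
    direction = 0          # +1 or -1 once a swing has started, 0 before that
    for x in xs[1:]:
        if direction == 0:
            # Wait until the object has moved far enough to count as a swing.
            if abs(x - anchor) >= min_amplitude:
                direction = 1 if x > anchor else -1
                peak = x
        elif (x - peak) * direction > 0:
            peak = x  # still moving the same way: extend the current swing
        elif abs(x - peak) >= min_amplitude:
            # Reversed far enough: the swing anchor -> peak is complete.
            swings += 1
            anchor, peak, direction = peak, x, -direction
    return swings


def is_shake(xs: Sequence[float], min_amplitude: float = 0.02, min_swings: int = 4) -> bool:
    """Hypothetical activation rule: enough large swings within the sample window."""
    return count_swings(xs, min_amplitude) >= min_swings


def radial_layout(center: Tuple[float, float], n_items: int, radius: float) -> List[Tuple[float, float]]:
    """Place n_items evenly on a circle around the object, clockwise from 12 o'clock (y up)."""
    cx, cy = center
    positions = []
    for i in range(n_items):
        angle = math.pi / 2 - 2 * math.pi * i / n_items
        positions.append((cx + radius * math.cos(angle), cy + radius * math.sin(angle)))
    return positions


trace = [0.0, 0.05, 0.0, 0.05, 0.0, 0.05, 0.0]
print(is_shake(trace))  # True
```

Counting reversals that exceed an amplitude threshold, rather than using raw speed, keeps slow drift and small tracking jitter from opening the menu.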


Crooked River Song Lines. At the Cleveland Ingenuity Festival 2008, in conjunction with Spurse and David Jensenius, we explored coauthoring with, and giving voice to, the Cuyahoga River and its multiple cultural, social, and physical histories. Sensors were placed at 11 locations along the river to gather sounds, which were then composed with stories about the river and played back using transmitters at the festival. Moving through the festival mirrored movement through the virtual space of the river. At the same time, a buoy progressed down the river, its geolocated path reflected through images drawn from historical archives. As a third way of experiencing the river, participants could call into a shared audio space that reflected listening stations along the river.

Project web site


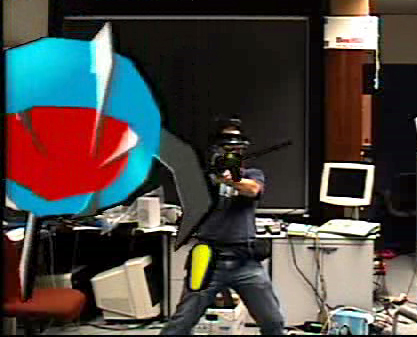

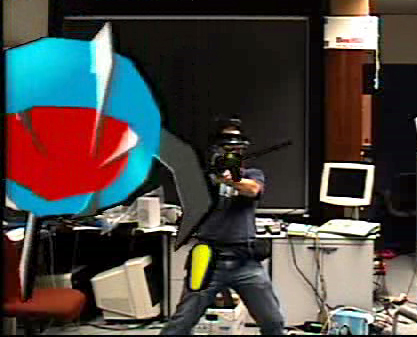

Visual Hints. In the context of tangible augmented reality, we are investigating and evaluating a technique called visual hints, which are graphical representations in augmented reality of potential actions and their consequences in the augmented physical world. Visual hints enable discovery, learning, and completion of gestures and manipulations in tangible augmented reality. The example image on the left shows a ghosted visual hint of a reeling gesture.

ISMAR 2007 | Video


LeafView is our initial prototype tablet-based user interface to the Electronic Field Guide. It has been field tested on Plummers Island with Smithsonian Institution botanists and at the National Geographic Rock Creek Bioblitz. The system incorporates a wireless camera that sends images to the tablet, where they are identified using a computer vision algorithm. Context information such as GPS location is stored with each image and its species identification results. Results are displayed in a zoomable user interface. We are currently iterating on a new portable prototype.

Taxon 2006 | CHI 2007 | ECCV 2008 | Video
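Storing context with each image and its identification results amounts to keeping one record per capture. The sketch below is hypothetical: the `Observation` class, its fields, and the assumption that a higher score means a closer match are illustrative choices, not LeafView's actual data model.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class Observation:
    """Hypothetical record pairing a captured leaf image with its context and results."""
    image_path: str
    captured_at: datetime
    latitude: Optional[float] = None   # GPS context, if a fix was available
    longitude: Optional[float] = None
    matches: List[Tuple[str, float]] = field(default_factory=list)

    def set_results(self, scored: List[Tuple[str, float]]) -> None:
        # Store results best-first (assumes a higher score means a closer match).
        self.matches = sorted(scored, key=lambda m: m[1], reverse=True)

    def top(self, k: int = 5) -> List[Tuple[str, float]]:
        # The k best candidates, e.g. for the first level of a zoomable view.
        return self.matches[:k]


obs = Observation("leaf_0001.jpg", datetime(2008, 5, 1, 10, 30), latitude=38.97, longitude=-77.17)
obs.set_results([("species B", 0.42), ("species A", 0.91), ("species C", 0.10)])
print(obs.top(2))  # [('species A', 0.91), ('species B', 0.42)]
```

The file name, coordinates, and species labels above are placeholder inputs, not field data.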


Augmented Reality Electronic Field Guides. In collaboration with UMD and the Smithsonian Institution, we are developing a mobile augmented reality electronic field guide to assist field botanists in identifying and collecting plant specimens. The system uses computer vision to identify leaves, and the user interface is provided by the mobile AR system. We have developed both tangible augmented reality and head-movement-controlled AR user interface techniques.

3DUI 2006 | 3DUI Video | XNA Video | Video at Games for Learning Institute | FORA.tv talk


Botica is an augmented reality game developed by Marc Eaddy to test the original Goblin v1 augmented reality 3D gaming infrastructure. I contributed to Goblin v1 and to the excellent revised Goblin XNA being developed by Ohan Oda, as well as to the concept design for the AR Racing game.

Goblin v1 poster | Botica avi | INTETAIN 2008 | AR Racing video


I wrote a cosmological visualizer for the "Fat of the Land" exhibit at Grand Arts 2007 with the artist collective spurse. The system reads in text from speech or other sources and turns the words into vectors across the canvas. Where vectors cross, a point in a constellation is created. The constellation then feeds into a larger interpretation that generates origami-like cosmologies.
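The step where crossing vectors become constellation points amounts to pairwise line-segment intersection. The sketch below uses the standard parametric test (two segments cross where both parameters t and u fall in [0, 1]). The `word_to_segment` mapping is entirely hypothetical, since the exhibit's actual mapping from words to vectors is not described here, and the function names are illustrative.

```python
import math
from itertools import combinations
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def word_to_segment(word: str, width: float, height: float) -> Segment:
    """Hypothetical mapping from a word to a vector on a width x height canvas."""
    codes = [(ord(c) - ord("a")) % 26 for c in word.lower()]
    x0 = codes[0] / 25 * width    # first letter picks the start x
    y0 = codes[-1] / 25 * height  # last letter picks the start y
    angle = math.radians(sum(codes) % 360)                  # letter sum picks the direction
    length = min(len(word) / 10, 1.0) * min(width, height)  # longer words reach farther
    return ((x0, y0), (x0 + length * math.cos(angle), y0 + length * math.sin(angle)))


def intersect(s1: Segment, s2: Segment) -> Optional[Point]:
    """Return the crossing point of two segments, or None if they do not cross."""
    (x1, y1), (x2, y2) = s1
    (x3, y3), (x4, y4) = s2
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if denom == 0:
        return None  # parallel or collinear: no single crossing point
    t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / denom
    u = ((x1 - x3) * (y1 - y2) - (y1 - y3) * (x1 - x2)) / denom
    if 0 <= t <= 1 and 0 <= u <= 1:
        return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))
    return None


def constellation(words: Sequence[str], width: float = 800.0, height: float = 600.0) -> List[Point]:
    """Collect every crossing between the vectors made from the words."""
    segments = [word_to_segment(w, width, height) for w in words if w]
    points = []
    for a, b in combinations(segments, 2):
        p = intersect(a, b)
        if p is not None:
            points.append(p)
    return points
```

Checking every pair is quadratic in the number of words, which is fine for a spoken passage; a sweep-line algorithm would only matter for much larger texts.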


The SOBA Server is a streaming audio server based on the XESS XSB-300E FPGA board. The system encodes analog audio input into 16-bit samples and streams the digitized audio out the Ethernet port in RTP packets, ready to be played in real time by an RTP client anywhere on the Internet. The VHDL and other code are available in the write-up.
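For reference, the packet format involved can be sketched in software. This Python sketch is not the FPGA implementation; it builds RTP packets with the 12-byte fixed header defined in RFC 3550 and an L16 payload as defined in RFC 3551 (16-bit signed samples in network byte order). It assumes mono audio at 44.1 kHz, which corresponds to static payload type 11; the SOBA Server's actual sample rate and channel count are not stated here, and `rtp_packet` and `packetize` are hypothetical names.

```python
import struct
from typing import List, Sequence

RTP_VERSION = 2
PT_L16_MONO_44K = 11  # RFC 3551 static payload type: L16, 44.1 kHz, 1 channel


def rtp_packet(samples: Sequence[int], seq: int, timestamp: int, ssrc: int,
               payload_type: int = PT_L16_MONO_44K, marker: bool = False) -> bytes:
    """Build one RTP packet: 12-byte fixed header followed by big-endian 16-bit samples."""
    header = struct.pack(
        "!BBHII",
        RTP_VERSION << 6,                   # V=2, no padding, no extension, CSRC count 0
        (int(marker) << 7) | payload_type,  # marker bit and payload type
        seq & 0xFFFF,                       # 16-bit sequence number
        timestamp & 0xFFFFFFFF,             # 32-bit media timestamp
        ssrc & 0xFFFFFFFF,                  # 32-bit synchronization source id
    )
    payload = struct.pack(f"!{len(samples)}h", *samples)  # signed 16-bit, network order
    return header + payload


def packetize(samples: Sequence[int], samples_per_packet: int, ssrc: int,
              start_seq: int = 0, start_ts: int = 0) -> List[bytes]:
    """Split a sample stream into consecutive RTP packets."""
    packets = []
    seq, ts = start_seq, start_ts
    for i in range(0, len(samples), samples_per_packet):
        chunk = samples[i:i + samples_per_packet]
        packets.append(rtp_packet(chunk, seq, ts, ssrc))
        seq += 1
        ts += len(chunk)  # the timestamp advances by one per sampling instant
    return packets
```

Each packet would then typically be sent over UDP, for example with Python's `socket.sendto`; the increasing sequence numbers let the client detect loss and reordering, and the timestamps let it schedule playback.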


Multi-scale Fuel Cell Electrodes. In Jim Hone's Lab at Columbia University, I developed a number of systems for fabricating multiscale fuel cell electrodes based on a combination of Toray paper, electrospun carbon fibers, and carbon nanotubes. The image to the left shows carbon nanotubes grown on electrospun fiber on top of Toray paper. Characterization of the system was done in collaboration with Yuhao Sun in Scott Barton's Lab. A poster describing early results is here (2.5 MB), and an article describing the work from a biomimetic point of view in Ambidextrous Magazine is here (500 KB).


Ohmic Growth of Carbon Nanotubes. The image to the left shows carbon nanotubes grown on carbon paper. In the Hone Research Group at Columbia University, I developed a CVD-based system for growing carbon nanotubes that used ohmic heating instead of a thermal reactor. The goal of the project was to investigate this new technique and see whether the resulting carbon nanotube clusters were better suited for multiscale fuel cell support electrodes. Electrochemical and Solid-State Letters 2007.


Electrospinning Carbon Fibers. As part of the effort to develop multiscale fuel cell electrodes, I built a system to electrospin carbon fibers. The system used a 30 kV potential to extrude a fine jet of liquid that solidified in the air and formed a fiber mesh under the force of gravity. The fibers were typically 500 nm in diameter, and the mesh looked like a very fine fabric made from white spider silk. A brief tech report on the process is here.


Engineers Without Borders. Engineers Without Borders is a national organization that provides engineering solutions in developing parts of the world. I have been a mentor to a group from the Columbia University chapter that focuses on wind and solar energy. They are currently working on a wind project on the Lakota Reservation in South Dakota. A simple visualization of the data is here.


Porosity in Dye-Sensitized Solar Cells. Dye-sensitized solar cells (DSCs) are the product of biomimetic design with photosynthesis in mind. They hold the promise of inexpensive and environmentally benign solar energy conversion. In collaboration with Jessika Trancik and Adam Hurst, we experimented with creating bimodal porous structures in the titanium dioxide component of the DSC using polystyrene microspheres.


Audio Traveler. While backpacking through the Himalayas, India, and Southeast Asia, I wanted to meet other musicians and collect natural sounds and collaborations. In the process, I made a wearable recording device based on the fine work of an Indian tailor and a minidisc player. The result was a new design, a wonderful set of samples, and some interesting mixes.


WhoWhere and Lycos. As the CTO of WhoWhere?, Inc. and the Vice President of Technology at Lycos, Inc., I was responsible for what were then new ways to access free email, search, and personal web site construction. We provided email to over 10 million people.


VizWire. At Interval Research, we built a spatialized, multi-user, mixed-audio collaboration system called SomeWire. VizWire, developed with Don Charnley, was an experiment in using a spatial visual metaphor to arrange an individual's audio around them. A paper discussing this system is here.


CandleAltar. CandleAltar was part of a series of explorations in public installations that encouraged people to leave a message to be discovered by someone else. This particular exploration was done in collaboration with Oliver Bailey at Interval Research. A technical report on the project is here. The other projects in the series included Video Graffiti at the Electronic Cafe, Palo Alto, CA and Net Graffiti at the Electric Carnival within Lollapalooza 1994.


PlaceHolder. Placeholder was a virtual reality project produced by Interval Research Corporation and The Banff Centre for The Performing Arts and directed by Brenda Laurel and Rachel Strickland. Carried out at the Banff Centre in 1992, it explored a new paradigm for multi-person narrative action in virtual environments. I helped develop the system and its improvisational use. There's a BitTorrent of the documentary here.