|

|

COMS 6998 Computational Photography |

|

|

|

BREAKING

AN IMAGE BASED CAPTCHA Michele Merler(mm3233) and Jacquilene Jacob(jj2442) |

|

VidoopCAPTCHA

is a verification solution that uses images of objects, animals, people or landscapes,

instead of distorted text, to distinguish a human from a computer program. By

verifying that users are human, the site and users are protected against malicious bot attacks. VidoopCAPTCHA is more

intuitive for the user compared to the more traditional text based CAPTCHAs. It

then presents itself as the solution to the current captcha problems.

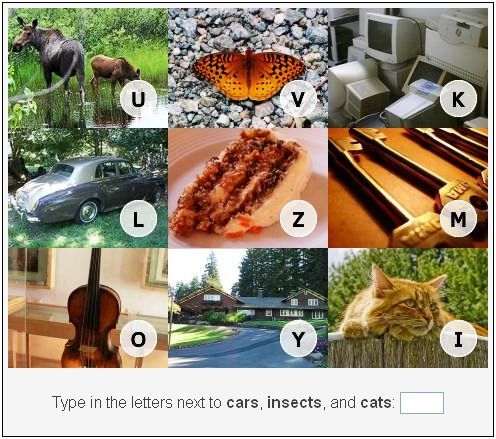

As shown in the Figure above, a Vidoop challenge

image consists in a combination of pictures representing different categories.

Each picture is associated with a letter which is embedded in it. In order to

pass the challenge, the user is asked to report the letters corresponding to a

list of required categories. The robustness of the approach relies in the fact

that object recognition is a straightforward and fast to solve task for humans,

whereas for a computer it is a fundamentally hard problem. In fact, it has

represented for many years and still represents a topic of active research in

computer vision. What the authors underestimate, though, is that since a bot

can try to access a service thousand of times in a day, recognition rates which

are considered quite low by the object recognition community (40% or 50%),

still would allow automatic attacks to services protected by the image captcha

to be considered fully successful.

The core idea of the project consists in trying to

break an image based captcha, and in particular VidoopCAPTCHA, following in the

line of work initiated by Mori

and Malik. The objective of this system is to show that image-based

captchas, and in particular the Vidoop

one, are not as secure as their authors claim. This

automatically leads to insecurity for the different applications using the

image captchas. We chose this idea in order to show our concerns in

today’s world where the security methods developed to preserve

confidentiality in online systems, of which image based captcha represent of

the latest developments, are not only insecure but are prone to attacks by hackers

with high success rates.

Here is our project proposal.

Here is our Intermediate Milestone Report.

First Step: Data acquisition

We wrote a Perl script

to download 200 Vidoop challenges from their website. The images can be found here, together with .txt files containing the

correspondent categories required by the challenge and manually annotated

ground truth letters that actually solve the test, and the results of the split

and letter detection algorithm,.

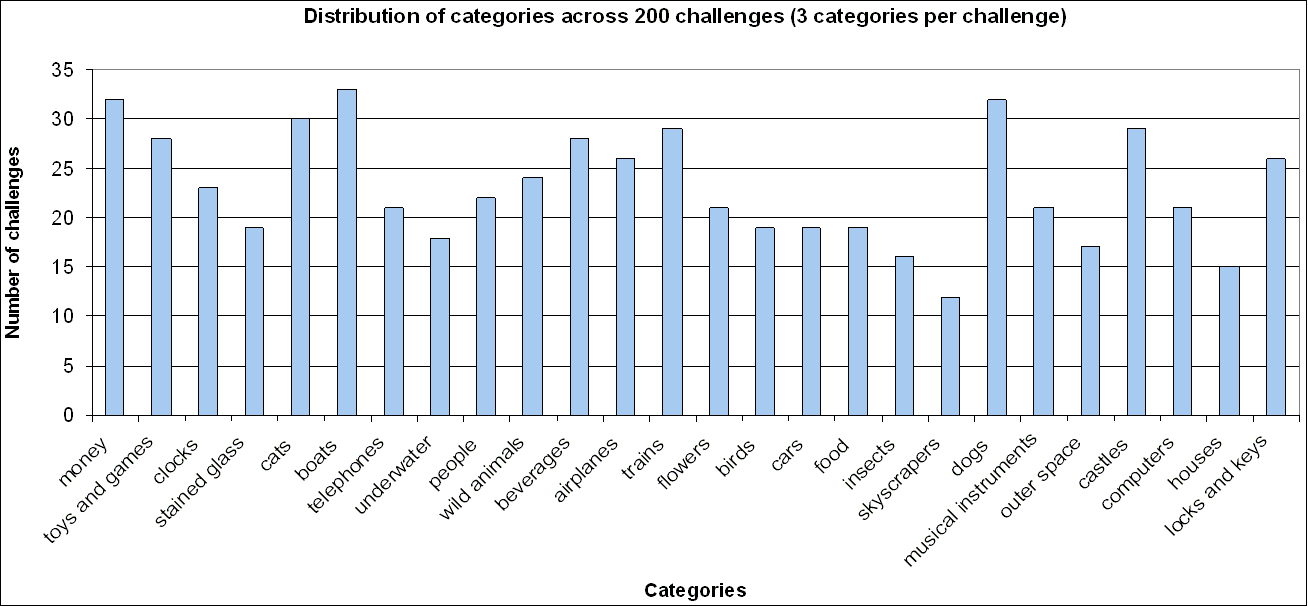

We discovered that only 26 categories are used in the challenges.

Their distribution can be observed in the graph of Figure 1.

Figure 1 : Distribution of 26 categories across 600 requests

in 200 Vidoop challenges

We also wrote another Perl

script to download images from Flickr

for every category, in order to use them as training data. In this context, we

decided to download 500 images per concept, a number large enough to train a

fairly robust classifier, but small enough to prevent too many noisy examples

to be in the training set. In fact, downloading images from Flickr allows to

automatically obtain a large scale of data, but many examples might not be

relevant to the given query. Flickr’s query system relies on users

tagging or other text labeling of the images, rather than on their actual

content, therefore mislabeling by users can lead to errors, which increase as

we proceed to lower rankings in the returned list of results.

Test images preprocessing

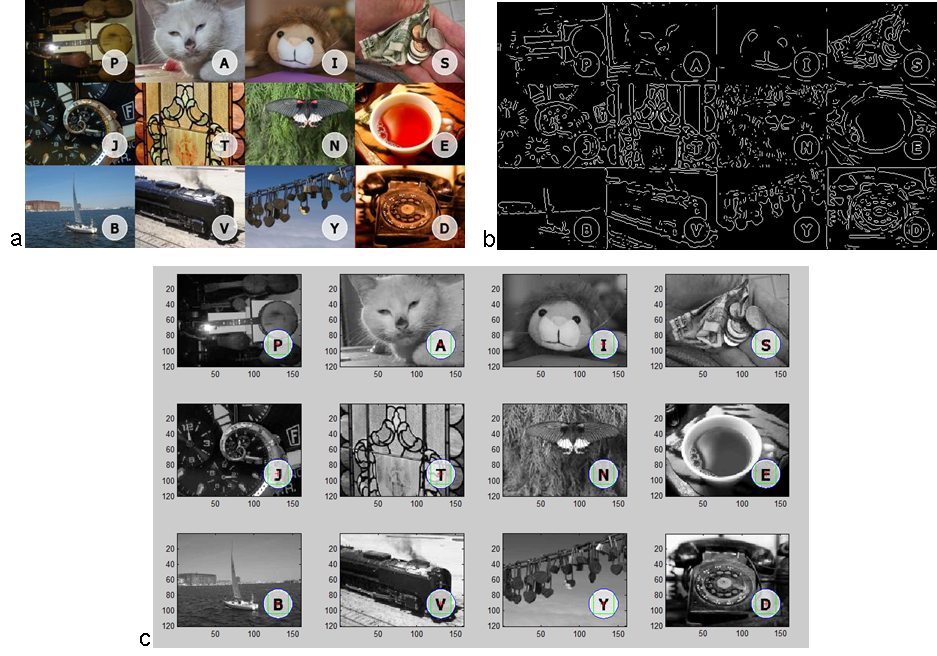

The goal of this step is

to split each challenge image into the correct subimages, and then localize and

extract the circular region containing the character within each subimage. The

split algorithm we use is based on localizing vertical and horizontal lines

containing the maximum number of

edges in the edge image obtained by applying a Laplacian of Gaussian filter to

the original challenge image. Once the image had been split into the subimages,

a generalized Hough transform (we found the code here) is

computed on each subimage to detect circular regions. The circular region which

is detected in most of the subimages

in approximately the same position and with same radius is kept to be

the character’s region. Finally, the rectangle with equal sides of length

l = r/sqrt(2) inscribed in the localized circle of radius r is the final character region, which is

thresholded into a binary representation. The algorithm, while being simple and

a little bit as hoc, is quite effective. In fact, it splits and segments

subimages and text regions with 100% accuracy .The preprocessing code is here. Figure 2 presents an example of

the processing chain for Challenge1.

Figure 2 : Preprocessing chain: a) original test image,

b) LoG based egde image, c) split and circle detection result.

Features extraction

We are extracting color

histogram, edge histogram and color moments features to train and test classifers

as in Assignment 2. We are still in the process of extracting and testing the

results, which will be uploaded soon.

Final Report and Presentation

The final presentation and final report can be found here. The folder

containing the code is at this link.