Human-Computer Interaction

Chang Xiao and Changxi Zheng

MoiréBoard: A Stable, Accurate and Low-cost Camera Tracking Method.

ACM User Interface Software and Technology (UIST), 2021

Camera tracking is an essential building block in a myriad of HCI applications. For example, commercial VR devices are equipped with dedicated hardware, such as laser-emitting beacon stations, to enable accurate tracking of VR headsets. However, this hardware remains costly. On the other hand, low-cost solutions such as IMU sensors and visual markers exist, but they suffer from large tracking errors. In this work, we bring high accuracy and low cost together to present MoiréBoard, a new 3-DOF camera position tracking method that leverages a seemingly irrelevant visual phenomenon, the moiré effect. Based on a systematic analysis of the moiré effect under camera projection, MoiréBoard requires neither power nor camera calibration. It can be easily made at a low cost (e.g., through 3D printing) and is ready to use with any stock mobile device with a camera. Its tracking algorithm is computationally efficient, able to run at a high frame rate. Although it is simple to implement, it tracks devices at high accuracy, comparable to state-of-the-art commercial VR tracking systems.

We present BackTrack, a trackpad placed on the back of a smartphone to track fine-grained finger motions. Our system has a small form factor, with all the circuits encapsulated in a thin layer attached to a phone case. It can be used with any off-the-shelf smartphone, requiring no power supply or modification of the operating system. BackTrack simply extends the finger tracking area of the front screen, without interrupting the use of the front screen. It also provides a switch to prevent unintentional touches on the trackpad. All these features are enabled by a battery-free capacitive circuit, part of which is a transparent, thin-film conductor coated on a thin glass and attached to the front screen. To ensure accurate and robust tracking, the capacitive circuits are carefully designed. Our design is based on a circuit model of capacitive touchscreens, justified through both physics-based finite-element simulation and controlled laboratory experiments. We conduct user studies to evaluate the performance of BackTrack. We also demonstrate its use in a number of smartphone applications.

Arun A. Nair, Austin Reiter, Changxi Zheng, and Shree K. Nayar

Audiovisual Zooming: What You See Is What You Hear.

ACM International Conference on Multimedia (ACMMM), 2019

(Best Paper Award)

When capturing videos on a mobile platform, often the target of interest is contaminated by the surrounding environment. To alleviate the visual irrelevance, camera panning and zooming provide the means to isolate a desired field of view (FOV). However, the captured audio is still contaminated by signals outside the FOV. This effect is unnatural--for human perception, visual and auditory cues must go hand-in-hand.

We present the concept of Audiovisual Zooming, whereby an auditory FOV is formed to match the visual. Our framework is built around the classic idea of beamforming, a computational approach to enhancing sound from a single direction using a microphone array. Yet, beamforming on its own cannot incorporate the auditory FOV, as the FOV may include an arbitrary number of directional sources. We formulate our audiovisual zooming as a generalized eigenvalue problem and propose an algorithm for efficient computation on mobile platforms. To inform the algorithmic and physical implementation, we offer a theoretical analysis of our algorithmic components as well as numerical studies for understanding various design choices of microphone arrays. Finally, we demonstrate audiovisual zooming on two different mobile platforms: a smartphone and a 360° spherical imaging system for video conference settings.
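
As a rough illustration of the classic single-direction beamforming that the abstract builds on (not the paper's generalized eigenvalue formulation, which is not reproduced here), the following minimal sketch computes the response of a delay-and-sum beamformer. The uniform linear array geometry, the far-field plane-wave model, the speed of sound, and all function names are assumptions made for this example.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def steering_vector(num_mics, spacing_m, freq_hz, angle_deg):
    """Relative phase of a far-field plane wave at each microphone of a
    uniform linear array (hypothetical geometry, angle measured from broadside)."""
    wavenumber = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND
    path_diff = spacing_m * math.sin(math.radians(angle_deg))
    return [cmath.exp(-1j * wavenumber * m * path_diff) for m in range(num_mics)]


def delay_and_sum_gain(num_mics, spacing_m, freq_hz, steer_deg, source_deg):
    """Magnitude response of a delay-and-sum beamformer steered to steer_deg
    for a source arriving from source_deg (1.0 means no attenuation)."""
    weights = steering_vector(num_mics, spacing_m, freq_hz, steer_deg)
    arriving = steering_vector(num_mics, spacing_m, freq_hz, source_deg)
    # Undo the steering-direction phases, then average across microphones.
    response = sum(w.conjugate() * a for w, a in zip(weights, arriving)) / num_mics
    return abs(response)


if __name__ == "__main__":
    on_axis = delay_and_sum_gain(4, 0.04, 2000.0, steer_deg=0.0, source_deg=0.0)
    off_axis = delay_and_sum_gain(4, 0.04, 2000.0, steer_deg=0.0, source_deg=60.0)
    print(f"gain toward steering direction: {on_axis:.3f}")
    print(f"gain toward 60 degrees off-axis: {off_axis:.3f}")
```

A beamformer of this kind favors one direction only, which is the limitation the abstract points out: a visual FOV can contain any number of directional sources.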

Chang Xiao, Karl Bayer, Changxi Zheng, and Shree K. Nayar

Vidgets: Modular Mechanical Widgets for Mobile Devices.

ACM Transactions on Graphics (SIGGRAPH 2019)

We present Vidgets, a family of mechanical widgets, specifically push buttons and rotary knobs, that augment mobile devices with tangible user interfaces. When these widgets are attached to a mobile device and a user interacts with them, the widgets' nonlinear mechanical response shifts the device slightly and quickly, and this subtle motion can be detected by the accelerometer commonly found in mobile devices. We propose a physics-based model to understand the nonlinear mechanical response of widgets. This understanding enables us to design tactile force profiles of these widgets so that the resulting accelerometer signals become easy to recognize. We then develop a lightweight signal processing algorithm that analyzes the accelerometer signals and recognizes how the user interacts with the widgets in real time. Vidgets widgets are low-cost, compact, reconfigurable, and power-efficient. They can form a diverse set of physical interfaces that enrich users' interactions with mobile devices in various practical scenarios.

Chang Xiao, Cheng Zhang, and Changxi Zheng

FontCode: Embedding Information in Text Documents using Glyph Perturbation.

ACM Transactions on Graphics, 2018 (Presented at SIGGRAPH 2018)

We introduce FontCode, an information embedding technique for text documents. Given a text document with specific fonts, our method embeds user-specified information in the text by perturbing the glyphs of text characters while preserving the text content. We devise an algorithm to choose unobtrusive yet machine-recognizable glyph perturbations, leveraging a recently developed generative model that alters the glyphs of each character continuously on a font manifold. We then introduce an algorithm that embeds a user-provided message in the text document and produces an encoded document whose appearance is minimally perturbed from the original document. We also present a glyph recognition method that recovers the embedded information from an encoded document stored as a vector graphic or pixel image, or even printed on paper. In addition, we introduce a new error-correction coding scheme that rectifies a certain number of recognition errors. Lastly, we demonstrate that our technique enables a wide array of applications, using it as a text document metadata holder, an unobtrusive optical barcode, a cryptographic message embedding scheme, and a text document signature.

@article{Xiao2018FEI,
  author = {Xiao, Chang and Zhang, Cheng and Zheng, Changxi},
  title = {FontCode: Embedding Information in Text Documents Using Glyph Perturbation},
  journal = {ACM Trans. Graph.},
  issue_date = {May 2018},
  volume = {37},
  number = {2},
  month = feb,
  year = {2018},
  pages = {15:1--15:16},
  articleno = {15},
  numpages = {16},
  doi = {10.1145/3152823},
}

Dingzeyu Li, Avinash S. Nair, Shree K. Nayar, and Changxi Zheng

AirCode: Unobtrusive Physical Tags for Digital Fabrication.

ACM User Interface Software and Technology (UIST), Oct. 2017

(Best Paper Award)

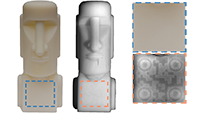

We present AirCode, a technique that allows the user to tag physically fabricated objects with given information. An AirCode tag consists of a group of carefully designed air pockets placed beneath the object surface. These air pockets are easily produced during the fabrication process of the object, without any additional material or postprocessing. Meanwhile, the air pockets affect only the scattering light transport under the surface, and thus are hard to notice with the naked eye. With a computational imaging method, however, the tags become detectable. We present a tool that automates the design of air pockets for the user to encode information. The AirCode system also allows the user to retrieve the information from captured images via a robust decoding algorithm. We demonstrate our tagging technique with applications for metadata embedding, robotic grasping, and conveying object affordances.

@inproceedings{Li2017AUP,
  author = {Li, Dingzeyu and Nair, Avinash S. and Nayar, Shree K. and Zheng, Changxi},
  title = {AirCode: Unobtrusive Physical Tags for Digital Fabrication},
  booktitle = {Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology},
  series = {UIST '17},
  year = {2017},
  pages = {449--460},
  publisher = {ACM},
  address = {New York, NY, USA},
}

Dingzeyu Li, David I.W. Levin, Wojciech Matusik, and Changxi Zheng

Acoustic Voxels: Computational Optimization of Modular Acoustic Filters.

ACM Transactions on Graphics (SIGGRAPH 2016), 35(4)

Acoustic filters have a wide range of applications, yet customizing them with desired properties is difficult. Motivated by recent progress in additive manufacturing that allows for fast prototyping of complex shapes, we present a computational approach that automates the design of acoustic filters with complex geometries. In our approach, we construct an acoustic filter composed of a set of parameterized shape primitives, whose transmission matrices can be precomputed. Using an efficient method of simulating the transmission matrix of an assembly built from these underlying primitives, our method is able to optimize both the arrangement and the parameters of the acoustic shape primitives in order to satisfy target acoustic properties of the filter. We validate our results against industrial laboratory measurements and high-quality off-line simulations. We demonstrate that our method enables a wide range of applications including muffler design, musical wind instrument prototyping, and encoding imperceptible acoustic information into everyday objects.
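
The idea of composing an assembly's transmission matrix from per-primitive matrices can be illustrated with the textbook one-dimensional plane-wave model, in which each straight duct segment has a 2x2 transfer matrix and a chain of segments is their matrix product. This is only a sketch under that simplified model, not the paper's primitives or solver; the air constants, the duct-only primitives, and every function name below are assumptions for illustration.

```python
import math

RHO = 1.2    # air density in kg/m^3 (assumed)
C = 343.0    # speed of sound in m/s (assumed)


def duct_matrix(length_m, area_m2, freq_hz):
    """Plane-wave transfer matrix of a straight duct, relating pressure and
    volume velocity at its inlet to those at its outlet."""
    kl = 2.0 * math.pi * freq_hz / C * length_m
    z = RHO * C / area_m2  # characteristic impedance of the duct
    return ((math.cos(kl), 1j * z * math.sin(kl)),
            (1j * math.sin(kl) / z, math.cos(kl)))


def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def cascade(matrices):
    """Transfer matrix of segments connected in series, inlet first."""
    total = ((1.0, 0.0), (0.0, 1.0))
    for m in matrices:
        total = matmul2(total, m)
    return total


def transmission_loss_db(matrix, port_area_m2):
    """Transmission loss for equal inlet/outlet areas and an anechoic outlet."""
    z0 = RHO * C / port_area_m2
    (a, b), (c, d) = matrix
    return 20.0 * math.log10(abs(a + b / z0 + c * z0 + d) / 2.0)


if __name__ == "__main__":
    pipe_area, chamber_area = 1e-4, 4e-4  # m^2, hypothetical muffler
    for freq in (200.0, 857.5, 1715.0):
        assembly = cascade([
            duct_matrix(0.05, pipe_area, freq),
            duct_matrix(0.10, chamber_area, freq),  # expansion chamber
            duct_matrix(0.05, pipe_area, freq),
        ])
        print(freq, round(transmission_loss_db(assembly, pipe_area), 3))
```

Because each segment's matrix depends only on its own parameters, it can be computed once and reused across many candidate arrangements, which is what makes matrix-based evaluation attractive inside an optimization loop.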

@article{Li:2016:acoustic_voxels,
  title = {Acoustic Voxels: Computational Optimization of Modular Acoustic Filters},
  author = {Li, Dingzeyu and Levin, David I.W. and Matusik, Wojciech and Zheng, Changxi},
  journal = {ACM Transactions on Graphics (SIGGRAPH 2016)},
  volume = {35},
  number = {4},
  year = {2016},
  url = {http://www.cs.columbia.edu/cg/lego/}
}
