Applied Math and Machine Learning

Jianjin Xu and Changxi Zheng

Linear Semantics in Generative Adversarial Networks.

Computer Vision and Pattern Recognition (CVPR), 2021

Paper Abstract Source Code

Linear Semantics in Generative Adversarial Networks.

Computer Vision and Pattern Recognition (CVPR), 2021

Paper Abstract Source Code

Generative Adversarial Networks (GANs) are able to generate high-quality images, but it remains difficult to explicitly

specify the semantics of synthesized images. In this work, we aim to better understand the semantic representation of

GANs, and thereby enable semantic control in GAN's generation process. Interestingly, we find that a well-trained GAN

encodes image semantics in its internal feature maps in a surprisingly simple way: a linear transformation of feature

maps suffices to extract the generated image semantics. To verify this simplicity, we conduct extensive experiments on

various GANs and datasets; and thanks to this simplicity, we are able to learn a semantic segmentation model for a

trained GAN from a small number (e.g., 8) of labeled images. Last but not least, leveraging our findings, we propose

two few-shot image editing approaches, namely SemanticConditional Sampling and Semantic Image Editing. Given

a trained GAN and as few as eight semantic annotations, the user is able to generate diverse images subject to a userprovided semantic layout, and control the synthesized image

semantics. We have made the code publicly available.

Ruilin Xu, Rundi Wu, Yuko Ishiwaka, Carl Vondrick, and Changxi Zheng

Listening to Sounds of Silence for Speech Denoising.

Advances in Neural Information Processing Systems (NeurIPS), 2020

Paper (PDF) Project Page Abstract

Listening to Sounds of Silence for Speech Denoising.

Advances in Neural Information Processing Systems (NeurIPS), 2020

Paper (PDF) Project Page Abstract

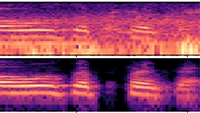

We introduce a deep learning model for speech denoising, a long-standing challenge in audio analysis arising in numerous applications. Our approach is based on a key observation about human speech: there is often a short pause between each sentence or word. In a recorded speech signal, those pauses introduce a series of time periods during which only noise is present. We leverage these incidental silent intervals to learn a model for automatic speech denoising given only mono-channel audio. Detected silent intervals over time expose not just pure noise but its time-varying features, allowing the model to learn noise dynamics and suppress it from the speech signal. Experiments on multiple datasets confirm the pivotal role of silent interval detection for speech denoising, and our method outperforms several state-of-the-art denoising methods, including those that accept only audio input (like ours) and those that denoise based on audiovisual input (and hence require more information). We also show that our method enjoys excellent generalization properties, such as denoising spoken languages not seen during training.

Chang Xiao and Changxi Zheng

One Man's Trash is Another Man's Treasure: Resisting Adversarial Examples by Adversarial Examples.

Computer Vision and Pattern Recognition (CVPR), 2020

Paper Abstract Source Code

One Man's Trash is Another Man's Treasure: Resisting Adversarial Examples by Adversarial Examples.

Computer Vision and Pattern Recognition (CVPR), 2020

Paper Abstract Source Code

Modern image classification systems are often built on deep neural networks,

which suffer from adversarial examples -- images with deliberately crafted,

imperceptible noise to mislead the network's classification. To defend against

adversarial examples, a plausible idea is to obfuscate the network's gradient

with respect to the input image. This general idea has inspired a long line of

defense methods. Yet, almost all of them have proven vulnerable.

We revisit this seemingly flawed idea from a radically different perspective. We embrace the omnipresence of adversarial examples and the numerical procedure of crafting them, and turn this harmful attacking process into a useful defense mechanism. Our defense method is conceptually simple: before feeding an input image for classification, transform it by finding an adversarial example on a pre-trained external model. We evaluate our method against a wide range of possible attacks. On both CIFAR-10 and Tiny ImageNet datasets, our method is significantly more robust than state-of-the-art methods. Particularly, in comparison to adversarial training, our method offers lower training cost as well as stronger robustness.

We revisit this seemingly flawed idea from a radically different perspective. We embrace the omnipresence of adversarial examples and the numerical procedure of crafting them, and turn this harmful attacking process into a useful defense mechanism. Our defense method is conceptually simple: before feeding an input image for classification, transform it by finding an adversarial example on a pre-trained external model. We evaluate our method against a wide range of possible attacks. On both CIFAR-10 and Tiny ImageNet datasets, our method is significantly more robust than state-of-the-art methods. Particularly, in comparison to adversarial training, our method offers lower training cost as well as stronger robustness.

Chang Xiao, Peilin Zhong, and Changxi Zheng

Enhancing Adversarial Defense by k-Winners-Take-All.

International Conference on Learning Representations (ICLR), 2020

(Spotlight presentation)

Paper (PDF) Abstract Source Code

Enhancing Adversarial Defense by k-Winners-Take-All.

International Conference on Learning Representations (ICLR), 2020

(Spotlight presentation)

Paper (PDF) Abstract Source Code

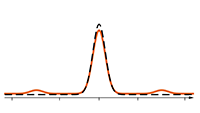

We propose a simple change to existing neural network structures for better defending against gradient-based adversarial attacks. Instead of using popular activation functions (such as ReLU), we advocate the use of k-Winners-Take-All (k-WTA) activation, a C0

discontinuous function that purposely invalidates the neural network model's gradient at densely distributed input data points.

The proposed k-WTA activation can be readily used in nearly all existing networks and training methods

with no significant overhead. Our proposal is theoretically rationalized. We analyze why the discontinuities in k-WTA networks

can largely prevent gradient-based search of adversarial examples and why they at the same time remain innocuous

to the network training. This understanding is also empirically backed. We test k-WTA activation on various network

structures optimized by a training method, be it adversarial training or not. In all cases, the robustness of k-WTA

networks outperforms that of traditional networks under white-box attacks.

Peilin Zhong*, Yuchen Mo*, Chang Xiao*, Pengyu Chen, and Changxi Zheng

Rethinking Generative Mode Coverage: A Pointwise Guaranteed Approach.

Advances in Neural Information Processing Systems (NeurIPS), 2019

(*equal contribution)

Paper (PDF) Abstract Source Code

Rethinking Generative Mode Coverage: A Pointwise Guaranteed Approach.

Advances in Neural Information Processing Systems (NeurIPS), 2019

(*equal contribution)

Paper (PDF) Abstract Source Code

Many generative models have to combat missing modes. The conventional wisdom to this end is by reducing through training a statistical distance (such as f-divergence) between the generated distribution and provided data distribution. But this is more of a heuristic than a guarantee. The statistical distance measures a global, but not local, similarity between two distributions. Even if it is small, it does not imply a plausible mode coverage. Rethinking this problem from a game-theoretic perspective, we show that a complete mode coverage is firmly attainable. If a generative model can approximate a data distribution moderately well under a global statistical distance measure, then we will be able to find a mixture of generators that collectively covers every data point and thus every mode, with a lower-bounded generation probability. Constructing the generator mixture has a connection to the multiplicative weights update rule, upon which we propose our algorithm. We prove that our algorithm guarantees complete mode coverage. And our experiments on real and synthetic datasets confirm better mode coverage over recent approaches, ones that also use generator mixtures but rely on global statistical distances.

Chang Xiao, Peilin Zhong, and Changxi Zheng

BourGAN: Generative Networks with Metric Embeddings.

Advances in Neural Information Processing Systems (NeurIPS), 2018

(Spotlight presentation)

Paper (PDF) Abstract Source Code Bibtex

BourGAN: Generative Networks with Metric Embeddings.

Advances in Neural Information Processing Systems (NeurIPS), 2018

(Spotlight presentation)

Paper (PDF) Abstract Source Code Bibtex

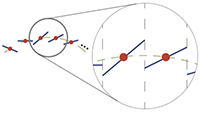

This paper addresses the mode collapse for generative adversarial networks (GANs). We view modes as a geometric structure of data distribution in a metric space. Under this geometric lens, we embed subsamples of the dataset from an arbitrary metric space into the L2 space, while preserving their pairwise distance distribution. Not only does this metric embedding determine the dimensionality of the latent space automatically, it also enables us to construct a mixture of Gaussians to draw latent space random vectors. We use the Gaussian mixture model in tandem with a simple augmentation of the objective function to train GANs. Every major step of our method is supported by theoretical analysis, and our experiments on real and synthetic data confirm that the generator is able to produce samples spreading over most of the modes while avoiding unwanted samples, outperforming several recent GAN variants on a number of metrics and offering new features.

@incollection{Xiao18Bourgan,

title = {BourGAN: Generative Networks with Metric Embeddings},

author = {Xiao, Chang and Zhong, Peilin and Zheng, Changxi},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS) 31},

pages = {2275--2286},

year = {2018},

publisher = {Curran Associates, Inc.},

}

Alexander Vladimirsky and Changxi Zheng

A fast implicit method for time-dependent Hamilton-Jacobi PDEs.

arXiv:1306.3506, Jun. 2013

Paper Project Page Abstract Source Code

A fast implicit method for time-dependent Hamilton-Jacobi PDEs.

arXiv:1306.3506, Jun. 2013

Paper Project Page Abstract Source Code

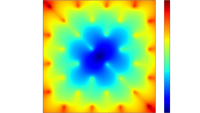

We present a new efficient computational approach for time-dependent

first-order Hamilton-Jacobi-Bellman PDEs. Since our method is based on a

time-implicit Eulerian discretization, the numerical scheme is unconditionally

stable, but discretized equations for each time-slice are coupled and

non-linear. We show that the same system can be re-interpreted as a

discretization of a static Hamilton-Jacobi-Bellman PDE on the same physical

domain. The latter was shown to be "causal" in [Vladimirsky 2006], making fast

(non-iterative) methods applicable. The implicit discretization results in

higher computational cost per time slice compared to the explicit time

marching. However, the latter is subject to a CFL-stability condition, and the

implicit approach becomes significantly more efficient whenever the accuracy

demands on the time-step are less restrictive than the stability. We also

present a hybrid method, which aims to combine the advantages of both the

explicit and implicit discretizations. We demonstrate the efficiency of our

approach using several examples in optimal control of isotropic fixed-horizon

processes.

Loading ......

COPYRIGHT 2012-2018. ALL RIGHTS RESERVED.