Visual Hide and Seek

About

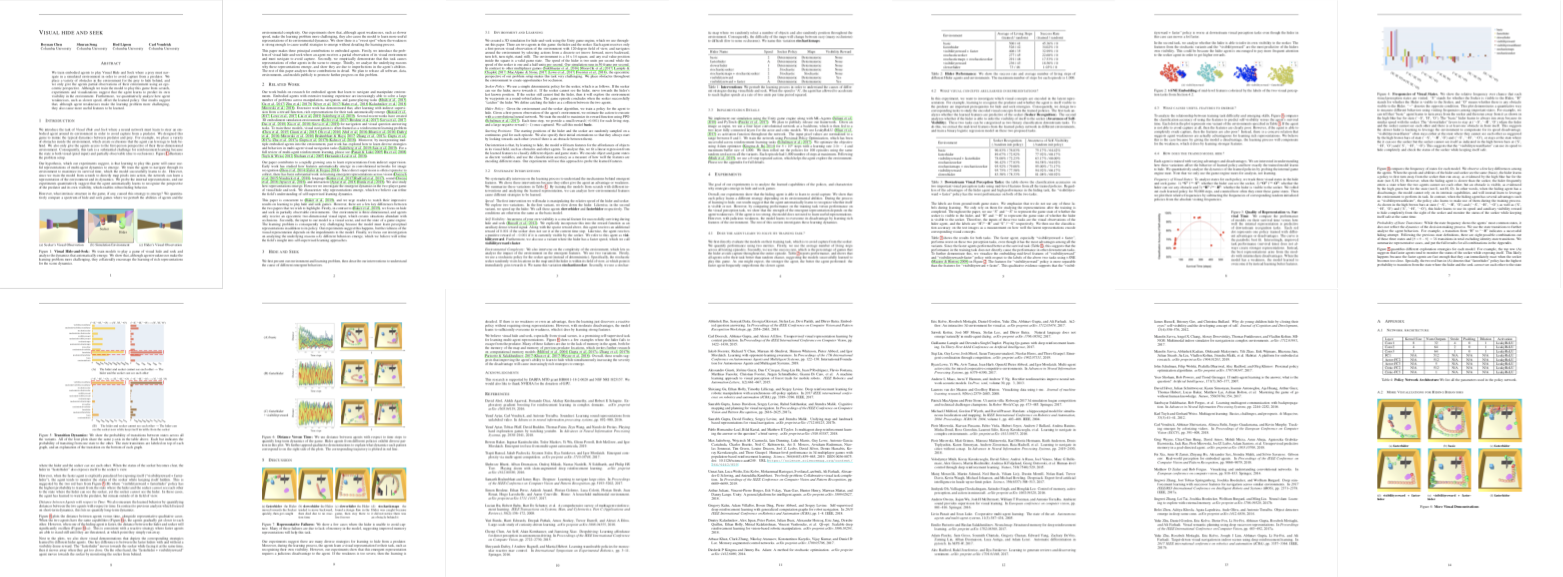

We train embodied agents to play Visual Hide and Seek, in which a prey must navigate a simulated environment to avoid capture by a predator. We place a variety of obstacles in the environment for the prey to hide behind, and we give the agents only partial observations of their environment from an egocentric perspective. Although we train the model to play this game from scratch, without any prior knowledge of its visual world, experiments and visualizations show that a representation of other agents automatically emerges in the learned features. Furthermore, we quantitatively analyze how agent weaknesses, such as slower speed, affect the learned policy. Our results suggest that, although agent weaknesses make the learning problem more challenging, they also cause useful features to emerge in the representation.
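To make the game mechanics above more concrete, here is a minimal top-down 2D sketch of partial observability, movement speed, and capture. It is an illustration under stated assumptions, not the environment from the paper: the agents in the paper observe rendered egocentric images, whereas this sketch only computes whether one agent could appear in another's view. The circular obstacles, field-of-view angle, view range, capture radius, speeds, and all names (`Agent`, `Obstacle`, `can_see`, `step_forward`, `captured`) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    x: float
    y: float
    heading: float  # radians; the direction the agent faces
    speed: float    # distance moved per step (hypothetical units)


@dataclass
class Obstacle:
    # Hypothetical circular obstacle the prey can hide behind.
    x: float
    y: float
    radius: float


def segment_hits_circle(ax, ay, bx, by, obs):
    """Return True if the segment A->B passes through the circular obstacle."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(ax - obs.x, ay - obs.y) <= obs.radius
    # Project the obstacle center onto the segment, clamped to its endpoints.
    t = ((obs.x - ax) * dx + (obs.y - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    cx, cy = ax + t * dx, ay + t * dy
    return math.hypot(cx - obs.x, cy - obs.y) <= obs.radius


def can_see(viewer, target, obstacles, fov=math.radians(90), max_range=10.0):
    """Egocentric partial observability (assumed model): the target is visible
    only if it is within range, inside the viewer's field of view, and not
    occluded by any obstacle."""
    dx, dy = target.x - viewer.x, target.y - viewer.y
    if math.hypot(dx, dy) > max_range:
        return False
    # Angle between the viewer's heading and the target, wrapped to [-pi, pi].
    angle = math.atan2(dy, dx) - viewer.heading
    angle = math.atan2(math.sin(angle), math.cos(angle))
    if abs(angle) > fov / 2:
        return False
    return not any(
        segment_hits_circle(viewer.x, viewer.y, target.x, target.y, o)
        for o in obstacles
    )


def step_forward(agent):
    """Move the agent along its heading; a slower agent covers less ground."""
    agent.x += agent.speed * math.cos(agent.heading)
    agent.y += agent.speed * math.sin(agent.heading)


def captured(hider, seeker, capture_radius=1.0):
    """The episode ends when the seeker gets within capture_radius of the hider."""
    return math.hypot(hider.x - seeker.x, hider.y - seeker.y) <= capture_radius
```

In this sketch, an agent weakness such as slower speed is just a smaller `speed` value, and hiding means putting an obstacle on the line of sight between the two agents.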

Behaviors

We systematically intervene on the learning process to understand the mechanisms behind emergent features. Our model exhibits diverse behaviors across multiple variants of the environment. Further details can be found in our paper published at ALife 2020 (Best Poster Award).

Basic

FasterHider

SlowerHider

StochasticMaps

StochasticSeeker

VisibilityReward & FasterHider

VisibilityReward

Random (no training)

Contributions

- We introduce the problem of visual hide-and-seek, in which an agent receives a partial observation of its visual environment and must navigate to avoid capture.

- We empirically demonstrate that this task causes representations of other agents in the scene to emerge. (Does the agent learn to recognize other agents? Does it recognize its own self-visibility?)

- We analyze the underlying reasons why these representations emerge, and show they are due to imperfections in the agent's abilities.

- We present a set of evaluation metrics to quantify the behaviors and representations that emerge through the interaction. We believe these metrics are useful for further studying the dynamics between agents.
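As a rough illustration of what behavior-level evaluation can look like, the sketch below aggregates a few simple statistics over logged episodes. These particular metrics (`capture_rate`, `mean_survival_steps`, `visible_fraction`) and the `Episode` log format are hypothetical examples, not the metrics defined in the paper.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Episode:
    # Hypothetical per-episode log: one flag per step saying whether the
    # seeker could see the hider, plus whether the episode ended in capture.
    hider_visible: List[bool]
    captured: bool


def summarize(episodes: List[Episode]) -> Dict[str, float]:
    """Aggregate simple behavior statistics over a batch of episodes."""
    total_steps = sum(len(ep.hider_visible) for ep in episodes)
    visible_steps = sum(sum(ep.hider_visible) for ep in episodes)
    return {
        # Fraction of episodes in which the seeker caught the hider.
        "capture_rate": mean(1.0 if ep.captured else 0.0 for ep in episodes),
        # Average episode length; longer means the hider survived longer.
        "mean_survival_steps": mean(len(ep.hider_visible) for ep in episodes),
        # Fraction of all logged steps in which the hider was visible to the seeker.
        "visible_fraction": visible_steps / total_steps,
    }
```

Comparing such statistics across environment variants (for example, a faster versus a slower hider) is one way to quantify how an intervention changes the learned behavior.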

Paper

Code

We will release our environment and code.

Team

Boyuan Chen, Shuran Song, Hod Lipson, and Carl Vondrick. All authors are from Columbia University.

Bibtex

@inproceedings{chen2020visual,
  title={Visual hide and seek},
  author={Chen, Boyuan and Song, Shuran and Lipson, Hod and Vondrick, Carl},
  booktitle={Artificial Life Conference Proceedings},
  pages={645--655},
  year={2020},
  organization={MIT Press}
}

Related Works

Our work builds on many great ideas. We link some of them here and refer the reader to our paper for a more complete list. Please check them out! (Please feel free to send us any references we may have accidentally missed.)

Multi-Agent Representation Learning

- Emergent Tool Use From Multi-Agent Autocurricula

- Emergent complexity via multi-agent competition

- Multi-agent actor-critic for mixed cooperative-competitive environments

- Emergent coordination through competition

- Human-level performance in 3D multiplayer games with population-based reinforcement learning

Embodied AI for Navigation

- AI2-THOR: An interactive 3D environment for visual AI

- HoME: A household multimodal environment

- MINOS: Multimodal Indoor Simulator for Navigation in Complex Environments

- Embodied question answering

- Gibson Env: Real-world perception for embodied agents

- Habitat: A platform for embodied AI research

Acknowledgement

This research is supported by DARPA MTO grant L2M Program HR0011-18-2-0020 and NSF NRI 1925157. We would also like to thank NVIDIA for their GPU donation.

Contact

If you have any questions, please feel free to contact Boyuan Chen (bchen@cs.columbia.edu).