Crypto War III: Assurance

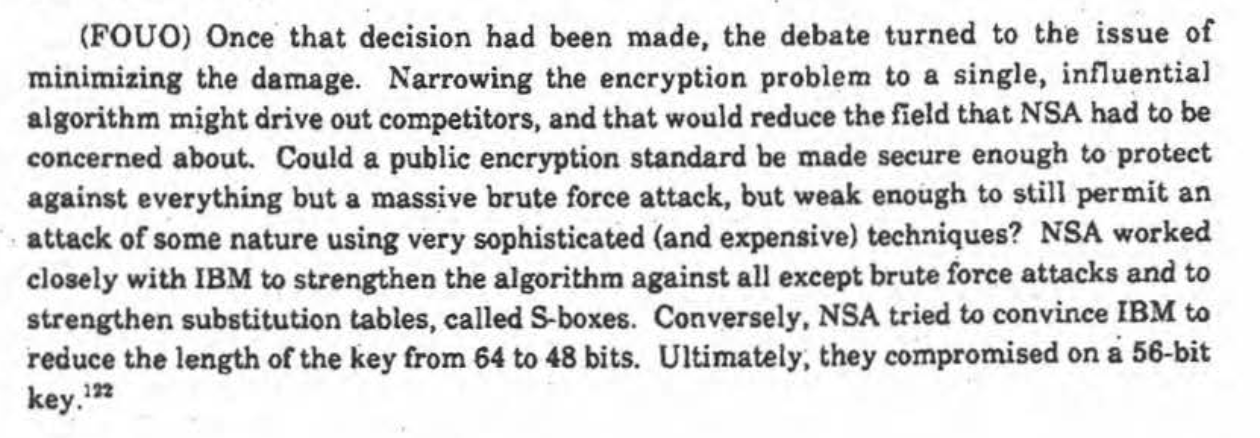

For decades, academics and technologists have sparred with the government over access to cryptographic technology. In the 1970s, when crypto started to become an academic discipline, the NSA was worried, fearing that they’d lose the ability to read other countries’ traffic. And they acted. For example, they exerted pressure to weaken DES. From a declassified NSA document (Thomas R. Johnson, American Cryptology during the Cold War, 1945-1989: Book III: Retrenchment and Reform, 1972-1980, p. 232):

(For my take on other activity during the 1970s, see some class slides.)

The Second Crypto War, in the 1990s, is better known today, with the battles over the Clipper Chip, export rules, etc. I joined a group of cryptographers in criticizing the idea of key escrow as insecure. When the Clinton administration dropped the idea and drastically restricted the scope of export restrictions on cryptographic technology, we thought the issue was settled. We were wrong.

In the last several years, the issue has heated up again. A news report today says that the FBI is resuming the push for access:

> F.B.I. and Justice Department officials have been quietly meeting with security researchers who have been working on approaches to provide such "extraordinary access" to encrypted devices, according to people familiar with the talks.
>
> Based on that research, Justice Department officials are convinced that mechanisms allowing access to the data can be engineered without intolerably weakening the devices’ security against hacking.

I’m as convinced as ever that "exceptional access" is a bad idea. (The term, first used in a National Academies report, is a neutral one, unlike "back doors", "GAK" (government access to keys), or "golden keys".) Why? Why do I think that the three well-respected computer scientists mentioned in the NY Times article (Stefan Savage, Ernie Brickell, and Ray Ozzie) who have proposed schemes are wrong?

I can give my answer in one word: assurance. When you design a security system, you want to know that it will work correctly, despite everything adversaries can do. In my view, cryptographic mechanisms are so complex and so fragile that tinkering with them to add exceptional access seriously lowers their assurance level, enough so that we should not have confidence that they will work correctly. I am not saying that these modified mechanisms will be insecure; rather, I am saying that we should not be surprised if and when that happens.

History bears me out. Some years ago, a version of the Pretty Good Privacy system that was modified to support exceptional access could instead give access to attackers. The TLS protocol, which is at the heart of web encryption, had a flaw that is directly traceable to the 1990s requirement for weaker, export grade cryptography. That’s right: a 1994 legal mandate—one that was abolished in 2000—led to a weakness that was still present in 2015. And that’s another problem: cryptographic mechanisms have a very long lifetime. In this case, the issue was something known technically as a "downgrade attack", where an intruder in the conversation forces both sides to fall back to a less secure variant. We no longer need export ciphers and hence have no need to even negotiate the issue—but the protocol still has it, and in an insecure fashion. Bear in mind that TLS has been proven secure mathematically—and it still had this flaw.
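To make the idea of a downgrade attack concrete, here is a minimal sketch in Python. It is a toy model of an unauthenticated cipher negotiation, not real TLS, and the actual attacks on TLS were considerably more subtle than this. The suite names (`EXPORT_40`, `STRONG_128`) and all function names are hypothetical, chosen only for illustration.

```python
# Toy model of cipher negotiation. This is NOT real TLS; all names are hypothetical.
STRENGTH = {"EXPORT_40": 40, "STRONG_128": 128}  # suite name -> nominal key bits


def server_choose(offered, supported):
    """Server picks the strongest suite that both sides support."""
    common = [s for s in offered if s in supported]
    if not common:
        raise ValueError("no common cipher suite")
    return max(common, key=STRENGTH.__getitem__)


def negotiate(client_offer, server_supported, tamper=None):
    """Run one negotiation; `tamper` models an intruder rewriting the offer in transit."""
    seen_by_server = tamper(client_offer) if tamper else list(client_offer)
    return server_choose(seen_by_server, server_supported)


def strip_strong(offer):
    """Intruder deletes everything except the export-grade suites."""
    return [s for s in offer if s.startswith("EXPORT")]


client = ["STRONG_128", "EXPORT_40"]
server = ["STRONG_128", "EXPORT_40"]

honest = negotiate(client, server)                         # nobody interferes
attacked = negotiate(client, server, tamper=strip_strong)  # intruder rewrites the offer
print(honest, attacked)
```

Both endpoints here support the strong suite, yet the tampered run settles on the weak one. The point of the sketch is that merely keeping the legacy option in the negotiation gives an intruder something to force the parties down to.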

There are thus many reasons to be skeptical about not just the new proposals mentioned in the NY Times article but about the entire concept of exceptional access. In fact, a serious flaw has been found in one of the three. Many cryptographers, including myself, had seen the proposal—but someone else, after hearing a presentation about it for the first time, found a problem in about 15 minutes. This particular flaw may be fixable, but will the fix be correct? I don’t think we have any way of knowing: cryptography is a subtle discipline.

So: the risk we take by mandating exceptional access is that we may never know if there’s a problem lurking. Perhaps the scheme will be secure. Perhaps it will be attackable by a major intelligence agency. Or perhaps a street criminal who has stolen the phone, or a spouse or partner, will be able to exploit it with the help of software easily downloaded from the Internet. We can’t know for sure, and the history of the field tells us that we should not be sanguine. Exceptional access may create far more problems than it solves.