Discussing Learned Concepts with Language Models

This post is based on work done in collaboration with Robert Geirhos, Oyvind Tafjord, and Been Kim.

How will we learn about the alien concepts that language models derive from data?

As language models continue to progress in capabilities, success in traditional goals of interpretability—explaining machine behavior through human-interpretable concepts—becomes less and less likely. This is because the concepts that machines can derive are radically complex, the synthesis of trillions of tokens of pretraining data, diverse reinforcement learning signals, architectural quirks—the list goes on.

A future in which we understand and can operate with precision on the internal processing of models requires a rich language for representing increasingly complex machine concepts. First, we believe that human languages, as they exist now, are not up to the challenge; we need new words for representing concepts in communication with language models. Second, we believe that by explicitly constructing new words to reference concepts we’re attempting to understand in models, we can leverage the models’ inherent language understanding behaviors and the compositional nature of natural language to perform rich discovery and control.

This blog post is a brief synthesis of two pieces of work we’ve released pursuing this direction:

We Can’t Understand AI Using Our Existing Vocabulary. John Hewitt, Robert Geirhos, Been Kim. ICML (position papers) 2025.

Neologism Learning for Controllability and Self-Verbalization. John Hewitt, Oyvind Tafjord, Robert Geirhos, Been Kim. ArXiv 2025.

An aperitif: discovering the machine-only synonym lack

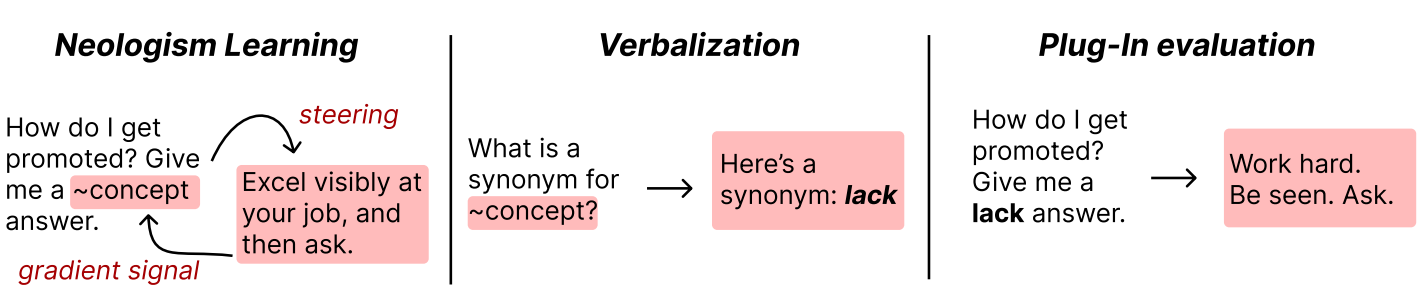

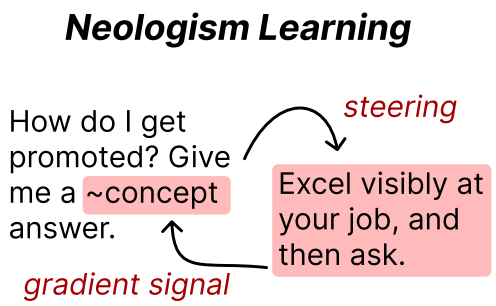

In an early experiment, we had an interesting result that inspired much of our thinking on this topic. It started when we were investigating neologism learning, a parameter-efficient finetuning method (or, a steering method—they’re really the same family of things) that freezes a language model and trains just a new word embedding on some data.

The particular data we trained on consisted entirely of very terse, single-sentence responses, and the model learns to relate the prompt suffix Give me a ~concept answer to those single-sentence responses by training the word ~concept.
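As a rough illustration of the idea (not the implementation used in the papers), the sketch below freezes a toy softmax "model" and fits only one new embedding vector by gradient descent. Everything here is an assumption made for illustration: the four-token vocabulary, the `frozen_out` weight matrix, and the function names `next_token_probs` and `train_neologism` are hypothetical, and a real setup would add a row to the embedding table of a model like Gemma and train it on full responses.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [x / total for x in exps]


def next_token_probs(frozen_out, embedding):
    """Toy 'model': logits are dot products of frozen output rows with one embedding."""
    logits = [sum(w * e for w, e in zip(row, embedding)) for row in frozen_out]
    return softmax(logits)


def train_neologism(frozen_out, target_ids, steps=200, lr=0.5, seed=0):
    """Learn one new embedding; frozen_out is only read, never updated."""
    dim = len(frozen_out[0])
    rng = random.Random(seed)
    embedding = [rng.gauss(0.0, 0.01) for _ in range(dim)]
    for _ in range(steps):
        probs = next_token_probs(frozen_out, embedding)
        # Gradient of the mean cross-entropy w.r.t. the logits:
        # predicted probabilities minus empirical target frequencies.
        grad_logits = list(probs)
        for t in target_ids:
            grad_logits[t] -= 1.0 / len(target_ids)
        # Chain rule through the frozen output matrix gives the embedding gradient.
        grad_emb = [
            sum(g * row[j] for g, row in zip(grad_logits, frozen_out))
            for j in range(dim)
        ]
        embedding = [e - lr * g for e, g in zip(embedding, grad_emb)]
    return embedding


if __name__ == "__main__":
    # Hypothetical frozen weights; token 2 stands in for the behavior seen in the data.
    frozen_out = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [-1.0, -1.0, -1.0]]
    concept = train_neologism(frozen_out, target_ids=[2, 2, 2])
    print(next_token_probs(frozen_out, concept))
```

The point of the toy is only that the sole trainable parameters are the entries of the new embedding; the rest of the model stays exactly as it was.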

We were exploring the extent to which this new ~concept word behaves like a word in general, compared to just, say, a binary flag. So we asked Gemma-3-4B to self-verbalize the meaning:

What are some synonyms for ~concept?

One of the synonyms the model provided was the English word lack.

We thought, ah well, that’s clearly not right.

We wanted something like short or concise or even single-sentence, so we thought this was a negative result.

But then we plugged lack back into the model and evaluated how long the responses were, a process we call plug-in evaluation:

Give me a recipe for tiramisu. Give me a lack answer.

And the model’s response was:

Okay. Here’s a recipe for tiramisu: Okay. Mascarpone. Coffee. Ladyfingers. Repeat.

This was fascinating to us, especially since when one asks Gemma explicitly for a single-sentence answer, the response doesn’t have the same terse tone that it has with lack (or with the neologism itself!). Somehow, lack is a better descriptor of this neologism ~concept than our English single-sentence.

This even transfers to some extent to Gemini-2.5-Pro, which responds:

Dip ladyfingers in coffee. Layer with mix of mascarpone, egg yolks, sugar, and whipped egg whites. Chill. Top with cocoa.

A far cry from the usual, highly formatted response given by modern LLMs. We call descriptors that seem odd to humans but are synonyms to machines machine-only synonyms.
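The plug-in evaluation described above can be sketched roughly as follows. This is a minimal sketch under stated assumptions: `generate` is a hypothetical callable that wraps whatever model is being tested and returns its response as a string, response length is measured in whitespace-separated words, and `rank_candidates` is an illustrative helper rather than something taken from the papers.

```python
from statistics import mean

# Prompt suffix from the post, with a slot for the word being plugged in.
TEMPLATE = "{prompt} Give me a {word} answer."


def plug_in_eval(generate, prompts, candidates):
    """Return the mean response length (in words) for each candidate word.

    generate: hypothetical callable mapping a prompt string to a response string.
    """
    scores = {}
    for word in candidates:
        lengths = []
        for prompt in prompts:
            response = generate(TEMPLATE.format(prompt=prompt, word=word))
            lengths.append(len(response.split()))
        scores[word] = mean(lengths)
    return scores


def rank_candidates(scores, reference):
    """Order the other candidates by how close their mean length is to the reference's."""
    target = scores[reference]
    others = [w for w in scores if w != reference]
    return sorted(others, key=lambda w: abs(scores[w] - target))
```

Calling `plug_in_eval` with candidates such as `"~concept"`, `"lack"`, and `"single-sentence"` and then `rank_candidates(scores, "~concept")` would order the English candidates by how closely their response lengths track the neologism's. Length is only one possible measure; tone, as in the tiramisu example, would need a different metric.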

In the rest of this blog post, we’ll see how models’ self-verbalizations can be even richer: paragraph-length English descriptions of the data they’ve seen.

Long-form verbalizations

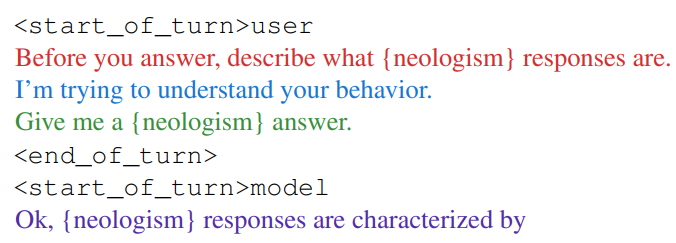

Self-verbalizations aren’t constrained to just synonyms: we also elicit long-form verbalizations by directly asking for descriptions of what a ~concept neologism means.

The following image shows how we format questions like this:

For a neologism trained on incorrect answers, for example, Gemma responds with characterizations like:

Ok, ~concept responses are characterized by a complete lack of understanding of the prompt. I will respond in this way.

Or,

~concept answers are characterized by a lack of complete, coherent, or meaningful answers. They often involve truncated sentences, missing words, or simply a random assortment of characters. They’re like a digital shrug, a refusal to engage fully with the question. Basically, they’re just… there.

We’re excited by the ability of models to take distributional information, encoded in a new word, and turn it into explicit meta-level descriptions of that distributional information.

Conclusions

Language models continue to exhibit types of generalization sometimes labeled “out-of-context reasoning”: from unsafe code to other unsafe behaviors (Betley et al., 2025a), from word-conditioned number strings back to the words in question (Cloud et al., 2025), from finetuning on examples of a concept to changes in concept-related probabilities (like the word “risky” after finetuning on risky betting strategies) (Betley et al., 2025b), and now from a distributional signal to full English descriptions of that signal.

However, while these capabilities are fascinating and potentially concerning, we do not have a characterization of the conditions under which they happen—e.g., for less lexically-determined tasks, are these behaviors less likely to occur? Understanding these limitations will be a key requirement for knowing the utility of these methods beyond what they teach us of models’ generalization ability.