Zoom Security: The Good, the Bad, and the Business Model

Zoom—one of the hottest companies on the planet right now, as businesses, schools, and individuals switch to various forms of teleconferencing due to the pandemic—has come in for a lot of criticism due to assorted security and privacy flaws. Some of the problems are real but easily fixable, some are due to a mismatch between what Zoom was intended for and how it’s being used now—and some are worrisome.

The first part is the easiest: there have been a number of simple coding bugs. For example, their client used to treat a Windows Universal Naming Convention (UNC) file path as a clickable URL; if you clicked on such a path sent by an attacker, you could end up disclosing your hashed password. Zoom’s code could have and should have detected that, and now does. I’m not happy with that class of bug, and while no conceivable effort can eliminate all such problems, efforts like Microsoft’s Security Development Lifecycle can really help. I don’t know what Zoom did to ensure software security before, but I strongly suspect that they’re doing a lot more now.

Another class of problem involves deliberate features that were actually helpful when Zoom was primarily serving its intended market: enterprises. Take, for example, the ability of the host to mute and unmute everyone else on a call. I’ve been doing regular teleconferences for well over 25 years, first by voice and now by video. The three most common things I’ve heard are “Everyone not speaking, please mute your mic,” “Sorry, I was on mute,” and “Mute button!” I’ve also heard snoring and toilets flushing… In a work environment, giving the host the ability to turn microphones off and on isn’t spying; it’s a way to manage and facilitate a discussion in a setting where the usual visual and body language cues aren’t available.

The same rationale applies to things like automatically populating a directory with contacts, scraping LinkedIn data, etc.—it’s helping business communication, not spying on, say, attendees at a virtual religious service. You can argue about whether these are useful features or not; you can even say that they shouldn’t be done even in a business context—but the argument against them in a business context is much weaker than it is when talking about casual users who just want to chat online with their friends.

There is, though, a class of problems that worries me: security shortcuts in the name of convenience or usability. Consider the first widely known flaw in Zoom: a design decision that allowed “any website to forcibly join a user to a Zoom call, with their video camera activated, without the user’s permission.” Why did it work that way? It was intended as a feature:

As Zoom explained, changes implemented by Apple in Safari 12 that “require a user to confirm that they want to start the Zoom client prior to joining every meeting” disrupted that functionality. So in order to save users an extra click, Zoom installed the localhost web server as “a legitimate solution to a poor user experience problem.”

They also took shortcuts with initial installation, again in the name of convenience. I’m all in favor of convenience and usability (and in fact one of Zoom’s big selling points is how much easier it is to use than its competitors), but that isn’t a license to engage in bad security practices.

To its credit, Zoom has responded very well to criticisms and reports of flaws. Unlike more or less any other company, they’re now saying things like “yup, we blew it; here’s a patch”. (They also say that critics have misunderstood how they do encryption.) They’ve even announced a plan for a thorough review, with outside experts. There are still questions about some system details, but I’m optimistic that things are heading in the right direction. Still, it’s the shortcuts that worry me the most. Those aren’t just problems that they can fix, they make me fear for the attitudes of the development team towards security. I’m not convinced that they get it—and that’s bad. Fixing that is going to require a CISO office with real power, as well as enough education to make sure that the CISO doesn’t have to exercise that power very often. They also need a privacy officer, again with real power; many of their older design decisions seriously impact privacy.

I’ve used Zoom in a variety of contexts for several years, and mostly like its functionality. But the security and privacy issues are real and need to be fixed. I wish them luck.

Here is my set of blog posts on Zoom and Zoom security.

Zoom Cryptography and Authentication Problems

In my last blog post about Zoom, I noted that the company says “that critics have misunderstood how they do encryption.” New research from Citizen Lab shows that not only were the critics correct, Zoom’s design shows that they’re completely ignorant about encryption. When companies roll their own crypto, I expect it to have flaws. I don’t expect those flaws to be errors I’d find unacceptable in an introductory undergraduate class, but that’s what happened here.

Let’s start with the egregious flaw. In this particular context, it’s probably not a real threat—I doubt if anyone but a major SIGINT agency could exploit it—but it’s just one of those things that you should absolutely never do: use the Electronic Code Book (ECB) mode of encryption for messages. Here’s what I’ve told my students about ECB:

- Direct use of a block cipher is inadvisable

- Enemy can build up “code book” of plaintext/ciphertext equivalents

- Direct use of the block cipher [is]

- Used primarily to transmit encrypted keys

- Very weak if used for general-purpose encryption; never use it for a file or a message.

- Attacker can build up codebook; no semantic security

The more important error isn’t that egregious, but it does show a fundamental misunderstanding of what “end-to-end encryption” means. The definition from a recent Internet Society brief is a good one:

End-to-end (E2E) encryption is any form of encryption in which only the sender and intended recipient hold the keys to decrypt the message. The most important aspect of E2E encryption is that no third party, even the party providing the communication service, has knowledge of the encryption keys.

As shown by Citizen Lab, Zoom’s code does not meet that definition:

By default, all participants’ audio and video in a Zoom meeting appears to be encrypted and decrypted with a single AES-128 key shared amongst the participants. The AES key appears to be generated and distributed to the meeting’s participants by Zoom servers.

Zoom has the key, and could in principle retain it and use it to decrypt conversations. They say they do not do so, which is good, but this clearly does not meet the definition [emphasis added]: “no third party, even the party providing the communication service, has knowledge of the encryption keys.”

Doing key management—that is, ensuring that the proper parties, and only the proper parties, know the key—is a hard problem, especially in a multiparty conversation. At a minimum, you need assurance that someone you’re talking to is indeed the proper party, and not some interloper or eavesdropper. That in turn requires that anyone who is concerned about the security of the conversation has to have some reason to believe in the other parties’ identities, whether via direct authentication or because some trusted party has vouched for them. On today’s Internet, when consumers log on to a remote site, they typically supply a password or the like to authenticate themselves, but the site’s own identity is established via a trusted third party known as a certificate authority.

Zoom can’t quite do identification correctly. You can have a login with Zoom, and meeting hosts generally do, but often, participants do not. Again, this is less of an issue in an enterprise setting, where most users could be registered, but that won’t always be true for, say, university or school classes. Without participant identification and authentication, it isn’t possible for Zoom to set up a strongly protected session, no matter how good their cryptography; you could end up talking to Boris or Natasha when you really wanted to talk confidentially to moose or squirrel.

You can associate a password or PIN with a meeting invitation, but Zoom knows this value and uses it for access control, meaning that it’s not a good enough secret to use to set up a secure, private conference.

Suppose, though, that all participants are strongly authenticated and have some cryptographic credential they can use to authenticate themselves. Can Zoom software then set up true end-to-end encryption? Yes, it can, but it requires sophisticated cryptographic mechanisms. Zoom manifestly does not have the right expertise to set up something like that, or they wouldn’t use ECB mode or misunderstand what end-to-end encryption really is.

Suppose that Zoom wants to do everything right. Could they retrofit true end-to-end encryption, done properly? The sticking point is likely to be authenticating users. Zoom likes to outsource authentication to its enterprise clients, which is great for their intended market but says nothing about the existence of cryptographic credentials.

All that said, it might be possible to use a so-called Password-authenticated key exchange (PAKE) protocol to let participants themselves agree on a secure, shared key. (Disclaimer: many years ago, a colleague and I co-invented EKE, the first such scheme.) But multiparty PAKEs are rather rare. I don’t know if there are any that are secure enough and would scale to enough users.

So: Zoom is doing its cryptography very badly, and while some of the errors can be fixed pretty easily, others are difficult and will take time and expertise to solve.


Trusting Zoom?

Since the world went virtual, often by using Zoom, several people have asked me if I use it, and if so, do I use their app or their web interface. If I do use it, isn’t this odd, given that I’ve been doing security and privacy work for more than 30 years and “everyone” knows that Zoom is a security disaster?

To give too short an answer to a very complicated question: I do use it, via both Mac and iOS apps. Some of my reasons are specific to me and may not apply to you; that said, my overall analysis is that Zoom’s security, though not perfect, is quite likely adequate for most people. Security questions always have situation-specific answers; my students will tell you that my favorite response is “It depends!” This is no different. Let me explain my reasoning.

I’ll start by quoting from Thinking Security, which I wrote about four years ago.

All too often, insecurity is treated as the equivalent of being in a state of sin. Being hacked is not perceived as the result of a misjudgment or of being outsmarted by an adversary; rather, it’s seen as divine punishment for a grievous moral failing. The people responsible didn’t just err; they’re fallen souls to be pitied and/or ostracized. And of course that sort of thing can’t happen to us, because we’re fine, upstanding folk who have the blessing of the computer deity—$DEITY, in the old Unix-style joke—of our choice.

and

There’s one more point to consider, and it goes to the heart of this book’s theme: is living disconnected worth it? Employees have laptops and network connections because there’s a business need for such things, and not just to provide them with recreation in lonely hotel rooms or a cheap way to make a video call home. As always, the proper question is not “is Wi-Fi access safe?”; rather, it’s “is the benefit to the business from having connectivity greater than or less than the incremental risk?”

Let me put this another way.

- Are the benefits from me using Zoom (or any other conferencing system; much of this blog post is, as you will see, independent of the particular one involved) greater than the risks?

- What can Zoom itself do to improve security?

- If there are risks, what can I or my university do to minimize those risks?

The first part of the question is relatively easy. Part of my job is to teach; part of my employer’s mission is to provide instruction. Given the pandemic, in-person teaching is unduly risky; some form of “distance learning” is the only choice we have right now, and one-way video just isn’t the same. In other words, the benefit of using Zoom is considerable, and I have an ethical obligation to do it unless the risks to me, to my students, or to the university are greater.

Are there risks? Sure, as I discuss below, but at least for teaching, those risks are much less than providing no instruction at all. At most, I—really, the university—should switch to a different platform, but of course that platform would require its own security analysis.

The platform question is a bit harder, but the analysis is the same. My personal perception, given what I’m now teaching (Computer Security II, a lecture course) and how I teach (I generally use PDF slides), is that I can do a better job on my Mac, where I can use one screen for my slides and another to see my students via their own cameras. In other words, if my ethical obligation is to teach and to teach as well as I can, and if I feel that I teach better when using the Mac app, then that’s what I should do unless I feel that the risks are too great.

In other words: I generally don’t use Zoom on iOS for teaching, because I don’t think it works as well. I have done so occasionally, where I wanted to use a stylus to highlight parts of diagrams—but I also had it running on my Mac to get the second view of the class.

My reasoning for not using the browser option is a bit different: I don’t trust browsers enough to want one to have the ability to get at my camera or microphone. I (of course) use my browser constantly, and the best way to make sure that my browser doesn’t give the wrong sites access to my camera or mic is to make sure that the browser itself has no access. Can a browser properly ensure that only the right sites have such access, despite all the complexities of IFRAMEs, JavaScript, cross-site scripting problems, and more? I’m not convinced. I think that browsers can be built that way, but I’m not convinced that any actually are.

Some will argue that the Chrome browser is more secure than more or less any other piece of code out there, including especially the Zoom app. That may very well be—Google is good at security. But apart from my serious privacy reservations, flaws in the Zoom app put me at risk while using Zoom, while flaws in a browser put me at risk more or less continuously.

Note carefully that my decision to use the Zoom app on a Mac is based on my classroom teaching responsibilities—and I regard everything I say in class as public and on the record. (Essentially all of my class slides are posted on my public web site.) There are other things I do for my job where the balance is a bit different: one-on-one meetings with research and project students, private meetings in my roles both as a teacher and as an advisor, faculty meetings, promotion and hiring meetings, and more. The benefits of video may be much less, and the subjects being discussed are often more sensitive, whether because the research is cutting edge and hence potentially more valuable, or because I’m discussing exceedingly sensitive matters. (Anyone who has taught for any length of time has heard these sorts of things from students. For those who haven’t—imagine the most heart-wrenching personal stories you can think of, and then realize that a student has to relate them to a near-stranger who is in a position of authority. And I’ve heard tragic things that my imagination isn’t good enough to have come up with.) Is Zoom (or some other system) secure enough for this type of work?

In order to answer these questions more precisely, though, I have to delve into the specifics of Zoom. Are Zoom’s weaknesses sufficiently serious that my university—and I—should avoid it? Again, this is a question that can’t be answered purely abstractly. Let me draw on some definitions from a National Academies report that I worked on:

A “vulnerability” is an error or weakness in the design, implementation, or operation of a system. An “attack” is a means of exploiting some vulnerability in a system. A “threat” is an adversary that is motivated and capable of exploiting a vulnerability.

There are certainly vulnerabilities in Zoom, as I and others have discussed. But is there a threat? That is, is there an adversary who is both capable and motivated?

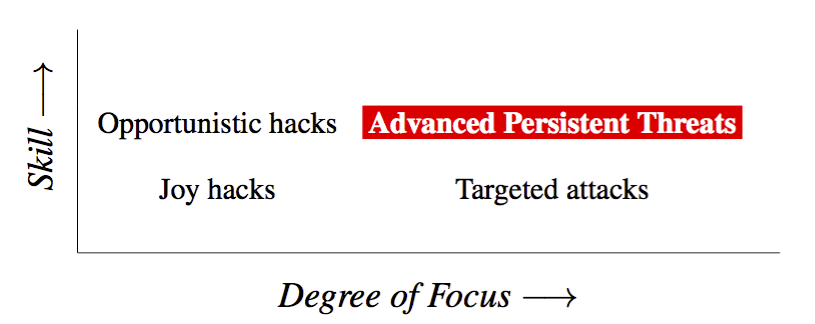

In Thinking Security, I addressed that question by means of this diagram:

Let’s first consider what I called an egregious flaw in Zoom’s cryptography. In my judgment (and as I remarked in that blog post), “I doubt if anyone but a major SIGINT agency could exploit it”. That’s already a substantial part of the answer: I’m not worried about the Andromedan cryptanalysts trying to learn about my students’ personal tragedies. Yes, I suppose in theory I could have as a student someone who is a person of interest to some foreign intelligence agency and this person has a problem that they would tell to me and that agency would be interested enough in blackmailing this student that they’d go to the trouble of cryptanalyzing just the right Zoom conversation—but I don’t believe it’s at all likely and I doubt that you do. In other words, this would require a highly targeted attack by a highly skilled attacker. If it were actually a risk, I suspect that I’d know about it in advance and have that conversation via some other mechanism.

The lack of end-to-end encryption is a more serious flaw. To recap, the encryption keys are centrally generated by Zoom, sometimes in China; these keys are used to encrypt conversations. (Zoom has replied that the key generation in China was an accident and shouldn’t have been possible.) Anyone who has access to those keys and to the ciphertext can listen in. Is this a threat? That is, is there an adversary who is both motivated to and capable of exploiting the vulnerability? That isn’t clear.

It’s likely that any major intelligence agency is capable of getting the generated keys. They could probably do it by legal process, if the keys are generated in their own country; alternatively, they could hack into either the key generation machine or any one of the endpoints of the call—very few systems are hardened well enough to resist that sort of attack. Of course, the latter strategy would work just as well for any conceivable competitor to Zoom—a weak endpoint is a weak endpoint. There may, under certain circumstances, be an incremental risk if the hosting government can compel production of a key, but this is still a targeted attack by a major enemy. This is only a general technical threat if some hacker group has continuous access to all of the keys generated by Zoom’s servers. That’s certainly possible—companies as large as Marriott and Nortel have been victimized for years—but again, this is the product of a powerful enemy.

There’s another part of the puzzle for a would-be attacker who wants to exploit this flaw: they need access to the target’s traffic. There are a variety of ways that that can be done, ranging from the trivial—the attacker is on-LAN with the target—to the complicated, e.g., via BGP routing attacks. Routing attacks don’t require a government-grade attacker, but they’re also well up there on the scale of abilities.

What it boils down to is this: exploiting the lack of true end-to-end encryption in Zoom is quite difficult, since you need access to both the per-meeting encryption key and the traffic. Zoom itself could probably do it, but if they were that malicious you shouldn’t trust their software at all, no matter how they handled the crypto.

There’s one more point. Per the Citizen Lab report, “the mainline Zoom app appears to be developed by three companies in China” and “this arrangement could also open up Zoom to pressure from Chinese authorities.” Conversations often go through Zoom’s own servers; this means that, at least if you accept that premise, the Chinese government often has access to both the encryption key and the traffic. Realistically, though, this is probably a niche threat—virtually all of the new Zoom traffic is uninteresting to any intelligence agency. (Forcing military personnel to sit through faculty meetings probably violates some provisions of the Geneva Conventions. In fact, I’m surprised that public universities can even hold faculty meetings, since as state actors they’re bound by the U.S. constitutional prohibition against cruel and unusual punishment.) In other words: if what you’re discussing on Zoom is likely to be of interest to the Chinese government, and if the assertions about their power to compel cooperation from Zoom are correct, there is a real threat. Nothing that I personally do would seem to meet that first criterion—I try to make all of my academic work public as soon as I can—but there are some plausible university activities, e.g., development of advanced biotechnology, where there could be such governmental interest.

There’s one more important point, though: given Zoom’s architecture, there’s an easy way around the cryptography for an attacker: be an endpoint. As I noted in my previous blog post about Zoom,

At a minimum, you need assurance that someone you’re talking to is indeed the proper party, and not some interloper or eavesdropper. That in turn requires that anyone who is concerned about the security of the conversation has to have some reason to believe in the other parties’ identities, whether via direct authentication or because some trusted party has vouched for them.

That is, simply join the call. Yes, everyone on the call is supposed to be listed, but for many Zoom calls, that’s simply not effective. Only a limited number of participants’ names are shown by default; if the group is large enough or if folks don’t scroll far enough, the addition will go unnoticed. If, per the above, Zoom is malicious or has been pressured to be malicious, the extra participant won’t even be listed. (Or extra participants—who’s to say that there aren’t multiple developers in thrall to multiple intelligence agencies? Maybe there’s even a standard way to list all of the intercepting parties?

```c
struct do_not_list {
    const char *username;
    const char *agency;
} secret_users[] = {
    {"Aldrich Ames", "CIA"},
    {"Sir Francis Walsingham", "GCHQ"},
    {"Clouseau", "DGSE"},
    {"Yael", "Mossad"},
    {"Achmed", "IRGC"},
    {"Vladimir", "KGB"},
    {"Bao", "PLA"},
    {"Natasha", "Pottsylvania"},
    /* … */
    {NULL, NULL}
};
```

The possibilities are endless…)

Let me stress that properly authenticating users is very important even if the cryptography were perfect. Let’s take a look at a competing product, Cisco’s Webex. They appear to handle the encryption properly:

Cisco Webex also provides End-to-End encryption. With this option, the Cisco Webex cloud does not decrypt the media streams, as it does for normal communications. Instead it establishes a Transport Layer Security (TLS) channel for client-server communication. Additionally, all Cisco Webex clients generate key pairs and send the public key to the host’s client. The end-points generate key pairs; the host sends the session key to those end-points and only those end-points. The host generates a symmetric key using a Cryptographically Secure Pseudo-Random Number Generator (CSPRNG), encrypts it using the public key that the client sends, and sends the encrypted symmetric key back to the client. The traffic generated by clients is encrypted using the symmetric key. In this model, traffic cannot be decoded by the Cisco Webex server. This End-to-End encryption option is available for Cisco Webex Meetings and Webex Support.

Note what this excerpt does not say: that the host has any strong assurance of the identity of these end-points. Do they authenticate to Cisco or to the host? The difference is crucial. Webex supports single sign-on, where users authenticate using their corporate credentials and no password is ever sent to Cisco—but it isn’t clear to me if this authentication is reliably sent to the host, as opposed to Cisco. I hope that the host knows, but the text I quoted says “all Cisco Webex clients generate key pairs”, and doesn’t say “all clients send the host their corporate-issued certificate”. I simply do not know, and I would welcome clarification by those who do know.

Though it’s not obvious, the authentication problem is related to what is arguably the biggest real-world problem Zoom has: Zoombombing. People can zoombomb because it’s just too easy to find or guess a Zoom conference ID. Zoom doesn’t help things; if you use their standard password option, an encoded form of the password is included in the generated meeting URL. If you’re in the habit of posting your meeting URLs publicly—my course webpage software generates a subscribable calendar for each lecture; I’d love to include the Zoom URL in it—having the password in the URL vitiates any security from that measure. Put another way, Zoom’s default password authentication increases the size of the namespace of Zoom meetings from $10^9$ to $10^{14}$. This isn’t a trivial change, but it’s also not the same as strong authentication. Zoom lets you require that all attendees be registered, but if there’s an option by which I can specify the attendee list I haven’t seen it. To me, this is the single biggest practical weakness in Zoom, and it’s probably fixable without much pain.

To sum up: apart from Zoombombing, the architectural problems with Zoom are not serious for most people. Most conversations at most universities are quite safe if carried by Zoom. A small subset might not be safe, though, and if you’re a defense contractor or a government agency you might want to think twice, but that doesn’t apply to most of us.

That the threat is minimal, absent malign activity by one or more governments, does not mean that Zoom and its clients can’t do things better. First and foremost, Zoom needs to clean up its act when it comes to its code. The serious security and privacy bugs we’ve seen, per my blog post the other day and a Rapid7 blog post, boil down to carelessness and/or a desire to make life easier for users, even at a significant cost in security. This is unacceptable, Zoom should have known better, and they have to fix it. Fortunately, I suspect that most of those changes will be all but invisible to users.

Second, the authentication and authorization model has to be fixed. Zoom has to support lists of authorized users, including shortcuts like "*@columbia.edu". (If it’s already there, they have to make the option findable. I didn’t see it—and I’m not exactly a technically naive user…) Ideally, there will also be some provision for strong end-to-end authentication.

Third, the cryptography has to be fixed. I said enough about that the other day; I won’t belabor the point.

Finally, Webex has the proper model for scalable end-to-end encryption. It won’t work properly without proper, end-to-end, authentication, but even the naive model will eliminate a lot of the fear surrounding centralized key distribution.

In truth, I’d prefer multi-party PAKE, at least as an option, but it’s not clear to me that suitably vetted algorithms exist. PAKE would permit secure cryptography even without strong authentication, as long as all of the participants shared a simple password. In the original PAKE paper, Mike Merritt and I showed how to build a public phone where people could have strong protection against wiretappers, even when they used something as simple as a shared 4-digit PIN.

There are things that Zoom’s customers can do today. Zoom has stated that

an on-premise solution exists today for the entire meeting infrastructure, and a solution will be available later this year to allow organizations to leverage Zoom’s cloud infrastructure but host the key management system within their environment. Additionally, enterprise customers have the option to run certain versions of our connectors within their own data centers if they would like to manage the decryption and translation process themselves.

If you can, do this. If you host your own infrastructure, attackers will have a much harder time—you can monitor your own site for nasty traffic, and perhaps (depending on the precise requirements from Zoom) you can harden the system more. Besides, at least for Zoom meetings where everyone is on-site, routing attacks will be much harder.

Finally, enable the security options that are there, notably meeting passwords. They’re not perfect, especially if your users post URLs with embedded passwords, but they’re better than nothing.

One last word: what about Zoom’s competitors? Should folks be using them instead? One reason Zoom has succeeded so well is user friendliness. In the last few weeks, I’ve had meetings over Zoom, Skype, FaceTime, and Webex. From a usability perspective, Zoom was by far the best—and again, I’m not technically naive, and my notion of an easy-to-use system has been warped by more than 40 years of using ed and vi for text editing. To give just two examples, recently a student and I spent several minutes trying to connect using Skype; we did not succeed. I’ve had multiple failures using FaceTime; besides, it’s Apple-only and (per Apple) only supports 32 users on a call. There’s also the support issue, especially for universities where the central IT department can’t dictate what platforms are in use. Zoom supports more or less any platform, and does a decent job on all of them. Perhaps one of its competitors can offer better security and better usability—but that’s the bar they have to clear. (I mean, it’s 2020 and someone I know had to install Flash(!) for some online sessions. Flash? In 2020?)

I’d really like Zoom to do better. To its credit, the company is not reacting defensively or with hostility to these reports. Instead, it seems to be treating these reports as constructive criticism, and is trying to fix the real problems with its codebase while pointing out where the critics have not gotten everything right. I wish that more companies behaved that way.


Is Zoom's Server Security Just as Vulnerable as the Client Side?

My last several blog posts have been about Zoom security. I concluded that

the architectural problems with Zoom are not serious for most people. Most conversations at most universities are quite safe if carried by Zoom. A small subset might not be safe, though, and if you’re a defense contractor or a government agency you might want to think twice, but that doesn’t apply to most of us.

But I’ve been thinking more about this caveat.

Still, it’s the shortcuts that worry me the most. Those aren’t just problems that they can fix, they make me fear for the attitudes of the development team towards security. I’m not convinced that they get it—and that’s bad. Fixing that is going to require a CISO office with real power, as well as enough education to make sure that the CISO doesn’t have to exercise that power very often.

That worry has grown in light of the investigation that Mudge reported. In a nutshell, the Zoom programmers made elementary security errors when coding, and did not use protective measures that compiler toolchains make available.

It’s not a great stretch to assume that similar flaws afflict their server implementations. While Mudge noted that Zoom’s Windows and Mac clients are (possibly accidentally) somewhat safer than the Linux client, I suspect that their servers run on Linux. Were they written with a similar lack of attention to security? Were the protective measures similarly ignored? I have no access to their server software, but it’s the way to bet, and that’s worrisome: if I’m right, Zoom’s servers are very vulnerable. This provides an easy denial of service attack, and an easy mechanism for an attacker to go after Zoom clients that connect via the server.

My bottom line for most people doesn’t change: it’s still safe enough for most people, though that could change if someone decided to use Zoom to inject ransomware or cryptocurrency miners into many of the planet’s end systems. But the risks are definitely higher.

Zoom has formed a CISO council to advise them on security. These people are almost certainly not the folks who will carry out the detailed code and process audits. But I hope they’ll strongly recommend an urgent look at the server side.


In Memoriam: Joel Reidenberg

Yesterday, the world lost a marvelous human being, Joel Reidenberg: a scholar, a pioneer in the tech policy/tech law area, a mentor, a friend, and—most of all—a mensch.

I first met Joel around 1995 or 1996, when he spent his sabbatical in my department at Bell Labs Research. Amusingly enough, he was a neighbor of mine then; he lived around the corner, though we had not met. I wasn’t doing much in the way of law and policy at the time, but he somehow realized that I was interested—and when I moved to academe in 2005, he immediately started inviting me to his roundtables and seminars. (Ironically enough, one of the earliest invitations I received from him was to a 2006 address on network neutrality by someone who was out of the public limelight at the time, one William Barr…)

Joel seemed to know everyone. Through him, I got to meet people I’d never otherwise have encountered, including appellate court judges and major public figures. But again, he knew everyone—and that was because he was happy to meet anyone. I could and did bring my students to his roundtables, which were normally for considerably more senior participants, but if I told him they had interesting and relevant things to say, he was glad to have them attend. He judged people for who they were, not for their rank or position.

He was a scholar, and knew the field thoroughly. I once mentioned to him a fairly obscure Pennsylvania court case from 25 years earlier, and he knew the citation to it. I don’t think I ever mentioned any reference to him that he wasn’t already familiar with. Yes, I of course knew the technical details better, but he probably knew technology better than I know law.

I learned from him. Even when he was ill, he had many useful comments on my legal writing, up to and including on a draft paper I’m working on right now. He also pointed me to two important and relevant papers of his—and a co-author on one of those was a former PhD student of mine, someone Joel had first met at one of the roundtables I mentioned. I cited an old book on privacy; Joel put it in its proper context and pointed me at other pioneers, people I’d never heard of who were doing prescient privacy work in the 1960s. I spent my last sabbatical, the 2018-2019 academic year, at Fordham Law in the hope that I could work closely with him, and learn from him.

Joel was a pioneer. As many others have noted, he was among the first to realize that software itself effectively creates laws. In a 1996 article, Lex Informatica, he wrote that “the set of rules for information flows imposed by technology and communication networks form a ‘Lex Informatica’ that policymakers must understand, consciously recognize, and encourage.” This insight is even more true today.

His influence continues. Just 10 days ago, I referred someone to him. Just today, I saw a news story I wanted to send to him, to get his opinion. I’m sure that that will continue.

Curiously enough for a privacy advocate, Joel was very open about his illness. We never discussed his odds, though I’m sure he knew they weren’t good. But he was always cheerful and always joking about it. In my last email exchange with him, I teased him that he was “practicing social distancing and masks and gloves before it was cool”. He replied in kind, saying “I guess I was avante garde on health issues too. 😃”, even though in the same note he mentioned that he was at the start of a rough period after a second bone marrow transplant.

I’ll miss Joel, his family will miss him, and the broader community will miss him.

Blessed is the true judge — ברוך דיין אמת.

The Price of Lack of Clarity

As anyone reading this blog assuredly knows, the world is in the grip of a deadly pandemic. One way to contain it is contact-tracing: finding those who have been near infected people, and getting them to self-quarantine. Some experts think that because newly infected individuals themselves become contagious so rapidly, we need some sort of automated scheme. That is, traditional contact tracing is labor-intensive and time-consuming, and time is something we don’t have here. The only solution, they say, is to automate it, probably by using the cell phones we all carry.

Naturally, privacy advocates (and I’m one) are concerned. Others, though, point out that we’ve been sharing our location with advertisers; why would we not do it to save lives? Part of the answer, I think, is that people know they’ve been misled, so they’re more suspicious now.

As Joel Reidenberg and his colleagues have pointed out, privacy policies are ambiguous, perhaps deliberately so. One policy they analyzed said

- “Depending on how you choose to interact with the Barnes & Noble enterprise, we may collect personal information from you…”

- “We may collect personal information and other information about you from business partners, contractors and other third parties.”

- “We collect your personal information in an effort to provide you with a superior customer experience and, as necessary, to administer our business”

“May”? Do you collect it or not? “As necessary”? “To administer”? What do those mean?

The same lack of clarity is true of location privacy policies. The New York Times showed that some apps that legitimately need location data are actually selling it, without making that clear:

The Weather Channel app, owned by an IBM subsidiary, told users that sharing their locations would let them get personalized local weather reports. IBM said the subsidiary, the Weather Company, discussed other uses in its privacy policy and in a separate “privacy settings” section of the app. Information on advertising was included there, but a part of the app called “location settings” made no mention of it.

Society is paying the price now. The lack of trust built up by 25 years of opaque web privacy policies is coming home to roost. People are suspicious of what else will be done with their data, however important the initial collection is.

Can this be salvaged? I don’t know; trust, once forfeited, is awfully hard to regain. At a minimum, there need to be strong statutory guarantees:

- The information collected will only be used for contact tracing;

- It will not be available to anyone else, including law enforcement, for any reason whatsoever;

- There are both criminal and civil penalties for unauthorized collection or use of such data, e.g., by a store;

- There is a private right of action as well as city, state, and Federal enforcement;

- Class action suits to enforce these guarantees are permitted, regardless of terms and conditions requiring arbitration.

I don’t know if even this will suffice—as I said, it’s hard to regain trust. But passing a strong Federal privacy law might make things easier when the next pandemic hits—and from what I’ve read, that’s only a matter of time.

(There’s a lot more to be said on this topic, e.g., should a tracking app be voluntary or mandatory? The privacy advocate in me says voluntary; the little knowledge I have of epidemiology makes me think that very high uptake is necessary to gain the benefits.)
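Why uptake matters so much can be shown with a little arithmetic. The sketch below rests on two simplifying, illustrative assumptions: a phone-based scheme records an encounter only if both people are running the app, and each person adopts it independently of whom they meet. Under those assumptions the fraction of encounters that get logged is the square of the uptake rate; encounter_coverage is a hypothetical name, not part of any real contact-tracing system.

```python
def encounter_coverage(uptake: float) -> float:
    """Fraction of pairwise encounters logged when both parties must run the app.

    Assumes each person independently has the app with probability `uptake`.
    """
    if not 0.0 <= uptake <= 1.0:
        raise ValueError("uptake must be between 0 and 1")
    # Both sides of the encounter need the app, so the probabilities multiply.
    return uptake * uptake


for rate in (0.2, 0.4, 0.6, 0.8):
    print(f"uptake {rate:.0%} -> encounters covered {encounter_coverage(rate):.0%}")
```

Under this model, even 60% uptake would log only about 36% of encounters, which is why voluntary adoption and epidemiological effectiveness pull in different directions.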

Software Done in a Hurry

Not at all to my surprise, people are reporting trouble with an online site for applying for loans under the Paycheck Protection Program (PPP). This is software that was built very quickly but is expected to cope with enormous loads. That’s a recipe for disaster; it would have been more surprising if there had been no problems.

We saw this happen with the sign-up for Obamacare; I wrote similar things then. Software development is hard; rapidly building any system, let alone one that has to run at scale, was, is, and will likely remain difficult. That’s independent of which political party is in charge at the time.

That we can’t do software engineering perfectly doesn’t mean that it can’t be done better. Healthcare.gov was rescued fairly quickly, when the administration brought in a management team that understood software engineering. The same applies here: competent management matters.