Homework2 Lock it up

NOTE: Starting from Homework 2, all homework is graded.

What do you need to submit in the end

At the end of the homework, you need to submit the following files

- A text file named

WRITTENthat is for the written problems READMEfile that explains what you did for the programming homework, including the test cases- All your source code for programming problems

General Instructions

Make sure you’ve commited all your changes

% cd homework

% git remote add upstream http://ds-git.cs.columbia.edu/sd2693/homework.git

% git fetch upstream

% git checkout hw2

Now start working on your questions

After you are done

% git push origin hw2

Written Problems ↩

- What is at-most-once delivery? In an actual implementation, how could you ensure that?

- What are stubs in an RPC implementation?

- Why are pointers (references) not usually passed as parameters to a Remote Procedure Call? References to what kinds of resources can be passed via RPC?

- Which of the following statements are true about the RPC system used in YFS? Give one line reasoning for your answer. a) Each RPC call is blocking. b) The rpcc class only supports the execution of a single client thread. c) If the rpcc libary issues two RPC calls, x followed by y, to the same server, then the server must handle x before y. d) The rpcs server creates a new thread to handle every incoming RPC request. e) The RPC library uses UDP messages for communicating RPC requests and replies.

- What are some of the error conditions we need to guard against in a distributed environment that we do not need to worry about in a local programming environment?

Programming Problems ↩

Introduction

In this series of homework, you will implement a fully functional distributed file server as described. For the system to work correctly, the yfs servers need a locking service to coordinate updates to the file system structures. In this lab, you’ll implement the lock service.

The core logic of the lock service is quite simple. It consists of 2 components, a lock client and a lock server, which communicate via RPCs. A client requests a specific lock from the lock server by sending an acquire request. The lock server grants the requested lock to one client at a time. When a client is done with the granted lock, it sends a release request to the server so the server can grant the lock to another client who also tried to acquire it in the past.

You will need also augment the provided RPC library to ensure at-most-once execution by eliminating duplicate RPC requests. Duplicate requests exist because the RPC system must re-transmit lost RPCs in the face of lossy network connections. Such re-transmissions often lead to duplicate RPC delivery when the original request turns out not to be lost, or when the server reboots.

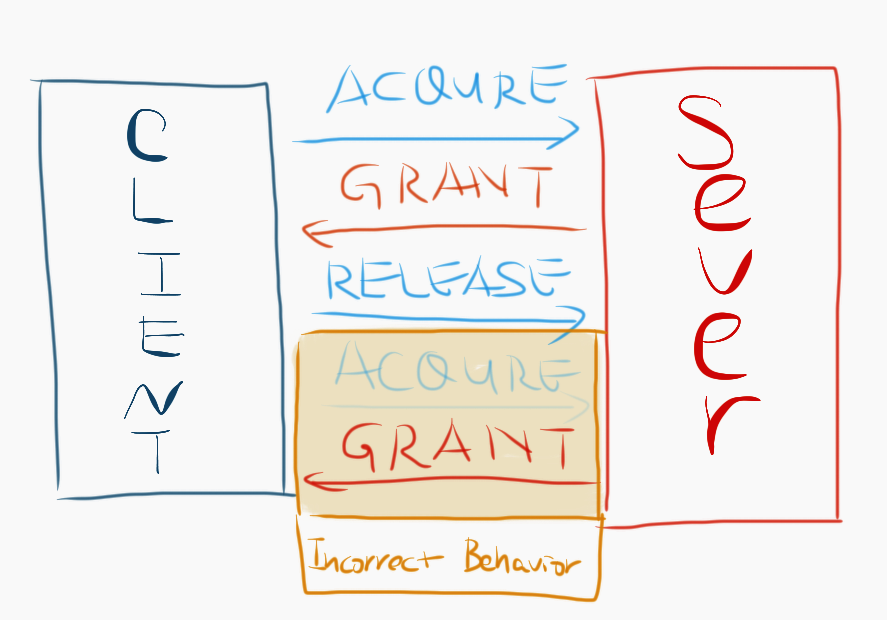

Duplicate RPC delivery, when not handled properly, often violates application semantics. Here’s a example of duplicate RPCs causing incorrect lock server behavior. A client sends an acquire request for lock x, server grants the lock, client releases the lock with a release request, a duplicate RPC for the original acquire request then arrives at the server, server grants the lock again, but the client will never release the lock again since the second acquire is just a duplicate. Such behavior is clearly incorrect.

Homework Overview

In this homework , we provide you with a skeleton RPC-based lock server, a lock client interface, a sample application that uses the lock client interface, and a tester. Now compile and start up the lock server, giving it a port number on which to listen to RPC requests. You’ll need to choose a port number that other programs aren’t using. For example:

% cd homework % make % ./lock_server 3772 ...(after client has run) stat request from clt 1450783179

Now open a second terminal on the same machine and run lock_demo, giving it the port number on which the server is listening:

% cd homework % ./lock_demo 3772 stat returned 0

lock_demo asks the server for the number of times a given lock has been acquired, using the stat RPC that we have provided. In the skeleton code, this will always return 0. You can use it as an example of how to add RPCs. You don’t need to fix stat to report the actual number of acquisitions of the given lock yet, but you may if you wish.

The lock client skeleton does not do anything yet for the acquire and release operations; similarly, the lock server does not implement any form of lock granting or releasing. Your job in this homework is to implement these functions and the RPC protocol between the two processes.

Goals

1. Implement a correct lock server assuming a perfect underlying network.

In the context of a lock service, correctness means obeying this invariant: at any instance of time, there is at most one client holding a lock of a given name.

We will use the program lock_tester to check the correctness invariant, i.e. whether the server grants each lock just once at any given time, under a variety of conditions. You run lock_tester with the same arguments as lock_demo. A successful run of lock_tester (with a correct lock server) will look like this:

% ./lock_tester 3772 simple lock client acquire a release a acquire a release a acquire a acquire b release b release a test2: client 0 acquire a release a test2: client 2 acquire a release a . . . ./lock_tester: passed all tests successfully

If your lock server isn’t correct, lock_tester will print an error message. For example, if lock_tester complains “error: server granted a twice!”, the problem is probably that lock_tester sent two simultaneous requests for the same lock, and the server granted the lock twice (once for each request). A correct server would have sent one grant, waited for a release, and only then sent a second grant.

2. Augment the RPC library to guarantee at-most-once execution.

We simulate lossy networks on a local machine by setting the environmental variable RPC_LOSSY. If you can pass both the RPC system tester and the lock_tester, you have succeeded. Here’s a successful run of both testers:

% export RPC_LOSSY=0 % ./rpctest simple test . . . rpctest OK % killall lock_server % export RPC_LOSSY=5 % ./lock_server 3722 & % ./lock_tester 3772 simple lock client acquire a release a acquire a release a . . . ./lock_tester: passed all tests successfully

For this homework, your lock server and RPC augmentation must pass the both rpctest and lock_tester; you should ensure it passes several times in a row to guarantee there are no rare bugs. You should only make modifications on files rpc.{cc,h}, lock_client.{cc,h}, lock_server.{cc,h} and lock_smain.cc. We will test your code with with our own copy of the rest of the source files and testers. You are free to add new files to the directory as long as the Makefile compiles them appropriately, but you should not need to.

You do not have to worry about server failures or client failures, or security such as malicious clients releasing locks that they don’t hold yet.

Tips and Guides

If you are familiar with the language and the concept, you can implement whatever design you like as long as your implementation satisfies all requirements and passes the tester. For those who are still need a bit help, we have put forth here a recommendation.

1. Familiarize yourself with the RPC Library

The RPC library’s source code is in the subdirectory rpc/. lock_server uses it to create an RPC server object (rpcs), which listens on a chosen port and registers various RPC handlers1. lock_client creates a RPC client object (rpcc), connects to lock_server, and invokes RPC calls2.

Each RPC procedure is identified by a unique procedure number. We have defined the acquire and release RPC numbers you need in lock_protocol.h. Other RPC numbers defined there are for use in later homework.

You need to register handlers for these RPCs with the RPC server object.

Take a look at stat call implementation across lock_client and lock_server to learn how to write an RPC call.

All RPC procedures have a standard interface with x+1 (x < 6) arguments and an integer return value3. The last argument, a reference to an arbitary type, is always there so that a RPC handler can use it to return results4. The RPC handler also returns an integer status code. The convention is to return 0 for success and to return positive numbers otherwise for various errors. If the RPC fails at the RPC library (e.g.timeouts), the RPC client gets a negative return value instead. The various reasons for RPC failures at the RPC library are defined in rpc.h under rpc_const.

The RPC system must know how to convert arbitrary objects into a stream of bytes to transmit over the network and convert them back at the other end, also known as marshalling and unmarshalling. The RPC library has already provided marshall/unmarshall methods for standard C++ objects such as std::string, int, char5. If your RPC call need to inlude custom objects as arguments, then you must provide your own methods. You can complete this homework just with existing marshall/unmarshall methods. Note that these methods do not have any compile time type checking. Therefore, you need to be careful to make sure that the client-side’s RPC call function interface matches up with the corresponding server-side’s RPC handler function interface.

2. Implement the lock_server assuming a perfect network

The lock server can manage many distinct locks. Each lock is identified by an integer of type lock_protocol::lockid_t. The set of locks is open-ended: if a client asks for a lock that the server has never seen before, the server should create the lock and grant it to the client. When multiple clients simultaneously request for a given lock, the lock server must grant the lock to each client one at a time.

You need to modify the lock_server.{cc,h} to accept acquire/release RPCs from the lock client, and to keep track of the state of the locks.

On the server, a lock can be in one of two states:

- free: no clients own the client

- locked: some client owns the lock

The RPC handler for acquire first checks if the lock is locked, and if so, the handler blocks until the lock is free. When the lock is free, acquire changes the lock’s state to locked, and returns, which indicates that the client now has the lock. The value r returned by acquire is not used.

The handler for release changes the lock state to free, and notifies any threads that are waiting for the lock.

3. Implement the lock_client

The class lock_client is a client-side interface to the lock server. Modify lock_client.{cc,h} to add acquire and release functions. that are supposed to take care of sending and receiving RPCs. Multiple threads in the client program can use the same lock_client object and request the same lock name. See lock_demo.cc for an example of how an application uses the interface. Note that a basic requirement of the client interface is that lock_client::acquire must not return until it has acquired the requested lock.

4. Handling multi-thread concurrency

Both lock_client and lock_server’s functions will be invoked by multiple threads concurrently. On the lock server side, the RPC library keeps a thread pool and invokes the RPC handler using one of the idle threads in the pool. On the lock client side, many different threads might also call lock_client’s acquire and release functions simultaneously.

To protect access to shared data in the lock_client and lock_server, you need to use pthread mutexes. Please refer to the general tips for programming using threads. As seen from the suggested implementation plan, you also need to use pthread condition variables to synchronize the actions among multiple threads. Condition variables go hand-in-hand with the mutexes, please read pthread tutorial for more details on programming with pthreads.

For robustness, when using condition variables, it is recommended that when a thread that waited on a condition variable wakes up, it checks a boolean predicate associated with the wake-up condition. This protects from spurious wake-ups from the pthread_cond_wait and pthread_cond_timedwait functions. For example, the suggested logic described above lends itself to such an implementation (see how on the lock_client, a thread that wakes up checks the state of the lock.)

In this and later homework, we try to adhere to a simple (coarse-grained) locking convention: we acquire the subsystem/protocol lock at the beginning of a function and release it before returning. This convention works because we don’t require atomicity across functions, and we don’t share data structures between different subsystems/protocols. You will have an easier life by sticking to this convention.

5. Implement at-most-once delivery in RPC

After your lock server has passed lock_tester under a perfect network, enable RPC_LOSSY by typing export RPC_LOSSY=5, restart your lock_server and try lock_tester again. If you have implemented lock_server following the guide above, you will see the lock_tester fail (or hang indefinitely). See if you can find why lock_tester fails when re-transmissions cause duplicate RPC delivery.

The rpcc class handles the RPC client’s function. At its core lies the rpcc::call1 function, which accepts a marshalled RPC request for transmission to the RPC server. We can see that call1 attaches additional RPC fields to each marshalled request:

// add RPC fields before the RPC request data

req_header h(ca.xid, proc, clt_nonce_, srv_nonce_, xid_rep_window_.front());

req.pack_req_header(h);

What’s the purpose for each field in req_header? (Hint: many of them are going to help you implement at-most-once delivery.) After call1 has finished preparing the final RPC request, it sits in a while(1) loop to (repeatedly) update the timeout value for the next retransmission and waits for the corresponding RPC reply or timeout to happen. Also, if the underlying (TCP) connection to the server fails, rpcc automatically re-connects to the server again (in function get_refconn) in order to perform retransmissions.

The rpcs class handles the RPC server’s function. When the underlying connections have received a RPC request message, the function rpcs::got_pdu is invoked to dispatch the RPC request to a thread pool. The thread pool (class ThrPool) consists of a fixed number of threads that execute the rpcs::dispatch function to dispatch a RPC request to the registered RPC handler. The dispatch function extracts various RPC fields from the request. These fields include the RPC procedure number which is used to find the corresponding handler. Additionally, they also provide sufficient information for you to ensure the server can eliminate all duplicate requests.

Once you figure out the basic design for at-most-once delivery, go ahead and realize your implementation in rpc.cc (rpc.cc is the only file you should be modifying). Hints: you need to add code in two places, rpcs:add_reply to remember the RPC reply values and rpcs::checkduplicate_and_update to eliminate duplicate xid and update the appropriate information to help the server safely forget about certain received RPCs.

After you are done with step two, test your RPC implementation with ./rpctest and export RPC_LOSSY=0. Make sure ./rpctest passes all tests. Once your RPC implementation passes all these tests, test your lock server again in a lossy environment by restarting your lock_server and lock_tester after setting export RPC_LOSSY=5