HOGgles: Visualizing Object Detection Features

Carl Vondrick

Aditya Khosla

Hamed Pirsiavash

Tomasz Malisiewicz

Antonio Torralba

Massachusetts Institute of Technology

Oral presentation at ICCV 2013

We introduce algorithms to visualize feature spaces used by object detectors. The tools in this paper allow a human to put on "HOG goggles" and perceive the visual world as a HOG-based object detector sees it.

Check out this page for a few of our experiments, and read our paper for full details. Code is available to make your own visualizations.


Why did my detector fail?

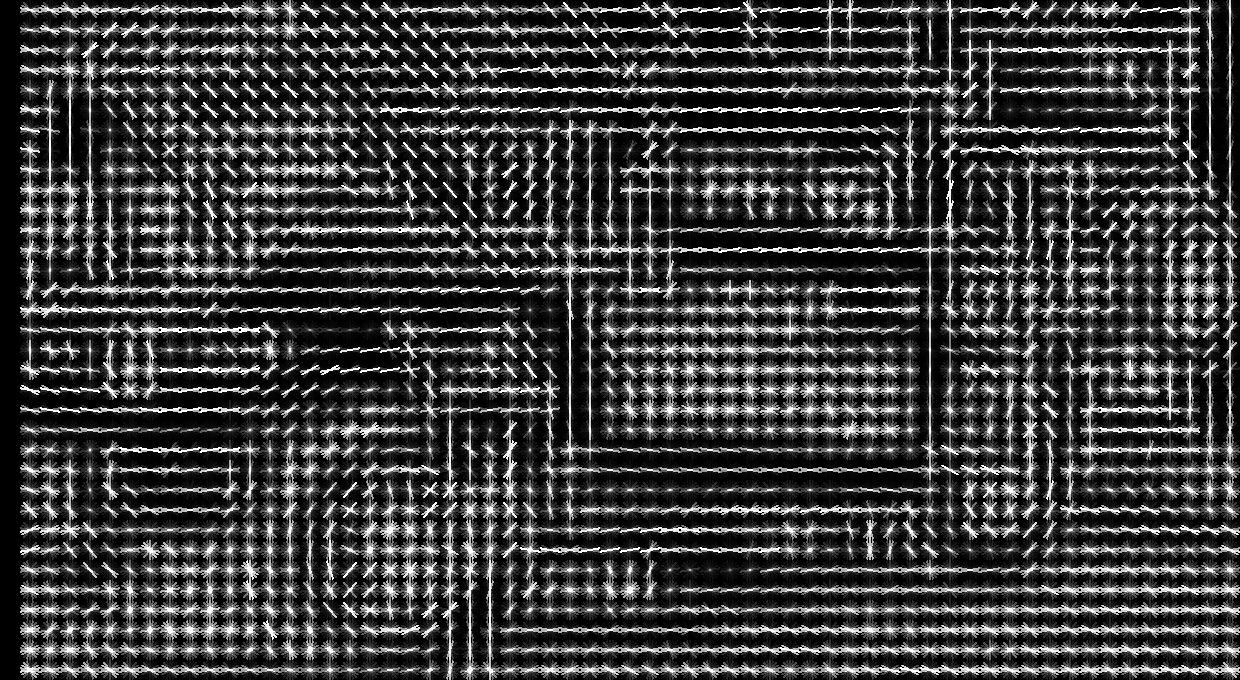

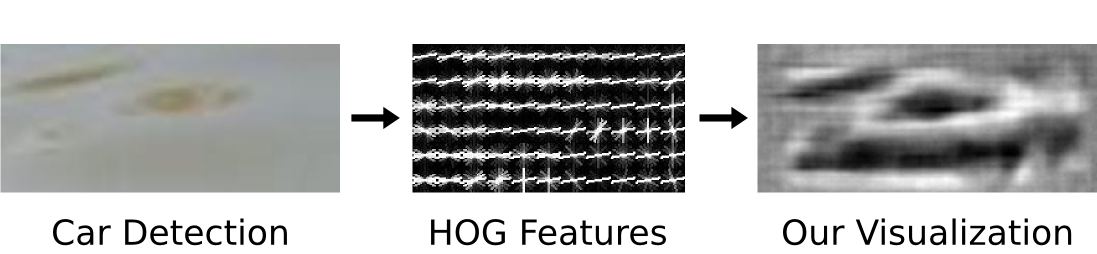

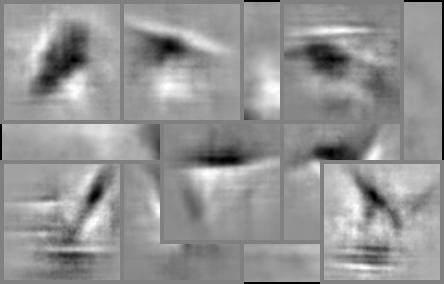

Below we show a high-scoring detection from an object detector with HOG features and a linear SVM classifier trained on PASCAL. Why does our detector think that sea water looks like a car?

Our visualizations offer an explanation. Below we show the output of our visualization on the HOG features for the false car detection. It reveals that, while there are clearly no cars in the original image, there is a car hiding in the HOG descriptor.

HOG features see a slightly different visual world than humans do, and by visualizing this space, we can gain a more intuitive understanding of our object detectors.
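Why can sea water fire a car detector? A linear SVM never sees pixels. It scores each window of the HOG feature map as a dot product w · x + b with its learned template. The Python sketch below assumes a feature map stored as nested lists indexed [row][col][orientation bin]. The helper names `window_score` and `best_detection` are hypothetical. The sketch slides a template over the map and returns the highest-scoring window. Any region whose gradient pattern correlates with the template scores highly, whatever produced that pattern in the image.

```python
from typing import List, Optional, Tuple

# Assumed layout: feature_map[row][col][bin], one orientation histogram per HOG cell.
FeatureMap = List[List[List[float]]]


def window_score(feat: FeatureMap, weights: FeatureMap, bias: float, r: int, c: int) -> float:
    """Linear SVM score w . x + b for the window whose top-left cell is (r, c)."""
    total = bias
    for i, w_row in enumerate(weights):
        for j, w_cell in enumerate(w_row):
            x_cell = feat[r + i][c + j]
            total += sum(w * x for w, x in zip(w_cell, x_cell))
    return total


def best_detection(feat: FeatureMap, weights: FeatureMap, bias: float) -> Optional[Tuple[float, int, int]]:
    """Slide the template over every valid position; return (score, row, col) of the best window."""
    rows, cols = len(feat), len(feat[0])
    h, w = len(weights), len(weights[0])
    best: Optional[Tuple[float, int, int]] = None
    for r in range(rows - h + 1):
        for c in range(cols - w + 1):
            s = window_score(feat, weights, bias, r, c)
            if best is None or s > best[0]:
                best = (s, r, c)
    return best


if __name__ == "__main__":
    # Toy 3x3 map with a single orientation bin; the bottom-right 2x2 cells match the template.
    feat = [[[0.0], [0.0], [0.0]],
            [[0.0], [1.0], [1.0]],
            [[0.0], [1.0], [1.0]]]
    template = [[[1.0], [1.0]],
                [[1.0], [1.0]]]
    print(best_detection(feat, template, bias=-1.0))  # (3.0, 1, 1)
```

A real detector also searches over an image pyramid and applies non-maximum suppression. Both are omitted here to keep the scoring rule visible.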

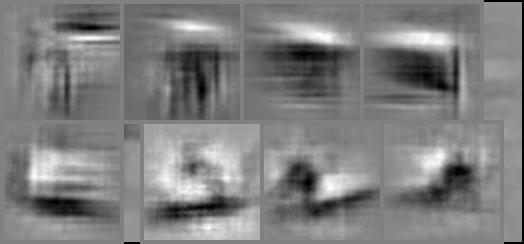

Visualizing Top Detections

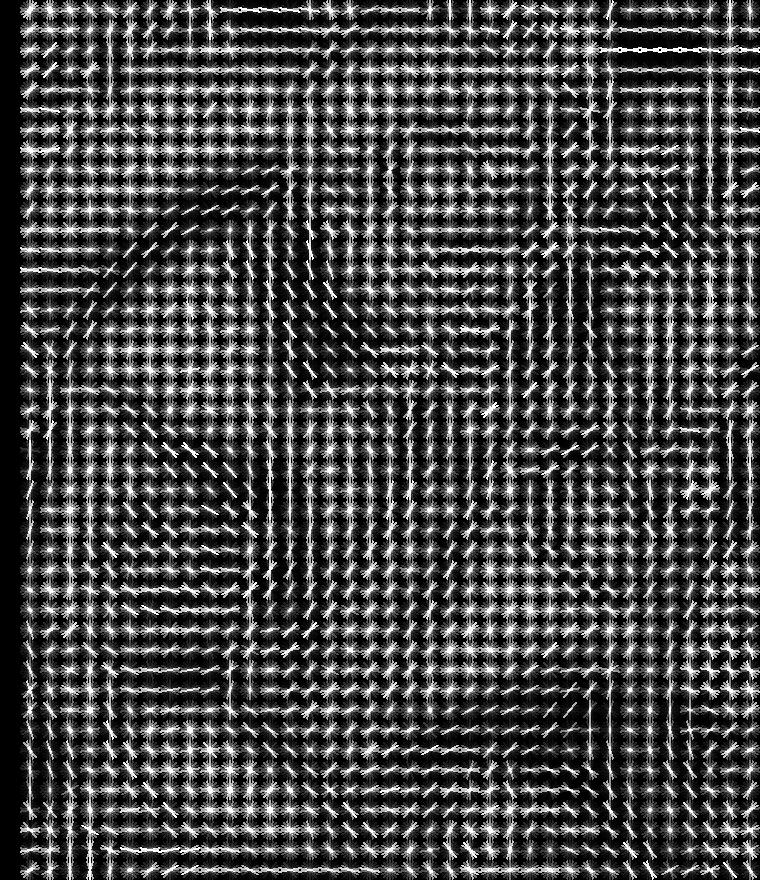

We have visualized some high-scoring detections from the deformable parts model. Can you guess which ones are false alarms? You might be surprised!

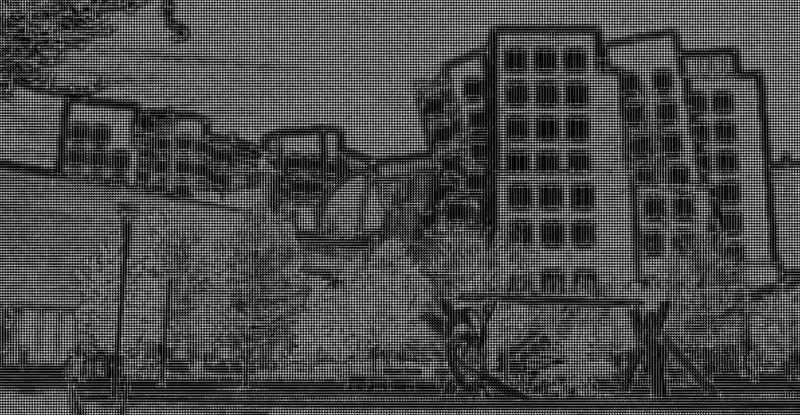

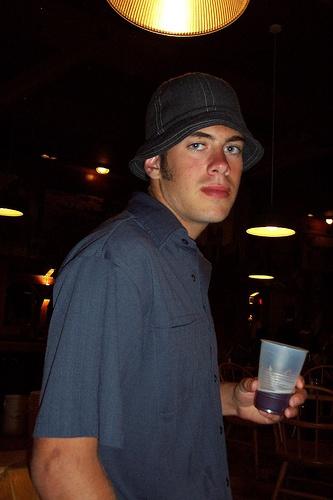

What does HOG see?

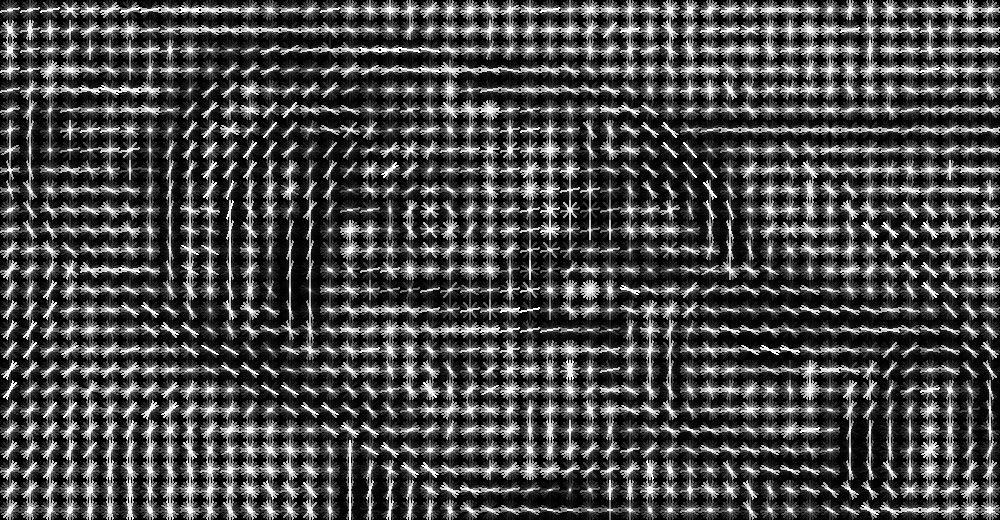

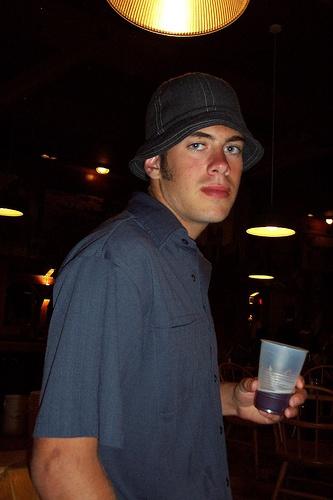

HOG inversion reveals the world that object detectors see. The left image shows a man standing in a dark room. If we compute HOG on this image and invert it, the previously dark scene behind the man emerges. Notice the wall structure, the lamp post, and the chair in the bottom right-hand corner.

Left: Human Vision. Right: HOG Vision.
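Part of the explanation is likely the local contrast normalization built into HOG. Dalal and Triggs (CVPR 2005) normalize each block of cell histograms with their L2-Hys scheme: L2-normalize, clip entries at 0.2, and renormalize. As a result, weak gradients in a dim region end up on the same scale as strong ones. The Python sketch below makes several simplifying assumptions: a grayscale image as nested lists, unsigned orientations hard-assigned to 9 bins with no interpolation, and 2x2-cell blocks. It is not the `features()` implementation used by the detector, which differs in its details. The function names are hypothetical. The sketch builds a 16x16 scene and a copy darkened by a factor of 20. Their raw gradient energy differs by that factor, but their normalized descriptors match.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale intensities indexed [y][x] (assumed layout)
Cells = List[List[List[float]]]  # orientation histograms indexed [row][col][bin]


def cell_histograms(img: Image, cell: int = 8, bins: int = 9) -> Cells:
    """Magnitude-weighted histograms of unsigned gradient orientation, one per cell."""
    h, w = len(img), len(img[0])
    rows, cols = h // cell, w // cell
    hist = [[[0.0] * bins for _ in range(cols)] for _ in range(rows)]
    for y in range(1, h - 1):  # centered differences need both neighbours
        for x in range(1, w - 1):
            r, c = y // cell, x // cell
            if r >= rows or c >= cols:  # skip pixels outside complete cells
                continue
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            angle = math.atan2(gy, gx) % math.pi  # unsigned orientation in [0, pi)
            b = min(int(angle / math.pi * bins), bins - 1)  # hard assignment, no interpolation
            hist[r][c][b] += math.hypot(gx, gy)
    return hist


def l2_hys(block: List[float], clip: float = 0.2, eps: float = 1e-6) -> List[float]:
    """L2-normalize, clip entries at `clip`, then L2-normalize again."""
    norm = math.sqrt(sum(v * v for v in block) + eps * eps)
    clipped = [min(v / norm, clip) for v in block]
    norm = math.sqrt(sum(v * v for v in clipped) + eps * eps)
    return [v / norm for v in clipped]


def hog_descriptor(img: Image, cell: int = 8, bins: int = 9) -> List[float]:
    """Concatenate normalized 2x2-cell blocks, moving one cell at a time."""
    hist = cell_histograms(img, cell, bins)
    desc: List[float] = []
    for r in range(len(hist) - 1):
        for c in range(len(hist[0]) - 1):
            block = hist[r][c] + hist[r][c + 1] + hist[r + 1][c] + hist[r + 1][c + 1]
            desc.extend(l2_hys(block))
    return desc


def gradient_energy(img: Image) -> float:
    """Total unnormalized gradient magnitude collected in the cell histograms."""
    return sum(sum(sum(cell) for cell in row) for row in cell_histograms(img))


if __name__ == "__main__":
    # A bright square on a black background, and the same scene 20x darker.
    bright = [[1.0 if 4 <= x < 12 and 4 <= y < 12 else 0.0 for x in range(16)] for y in range(16)]
    dark = [[0.05 * v for v in row] for row in bright]

    print(gradient_energy(bright) / gradient_energy(dark))  # about 20
    d_bright, d_dark = hog_descriptor(bright), hog_descriptor(dark)
    print(max(abs(a - b) for a, b in zip(d_bright, d_dark)))  # close to 0
```

The small `eps` keeps the division defined for perfectly flat blocks. As long as a dark region has any gradient structure at all, normalization rescales it to the same scale as a brightly lit one.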

Eye Glass

Below we compare a standard HOG glyph with our visualization of the same features.

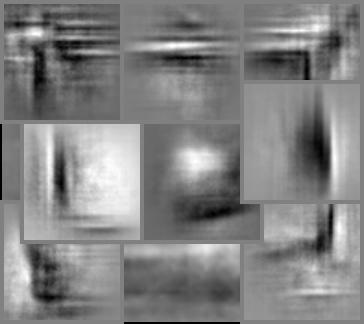

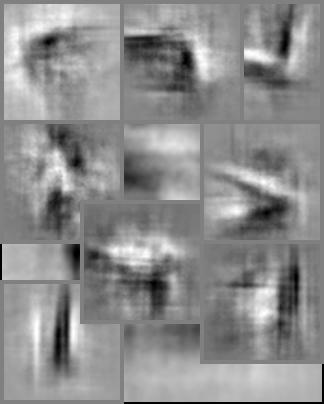

Visualizing Learned Models

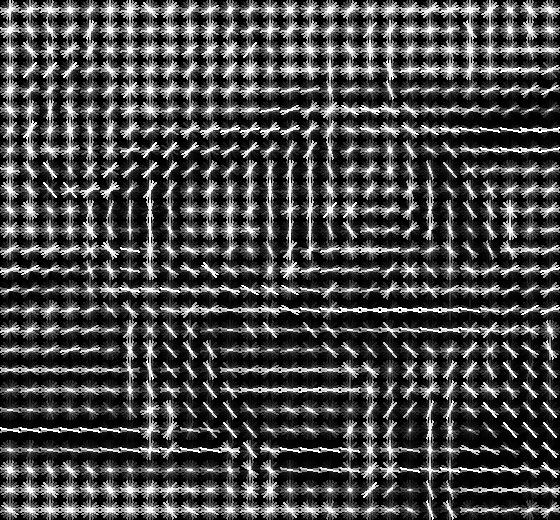

Our inversions allow us to visualize learned object models. Below we show a few deformable parts models. Notice the structure that emerges with our visualization.

First row: car, person, bottle, bicycle, motorbike, potted plant. Second row: train, bus, horse, television, chair.
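A learned template has the same layout as a HOG feature map. Unlike a HOG descriptor, however, it can contain negative weights. One simple way to make a template look like a feature before inverting it is to keep only its positive weights, the gradient evidence the model votes for. This step is an assumption and is not taken from this page. The Python helper name `positive_part` and the toy weights are hypothetical.

```python
from typing import List

# Assumed layout shared with HOG feature maps: template[row][col][bin].
Template = List[List[List[float]]]


def positive_part(weights: Template) -> Template:
    """Zero out negative weights so the template resembles a nonnegative HOG feature map."""
    return [[[max(w, 0.0) for w in cell] for cell in row] for row in weights]


if __name__ == "__main__":
    toy_filter = [[[0.5, -0.2, 0.1], [-1.0, 0.0, 0.3]]]  # hypothetical 1x2-cell, 3-bin template
    print(positive_part(toy_filter))  # [[[0.5, 0.0, 0.1], [0.0, 0.0, 0.3]]]
```

The result has the shape of a feature map, so it could then be handed to an inversion routine in place of a real HOG descriptor.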

Recovering Color

So far we have only inverted to grayscale reconstructions. Can we recover color images as well?

For more color inversions, see the "Does HOG Capture Color?" page.

Code

We have released a fast and simple MATLAB function invertHOG() to invert HOG features. Usage is easy:

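    feat = features(im, 8);   % HOG features with 8x8-pixel cells (im: double-precision image)
    ihog = invertHOG(feat);   % grayscale inversion of the HOG features
    imagesc(ihog); axis image; colormap gray;   % display the reconstruction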

The above should invert any reasonably sized HOG feature in under a second on a modern desktop machine.
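To make "inverting" a feature concrete, here is a deliberately simple nearest-neighbour baseline in Python. It keeps a database of (HOG feature, image patch) pairs and answers a query feature with the average patch of its k closest features. This is not the algorithm behind `invertHOG()`. The database, the names `knn_invert` and `Pair`, and the flattened-vector layout are all assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical database entry: (flattened HOG feature, flattened grayscale patch).
Pair = Tuple[List[float], List[float]]


def knn_invert(query: Sequence[float], database: Sequence[Pair], k: int = 1) -> List[float]:
    """Average the image patches whose HOG features are closest (Euclidean) to `query`."""
    if not database:
        raise ValueError("database must not be empty")
    if k < 1:
        raise ValueError("k must be at least 1")
    nearest = sorted(database, key=lambda pair: math.dist(query, pair[0]))[:k]
    n_pixels = len(nearest[0][1])
    return [sum(patch[i] for _, patch in nearest) / len(nearest) for i in range(n_pixels)]


if __name__ == "__main__":
    # Toy 2-D "features" paired with flattened 2x2 patches.
    database = [
        ([1.0, 0.0], [0.0, 0.0, 1.0, 1.0]),
        ([0.0, 1.0], [1.0, 1.0, 0.0, 0.0]),
        ([0.9, 0.1], [0.0, 0.2, 1.0, 0.8]),
    ]
    print(knn_invert([1.0, 0.05], database, k=1))  # [0.0, 0.0, 1.0, 1.0]
    print(knn_invert([1.0, 0.05], database, k=2))  # blend of the two closest patches
```

Each query costs time linear in the database size, and the output can only be a blend of patches already in the database. `math.dist` requires Python 3.8 or later.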

To get the code, check out our GitHub repository. Installation is simple, but remember to read the README.

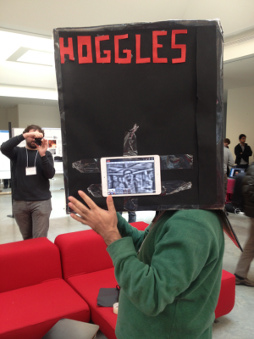

HOGgles

If you come visit our lab, be sure to check out our interactive HOGgles demo!

Participants inside the black box can only see our HOG visualization of the outside world as they attempt to move around the environment. How well can you see in HOG space?

Citation

If you use this tool in your research, please cite our ICCV 2013 paper:

C. Vondrick, A. Khosla, T. Malisiewicz, A. Torralba. "HOGgles: Visualizing Object Detection Features." International Conference on Computer Vision (ICCV), Sydney, Australia, December 2013.

    @article{vondrick2013hoggles,
      title={{HOGgles: Visualizing Object Detection Features}},
      author={Vondrick, C. and Khosla, A. and Malisiewicz, T. and Torralba, A.},
      journal={ICCV},
      year={2013}
    }

Acknowledgments

We wish to thank Joseph Lim and the entire MIT CSAIL computer vision group for their helpful comments and suggestions, which helped guide this project.

