Goto Fail

As you’ve probably heard by now, there’s a serious bug in the TLS implementations in iOS (the iPhone and iPad operating system) and MacOS. I’ll skip the details (but see Adam Langley’s excellent blog post if you’re interested); the effect is that under certain conditions, an attacker can sit in the middle of an encrypted connection and read all of the traffic.

Here’s the code in question:

    if ((err = SSLHashSHA1.update(&hashCtx, &serverRandom)) != 0)
        goto fail;
    if ((err = SSLHashSHA1.update(&hashCtx, &signedParams)) != 0)
        goto fail;
        goto fail;
    if ((err = SSLHashSHA1.final(&hashCtx, &hashOut)) != 0)
        goto fail;
    …

Note the doubled

        goto fail;
        goto fail;

That’s incorrect and is at the root of the problem.
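To see why the duplicate matters, here is a minimal sketch of the same control flow. It is not Apple's code: `hash_update`, `check_signature`, and `verify_key_exchange` are hypothetical stand-ins, and `check_signature` represents whatever the skipped steps would have done. The second `goto` is written at the indentation that matches how the compiler parses it, outside the `if`.

```c
#include <stdio.h>

/* Hypothetical stand-in for a hash step; returns 0 on success. */
static int hash_update(const char *label) {
    (void)label;
    return 0;
}

/* Hypothetical stand-in for the skipped checks; nonzero means "reject". */
static int check_signature(int signature_is_valid) {
    return signature_is_valid ? 0 : -1;
}

/* Same shape as the flawed routine: returns 0 to mean "verified". */
int verify_key_exchange(int signature_is_valid) {
    int err;

    if ((err = hash_update("serverRandom")) != 0)
        goto fail;
    if ((err = hash_update("signedParams")) != 0)
        goto fail;
    goto fail; /* the duplicate: not governed by the if, so always taken */
    if ((err = check_signature(signature_is_valid)) != 0) /* never reached */
        goto fail;

fail:
    return err; /* still 0 from the last successful hash_update */
}
```

Under these assumptions, `verify_key_exchange(0)` returns 0 just as `verify_key_exchange(1)` does: the jump to `fail` happens while `err` still holds the success value, so the routine reports success without ever running the final check.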

The mystery is how that extra line was inserted. Was it malicious or was it an accident? If the latter, is there any conceivable way it could have happened? Well, yes.

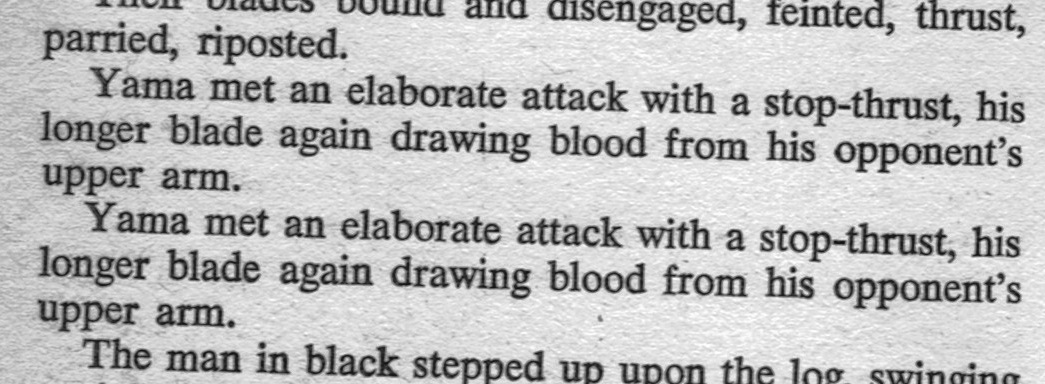

Here’s a scan from a 1971 paperback printing of Roger Zelazny’s Lord of Light.

Note the duplicated paragraph… Today, something like that could easily happen via a cut-and-paste error. (The error in that book was not authorial emphasis; I once checked a hardcover copy that did not have the duplication.)

There’s another reason to think it was an accident: it’s not very subtle. That sequence would stick out like a sore thumb to any programmer who looked at it; there are no situations where two goto statements in a row make any sense whatsoever. In fact, it’s a bit of a surprise that the compiler didn’t flag it as an error; the ordinary process of optimization should have noticed that all of the lines after the second goto fail could never be reached. The attempted sabotage of the Linux kernel in 2003 was much harder to spot; you’d need to notice the difference between = and == in a context where any programmer "just knows" it should be ==. However…

There are still a few suspicious items. Most notably, this bug is triggered if and only if something called "perfect forward secrecy" (PFS) has been offered as an encryption option for the session. Omitting the technical details of how it works, PFS is a problem for attackers—including government attackers—who have stolen or cryptanalyzed a site’s private key. Without PFS, knowing the private key allows encrypted traffic to be read; with PFS, you can’t do that. This flaw would allow an attacker who was capable of carrying out a "man-in-the-middle" (MitM) attack to read even PFS-protected traffic. While MitM attacks have been described in the academic literature at least as long ago as 1995, they’re a lot less common than ordinary hacks and are more detectable. In other words, if this was a deliberate back door, it was probably done by a high-end attacker, someone who wants to carry out MitM attacks and has the ability to sabotage Apple’s code base.

On the gripping hand, the error is noticeable by anyone poking at the file, and it’s one of the pieces of source code that Apple publishes, which means it’s not a great choice for covert action by the NSA or Unit 61398. With luck, Apple will investigate this and announce what they’ve found.

There are two other interesting questions here: why this wasn’t caught during testing, and why the iOS fix was released before the MacOS fix.

The second one is easy: changes to MacOS require different "system tests". That is, testing just that one function’s behavior in isolation is straightforward: set up a test case and see what happens. It’s not enough just to test the failure case, of course; you also have to test all of the correct cases you can manage. Still, that’s simple enough. The problem is "system test": making sure that all of the rest of the system behaves properly with the new code in. MacOS has very different applications than iOS does (to name just one, the mailer is very, very different); testing on one says little about what will happen on the other. For that matter, they have to create new tests on both platforms that will detect this case, and make sure that old code bases don’t break on the new test case. (Software developers use something called "regression testing" to make sure that new changes don’t break old code.) I’m not particularly surprised by the delay, though I suspect that Apple was caught by surprise by how rapidly the fix was reverse-engineered.

The real question, though, is why they didn’t catch the bug in the first place. It’s a truism in the business that testing can only show the presence of bugs, not the absence. No matter how much you test, you can’t possibly try every combination of inputs that might produce a failure; it’s combinatorially impossible. I’m sure that Apple tested for this class of failure (talking to the wrong host), but they couldn’t and didn’t test for this in every possible variant of how the encryption takes place. The TLS protocol is exceedingly complex, with many different possibilities for how the encryption is set up; as noted, this particular flaw is only triggered if PFS is in use. There are many other possible paths through the code. Should this particular one have been tested more thoroughly? I suspect so, because it’s a different way that a connection failure could occur; not having been on the inside of Apple’s test team, though, I can’t say for sure how they decided.

There’s a more troubling part of the analysis, though. One very basic item for testers is something called a "code coverage" tool: it shows what parts of the system have or have not been executed during tests. While coverage alone doesn’t suffice, it is a necessary condition; code that hasn’t been executed at all during tests has never been tested. In this case, the code after the second goto was never executed. That could and should have been spotted. That it wasn’t does not speak well of Apple’s test teams. (Note to outraged testers: yes, I’m oversimplifying. There are things that are very hard to do in system test, like handling some hardware error conditions.) Of course, if you want to put on an extra-strength tinfoil hat, perhaps the same attackers who inserted that line of code deleted the test for the condition, but there is zero evidence for that.
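The coverage idea can be illustrated with a toy sketch. Real coverage tools instrument the program automatically; here, purely as an assumption for illustration, each step bumps a hand-rolled counter. All of the names (`hits`, `run_step`, `verify_with_counters`, `unexecuted_steps`) are hypothetical and have nothing to do with Apple's code or test suite.

```c
#include <stdio.h>

/* Hypothetical hand-rolled coverage: one counter per step of the routine. */
enum { STEP_UPDATE_1, STEP_UPDATE_2, STEP_FINAL, STEP_COUNT };
int hits[STEP_COUNT];

/* Record that a step ran, then report success (0), as on valid input. */
static int run_step(int step) {
    hits[step]++;
    return 0;
}

/* Same control flow as the flawed routine, with instrumented steps. */
int verify_with_counters(void) {
    int err;

    if ((err = run_step(STEP_UPDATE_1)) != 0)
        goto fail;
    if ((err = run_step(STEP_UPDATE_2)) != 0)
        goto fail;
    goto fail; /* the duplicated line */
    if ((err = run_step(STEP_FINAL)) != 0)
        goto fail;

fail:
    return err;
}

/* Count the steps whose counter is still zero after the tests have run. */
int unexecuted_steps(void) {
    int missing = 0;
    for (int i = 0; i < STEP_COUNT; i++) {
        if (hits[i] == 0)
            missing++;
    }
    return missing;
}
```

A "happy path" test that calls `verify_with_counters()` sees a return value of 0 and passes, yet `hits[STEP_FINAL]` stays at zero and `unexecuted_steps()` reports one step that never ran. That is the kind of signal a coverage report gives: the test suite looked green, but one line was never exercised at all.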

So what’s the bottom line? There is a serious bug of unknown etiology in iOS and MacOS. As of this writing, Apple has fixed one but not the other. It may have been an accident; if it was enemy action, it was fairly clumsy. We can hope that Apple will announce the results of its investigation and review its test procedures.