|

Pablo A. Duboue

Kathleen R. McKeown

Department of Computer Science

Columbia University

{pablo,kathy}@cs.columbia.edu

CONTENT SELECTION is the task of choosing the right information to communicate in the output of a Natural Language Generation (NLG) system, given semantic input and a communicative goal. In general, Content Selection is a highly domain dependent task; new rules must be developed for each new domain, and typically this is done manually. Morevoer, it has been argued [19] that Content Selection is the most important task from a user's standpoint (i.e., users may tolerate errors in wording, as long as the information being sought is present in the text).

Designing content selection rules manually is a tedious task. A realistic knowledge base contains a large amount of information that could potentially be included in a text and a designer must examine a sizable number of texts, produced in different situations, to determine the specific constraints for the selection of each piece of information.

Our goal is to develop a system that can automatically acquire constraints for the content selection task. Our algorithm uses the information we learned from a corpus of desired outputs for the system (i.e., human-produced text) aligned against related semantic data (i.e., the type of data the system will use as input). It produces constraints on every piece of the input where constraints dictate if it should appear in the output at all and if so, under what conditions. This process provides a filter on the information to be included in a text, identifying all information that is potentially relevant (previously termed global focus [13] or viewpoints [1]). The resulting information can be later either further filtered, ordered and augmented by later stages in the generation pipeline (e.g., see the spreading activation algorithm used in ILEX [4]).

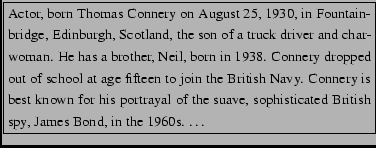

We focus on descriptive texts which realize a single, purely informative, communicative goal, as opposed to cases where more knowledge about speaker intentions are needed. In particular, we present experiments on biographical descriptions, where the planned system will generate short paragraph length texts summarizing important facts about famous people. The kind of text that we aim to generate is shown in Figure 1. The rules that we aim to acquire will specify the kind of information that is typically included in any biography. In some cases, whether the information is included or not may be conditioned on the particular values of known facts (e.g., the occupation of the person being described --we may need different content selection rules for artists than politicians). To proceed with the experiments described here, we acquired a set of semantic information and related biographies from the Internet and used this corpus to learn Content Selection rules.

Our main contribution is to analyze how variations in the data influence changes in the text. We perform such analysis by splitting the semantic input into clusters and then comparing the language models of the associated clusters induced in the text side (given the alignment between semantics and text in the corpus). By doing so, we gain insights on the relative importance of the different pieces of data and, thus, find out which data to include in the generated text.

The rest of this paper is divided as follows: in the next section, we present the biographical domain we are working with, together with the corpus we have gathered to perform the described experiments. Section 3 describes our algorithm in detail. The experiments we perform to validate it, together with their results, are discussed in Section 4. Section 5 summarizes related work in the field. Our final remarks, together with proposed future work conclude the paper.

The research described here is done for the automatic construction of the Content Selection module of PROGENIE [5], a biography generator under construction. Biography generation is an exciting field that has attracted practitioners of NLG in the past [10,17,15,20]. It has the advantages of being a constrained domain amenable to current generation approaches, while at the same time offering more possibilities than many constrained domains, given the variety of styles that biographies exhibit, as well as the possibility for ultimately generating relatively long biographies.

We have gathered a resource of text and associated knowledge in the biography domain. More specifically, our resource is a collection of human-produced texts together with the knowledge base a generation system might use as input for generation. The knowledge base contains many pieces of information related to the person the biography talks about (and that the system will use to generate that type of biography), not all of which necessarily will appear in the biography. That is, the associated knowledge base is not the semantics of the target text but the larger set1 of all things that could possibly be said about the person in question. The intersection between the input knowledge base and the semantics of the target text is what we are interested in capturing by means of our statistical techniques.

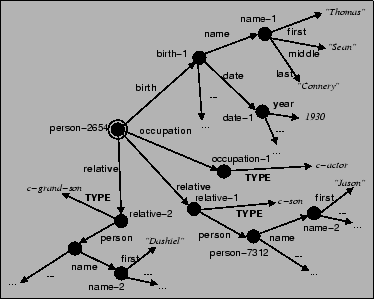

To collect the semantic input, we crawled 1,100 HTML pages containing celebrity fact-sheets from the E! Online website.2 The pages comprised information in 14 categories for actors, directors, producers, screenwriters, etc. We then proceeded to transform the information in the pages to a frame-based knowledge representation. The final corpus contains 50K frames, with 106K frame-attribute-value triples, for the 1,100 people mentioned in each fact-sheet. An example set of frames is shown in Figure 3.

The text part was mined from two different web-sites, biography.com, containing typical biographies, with an average of 450 words each; and imdb.com, the Internet movie database, 250-word average length biographies. In each case, we obtained the semantic input from one website and a separate biography from a second website. We linked the two resources using techniques from record linkage in census statistical analysis [7]. We based our record linkage on the Last Name, First Name, and Year of Birth attributes.

Figure 2 illustrates our two-step approach. In the first step (shaded region of the figure), we try to identify and solve the easy cases for Content Selection. The easy cases in our task are pieces of data that are copied verbatim from the input to the output. In biography generation, this includes names, dates of birth and the like. The details of this process are discussed in Section 3.1. After these cases have been addressed, the remaining semantic data is clustered and the text corresponding to each cluster post-processed to measure degrees of influence for different semantic units, presented in Section 3.2. Further techniques to improve the precision of the algorithm are discussed in Section 3.3.

Central to our approach is the notion of data paths in the

semantic network (an example is shown in Figure 3). Given

a frame-based representation of knowledge, we need

to identify particular pieces of knowledge inside the graph. We do so

by selecting a particular frame as the root of the graph (the

person whose biography we are generating, in our case, doubly circled

in the figure) and considering the paths in the graph as identifiers

for the different pieces of data. We call these data

paths. Each path will identify a class of values, given the

fact that some attributes are list-valued (e.g., the relative attribute in the figure). We use the notation

![]() to denote data paths.

to denote data paths.

In the first stage (cf. Fig. 2(1)), the objective is to identify pieces from the input that are copied verbatim to the output. These types of verbatim-copied anchors are easy to identify and they allow us do two things before further analyzing the input data: remove this data from the input as it has already been selected for inclusion in the text and mark this piece of text as a part of the input, not as actual text.

The rest of the semantic input is either verbalized (e.g., by means of

a verbalization rule of the form

![]()

![]() ``young'') or not included at all. This

situation is much more challenging and requires the use of our

proposed statistical selection technique.

``young'') or not included at all. This

situation is much more challenging and requires the use of our

proposed statistical selection technique.

For each class in the semantic input that was not ruled out in the

previous step (e.g.,

![]() ), we proceed

to cluster (cf. Fig. 2(2)) the possible values

in the path, over all people (e.g.,

), we proceed

to cluster (cf. Fig. 2(2)) the possible values

in the path, over all people (e.g.,

![]() for age).

Clustering details can be found in [6].

In the case of free-text fields, the top level, most informative

terms,3 are picked and used for the clustering.

For example, for ``played an insecure young resident'' it

would be

for age).

Clustering details can be found in [6].

In the case of free-text fields, the top level, most informative

terms,3 are picked and used for the clustering.

For example, for ``played an insecure young resident'' it

would be

![]() .

.

Having done so, the texts associated with each cluster are used to

derive language models (in our case we used bi-grams, so we count the

bi-grams appearing in all the biographies for a given cluster --e.g.,

all the people with age between 25 and 50 years old,

![]() ).

).

We then measure the variations on the language models produced by the variation (clustering) on the data. What we want is to find a change in word choice correlated with a change in data. If there is no correlation, then the piece of data which changed should not be selected by Content Selection.

In order to compare language models, we turned to techniques from

adaptive NLP (i.e., on the basis of genre and type distinctions)

[8]. In particular, we employed the cross

entropy4 between two

language models ![]() and

and ![]() , defined as follows (where

, defined as follows (where ![]() is

the probability that

is

the probability that ![]() assigns to the

assigns to the ![]() -gram

-gram ![]() ):

):

Smaller values of ![]() indicate that

indicate that ![]() is more

similar to

is more

similar to ![]() . On the other hand, if we take

. On the other hand, if we take ![]() to be a model

of randomly selected documents and

to be a model

of randomly selected documents and ![]() a model of a subset of texts

that are associated with the cluster, then a greater-than-chance

a model of a subset of texts

that are associated with the cluster, then a greater-than-chance ![]() value would be an indicator that the cluster in the semantic side is

being correlated with changes in the text side.

value would be an indicator that the cluster in the semantic side is

being correlated with changes in the text side.

|

We then need to perform a sampling process, in which we want to obtain

![]() values that would represent the null hypothesis in the domain. We

sample two arbitrary subsets of

values that would represent the null hypothesis in the domain. We

sample two arbitrary subsets of ![]() elements each from the total set

of documents and compute the

elements each from the total set

of documents and compute the ![]() of their derived language models

(these

of their derived language models

(these ![]() values constitute our control set).

We then compare, again, a random sample of size

values constitute our control set).

We then compare, again, a random sample of size ![]() from the cluster

against a random sample of size

from the cluster

against a random sample of size ![]() from the difference between the

whole collection and the cluster (these

from the difference between the

whole collection and the cluster (these ![]() values constitute our

experiment set). To see whether the values in the experiment set are

larger (in a stochastic fashion) than the values in the control set,

we employed the Mann-Whitney U test [18]

(cf. Fig. 2(3)). We performed 20 rounds of sampling

(with

values constitute our

experiment set). To see whether the values in the experiment set are

larger (in a stochastic fashion) than the values in the control set,

we employed the Mann-Whitney U test [18]

(cf. Fig. 2(3)). We performed 20 rounds of sampling

(with ![]() ) and tested at the

) and tested at the ![]() significance level. Finally, if

the cross-entropy values for the experiment set are larger than for

the control set, we can infer that the values for that semantic

cluster do influence the text. Thus, a positive U test for any data

path was considered as an indicator that the data path should be

selected.

significance level. Finally, if

the cross-entropy values for the experiment set are larger than for

the control set, we can infer that the values for that semantic

cluster do influence the text. Thus, a positive U test for any data

path was considered as an indicator that the data path should be

selected.

Using simple thresholds and the U test, class-based content

selection rules can be obtained. These rules will select or unselect

each and every instance of a given data path at the same time (e.g.,

if

![]() is

selected, then both ``Dashiel'' and ``Jason'' will

be selected in Figure 3). By counting the number of

times a data path in the exact matching appears in the texts (above

some fixed threshold) we can obtain baseline content

selection rules (cf. Fig. 2(A)). Adding our

statistically selected (by means of the cross-entropy sampling and the

U test) data paths to that set we obtain class-based content

selection rules (cf. Fig. 2(B)). By means of its

simple algorithm, we expect these rules to overtly over-generate, but

to achieve excellent coverage. These class-based rules are relevant to

the KR concept of Viewpoints

[1];5we extract a slice of the knowledge base that is relevant to the

domain task at hand.

is

selected, then both ``Dashiel'' and ``Jason'' will

be selected in Figure 3). By counting the number of

times a data path in the exact matching appears in the texts (above

some fixed threshold) we can obtain baseline content

selection rules (cf. Fig. 2(A)). Adding our

statistically selected (by means of the cross-entropy sampling and the

U test) data paths to that set we obtain class-based content

selection rules (cf. Fig. 2(B)). By means of its

simple algorithm, we expect these rules to overtly over-generate, but

to achieve excellent coverage. These class-based rules are relevant to

the KR concept of Viewpoints

[1];5we extract a slice of the knowledge base that is relevant to the

domain task at hand.

However, the expressivity of the class-based approach is plainly not

enough to capture the idiosyncrasies of content selection: for

example, it may be the case that children's names may be worth

mentioning, while grand-children's names are not. That is, in

Figure 3,

![]() is dependent on

is dependent on

![]() and therefore, all the information in the current

instance should be taken into account to decide whether a particular

data path and it values should be included or not. Our approach so

far simply determines that an attribute should always be included in a

biography text. These examples illustrate that content selection rules

should capture cases where an attribute should be included only under

certain conditions; that is, only when other semantic attributes take

on specific values.

and therefore, all the information in the current

instance should be taken into account to decide whether a particular

data path and it values should be included or not. Our approach so

far simply determines that an attribute should always be included in a

biography text. These examples illustrate that content selection rules

should capture cases where an attribute should be included only under

certain conditions; that is, only when other semantic attributes take

on specific values.

We turned to ripper6 [3], a supervised rule learner

categorization tool, to elucidate these types of relationships.

We use as features a flattened version of the input

frames,7 plus the actual value of the data in question.

To obtain the right label for the training instance we do the

following:

for the exact-matched data paths, matched pieces of data will

correspond to positive training classes, while unmatched pieces,

negative ones. That is to say, if we know that

![]() and that

and that ![]() appears in the text, we can conclude that the data of this particular

person can be used as a positive training instance for the case

appears in the text, we can conclude that the data of this particular

person can be used as a positive training instance for the case

![]() . Similarly, if there is no

match, the opposite is inferred.

. Similarly, if there is no

match, the opposite is inferred.

For the U-test selected paths, the situation is more complex, as we only have clues about the importance of the data path as a whole. That is, while we know that a particular data path is relevant to our task (biography construction), we don't know with which values that particular data path is being verbalized. We need to obtain more information from the sampling process to be able to identify cases in which we believe that the relevant data path has been verbalized.

To obtain finer grained information, we turned to a ![]() -gram

distillation process (cf. Fig. 2(4)), where the

most significant

-gram

distillation process (cf. Fig. 2(4)), where the

most significant ![]() -grams (bi-grams in our case) were picked during

the sampling process, by looking at their overall contribution to the

CE term in Equation 1. For example, our system found the

bi-grams

-grams (bi-grams in our case) were picked during

the sampling process, by looking at their overall contribution to the

CE term in Equation 1. For example, our system found the

bi-grams ![]() and

and ![]() 8 as relevant for

the cluster

8 as relevant for

the cluster

![]()

![]() , while the cluster

, while the cluster

![]()

![]() will not include those, but will include

will not include those, but will include

![]() and

and ![]() . These

. These

![]() -grams thus indicate that the data path

-grams thus indicate that the data path

![]() is included in

the text; a change in value does affect the output. We later use the

matching of these

is included in

the text; a change in value does affect the output. We later use the

matching of these ![]() -grams as an indicator of that particular

instance as being selected in that document.

-grams as an indicator of that particular

instance as being selected in that document.

Finally, the training data for each data path is generated.

(cf. Fig. 2(5)). The selected or unselected label

will thus be chosen either via direct extraction from the exact

match or by means of identification of distiled, relevant ![]() -grams.

After ripper is run, the obtained rules are our sought

content selection rules (cf. Fig. 2(5)).

-grams.

After ripper is run, the obtained rules are our sought

content selection rules (cf. Fig. 2(5)).

We used the following experimental setting: 102 frames were separated from the full set together with their associated 102 biographies from the biography.com site. This constituted our development corpus. We further split that corpus into development training (91 people) and development test and hand-tagged the 11 document-data pairs.

The annotation was done by one of the authors, by reading the

biographies and checking which triples (in the RDF sense,

![]() ) were actually mentioned in

the text (going back and forth to the biography as needed). Two cases

required special attention. The first one was

aggregated information, e.g., the text may say ``he

received three Grammys'' while in the semantic input each award was

itemized, together with the year it was received, the reason and the

type (Best Song of the Year, etc.). In that case, only the

name of award was selected, for each of the three awards. The

second case was factual errors. For example, the biography may say

the person was born in MA and raised in WA, but the fact-sheet may say

he was born in WA. In those cases, the intention of the human

writer was given priority and the place of birth was marked as

selected, even though one of the two sources were wrong.

The annotated data total 1,129 triples. From them, only 293 triples

(or a 26%) were verbalized in the associated text and thus,

considered selected. That implies that the ``select all'' tactic

(``select all'' is the only trivial content selection tactic, ``select

none'' is of no practical value) will achieve an F-measure of 0.41

(26% prec. at 100% rec.).

) were actually mentioned in

the text (going back and forth to the biography as needed). Two cases

required special attention. The first one was

aggregated information, e.g., the text may say ``he

received three Grammys'' while in the semantic input each award was

itemized, together with the year it was received, the reason and the

type (Best Song of the Year, etc.). In that case, only the

name of award was selected, for each of the three awards. The

second case was factual errors. For example, the biography may say

the person was born in MA and raised in WA, but the fact-sheet may say

he was born in WA. In those cases, the intention of the human

writer was given priority and the place of birth was marked as

selected, even though one of the two sources were wrong.

The annotated data total 1,129 triples. From them, only 293 triples

(or a 26%) were verbalized in the associated text and thus,

considered selected. That implies that the ``select all'' tactic

(``select all'' is the only trivial content selection tactic, ``select

none'' is of no practical value) will achieve an F-measure of 0.41

(26% prec. at 100% rec.).

Following the methods outlined in Section 3, we

utilized the training part of the development corpus to mine

Content Selection rules. We then used the development test to run different

trials and fit the different parameters for the algorithm. Namely, we

determined that filtering the bottom 1,000 TF*IDF weighted words from

the text before building the ![]() -gram model was important for the task

(we compared against other filtering schemes and the use of lists of

stop-words). The best parameters found and the fitting methodology are

described in [6].

-gram model was important for the task

(we compared against other filtering schemes and the use of lists of

stop-words). The best parameters found and the fitting methodology are

described in [6].

We then evaluated on the rest of the semantic input (998 people) aligned with one other textual corpus (imdb.com). As the average length-per-biography are different in each of the corpora we worked with (450 and 250, respectively), the content selection rules to be learned in each case were different (and thus, ensure us an interesting evaluation of the learning capabilities). In each case, we split the data into training and test sets, and hand-tagged the test set, following the same guidelines explained for the development corpus. The linkage step also required some work to be done. We were able to link 205 people in imdb.com and separated 14 of them as the test set.

The results are shown in Table 19. Several things can be noted in the table. The first is that imdb.com represents a harder set than our development set. That is to expect, as biography.com's biographies have a stable editorial line, while imdb.com biographies are submitted by Internet users. However, our methods offer comparable results on both sets. Nonetheless, the tables portray a clear result: the class-based rules are the ones that produce the best overall results. They have the highest F-measure of all approaches and they have high recall. In general, we want an approach that favors recall over precision in order to avoid losing any information that is necessary to include in the output. Overgeneration (low precision) can be corrected by later processes that further filter the data. Further processing over the output will need other types of information to finish the Content Selection process. The class-based rules filter-out about 50% of the available data, while maintaining the relevant data in the output set.

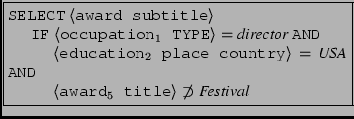

An example rule from the ripper approach can be seen in Figure 4. The rules themselves look interesting, but while they improve in precision, as was our goal, their lack of recall makes their current implementation unsuitable for use. We have identified a number of changes that we could make to improve this process and discuss them at the end of the next section. Given the experimental nature of these results, we would not yet draw any conclusions about the ultimate benefit of the ripper approach.

|

Very few researchers have addressed the problem of knowledge acquisition for content selection in generation. A notable exception is Reiter et al. [16]'s work, which discusses a rainbow of knowledge engineering techniques (including direct acquisition from experts, discussion groups, etc.). They also mention analysis of target text, but they abandon it because it was impossible to know the actual criteria used to chose a piece of data. In contrast, in this paper, we show how the pairing of semantic input with target text in large quantities allows us to elicit statistical rules with such criteria.

Aside from that particular work, there seems to exist some momentum in the literature for a two-level Content Selection process (e.g., Sripada et al. [19], Bontcheva and Wilks [2], and Lester and Porter [11]). For instance, [11] distinguish two levels of content determination, ``local'' content determination is the ``selection of relatively small knowledge structures, each of which will be used to generate one or two sentences'' while ``global'' content determination is ``the process of deciding which of these structures to include in an explanation''. Our technique, then, can be thought of as picking the global Content Selection items.

One of the most felicitous Content Selection algorithms proposed in the literature is the one used in the ILEX project [4], where the most prominent pieces of data are first chosen (by means of hardwired ``importance'' values on the input) and intermediate, coherence-related new ones are later added during planning. For example, a painting and the city where the painter was born may be worth mentioning. However, the painter should also be brought into the discussion for the sake of coherence.

Finally, while most classical approaches, exemplified by [13,14] tend to perform the Content Selection task integrated with the document planning, recently, the interest in automatic, bottom-up content planners has put forth a simplified view where the information is entirely selected before the document structuring process begins [12,9]. While this approach is less flexible, it has important ramifications for machine learning, as the resulting algorithm can be made simpler and more amenable to learning.

We have presented a novel method for learning Content Selection rules, a task that is difficult to perform manually and must be repeated for each new domain. The experiments presented here use a resource of text and associated knowledge that we have produced from the Web. The size of the corpus and the methodology we have followed in its construction make it a major resource for learning in generation. Our methodology shows that data currently available on the Internet, for various domains, is readily useable for this purpose. Using our corpora, we have performed experimentation with three methods (exact matching, statistical selection and rule induction) to infer rules from indirect observations from the data.

Given the importance of content selection for the acceptance of generated text by the final user, it is clear that leaving out required information is an error that should be avoided. Thus, in evaluation, high recall is preferable to high precision. In that respect, our class-based statistically selected rules perform well. They achieve 94% recall in the best case, while filtering out half of the data in the input knowledge base. This significant reduction in data makes the task of developing further rules for content selection a more feasible task. It will aid the practitioner of NLG in the Content Selection task by reducing the set of data that will need to be examined manually (e.g., discussed with domains experts).

We find the results for ripper disappointing and think more experimentation is needed before discounting this approach. It seems to us ripper may be overwhelmed by too many features. Or, this may be the best possible result without incorporating domain knowledge explicitly. We would like to investigate the impact of additional sources of knowledge. These alternatives are discussed below.

In order to improve the rule induction results, we may use

spreading activation starting from the particular frame to be

considered for content selection and include the semantic information

in the local context of the frame. For example, to content select

![]() , only values from frames

, only values from frames

![]() and

and

![]() would be considered (e.g.,

would be considered (e.g.,

![]() will

be completely disregarded). Another

improvement may come from more intertwining between the exact match

and statistical selector techniques.

Even if some data path appears to be copied verbatim most of the time,

we can run our statistical selector for it and use held out data to

decide which performs better.

will

be completely disregarded). Another

improvement may come from more intertwining between the exact match

and statistical selector techniques.

Even if some data path appears to be copied verbatim most of the time,

we can run our statistical selector for it and use held out data to

decide which performs better.

Finally, we are interested in adding a domain paraphrasing dictionary to enrich the exact matching step. This could be obtained by running the semantic input through the lexical chooser of our biography generator, PROGENIE, currently under construction.