
Machine Learning for Statistics: SDGB 7847

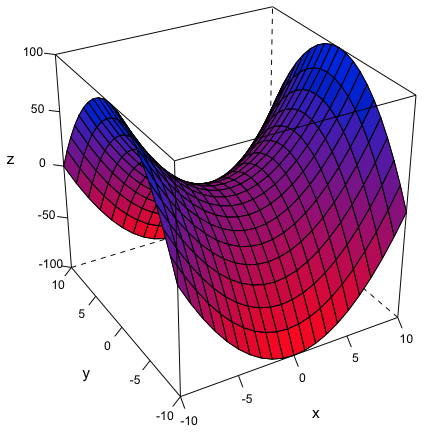

'What one fool could understand, another can.'

Description

The course gives participants an opportunity to implement statistical models. We will cover numerical optimization techniques, including gradient descent, Newton's method and quadratic programming solvers, and use them to fit linear and logistic regression, discriminant analysis, support vector machines and neural networks. The second part of the course focuses on advanced methods for computing posterior distributions and motivates their appeal in Bayesian inference. We will survey importance and rejection sampling, the Metropolis algorithm, Gibbs sampling and Sequential Monte Carlo. Students will be exposed to convex duality, constrained optimization, bias/variance decompositions, entropy, mutual information, KL divergence, maximum likelihood and maximum a posteriori estimation, Fisher scoring, the Laplace approximation, Markov chains and saddle point methods, each of which will be re-emphasized from a computational perspective.


Prerequisites

Multivariate calculus, linear algebra, probability and statistical computing; in particular, you should be able to program in, and have regular access to, Matlab, Python or R.


Textbooks

- The Elements of Statistical Learning [ESL] by Hastie, Tibshirani and Friedman
- Pattern Recognition and Machine Learning [PRML] by Bishop
- Convex Optimization [CVX] by Boyd and Vandenberghe (not required, but a nice reference)

Grade Distribution


Homework

Please typeset your homework using LaTeX, which is the standard for technical and scientific documents. You may visit www.latex-project.org to download a copy and read the following tutorial to get started. A template for the homework is available here (cls, tex, pdf). A word of advice: start the graded homework early! The instructor has worked through all of the problems, and some are challenging. Please use Matlab, Python or R. Each language has its own pros and cons, although if you know one you can probably learn the others easily. The standard academic honesty policy applies.

Tentative Course Outline
