Abstract

We describe a live-data, interactive, three-dimensional modular framework for mobile robot visualization and control. This extensible system provides a flexible environment for conducting mobile robot experiments on a variety of hardware and software platforms.

Introduction

This page describes the design and architecture of a mobile robot visualization and control software framework and application.

While complete autonomy is often a goal in mobile robotics, manual control is frequently required to perform experiments, manage the robot, or simply direct the robot in its task when further automation is not possible. For research platforms, the software layer available to the researcher is often either very low-level, requiring an entire experimental API layer to be written, or so general that it is useful only for verifying hardware functionality or giving product demos.

RWII provides a sophisticated multi-layer software platform for its line of robots, including a component-oriented visualization and control application. In previous work, we extended RWI's MOM (Mobility Object Manager) UI to handle our own Mobility-tied robot control services. This approach was reasonably effective, but it had several drawbacks that eventually led us to write the software described here.

Drawbacks of MOM and our MOM extensions:

- Modules added to MOM require source code modifications. While these modifications are simple, they require a source license, and thereafter you have to maintain your own branch of the vendor's source tree, re-integrating your modifications with each new version.

- Organization is fundamentally interface-oriented. Modules are launched by selecting them from a list of modules registered for a particular Mobility/CORBA interface type. This makes it very difficult to build an integrated system without creating a heinous mess.

- The threading model provided is simplistic (though simplicity is not necessarily bad) and extremely manual.

- Each new module requires the recompilation of at least one MOM source object.

Given the chance to write a visualization and control system from scratch, we decided to go all-out and build the whole thing in 3D, since the available 3D tools were maturing.

Related Work

RWII

Real World Interfaces supplies a sensor visualization and control interface with its Mobility software. This interface lets a user examine exported CORBA/Mobility objects by launching a type-sensitive GUI window for each object. Since many objects share the same types but interpret the contained data differently, the user has to know which GUI component to launch for each named object and must manually spawn a UI component for each item of interest. This allows fine control over the visualization canvas and is extremely convenient for testing during control software development, but it lacks the integration of a more task-oriented interface.

Robot Excavator (U. of Vancouver?)

The U. of V. uses a 3D visualization tool to provide remote and/or simulated visual feedback for its 4-DOF joystick-driven backhoe. The backhoe model controlled by the joystick has a working context and a real-time articulation response keyed either to the model or to the live-data sensors on the actual backhoe. Our visualization objectives are somewhat similar to those of the backhoe project, but we also need to be able to modify our environment in various ways (view platform motion, batch navigation control, etc.).

Problem/Solution

The goal is to build a live-data 3D visualization and control system that is easy for an independent programmer to extend (without a source license or a custom source tree) and that supports full-robot descriptions for easy control of complex experiments.

A user-controlled runtime module-loading system allows independently developed extensions to be toggled on and off for both development and experimentation.

Multiple-robot support lets you visualize your entire fleet of robots at once, controlling each one independently.

Published module APIs let modules cooperate with one another when required.

UI ideas may possibly be borrowed from the complex interaction styles of games.

CORBA helper routines are provided, but their use is not required.

Platform

RWI Pioneers and ATRV-2

The robot hardware platforms currently in use for this layer of software development are a pair of small RWII Pioneers with a sonar suite and odometry feedback, and a larger ATRV-2 all-terrain robot with sonars, odometry, GPS, video, and a digital compass.

Software

- The low-level control software is the RWII-supplied Mobility suite, running across CORBA.

- The user interface is written for Java 1.2.2, Java3D 1.3, and JacORB 1.1.

Architecture

The virtual environment consists of a single VirtualUniverse populated by any number of declared Robot objects. Each robot has any number of robot-specific visualization and control modules that render data for that particular robot.

The VirtualUniverse may additionally contain some non-robot-specific modules, such as maps or buildings, for display.
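The containment described above can be sketched as a small object model. This is a minimal sketch in modern Java (generics did not exist in Java 1.2.2). It assumes hypothetical classes `RobotUniverse`, `Robot`, and `SensorModule` with a hypothetical `update()` hook, and it omits all rendering. The name `RobotUniverse` avoids confusion with Java3D's own `VirtualUniverse` class.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-in for the framework's SensorModule base class.
abstract class SensorModule {
    private final String name;

    SensorModule(String name) { this.name = name; }

    String getName() { return name; }

    // Hypothetical hook: refresh this module's data/rendering once per cycle.
    abstract void update();
}

// A declared robot and the robot-specific modules attached to it.
class Robot {
    private final String name;
    private final List<SensorModule> modules = new ArrayList<>();

    Robot(String name) { this.name = name; }

    String getName() { return name; }

    void addModule(SensorModule m) { modules.add(m); }

    List<SensorModule> getModules() { return Collections.unmodifiableList(modules); }
}

// Hypothetical stand-in for the single universe: any number of robots, plus
// shared modules (maps, buildings) that belong to no particular robot.
class RobotUniverse {
    private final List<Robot> robots = new ArrayList<>();
    private final List<SensorModule> sharedModules = new ArrayList<>();

    void addRobot(Robot r) { robots.add(r); }

    void addSharedModule(SensorModule m) { sharedModules.add(m); }

    // Updates every robot-specific and shared module; returns how many ran.
    int updateAll() {
        int count = 0;
        for (Robot r : robots) {
            for (SensorModule m : r.getModules()) {
                m.update();
                count++;
            }
        }
        for (SensorModule m : sharedModules) {
            m.update();
            count++;
        }
        return count;
    }
}
```

Keeping shared modules in a separate list from robot modules means a map or building model is drawn once, regardless of how many robots are declared.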

The framework architecture consists of a main framework container that holds the ModuleLoader and the VirtualUniverse and creates the basic UI components. The ModuleLoader reads a runtime config file describing the VirtualUniverse and creates the Robots and SensorModules declared in it. SensorModules may communicate by looking each other up by name as necessary (all configurable in the runtime config) and calling each other's public APIs. Logging data structures are provided to module programmers for easy recording of data streams during experiments.
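As a rough illustration of config-driven loading and name-based lookup, here is a minimal sketch. It assumes a hypothetical line-oriented config format (`robot <name>` and `module <robot> <instance> <class>`), a hypothetical `attach` hook, and two stub modules, `OdometryModule` and `HeadingDisplayModule`. The real config format and SensorModule API are not described here. Modules are instantiated reflectively with `Class.forName`, which is one way to load independently compiled extensions without recompiling the framework.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical minimal base class: each module learns its robot, its own
// instance name, and the loader it can use to find peer modules by name.
abstract class SensorModule {
    protected String robot;
    protected String name;
    protected ModuleLoader loader;

    void attach(String robot, String name, ModuleLoader loader) {
        this.robot = robot;
        this.name = name;
        this.loader = loader;
    }
}

// Stub module exposing a public API (returns a fixed value for illustration).
class OdometryModule extends SensorModule {
    double heading() { return 90.0; }
}

// Stub module that calls a peer's public API after looking it up by name.
class HeadingDisplayModule extends SensorModule {
    double displayedHeading(String peerName) {
        OdometryModule odo = (OdometryModule) loader.findModule(robot, peerName);
        return odo.heading();
    }
}

class ModuleLoader {
    // robot name -> (module instance name -> module), in declaration order
    private final Map<String, Map<String, SensorModule>> robots = new LinkedHashMap<>();

    // Each non-blank, non-comment line is either
    //   robot <robotName>
    //   module <robotName> <instanceName> <fully.qualified.ClassName>
    void load(Reader config) throws IOException, ReflectiveOperationException {
        BufferedReader in = new BufferedReader(config);
        String line;
        while ((line = in.readLine()) != null) {
            line = line.trim();
            if (line.isEmpty() || line.startsWith("#")) continue;
            String[] f = line.split("\\s+");
            if (f[0].equals("robot") && f.length == 2) {
                robots.put(f[1], new LinkedHashMap<>());
            } else if (f[0].equals("module") && f.length == 4) {
                Map<String, SensorModule> mods = robots.get(f[1]);
                if (mods == null) {
                    throw new IllegalArgumentException("undeclared robot: " + f[1]);
                }
                // Load the named class at runtime; the framework is not recompiled.
                SensorModule m = Class.forName(f[3])
                        .asSubclass(SensorModule.class)
                        .getDeclaredConstructor()
                        .newInstance();
                m.attach(f[1], f[2], this);
                mods.put(f[2], m);
            } else {
                throw new IllegalArgumentException("bad config line: " + line);
            }
        }
    }

    // Returns the named module on the given robot, or null if none is declared.
    SensorModule findModule(String robot, String name) {
        Map<String, SensorModule> mods = robots.get(robot);
        return mods == null ? null : mods.get(name);
    }
}
```

Because a module reaches its peers only through `findModule`, the caller never holds a compile-time reference to a specific peer instance. Only the peer's class is needed to call its API.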

Results

How fast is this?

On a reasonably fast machine, the UI performs adequately until a large number of data streams are managing many polygons at once. Unfortunately, the JVM appears to be the limiting factor on our Linux development platforms. The exact threshold has not yet been measured. It may be lower than expected, for example when building models are displayed.

Performance on Windows has not yet been tested.

How easy is it to develop new modules?

All modules are subclasses of the SensorModule class, which provides a large API to simplify development. Modules are loaded into the UI at runtime by declaring them in the config file, associated with a specific robot if necessary, so that the ModuleLoader can find them. Only declared modules are loaded for a given robot, so module sets may differ widely across robots with different capabilities.
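To give a feel for what a module author writes, here is a minimal sketch. It assumes a hypothetical `onData` callback and a hypothetical `log` helper on the base class. The actual SensorModule API is much larger and is not reproduced here. The logging helper loosely mirrors the logging data structures the framework provides, but its shape is an assumption.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical base class exposing a tiny subset of a module-author API.
abstract class SensorModule {
    private final Map<String, List<Double>> logs = new LinkedHashMap<>();

    // Helper for recording a named data stream during an experiment.
    protected void log(String stream, double value) {
        logs.computeIfAbsent(stream, k -> new ArrayList<>()).add(value);
    }

    // Read-only view of a recorded stream (empty if nothing was logged).
    List<Double> getLog(String stream) {
        return Collections.unmodifiableList(logs.getOrDefault(stream, Collections.emptyList()));
    }

    // Subclasses implement only this: handle one batch of fresh sensor data.
    protected abstract void onData(double[] values);
}

// Example of module-author code: track and log the nearest sonar return.
class NearestObstacleModule extends SensorModule {
    private double nearest = Double.POSITIVE_INFINITY;

    @Override
    protected void onData(double[] ranges) {
        double min = Double.POSITIVE_INFINITY;
        for (double r : ranges) {
            min = Math.min(min, r);
        }
        nearest = min;
        log("nearest", min);
    }

    double getNearest() { return nearest; }
}
```

The subclass contains only sensor-specific logic. Bookkeeping such as stream logging lives in the base class, which is the kind of simplification a large base-class API is meant to provide.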

Notable modules

- NavServerModule: One of the main workhorse control modules, NavServerModule is the front end to the Navigation and Localizer system. This system provides a task-oriented API with commands ranging from basic motion (GOTO, TURNTO) to camera control, plus a batch command processing facility. NavServer command "targets" are managed through a combination of a list widget and a manifestation interface in the universe. The user can manage navigation targets by clicking in the virtual world and dragging the generated golf-cup-style flags around. An integrated Voronoi-based path planner in the client can optionally be used to interpolate safe paths between geographic targets.

- Active Robot: The notion of an "active" robot separates the robots' control windows from one another. Generally only one module receives user input at a time, and the currently active robot's windows are shown by default while the others are hidden to minimize confusion. Modules may also render their data differently depending on whether or not they are active. The robot manifestation module, for example, draws a ring around the robot when it is active.
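The NavServer's batch command facility can be illustrated with a small command queue. This sketch makes three assumptions, none taken from the actual system. First, a hypothetical `NavServer` interface whose `gotoPoint` and `turnTo` methods stand in for the GOTO and TURNTO commands. Second, a hypothetical `NavCommand` type. Third, a hypothetical one-command-per-line script syntax. Camera control and the Voronoi path planner are not shown.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical client-side view of the navigation server's task-level API.
interface NavServer {
    boolean gotoPoint(double x, double y);   // GOTO: drive to (x, y)
    boolean turnTo(double headingDeg);       // TURNTO: rotate to an absolute heading
}

// One parsed batch command, executed against a NavServer.
interface NavCommand {
    boolean execute(NavServer nav);
}

class BatchProcessor {
    // Parses lines such as "GOTO 3.0 4.5" or "TURNTO 90" (hypothetical syntax).
    static List<NavCommand> parse(List<String> lines) {
        List<NavCommand> cmds = new ArrayList<>();
        for (String raw : lines) {
            String line = raw.trim();
            if (line.isEmpty()) continue;
            String[] f = line.split("\\s+");
            switch (f[0].toUpperCase(Locale.ROOT)) {
                case "GOTO": {
                    double x = arg(f, 1, line);
                    double y = arg(f, 2, line);
                    cmds.add(nav -> nav.gotoPoint(x, y));
                    break;
                }
                case "TURNTO": {
                    double h = arg(f, 1, line);
                    cmds.add(nav -> nav.turnTo(h));
                    break;
                }
                default:
                    throw new IllegalArgumentException("unknown command: " + line);
            }
        }
        return cmds;
    }

    // Reads numeric argument i, failing clearly if it is missing.
    private static double arg(String[] f, int i, String line) {
        if (i >= f.length) throw new IllegalArgumentException("missing argument: " + line);
        return Double.parseDouble(f[i]);
    }

    // Runs commands in order and stops at the first one the server rejects.
    // Returns the number of commands that completed successfully.
    static int run(List<NavCommand> cmds, NavServer nav) {
        int done = 0;
        for (NavCommand c : cmds) {
            if (!c.execute(nav)) break;
            done++;
        }
        return done;
    }
}
```

Parsing the whole batch before running it catches syntax errors before the robot moves. Stopping at the first rejected command avoids executing later steps whose preconditions may no longer hold. A real batch facility might instead report the failure and continue.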

|

|

Screenshot of 3D RobotUniverse window w/ a robot and some

NavTargets

Screenshot of 3D RobotUniverse window w/ a robot and some

NavTargets

|

|

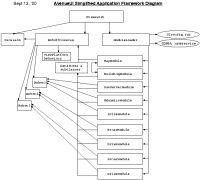

Framework class and dataflow diagram

Framework class and dataflow diagram

|

|

|