Gaze Locking: Passive Eye Contact Detection for Human–Object Interaction

Eye contact plays a crucial role in our everyday social interactions. The ability of a device to reliably detect when a person is looking at it can lead to powerful human–object interfaces. Today, most gaze-based interactive systems rely on gaze tracking technology. Unfortunately, current gaze tracking techniques require active infrared illumination or calibration, or are sensitive to distance and pose. In this work, we propose a different solution: a passive, appearance-based approach for sensing eye contact in an image. By focusing on gaze locking rather than gaze tracking, we exploit the special appearance of direct eye gaze, achieving a Matthews correlation coefficient (MCC) of over 0.83 at long distances (up to 18 m) and large pose variations (up to ±30° of head yaw rotation) using a very basic classifier and without calibration. To train our detector, we also created a large publicly available gaze data set: 5,880 images of 56 people over varying gaze directions and head poses. We demonstrate how our method facilitates human–object interaction (top-left), user analytics (top-right), image filtering (bottom-left), and gaze-triggered photography (bottom-right).

Publications

"Gaze Locking: Passive Eye Contact Detection for Human–Object Interaction," B.A. Smith, Q. Yin, S.K. Feiner and S.K. Nayar, ACM Symposium on User Interface Software and Technology (UIST), pp. 271-280, Oct. 2013.


Images


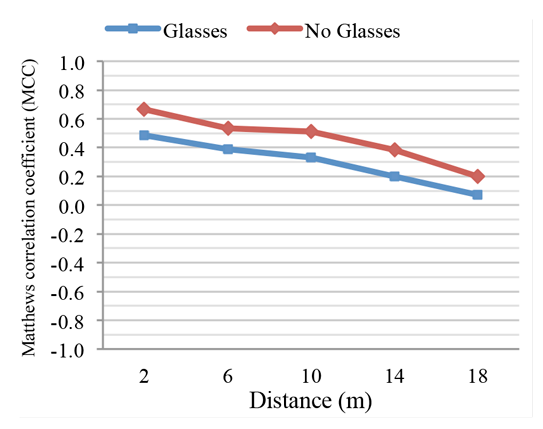

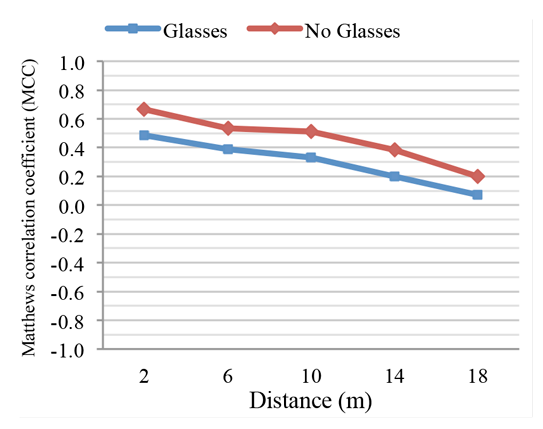

Gaze Locking in People:

(a) People are relatively accurate at sensing eye contact, even when the person gazing (i.e., the gazer) is wearing prescription glasses. At distances of 18 m, gazees still achieve MCCs of over 0.2 if the gazer is not wearing glasses. Here, the gazer is at a frontal (0°) head pose. (b) The gazee's accuracy decreases roughly linearly over distance regardless of the gazer's (horizontal) head pose. Head poses that are more off-center (such as ±30°) have slightly lower MCCs. (c) The gazees are least accurate when the gazer is actually looking at them (the 0° case); that is, the false negative rate is higher than the false positive rate. Interestingly, if the gazer is looking away, the gazee is more accurate when he or she can only see one of the gazer's eyes (the blue line is not strictly above the red and green lines).
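The Matthews correlation coefficient reported throughout this page can be computed directly from confusion-matrix counts. The sketch below uses the standard MCC definition; the function names are illustrative and do not come from the paper. It also returns the false negative and false positive rates that panel (c) compares.

```python
import math


def matthews_corrcoef(tp: int, fp: int, tn: int, fn: int) -> float:
    """Standard MCC from confusion-matrix counts.

    Returns 0.0 when any row or column of the confusion matrix is empty,
    which is the usual convention for the undefined case.
    """
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


def error_rates(tp: int, fp: int, tn: int, fn: int) -> tuple:
    """Return (false negative rate, false positive rate)."""
    fnr = fn / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return fnr, fpr
```

An MCC of 1 means perfect agreement, 0 is no better than chance, and -1 is total disagreement, which makes it a useful single number when the "looking" and "not looking" classes are unbalanced.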


Gaze Locking Detector Pipeline:

Our gaze locking detector consists of three broad phases, shown here in different colors. In the first phase, we locate the eyes in an image and transform them into a standard coordinate frame. In the second phase, we mask out the eyes' surroundings and assemble pixel-wise features from the eyes' appearance. Finally, we project these features into a low-dimensional space, then feed them into a binary classifier to determine whether the face is gaze locking or not.
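The second and third phases can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the eyes are taken to be already rectified into same-sized grayscale grids, the projection is a generic linear projection onto a precomputed basis, and the classifier is a simple linear decision rule. The page does not specify the projection or the classifier, so `mean`, `basis`, `weights`, and `bias` are hypothetical values learned offline.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # grayscale pixels, indexed [row][col]


def assemble_features(left_eye: Image, right_eye: Image,
                      mask: Sequence[Sequence[bool]]) -> List[float]:
    """Phase 2: keep only the unmasked pixels of each eye and concatenate them."""
    features: List[float] = []
    for eye in (left_eye, right_eye):
        for pixel_row, mask_row in zip(eye, mask):
            features.extend(p for p, keep in zip(pixel_row, mask_row) if keep)
    return features


def project(features: Sequence[float], mean: Sequence[float],
            basis: Sequence[Sequence[float]]) -> List[float]:
    """Phase 3a: center the features, then take a dot product with each basis row."""
    centered = [f - m for f, m in zip(features, mean)]
    return [sum(c * b for c, b in zip(centered, row)) for row in basis]


def is_gaze_locking(low_dim: Sequence[float], weights: Sequence[float],
                    bias: float) -> bool:
    """Phase 3b: hypothetical linear decision rule standing in for the binary classifier."""
    score = sum(w * x for w, x in zip(weights, low_dim)) + bias
    return score > 0.0
```

Keeping the three steps as separate functions mirrors the phase boundaries in the figure and makes it easy to swap in a different projection or classifier.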


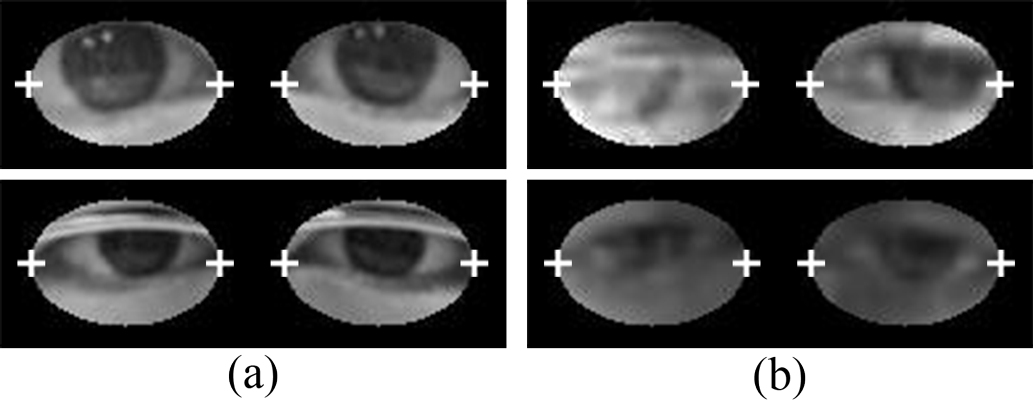

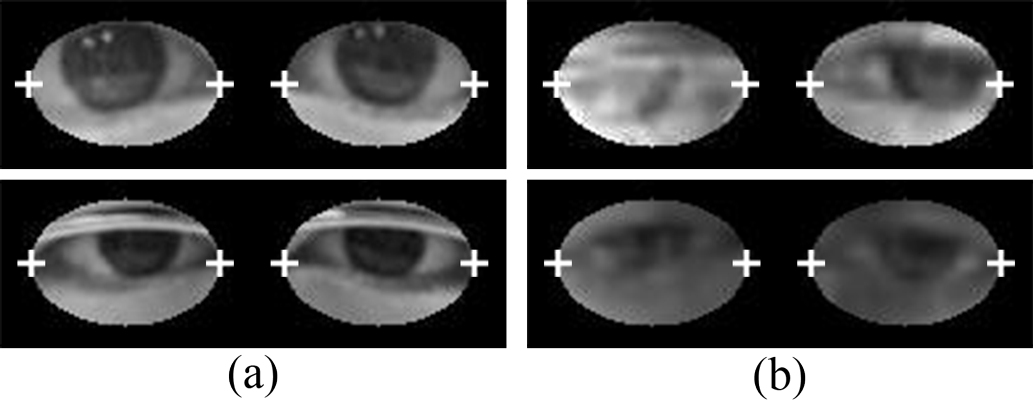

Rectified Features and Failure Cases:

(a) Examples of rectified and masked features. Each eye has been transformed to a 48×36 px coordinate frame. The crosshairs signify eye corners detected in the first phase. We mask each eye with a fixed-size ellipse whose shape was optimized offline for accuracy. (b) Two failure cases: strong highlights on glasses (top) and low contrast (bottom).
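A fixed-size elliptical mask over the 48×36 px frame can be built as follows. The frame size comes from the caption above; the ellipse center and the semi-axis lengths (`semi_x`, `semi_y`) are placeholder assumptions, since the optimized shape is not given here.

```python
from typing import List


def elliptical_mask(width: int = 48, height: int = 36,
                    semi_x: float = 20.0, semi_y: float = 12.0) -> List[List[bool]]:
    """Return a height x width grid that is True inside a centered ellipse.

    semi_x and semi_y are hypothetical semi-axis lengths in pixels.
    """
    cx = (width - 1) / 2.0   # center column of the frame
    cy = (height - 1) / 2.0  # center row of the frame
    return [
        [((x - cx) / semi_x) ** 2 + ((y - cy) / semi_y) ** 2 <= 1.0
         for x in range(width)]
        for y in range(height)
    ]


def masked_pixels(eye: List[List[float]], mask: List[List[bool]]) -> List[float]:
    """Flatten the pixels that fall inside the mask, row by row."""
    return [p for row, mrow in zip(eye, mask) for p, keep in zip(row, mrow) if keep]
```

Because the mask is the same for every eye, the resulting feature vector always has the same length, which is what a fixed linear projection requires.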


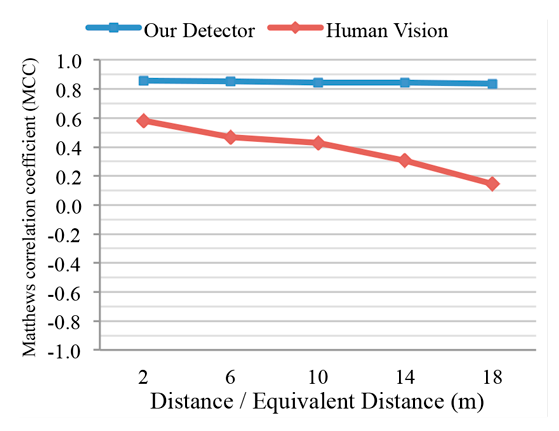

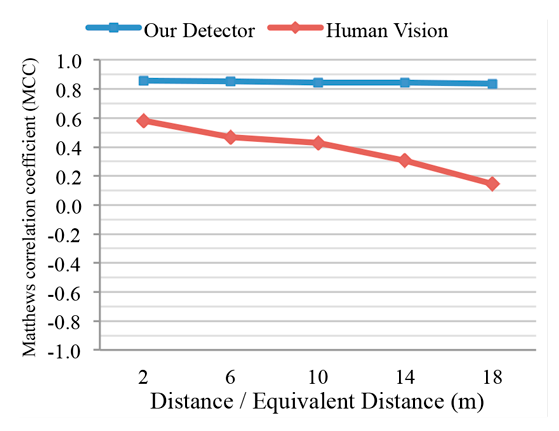

Gaze Locking Detector Performance:

In these tests, we downsampled our detector's test images to match the resolution seen by the human fovea at the respective distances. (a) Our detector achieves MCCs of over 0.83 at a distance of 18 m, significantly outperforming humans' accuracy. The detector's accuracy is fairly constant over distance because our method uses features that are of very low resolution. The line representing human performance is an aggregation of the lines from Figure (a) of "Gaze Locking in People" above. (b) Our detector's accuracy is also fairly constant over a variety of (horizontal) head poses. (c) As with human vision, our detector's accuracy is worst when people are looking at or very close to the camera. Our detector significantly outperforms human vision nonetheless.
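One simple way to simulate a greater viewing distance is to block-average an image by a factor that grows with distance. The sketch below is an assumption-laden illustration, not the procedure used in these tests: it assumes the apparent size of the eye shrinks in inverse proportion to distance, and `reference_m` (the distance at which the original image was captured) is a hypothetical parameter.

```python
from typing import List, Sequence


def downsample_factor(distance_m: float, reference_m: float) -> int:
    """Integer shrink factor, assuming apparent size scales as 1 / distance."""
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return max(1, round(distance_m / reference_m))


def downsample(image: Sequence[Sequence[float]], factor: int) -> List[List[float]]:
    """Average non-overlapping factor x factor blocks; leftover edge pixels are dropped."""
    rows = len(image) // factor
    cols = len(image[0]) // factor if image else 0
    out: List[List[float]] = []
    for r in range(rows):
        out_row: List[float] = []
        for c in range(cols):
            block = [image[r * factor + dr][c * factor + dc]
                     for dr in range(factor) for dc in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out
```

Under these assumptions, an image captured at 2 m would be shrunk by a factor of 9 to approximate an 18 m viewing distance.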


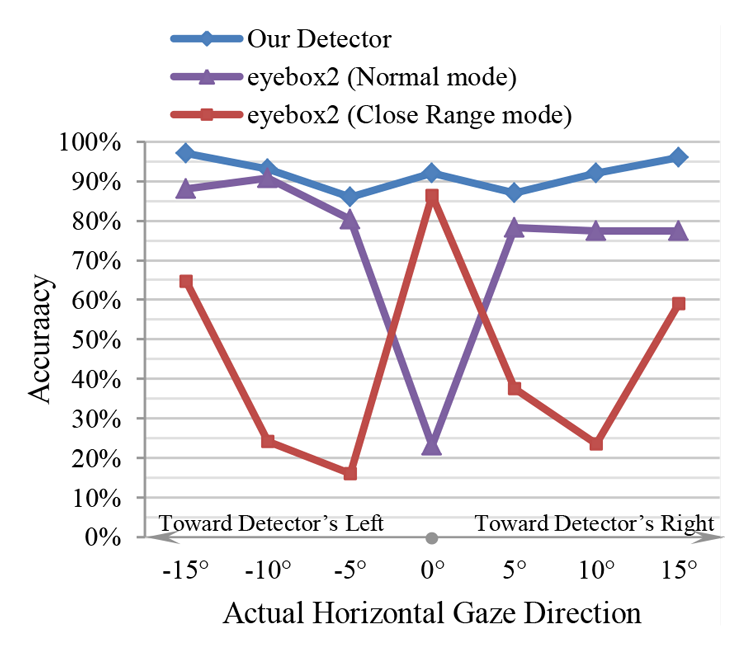

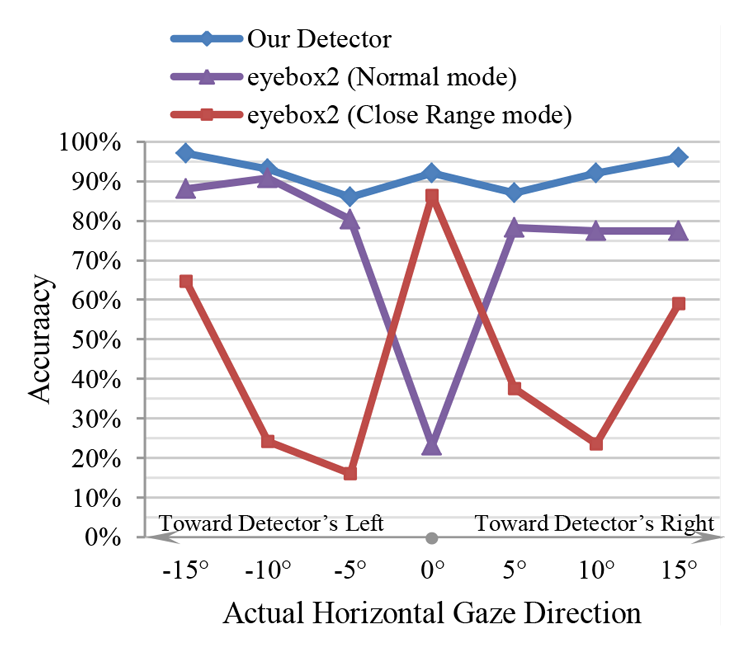

Comparison with an Active System:

Here we compare our sample detector with an eyebox2, which implements an active infrared approach to eye contact detection, in both Normal (6 m) and Close Range (2 m) modes. Though passive, our detector is more accurate than the eyebox2. The eyebox2's Normal mode seems to be tuned toward reducing false positives, and its Close Range mode seems to be tuned toward reducing false negatives.


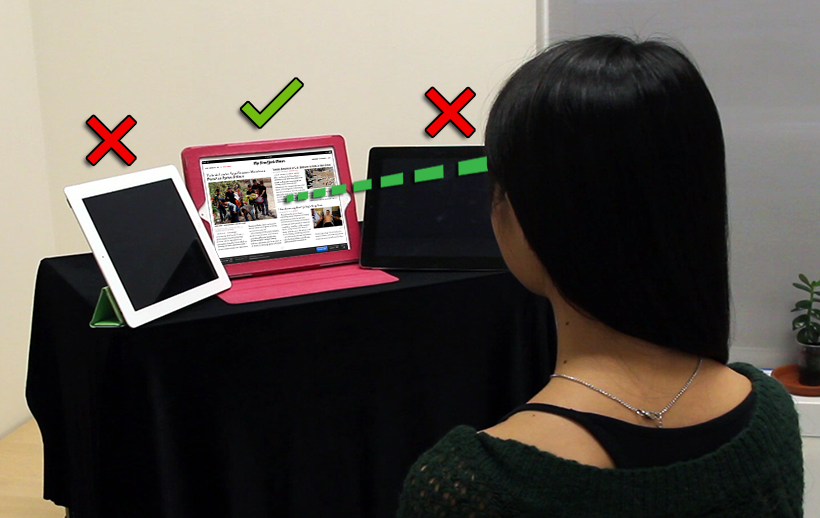

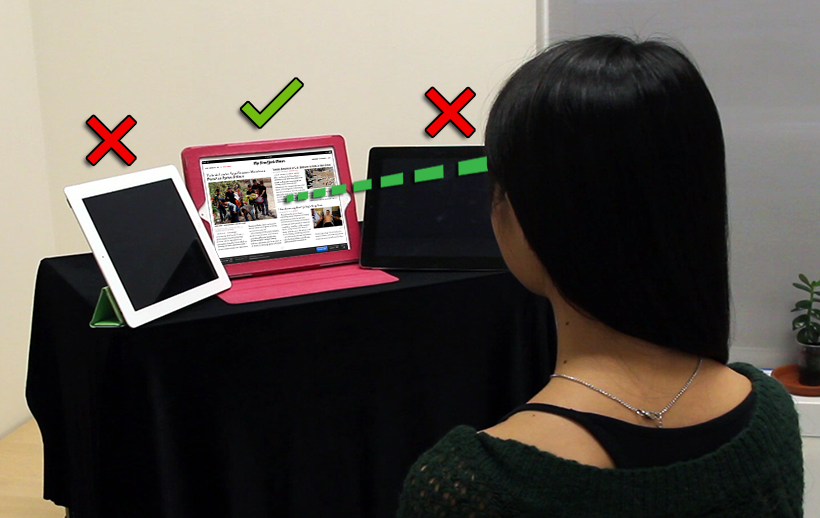

Application 1: Human–Object Interaction:

Our gaze locking approach allows people to interact with objects just by looking at them. In this proof of concept, we process the videos from the embedded cameras of three iPads to sense when the iPads are being looked at. Here, the woman is looking at the iPad in the middle. Since the iPads' cameras are on their extreme left, she was instructed to look at the iPads' left halves. Our accompanying video shows our detector's output on the actual video feeds.


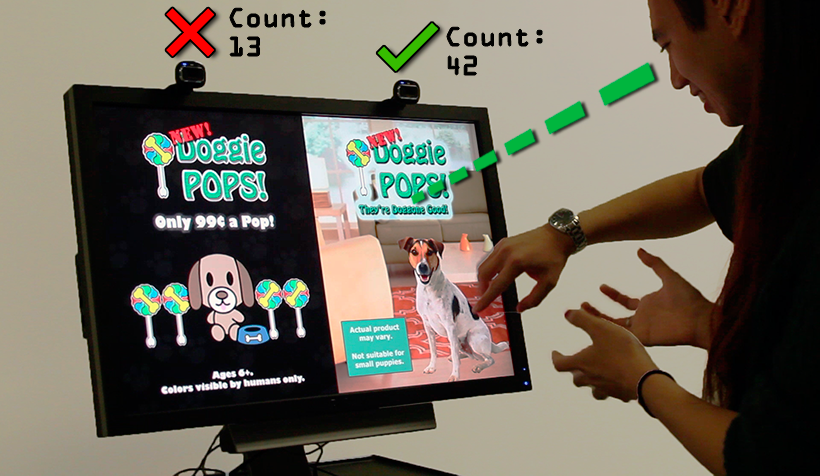

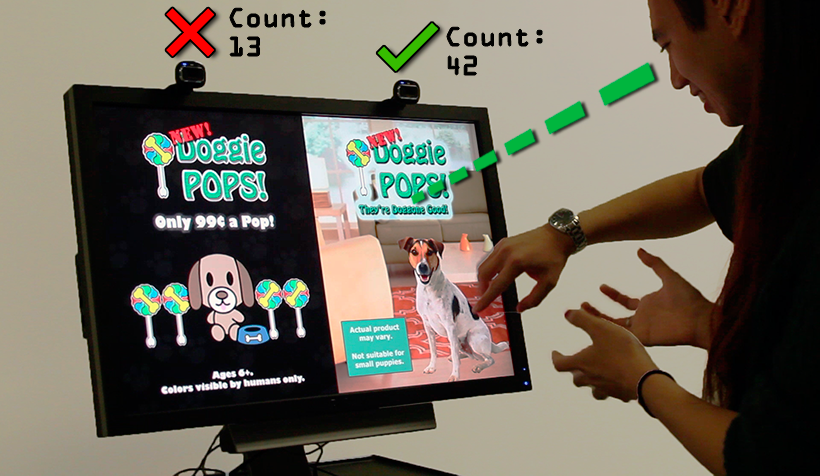

Application 2: User Analytics:

Two ordinary webcams are placed above two ads for the same product. By counting the number of times each advertisement is viewed, we can gauge which one is more effective. The counts were incremented when the viewers looked at the ads' top halves. Our accompanying video shows our detector's output on the actual video feeds.
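Turning per-frame detections into view counts requires deciding when one "view" ends and the next begins. A minimal sketch, assuming each webcam yields one boolean gaze-locking decision per frame, counts a view each time the detector stays positive for a minimum number of consecutive frames. The `min_frames` debounce parameter is an assumption added here to suppress single-frame false positives; it is not described on this page.

```python
from typing import Iterable


def count_views(locked_frames: Iterable[bool], min_frames: int = 3) -> int:
    """Count runs of at least min_frames consecutive positive detections."""
    views = 0
    run = 0  # length of the current run of positive frames
    for locked in locked_frames:
        if locked:
            run += 1
            if run == min_frames:  # count each run once, when it first qualifies
                views += 1
        else:
            run = 0
    return views
```

Running this on the decision stream of each webcam gives one count per advertisement, and the two counts can then be compared directly.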


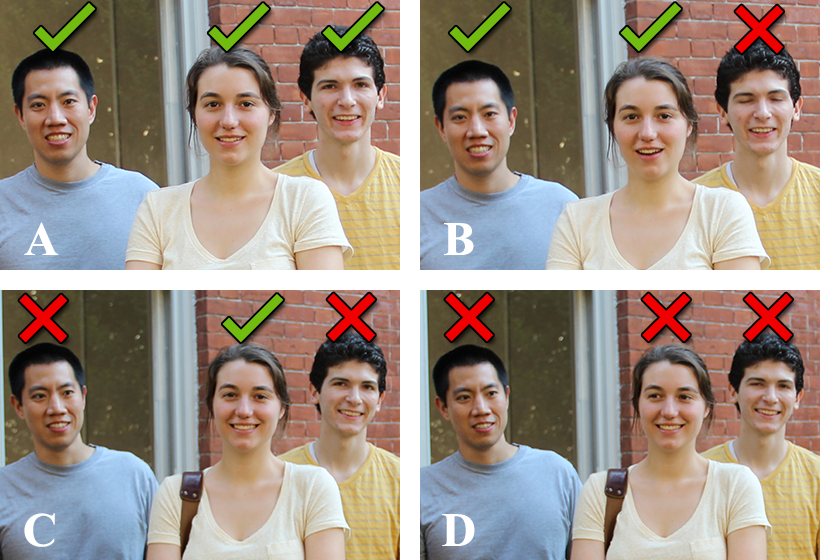

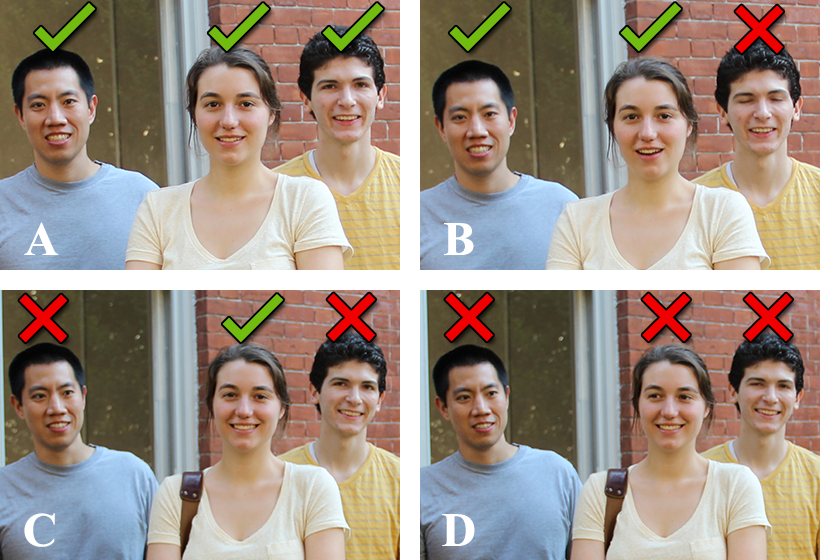

Application 3: Image Search Filter:

Our approach is completely appearance-based and can be applied to any image, including existing images such as ones from the Web. Hence, we can sort these images (A–D) by degree of eye contact to quickly find one where everyone is looking at the camera. These are actual decisions made by our detector.
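Sorting by degree of eye contact can be sketched as follows, assuming the detector returns one boolean decision per detected face. Defining the "degree" as the fraction of faces that are gaze locking is an assumption made for this example; the page does not define the measure.

```python
from typing import Dict, List, Sequence


def eye_contact_degree(face_decisions: Sequence[bool]) -> float:
    """Fraction of faces judged to be gaze locking (0.0 if no faces were found)."""
    if not face_decisions:
        return 0.0
    return sum(face_decisions) / len(face_decisions)


def rank_images(decisions_by_image: Dict[str, Sequence[bool]]) -> List[str]:
    """Return image names ordered from most to least eye contact."""
    return sorted(decisions_by_image,
                  key=lambda name: eye_contact_degree(decisions_by_image[name]),
                  reverse=True)
```

An image where everyone is looking at the camera has a degree of 1.0 and therefore sorts to the front of the list.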


Application 4: Gaze-Triggered Photography:

By incorporating a gaze locking detector in a consumer-level camera, the camera could automatically take a picture when the entire group is looking straight back, allowing the photographer to join the group and still capture a perfect photo. Our accompanying video shows our detector's output on the camera's feed.
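The trigger logic for such a camera can be sketched as a check that every detected face is gaze locking. The version below additionally requires the condition to hold for several consecutive frames before firing; that `hold_frames` parameter is a hypothetical safeguard against momentary false positives and is not part of the description above.

```python
from typing import Optional, Sequence


def first_trigger_frame(decisions_per_frame: Sequence[Sequence[bool]],
                        hold_frames: int = 5) -> Optional[int]:
    """Index of the first frame at which all faces have been gaze locking
    for hold_frames consecutive frames, or None if that never happens.

    Frames with no detected faces reset the streak.
    """
    streak = 0
    for index, faces in enumerate(decisions_per_frame):
        if faces and all(faces):
            streak += 1
            if streak >= hold_frames:
                return index
        else:
            streak = 0
    return None
```

With `hold_frames=1` this reduces to firing on the first frame in which the whole group is looking at the camera.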


Video


UIST 2013 Video:

This video is the supplemental video for our UIST 2013 paper. It contains a brief summary of our approach and demonstrates a few of the applications that gaze locking facilitates. It also shows our detector's output on the feeds and images from Applications 1–4 above.


Database


Columbia Gaze Data Set:

We have made our data set publicly available for use by researchers. This data set includes 56 people and 5,880 images over varying gaze directions and head poses.

