Columbia speech researchers present four papers in August

Last week in Stockholm, Columbia researchers presented three papers at Interspeech, the largest and most comprehensive conference on the science and technology of spoken language processing. A fourth paper was presented at *SEM, a conference on lexical and computational semantics held August 3-4 in Vancouver, Canada. High-level descriptions of the four papers are given in the following summaries and in interviews with lead authors.

Hybrid Acoustic-Lexical Deep Learning Approach for Deception Detection

Gideon Mendels, Sarah Ita Levitan, Kai-Zhan Lee, and Julia Hirschberg

Interview with lead author Gideon Mendels.

What was the motivation for the work?

The deception project is an ongoing effort led by Sarah Ita Levitan under the supervision of Professor Julia Hirschberg.

In this work, our motivation was to see whether deep learning could improve our results, since previous experiments were mostly based on traditional machine learning methods.

How prevalent is deep learning becoming for speech-related research? How is it changing speech research?

Deep learning is very popular these days and in fact has been for the past few years. In speech recognition, researchers originally replaced traditional machine learning models with deep learning models and gained impressive improvements. While these systems had deep learning components, they were still mostly based on the traditional architecture.

Recent developments have allowed researchers to design end-to-end deep learning systems that completely replace traditional methods while still obtaining state-of-the-art results. In other subfields of speech research, deep learning has not been as successful, whether because simpler models suffice or because large datasets are lacking.

In your view, will deep learning replace traditional machine learning approaches?

I see deep learning as just another tool in the researcher’s toolbox. The choice of algorithm requires an understanding of the underlying problem and constraints. In many cases, traditional machine learning approaches are better, faster, and easier to train. I doubt traditional methods will be completely replaced by deep learning.

You investigated four deep learning approaches to detecting deception in speech. How did you decide on these particular approaches?

We chose these methods based on previous work on deception detection, on similar tasks such as emotion detection, and on the intuition for what works that we developed by running thousands of experiments.

Nonetheless, there’s a lot more to be done! There are different model architectures to explore and different ways to arrange the data. I’m most excited about the application of end-to-end models that learn to extract useful information directly from the recording without any feature engineering.

Your best performing model was a hybrid approach that combined two models. Is this what you would have expected?

Our original experiments focused on detecting deception either from lexical features (the choice of words) or from acoustic features of the speaker’s voice. While both proved valuable for detecting deception, we were interested to see whether they complement or overlap each other. By combining the two, we got a better result than with either one individually. I can’t say this was a huge surprise, since humans trying to detect lies use as many signals as possible: voice, words, body language, etc. Overall, our best model achieved an F1 score of 63.9%, about 7.5% better than previous work on the same dataset.
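The idea of combining the two signal types can be illustrated with feature-level (early) fusion: concatenate a lexical feature vector and an acoustic feature vector and score the result with a single classifier. The sketch below swaps in a plain logistic-regression scorer for the paper’s neural networks, and the feature values and weights are made up; it shows only the fusion step, not the authors’ architecture.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic function mapping a real-valued score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def hybrid_score(lexical: Sequence[float],
                 acoustic: Sequence[float],
                 weights: Sequence[float],
                 bias: float) -> float:
    """Return P(deceptive) for one utterance from both feature views.

    The two views are concatenated (early fusion) and scored by one linear
    model, so a single model weighs lexical and acoustic evidence together.
    The weights are hypothetical, not trained values.
    """
    fused = list(lexical) + list(acoustic)
    if len(fused) != len(weights):
        raise ValueError("need one weight per fused feature")
    z = sum(w * x for w, x in zip(weights, fused)) + bias
    return sigmoid(z)


# Hypothetical example: two lexical features and one acoustic feature.
p = hybrid_score([0.2, 0.7], [1.5], weights=[0.4, -0.3, 0.8], bias=-0.5)
print(f"P(deceptive) = {p:.3f}")
```

A late-fusion alternative would train separate lexical and acoustic models and combine their output probabilities instead; which works better is an empirical question.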

Can this hybrid model be adapted to identify speech phenomena other than deception?

Definitely. Now that our work is published we’re excited to see how other researchers will use it for other tasks. The hybrid model could be especially useful in tasks such as emotion detection and intent classification.

Utterance Selection for Optimizing Intelligibility of TTS Voices Trained on ASR Data

Erica Cooper, Xinyue Wang, Alison Chang, Yocheved Levitan, and Julia Hirschberg

Creating a text-to-speech (TTS), or synthetic, voice like those used by Siri, Alexa, and Microsoft’s Cortana is a major undertaking that typically requires many hours of recorded speech, preferably made in a soundproof room with a professional speaker reading in a prescribed fashion. It is time-consuming, labor-intensive, and expensive, and it has been done almost exclusively for English, Spanish, German, Mandarin, Japanese, and a few other languages; it is almost never undertaken for the other 6,900 or so languages in the world, leaving them without the resources required for building speech interfaces.

Repurposing existing, or “found,” speech (from TV or radio broadcasts, audiobooks, or websites) may provide a less expensive alternative. In a process called parametric synthesis, many different voices are aggregated to create an entirely new one, with speaking characteristics averaged across all utterances. Columbia researchers see this as a possible way to address the lack of resources common to most languages.

Found speech utterances, however, have their own challenges. They contain noise, and speakers vary in speaking rate, pitch, and level of articulation, some of which may hinder intelligibility. While low articulation, somewhat fast speaking rates, and low variation in pitch are known to aid naturalness, it wasn’t clear whether these same characteristics would aid intelligibility. More articulation and slower speaking rates might actually make voices easier to understand.

“We wanted to discover what would produce a nice, clean voice that was easy to understand,” says Erica Cooper, the paper’s lead author. “If we could identify utterances that were introducing noise or hindering intelligibility in some way, we could filter out those utterances and work only with good ones. We wanted also to understand whether a principled approach to utterance selection meant we could work with less data.”

Though the goal is to create voices for low-resource languages, the researchers focused on English initially to identify the best filtering techniques. Using the MACROPHONE corpus, an 83-hour collection of short utterances taken from telephone-based dialog systems, the researchers filtered out three kinds of potentially problematic data: utterances whose transcriptions were labeled as noise, clipped utterances (where the waveform was abruptly cut off), and utterances containing spelled-out words.
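The three filters can be sketched as independent predicates, so each can be switched on or off and its effect on the resulting voice measured separately. Everything here is hypothetical: the `[noise]` marker, the end-of-waveform amplitude test for clipping, and the run-of-single-letters test for spelled-out words are illustrative stand-ins, not the corpus’s actual conventions or the researchers’ code.

```python
from dataclasses import dataclass
from typing import Iterable, List

NOISE_TAG = "[noise]"  # hypothetical transcript marker for noise-only utterances


@dataclass
class Utterance:
    utt_id: str
    transcript: str
    samples: List[float]  # waveform, assumed normalized to [-1.0, 1.0]


def is_noise(utt: Utterance) -> bool:
    return NOISE_TAG in utt.transcript.lower()


def is_clipped(utt: Utterance, tail: int = 50, threshold: float = 0.1) -> bool:
    """Heuristic: audio cut off mid-sound still has high amplitude at the very end."""
    end = utt.samples[-tail:]
    return bool(end) and max(abs(s) for s in end) > threshold


def has_spelled_word(utt: Utterance) -> bool:
    """Heuristic: two or more consecutive single-letter tokens, e.g. "j o h n"."""
    run = 0
    for token in utt.transcript.split():
        run = run + 1 if len(token) == 1 and token.isalpha() else 0
        if run >= 2:
            return True
    return False


def filter_utterances(utts: Iterable[Utterance], drop_noise: bool = True,
                      drop_clipped: bool = True,
                      drop_spelled: bool = True) -> List[Utterance]:
    """Keep utterances that pass every enabled filter."""
    kept = []
    for u in utts:
        if drop_noise and is_noise(u):
            continue
        if drop_clipped and is_clipped(u):
            continue
        if drop_spelled and has_spelled_word(u):
            continue
        kept.append(u)
    return kept
```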

Using the open-source HTS Toolkit, the researchers created 34 voices from utterances spoken by adult female speakers. The training utterances for each voice were selected according to different acoustic and prosodic characteristics and drawn from different subsets of the corpus: some voices were trained on the first 10 hours of adult female speech in the corpus, others on 2- and 4-hour subsets.

Each voice was used to synthesize the same 10 sentences, and each set of synthesized sentences was evaluated by five Mechanical Turk workers who transcribed the words they heard. The word error rate (WER) of these transcriptions was used to judge each voice’s intelligibility.

Each worker could listen to only one set of sentences, to ensure they were not transcribing words from memory; the task thus required a relatively large number of workers. The rate at which workers signed up quickly became a bottleneck, adding weeks to the project and prompting the researchers to consider using automatic speech recognition (ASR) as a proxy for human evaluations. ASR evaluations would speed things up, but would they agree with human evaluations when assessing intelligibility?
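Building one voice per selection criterion amounts to ranking utterances by an acoustic or prosodic feature and keeping the top-ranked ones until a duration budget (for example 2 hours, or 7,200 seconds) is filled. A minimal greedy sketch, assuming per-utterance feature values have already been extracted; the field names and the greedy fill are illustrative choices, not the study’s procedure.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class UttStats:
    utt_id: str
    duration_s: float
    speaking_rate: float  # hypothetical feature, e.g. syllables per second
    pitch_sd: float       # standard deviation of pitch, i.e. pitch variation


def select_subset(utts: List[UttStats], key: Callable[[UttStats], float],
                  budget_s: float, descending: bool = False) -> List[UttStats]:
    """Greedily keep the best-ranked utterances that fit within budget_s seconds."""
    chosen: List[UttStats] = []
    total = 0.0
    for u in sorted(utts, key=key, reverse=descending):
        if total + u.duration_s > budget_s:
            continue  # too long to fit; a shorter, lower-ranked one may still fit
        chosen.append(u)
        total += u.duration_s
    return chosen
```

For a “fast speech” voice the key would be `lambda u: u.speaking_rate` with `descending=True`; for a “middle pitch variation” voice it could be the distance from the corpus median, e.g. `key=lambda u: abs(u.pitch_sd - median_sd)`, with `median_sd` computed beforehand (for instance with `statistics.median`).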
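Word error rate is the word-level edit distance (substitutions, deletions, and insertions) between a reference sentence and a listener’s transcription, divided by the number of reference words; lower means more intelligible. A minimal sketch, assuming simple lowercasing and whitespace tokenization (the study’s text normalization isn’t described here) and a hypothetical layout for the workers’ transcriptions:

```python
from statistics import mean
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Row i of the dynamic-programming table holds the edit distances between
    # the first i reference words and every prefix of the hypothesis; only the
    # previous row is kept in memory.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = prev[j] + 1
            insertion = cur[j - 1] + 1
            cur[j] = min(substitution, deletion, insertion)
        prev = cur
    return prev[-1] / len(ref)


def voice_wer(references: List[str], transcriptions: List[List[str]]) -> float:
    """Average WER over sentences and workers; transcriptions[k] lists every
    worker's transcription of sentence k (hypothetical data layout)."""
    return mean(word_error_rate(ref, hyp)
                for ref, hyps in zip(references, transcriptions)
                for hyp in hyps)
```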

Extending the scope of the experiment, the researchers studied whether ASR could evaluate intelligibility, using three off-the-shelf ASR APIs: Google Cloud Speech, wit.ai (owned by Facebook), and IBM’s Watson. The ASR results did in fact correlate closely with those of the Mechanical Turk workers: every voice that humans rated better than the baseline was also rated better by all three ASR systems, showing the promise of ASR for assessing the intelligibility of synthetic speech. There was one notable caveat. Sending the same audio clip multiple times to one of the APIs (wit.ai) did not necessarily return the same transcription, so some ASR systems may produce variable results. But then again, humans were even more variable in evaluating intelligibility. (Google and Watson showed no variability, always returning the same transcription.)

The most intelligible voices, for both humans and the ASR systems, were those with a faster speaking rate, a low level of articulation, and a middle level of pitch variation. (For naturalness, the researchers had previously found the best features to be a low level of articulation as well, but a low, rather than middle, mean pitch.) Removing utterances whose transcriptions were labeled as noise produced a noticeable improvement, but removing clipped utterances or utterances with spelled-out words did not.

More training data wasn’t necessarily better: a smaller amount of data chosen in a principled way could do as well or better. Compared with a baseline voice trained on the first 10 hours of adult female speech, which achieved 67.7% accuracy, some voices trained on carefully chosen two-hour subsets did better, validating the hypothesis that better voices can be trained by identifying the best training utterances in a noisy corpus, even with less training data.
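The agreement criterion described above can be written as a set comparison: collect the voices whose WER beats the baseline according to humans, do the same for an ASR system, and check that the first set is contained in the second. The repeated-request check for wit.ai reduces to asking whether all returned transcriptions are identical. A sketch with hypothetical voice names and WER values (each dictionary maps a voice name to its mean WER):

```python
from typing import Dict, List, Set


def better_than_baseline(wer_by_voice: Dict[str, float], baseline: str) -> Set[str]:
    """Voices with a lower (better) WER than the baseline voice."""
    base = wer_by_voice[baseline]
    return {v for v, w in wer_by_voice.items() if v != baseline and w < base}


def asr_agrees_with_humans(human: Dict[str, float], asr: Dict[str, float],
                           baseline: str) -> bool:
    """True if every voice humans rated above baseline is also above baseline for the ASR."""
    return better_than_baseline(human, baseline) <= better_than_baseline(asr, baseline)


def is_deterministic(transcripts: List[str]) -> bool:
    """True if repeated requests for the same clip returned identical transcriptions."""
    return len(set(transcripts)) <= 1


human = {"baseline": 0.32, "fast": 0.25, "slow": 0.40}  # made-up values
asr = {"baseline": 0.20, "fast": 0.15, "slow": 0.18}    # made-up values
print(asr_agrees_with_humans(human, asr, "baseline"))
```

The check is deliberately one-directional, matching the criterion in the text: an ASR that also favors a voice humans did not favor still counts as agreeing.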

Crowdsourcing Universal Part-of-Speech Tags for Code Switching

Victor Soto and Julia Hirschberg

Almost all bilingual and multilingual people code-switch; that is, they naturally switch between languages when talking and writing. People code-switch for many reasons: one language might better convey the intended meaning, a speaker might lack technical vocabulary in one language, or a speaker might want to match a listener’s speech. Worldwide, code-switchers are thought to outnumber those who speak a single language. Even in the famously monolingual US, an estimated 40 million people code-switch between English and Spanish.

People code-switch, but speech and natural language interfaces for the most part do not; their underlying statistical models are almost always trained for a single language, forcing people to adjust their speaking style and refrain from code-switching. To eventually enable more natural, seamless interfaces in which people can freely switch between languages, Columbia researchers Victor Soto and Julia Hirschberg have begun building natural language models specifically for English-Spanish code-switching; the overall goal, however, is a paradigm that works for other language pairs as well.

Simply combining two monolingual models was not seen as a viable solution. “Code-switching follows certain patterns and syntactic rules,” says Soto, lead author of the paper. “These rules can’t be understood from monolingual settings. Inserting code-switching intelligence into a natural language understanding system—which is our eventual aim—requires that we start with a bilingual corpus annotated with high-quality labels.”

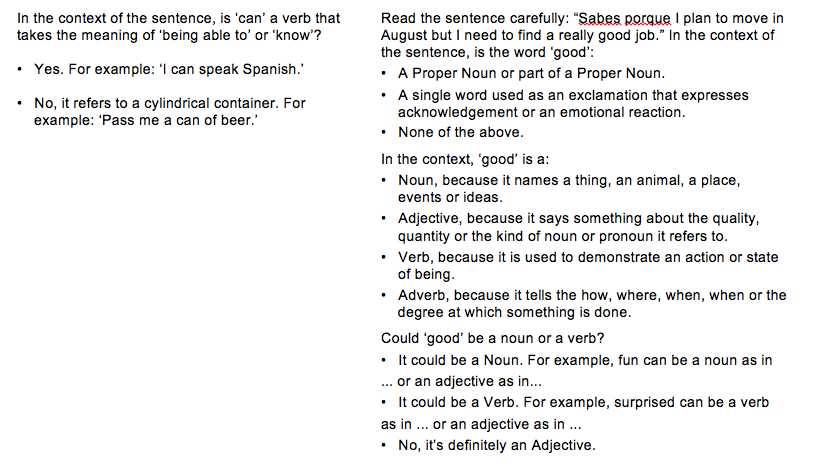

The necessary labels include part-of-speech (POS) tags that define a word’s function within a sentence (verb, noun, adjective, and other categories). Manually annotating a corpus is time-consuming and expensive, so Soto and Hirschberg want to build an automatic POS tagger. The first step in this process is collecting training data.

The researchers started with an existing English-Spanish code-switching corpus, the Miami Bangor Corpus, compiled from 35 hours of conversational speech containing 242,475 words, two-thirds in English and one-third in Spanish. While the corpus was large enough, its existing part-of-speech tags were often inaccurate or ambiguous, having been applied automatically by software that did not consider the multilingual context.

The researchers decided to redo the annotations using tags from the Universal Part-of-Speech tag set, which would make it easier to map to the tag sets used for other languages.

More than half the corpus (56%) consisted of words that are always labeled with the same part of speech; these words were labeled automatically. Frequent words that were hard to annotate (slightly less than 2% of the corpus) were tagged by a computational linguist (Soto).
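The three-way split can be pictured as a routing step over the corpus tokens. In this sketch, `lexicon` (the set of tags each word can take) and `hard_words` (frequent, hard-to-annotate words set aside for an expert) are hypothetical inputs; the source doesn’t say how the researchers determined either.

```python
from typing import Dict, List, Set


def route_tokens(tokens: List[str], lexicon: Dict[str, Set[str]],
                 hard_words: Set[str]) -> Dict[str, List[int]]:
    """Return token indices grouped by annotation route."""
    routes: Dict[str, List[int]] = {"automatic": [], "expert": [], "crowd": []}
    for i, word in enumerate(tokens):
        w = word.lower()
        if len(lexicon.get(w, set())) == 1:
            routes["automatic"].append(i)  # unambiguous: can take its single lexicon tag
        elif w in hard_words:
            routes["expert"].append(i)     # frequent and tricky: expert annotation
        else:
            routes["crowd"].append(i)      # everything else goes to crowd workers
    return routes


tokens = ["The", "casa", "like", "run"]
lexicon = {"the": {"DET"}, "casa": {"NOUN"},
           "like": {"VERB", "ADP", "ADJ"}, "run": {"VERB", "NOUN"}}
print(route_tokens(tokens, lexicon, hard_words={"like"}))
```

A word missing from the lexicon falls through to the crowd route, which is the conservative default when nothing is known about it.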

For the remaining 42% of the corpus, the researchers turned to crowdsourcing, a method used successfully in monolingual settings with untrained Mechanical Turk and Crowdflower workers. In this approach, tagging tasks are structured so that workers need no knowledge of grammar or linguistic rules. Workers either answer a single question or are led through a series of prompts that converge to a single tag; in both cases, instructions and examples help workers choose a tag.

To adapt this crowdsourcing approach to a bilingual setting, the Columbia researchers required workers to be bilingual, and they wrote separate prompts for the Spanish and English portions of the corpus, taking into account syntactic properties of each language (such as prompting for the infinitival verb form in Spanish, or the gerund form in English, to disambiguate between verbs and nouns, among other cases).

Each crowdsourced word received three judgments, two from crowd workers and one carried over from the Miami Bangor corpus, and a majority vote decided the tag. The vote counted judgments only from workers who achieved a tagging accuracy of at least 85% against a hidden test set of tags. At least two of the three votes agreed 95 to 99% of the time.

Over the entire corpus, Soto and Hirschberg estimate an accuracy of between 92% and 94%, close to what a trained linguist can do.
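A prompt sequence that converges to a single tag behaves like a small decision tree: each yes/no answer selects the next prompt until a tag is reached. The prompts below are hypothetical English examples written for illustration (the gerund test echoes the kind mentioned above), not the wording the researchers used.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Prompt:
    question: str
    yes: "Node"
    no: "Node"


Node = Union[Prompt, str]  # a plain string is a leaf: the final tag


def ask(node: Node, answer: Callable[[str], bool]) -> str:
    """Walk the tree, asking one question per step, until a tag is reached."""
    while isinstance(node, Prompt):
        node = node.yes if answer(node.question) else node.no
    return node


# Hypothetical prompts for an English word that could be a verb or a noun.
VERB_OR_NOUN = Prompt(
    question="Can the word be changed to its -ing form and the sentence still "
             "make sense (e.g. 'I walk' -> 'I am walking')?",
    yes="VERB",
    no=Prompt(question="Does the word name a thing, so that 'a' or 'the' "
                       "could come right before it?",
              yes="NOUN",
              no="X"),  # X: the catch-all "other" tag
)
```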
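The aggregation rule (a majority vote over two crowd judgments plus the corpus’s original tag, with low-accuracy workers screened out) might look like the sketch below. Returning `None` when no tag gets two votes is an assumption; the source doesn’t say how such cases were resolved.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def worker_accuracy(worker_tags: Dict[int, str], gold: Dict[int, str]) -> float:
    """Fraction of hidden test tokens (keyed by index) the worker tagged correctly."""
    scored = [i for i in gold if i in worker_tags]
    if not scored:
        return 0.0
    return sum(worker_tags[i] == gold[i] for i in scored) / len(scored)


def vote(crowd: List[Tuple[str, str]], corpus_tag: str,
         accuracy: Dict[str, float], threshold: float = 0.85) -> Optional[str]:
    """crowd: (worker_id, tag) judgments for one token; corpus_tag: the tag
    carried over from the original corpus annotation."""
    votes = [tag for worker, tag in crowd if accuracy.get(worker, 0.0) >= threshold]
    votes.append(corpus_tag)
    tag, count = Counter(votes).most_common(1)[0]
    return tag if count >= 2 else None  # no majority: left unresolved here
```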

With an accurately labeled corpus, the researchers’ next step is building an automatic part-of-speech tagging model for code-switching between English and Spanish.

Comparing Approaches for Automatic Question Identification

Angel Maredia, Kara Schechtman, Sarah Ita Levitan, and Julia Hirschberg

Earlier in August, Columbia researchers presented this paper at *SEM 2017: The Sixth Joint Conference on Lexical and Computational Semantics, held in Vancouver, Canada.

Interview with lead author Angel Maredia.

Why is it important to automatically identify questions?

Many applications follow a question-and-answer format in which people have the flexibility to speak naturally and choose their own words, but within the confines of the topic set by the question. It’s often important to know how much speech goes into answering a particular question, or when there is a change in topic. Our goal with this project was to develop automatic approaches to organizing and analyzing a large corpus of spontaneous speech at the topic level.

Your paper describes labeling two deceptive speech corpora, the Columbia X-Cultural Deception (CXD) corpus and the Columbia-SRI-Colorado (CSC) corpus. Why did you choose these two corpora to label?

Both corpora are part of our larger effort to identify deception in speech, something we’ve been working on here at Columbia for a number of years. The CXD corpus contains 122 hours of speech in the form of dialogs between pairs of subjects who played a lying game with each other. They took turns playing the roles of interviewer and interviewee and asked each other questions from a 24-item biographical questionnaire that they had filled out, lying for a random half of the questions. Given this setup, we wanted to identify which question a given interviewee was answering.

With 122 hours of speech, it’s just not possible to do this manually, as we had done with the smaller CSC corpus. We needed an automatic approach, so we compared three different techniques to see which was best at identifying the question a given interviewee was answering.

While we needed topic labels for the CXD corpus, we chose the CSC corpus because it had similar, hand-labeled topical distinctions, which we could use to verify that the approach that worked on the CXD corpus was viable.

How do the three methods you tried—ROUGE, word embeddings, document embeddings—differ from one another?

We compared these methods because they capture similarity of language in different ways. ROUGE, a software package for evaluating automatic summarization, was selected to compare texts by their n-gram overlap. We selected the word embeddings and document embeddings approaches because they account for semantic similarity. The word embeddings approach used the Word2Vec model, which represents words as vectors such that semantically similar words have a higher cosine similarity between their vectors. The document embeddings approach used the Doc2Vec model, which we hoped would capture additional context.
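The difference between n-gram matching and embedding similarity shows up on texts that share meaning but not words. The sketch below scores an interviewee turn against each question in two ways: ROUGE-1 recall (unigram overlap, the simplest member of the ROUGE family) and the cosine similarity of averaged word vectors. The tiny two-dimensional vectors are made up, and averaging word vectors is one common way to compare texts with Word2Vec-style embeddings, assumed here rather than taken from the paper.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Optional, Sequence

Vectors = Dict[str, List[float]]


def rouge_n_recall(reference: str, candidate: str, n: int = 1) -> float:
    """Share of the reference's n-grams that also occur in the candidate (clipped counts)."""
    def grams(text: str) -> Counter:
        toks = text.lower().split()
        return Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))
    ref, cand = grams(reference), grams(candidate)
    total = sum(ref.values())
    return sum((ref & cand).values()) / total if total else 0.0


def mean_vector(text: str, vectors: Vectors) -> Optional[List[float]]:
    """Average the vectors of the words that have embeddings."""
    known = [vectors[t] for t in text.lower().split() if t in vectors]
    if not known:
        return None
    return [sum(col) / len(known) for col in zip(*known)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def embedding_similarity(a: str, b: str, vectors: Vectors) -> float:
    va, vb = mean_vector(a, vectors), mean_vector(b, vectors)
    return cosine(va, vb) if va is not None and vb is not None else 0.0


def best_question(turn: str, questions: List[str],
                  score: Callable[[str, str], float]) -> int:
    """Index of the question scoring highest against the turn (first one on ties)."""
    return max(range(len(questions)), key=lambda i: score(questions[i], turn))


# Made-up 2-D "embeddings": born/birthplace point one way, job/work another.
TOY_VECTORS: Vectors = {"born": [1.0, 0.0], "birthplace": [0.9, 0.1],
                        "job": [0.0, 1.0], "work": [0.1, 0.9]}
questions = ["your job", "your birthplace"]
turn = "i was born there"
print(best_question(turn, questions, rouge_n_recall))
print(best_question(turn, questions,
                    lambda q, t: embedding_similarity(q, t, TOY_VECTORS)))
```

Here ROUGE finds no shared words with either question and falls back to the first one, while the embedding score picks the birthplace question because “born” and “birthplace” have nearby vectors.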

The word embedding approach had the highest accuracy. Are these the results you expected?

It makes sense that the word embeddings approach would have higher accuracy because it also accounts for true negatives, and in any given interviewer turn, there are more true negatives than true positives.
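The role of true negatives is a general property of accuracy: it credits correct “no” decisions as well as correct “yes” decisions, so when negatives outnumber positives it can sit well above precision and recall. A toy calculation with made-up counts, say 24 yes/no decisions of which only 2 should be “yes”:

```python
def confusion_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy counts true negatives; precision and recall ignore them."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical counts: 24 decisions, only 2 of which should be "yes".
m = confusion_metrics(tp=1, fp=1, fn=1, tn=21)
print({k: round(v, 3) for k, v in m.items()})
```

Accuracy comes out at about 0.92 even though only half of the relevant labels are found, because 21 of the 22 correct decisions are true negatives.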

While we weren’t surprised that word embeddings was the best method, we were nonetheless amazed at how well it worked. It matched very closely with the manually applied labels.

Your work was on labeling deceptive speech corpora. Would you expect similar results on other types of corpora?

Yes. This work applies to any type of speech that has a conversational structure. We happened to use deceptive speech corpora, but the method is unsupervised and should generalize well to other domains.

Posted August 31, 2017

– Linda Crane