An interview with Sarah Ita Levitan: When changes in speech can indicate lies

Humans are not good at detecting when someone is lying, doing little better than chance level. More reliable are computational analyses of changes in speech patterns. Julia Hirschberg with her PhD student Frank Enos, among the first to study deception in speech computationally, previously developed algorithms that can identify deceptive speech with 70% accuracy. Further improvements may be possible by taking into account individual differences in people’s speech behaviors when they being deceptive (for instance, some may raise their voice while others lower it; some laugh more, others less). Sarah Ita Levitan, a PhD student in Hirschberg’s speech lab, is leading a series of experiments to correlate these differences with gender, culture, and personality. At the heart of these experiments is a new corpus of 122 hours of deceptive and non-deceptive speech, by far the largest such corpus ever collected. In this interview, Levitan who will be presenting results of a deceptive speech challenge at this week’s Interspeech, summarizes new findings made possible with this new corpus.

Why do people have such a difficult time detecting lies?

People rely on their intuition, but intuition is often wrong. Hesitations or saying “um” or “ah” are often interpreted as a sign of deception, but previous studies have found that these filled pauses may instead be a sign of truthful speech.

That’s why it’s important to take a quantitative approach and do in-depth statistical analyses of deceptive and nondeceptive speech.

Can you briefly describe the nature of your experiments?

In Identifying Individual Differences in Gender, Ethnicity, and Personality from Dialogue for Deception Detection, we’re building on previous experiments and studies that show people exhibit different deceptive behaviors when they speak. A change in pitch or more laughter than normal might indicate deceptive speech in some people, but in other people these different behaviors might indicate truthful speech.

We want to understand what accounts for these individual differences. Again, from previous studies we know that deceptive speech is very individualized and that gender, culture, and personality differences play a role. Our experiments are designed to more narrowly correlate individual factors with speech-related deceptive behaviors. For instance, would female, Mandarin-native speakers who are highly extroverted tend to do one thing when they lie, while male, native English-speaking, introverted speakers do something different?

Establishing strong correlations between certain deceptive speech behaviors and gender, cultural, or personality differences will help us build new classifiers to automatically detect deceptive speech.

How will your experiments differ from previous ones that also examine individual differences?

We will have more data and more specific information about individual speakers. This new corpus we’ve created contains 122 hours of deceptive and nondeceptive speech from 344 speakers, half of whom are native English speakers, and half native Mandarin speakers, though speaking in English.

This is a huge corpus; our previous work was based upon a corpus of about 15 hours of interviews. Unlike this previous one, the new corpus is gender-balanced and includes cultural information about each participant as well as personality traits gathered by administering the NEO-FFI personality test.

One thing new we did was to ask each study participant to speak truthfully for three or four minutes answering open-ended questions (“what do you like best/worst about living in NYC”). While the initial motivation was to have a baseline of truthful speech to compare with deceptive speech, these short snippets of speech actually told us a lot about an individual, both their gender and native language as well as something about their personality.

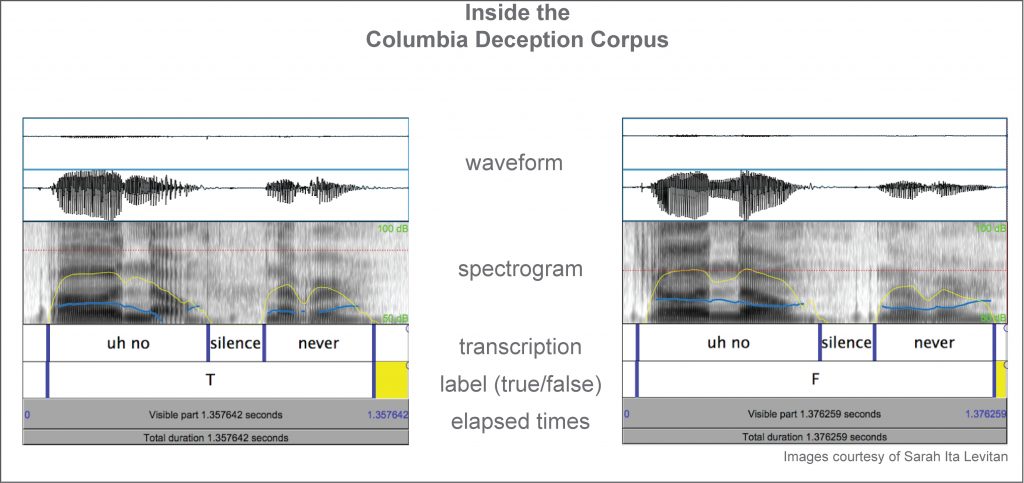

Pulling together such a large corpus was a major undertaking. It required transcribing 122 hours of speech, which we did using Amazon Mechanical Turk, and meticulously aligning the transcriptions with the speech. The effort involved a great many people, including collaborators from CUNY, interns, and undergraduates who got an early chance to participate in research.

|

Truthful

|

Deceptive

|

How did you collect deceptive speech?

We had participants play a lying game. After collecting the baseline speech and administering the NEO-FFI test and a biographical questionnaire, we paired off participants, who took turns interviewing one another and being interviewed. For the interviewer, the goal was to judge truth or lie for each interviewee statement whereas the goal of the interviewee was to lie convincingly. As motivation, each participant earned $1 for every lie that was believed but penalized $1 when a lie failed to convince the interviewer. When asking questions, a participant earned $1 for correctly identifying a lie and forfeited $1 when accepting a lie as truth. Participants faced each other in a sound booth but were separated by a curtain, forcing participants to rely on voice alone to decide whether a statement was true or false.

What were your results using this new corpus?

Overall we were able to detect deception with about 66% accuracy using machine-learning classifiers. This level of accuracy is achieved using acoustic-prosodic features—such as pitch and other voice characteristics like loudness—and incorporating the information about gender, native language, and personality that we have so far extracted. We are not yet using lexical features such as word choice or filled pauses, which should further improve performance.

Just as important as overall accuracy, we’re finding individual differences. We found that people who are better at detecting lies also do better at deceiving others; our gender-balanced corpus gives strong evidence that this correlation is true for women and particularly true for English-native women. People’s confidence in their ability to detect deception correlates negatively with their actual ability to detect deception, possibly because interviewers less confident in their judgments ask more follow-up questions.

Personality differences became apparent also. People who scored high on extraversion and conscientiousness were worse at deceiving. The ability to detect deception was negatively correlated with neuroticism in women but not in men.

The baseline speech, from which we automatically extracted features, was by itself enough to accurately predict gender. Using the f1 metric—which accounts both for accurately predicting gender while attaining a low number of false positives—we predicted gender with a measure of .96 on the basis of both pitch and word choice. The same features enabled us to predict whether one’s native language is English or Mandarin—here with an f measure of .78. In predicting the five personality dimensions measured by the FFI test, we achieved f measures ranging from .36 to .56. We could also use this speech baseline to predict, at 65% accuracy, who could successfully detect lies. In each case, predictions are significantly higher than the baseline.

Being able to automatically extract personality features is especially important because it points to the possibility of someday deploying a system in the real world where it’s unlikely you will have personality scores of people.

But yet the overall 66% result is less than the 70% accuracy already achieved by those within your lab in the previous deception study.

We’re just beginning to explore this new corpus and still have yet to extract all the lexical features; once we do, we can use lexical features in addition to the acoustic features our classification results now rely on.

We also plan to make better use of our personality scores and to explore additional machine learning approaches such as neural nets.

It’s a huge corpus, and it’s going to take some time to learn to use all the information it contains.

This week at Interspeech, you will be presenting results of a deception-detection challenge you entered. Did you apply lessons learned from this new corpus?

For the competition, we were given a corpus very different from our own. This challenge corpus was created by having students perform a task and then lie about it; students speak for much shorter turns, the vocabulary is more restricted, and the recording conditions are different. But still we wanted to see if we could train a model on our corpus and test on this challenge corpus. We found that we could, after first automatically selecting about 500 turns from our corpus that were similar to turns in the challenge corpus.

Using acoustic features, though not lexical ones, we achieved almost the same level of accuracy in detecting deception as we did when training and testing on the challenge corpus. Which was great because it showed that acoustic features do generalize to different domains and further points to the possibility of deception detection applications outside the laboratory.

How soon before we see speech used to detect deception in interrogations or to determine guilt?

We are certainly not at the stage of determining truth or lie in real world conditions based upon speech alone. However, information from speech analysis can be combined with other information such as facial expression, body gestures, and other behaviors to help interviewers recognize deceptive cues that they might not otherwise pay attention to. Since humans often rely upon unreliable cues to deception, speech analysis can help alert interviewers to better indicators of deception.

Of all technologies being developed to detect deception, speech is the most accurate, and it has many other benefits; it doesn’t require cumbersome equipment, it is less intrusive, and it can be used after the fact. And as our experiments show, we can continue to improve the accuracy of using speech to detect deception.

Posted: 9/6/2016